- Perform operations using SDK for cosmosdb (read/write)

- Configure and work with RUs (request units)

- Consistency levels :

- Be familiar with how

- When to use which replication level

  - Strong: writes are applied to all replicas immediately.
  - Bounded staleness: set the maximum amount of time a read may lag behind the moment the write occurs.
  - Session (DEFAULT): all reads/writes are consistent within the same session (that is, when they share the same session token); apps that don't share the session get eventual consistency.
  - Consistent prefix: allows delays when replicating, but ensures writes happen in all replicas in the exact same order (no out-of-order writes).
  - Eventual: writes the data to all replicas "eventually"; doesn't guarantee the order.

- Costs:

| Level | Costs |
| --- | --- |
| Eventual | Lowest |
| Strong | Highest |

- Use case scenarios:

| Level | Use case |
| --- | --- |
| Eventual | Likes within an application (so you don't worry about the order of the likes) |
| Session | E-commerce, social media apps and similar with persistent user connection |
| Bounded Staleness | Near real time apps (stock exchange etc) |
| Strong | Financial transactions, Scheduling, Forecasting workloads |
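To make the ordering of the levels easier to remember, here is a minimal sketch that models them as a plain Java enum, declared from the strongest to the weakest guarantee. `ConsistencyChoice` and its methods are hypothetical names for illustration only; this is not the Cosmos DB SDK's own type, and the use cases are simply the ones listed in the table above.

```java
/** Illustrative model only; NOT the Cosmos DB SDK's consistency type. */
enum ConsistencyChoice {
    // Declared from the strongest to the weakest guarantee.
    STRONG("Financial transactions, scheduling, forecasting workloads"),
    BOUNDED_STALENESS("Near real time apps (stock exchange etc)"),
    SESSION("E-commerce, social media apps with persistent user connection"),
    CONSISTENT_PREFIX("(no use case listed in these notes)"),
    EVENTUAL("Likes within an application");

    private final String useCase;

    ConsistencyChoice(String useCase) {
        this.useCase = useCase;
    }

    String useCase() {
        return useCase;
    }

    /** True when this level guarantees at least as much as the other one. */
    boolean isAtLeastAsStrongAs(ConsistencyChoice other) {
        return this.ordinal() <= other.ordinal();
    }
}

final class ConsistencyDemo {
    public static void main(String[] args) {
        // Print every level with the use case noted for it.
        for (ConsistencyChoice level : ConsistencyChoice.values()) {
            System.out.println(level + " -> " + level.useCase());
        }
    }
}
```

Stronger levels cost more (see the cost table), so a reasonable habit is to pick the weakest level that still satisfies the use case.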

- Change feed: for processing the changed data from external applications

- Enabled by DEFAULT

- DELETE operations are not captured

- You can capture deletes with a soft-delete marker (flag the item as deleted instead of removing it)
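The soft-delete idea can be sketched in plain Java as follows. This is only an illustration of the pattern, not SDK code: the `Change` class and its `deleted` flag are hypothetical, and a real change feed consumer would receive documents from Cosmos DB rather than from an in-memory list.

```java
import java.util.ArrayList;
import java.util.List;

final class SoftDeleteDemo {

    /** Hypothetical changed document; "deleted" is the soft-delete marker. */
    static final class Change {
        final String id;
        final String name;
        final boolean deleted;

        Change(String id, String name, boolean deleted) {
            this.id = id;
            this.name = name;
            this.deleted = deleted;
        }
    }

    /** Turns a batch of changed documents into the operations a consumer should apply. */
    static List<String> describeChanges(List<Change> changes) {
        List<String> operations = new ArrayList<>();
        for (Change change : changes) {
            // A flagged item arrives as an ordinary update, so the consumer can see the delete.
            operations.add((change.deleted ? "DELETE " : "UPSERT ") + change.id);
        }
        return operations;
    }

    public static void main(String[] args) {
        List<Change> batch = List.of(
                new Change("1", "Ada", false),
                new Change("2", "Bob", true));
        describeChanges(batch).forEach(System.out::println);
    }
}
```

Because the delete is written as an update, it shows up in the feed like any other change; the writer is then responsible for physically removing the flagged item later.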

- Set / Retrieve properties and metadata for blob resources using REST

- Header format: x-ms-meta-name:string-value

- Format URI for retrieving properties and metadata:

  - Container: GET/HEAD https://myaccount.blob.core.windows.net/mycontainer?restype=container
  - Blob: GET/HEAD https://myaccount.blob.core.windows.net/mycontainer/myblob?comp=metadata

- Format URI for different containers:

- Common properties

- E-tag

- Last-modified
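As a small aid for memorising the URI and header formats, the sketch below builds them with plain string concatenation. The class and method names are hypothetical, the account, container and blob names are the placeholders used above, and no request is actually sent (a real call would also need authorization headers or a SAS token).

```java
import java.net.URI;

final class BlobRestUris {

    private static String base(String account) {
        return "https://" + account + ".blob.core.windows.net/";
    }

    /** URI for reading container properties (GET or HEAD). */
    static URI containerProperties(String account, String container) {
        return URI.create(base(account) + container + "?restype=container");
    }

    /** URI for reading or setting container metadata. */
    static URI containerMetadata(String account, String container) {
        return URI.create(base(account) + container + "?restype=container&comp=metadata");
    }

    /** URI for reading or setting blob metadata. */
    static URI blobMetadata(String account, String container, String blob) {
        return URI.create(base(account) + container + "/" + blob + "?comp=metadata");
    }

    /** Metadata travels as headers named x-ms-meta-<name>. */
    static String metadataHeader(String name) {
        return "x-ms-meta-" + name;
    }

    public static void main(String[] args) {
        System.out.println(containerProperties("myaccount", "mycontainer"));
        System.out.println(blobMetadata("myaccount", "mycontainer", "myblob"));
        System.out.println(metadataHeader("category") + ":images");
    }
}
```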

- Client library for Java

- Know how to read/write data using classes

| Class | .NET equivalent | Description |
| --- | --- | --- |
| BlobServiceClient | BlobServiceClient | Manipulate storage resources and blob containers |
| BlobServiceClientBuilder | BlobClientOptions | Configuration and instantiation of BlobServiceClient objects |
| BlobContainerClient | BlobContainerClient | Manipulate containers and their blobs |
| BlobClient | BlobClient | Manipulate storage blobs |
| BlobItem | ? | Object returned for individual blobs from listBlobs |

flowchart LR

Account[Account - John-Naber] --> Container[Container - images] --> Blob[Blob - image.jpeg]

- Hot: frequently accessed

- Cool: less frequently accessed

- Archive: rarely accessed

- Policies to manage:

- How to transfer from one storage to another

- Delete when needed (⚠️ you need to activate last access time tracking)

- Define rules to be run per day at the storage account level

- Apply rules to containers or subset of blobs (using prefixes as filters)

- Policy structure: `name` (*), `enabled`, `type` (*), `definition` (*)

- Rules: `filter-set` (which blobs are affected) and `action-set` (what actions to take)

⚠️ A policy MUST contain at least one rule.

- Policy example:

{ "rules": [ { "name": "rule1", "enabled": true, "type": "Lifecycle", "definition": {...} } ] }
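To illustrate how a rule's filter-set and action-set work together, here is a minimal sketch in plain Java. It is a simplified model under stated assumptions, not how Azure evaluates policies internally: the `Rule` class is hypothetical, it supports only a prefix filter and a single "days since last modification" condition, and the example action name `tierToCool` is used purely as a label.

```java
import java.util.Optional;

final class LifecycleRuleSketch {

    /** Hypothetical, simplified rule: one prefix filter plus one age-based action. */
    static final class Rule {
        final String name;
        final boolean enabled;
        final String prefix;               // filter-set: which blobs are affected
        final int daysAfterModification;   // action-set condition
        final String action;               // action-set: what to do, e.g. "tierToCool"

        Rule(String name, boolean enabled, String prefix, int daysAfterModification, String action) {
            this.name = name;
            this.enabled = enabled;
            this.prefix = prefix;
            this.daysAfterModification = daysAfterModification;
            this.action = action;
        }
    }

    /** Returns the action to take for a blob, or empty when the rule does not apply. */
    static Optional<String> actionFor(Rule rule, String blobPath, int daysSinceModification) {
        boolean applies = rule.enabled
                && blobPath.startsWith(rule.prefix)
                && daysSinceModification > rule.daysAfterModification;
        return applies ? Optional.of(rule.action) : Optional.empty();
    }

    public static void main(String[] args) {
        Rule rule = new Rule("rule1", true, "images/", 30, "tierToCool");
        System.out.println(actionFor(rule, "images/image.jpeg", 45)); // rule applies
        System.out.println(actionFor(rule, "docs/report.pdf", 45));   // prefix does not match
    }
}
```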

- How to release a static website

- Configure Capabilities > Static website, select Enabled

- Specify index and error documents

- In the `$web` container, click the Upload icon to open the Upload blob pane

- Select files and add files

- Enable metrics with Metrics under the Monitor section

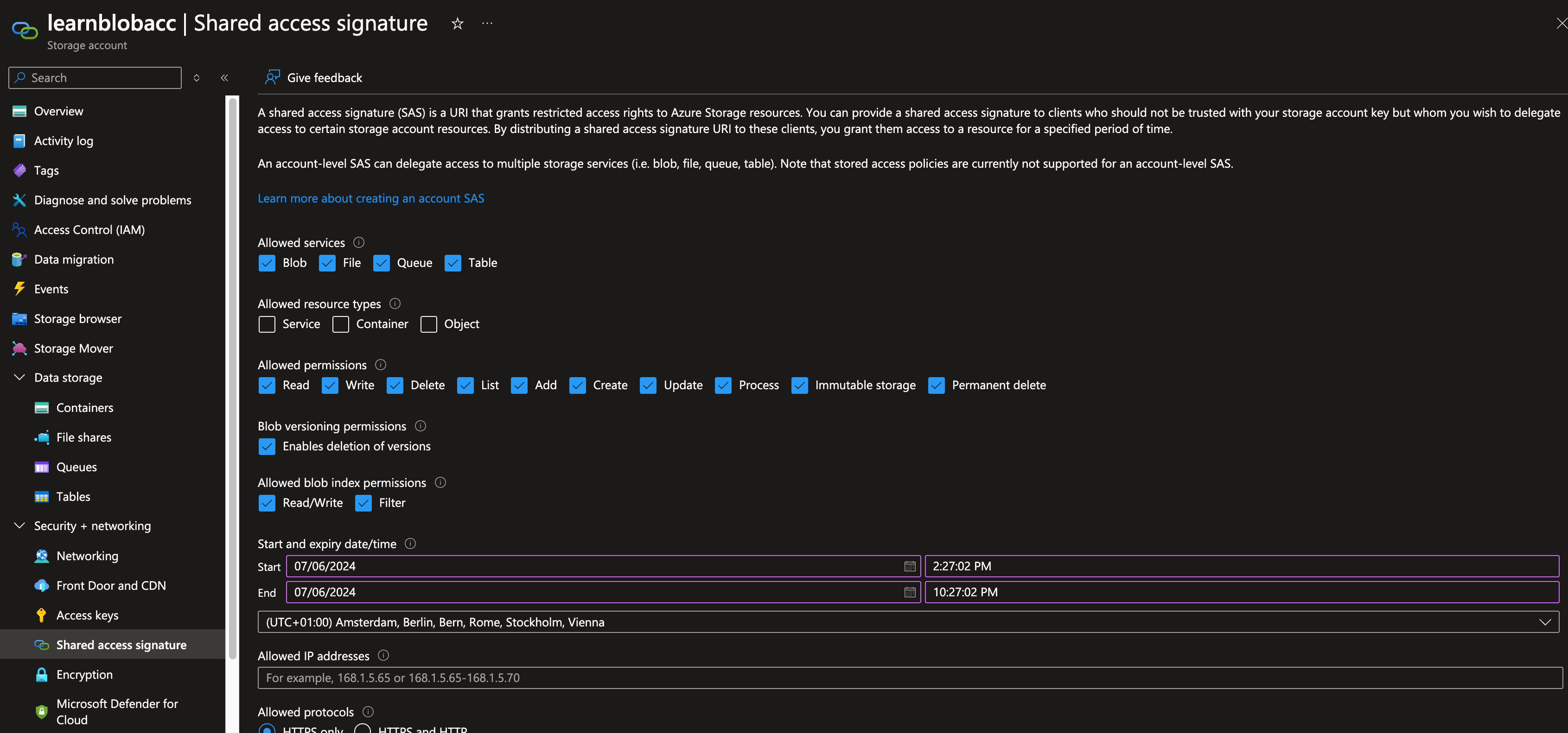

- Go to storage account > Security + Networking > Shared access signature

- You can also set start and expiry dates for the signatures

- You can either take the SAS token and build your own connection string, or use the ready-made connection string that is shown as soon as the SAS token is generated.
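If you build the connection string yourself, it can be assembled roughly as in the sketch below. The helper name is hypothetical and the token in `main` is a made-up placeholder; the sketch assumes the blob endpoint follows the usual `https://<account>.blob.core.windows.net/` pattern and strips a leading `?` from the token if one is present.

```java
final class SasConnectionString {

    /** Builds a blob connection string from an account name and a SAS token. */
    static String forBlob(String account, String sasToken) {
        // Some tools show the token with a leading '?', which must not end up in the connection string.
        String token = sasToken.startsWith("?") ? sasToken.substring(1) : sasToken;
        return "BlobEndpoint=https://" + account + ".blob.core.windows.net/;"
                + "SharedAccessSignature=" + token;
    }

    public static void main(String[] args) {
        // Placeholder token; a real one is generated in the portal.
        System.out.println(forBlob("myaccount", "?sv=PLACEHOLDER&sig=PLACEHOLDER"));
    }
}
```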

⚠️ This project assumes you have created a resource group.

- Go to https://portal.azure.com

- Navigate to Resource Groups on the main panel or Search resources > Resource group

- Then click on Create and give it a name (REQUIRED); you can leave the rest empty

⚠️ This project assumes you have created a storage account (can be empty).

- Go to https://portal.azure.com

- Navigate to the resource group that you created above.

- Click on Create at the top left.

- This will navigate you to the marketplace, where you can search for Storage Account

⚠️ Make sure the creation module has selected your resource group correctly; if not, select the one you created above.

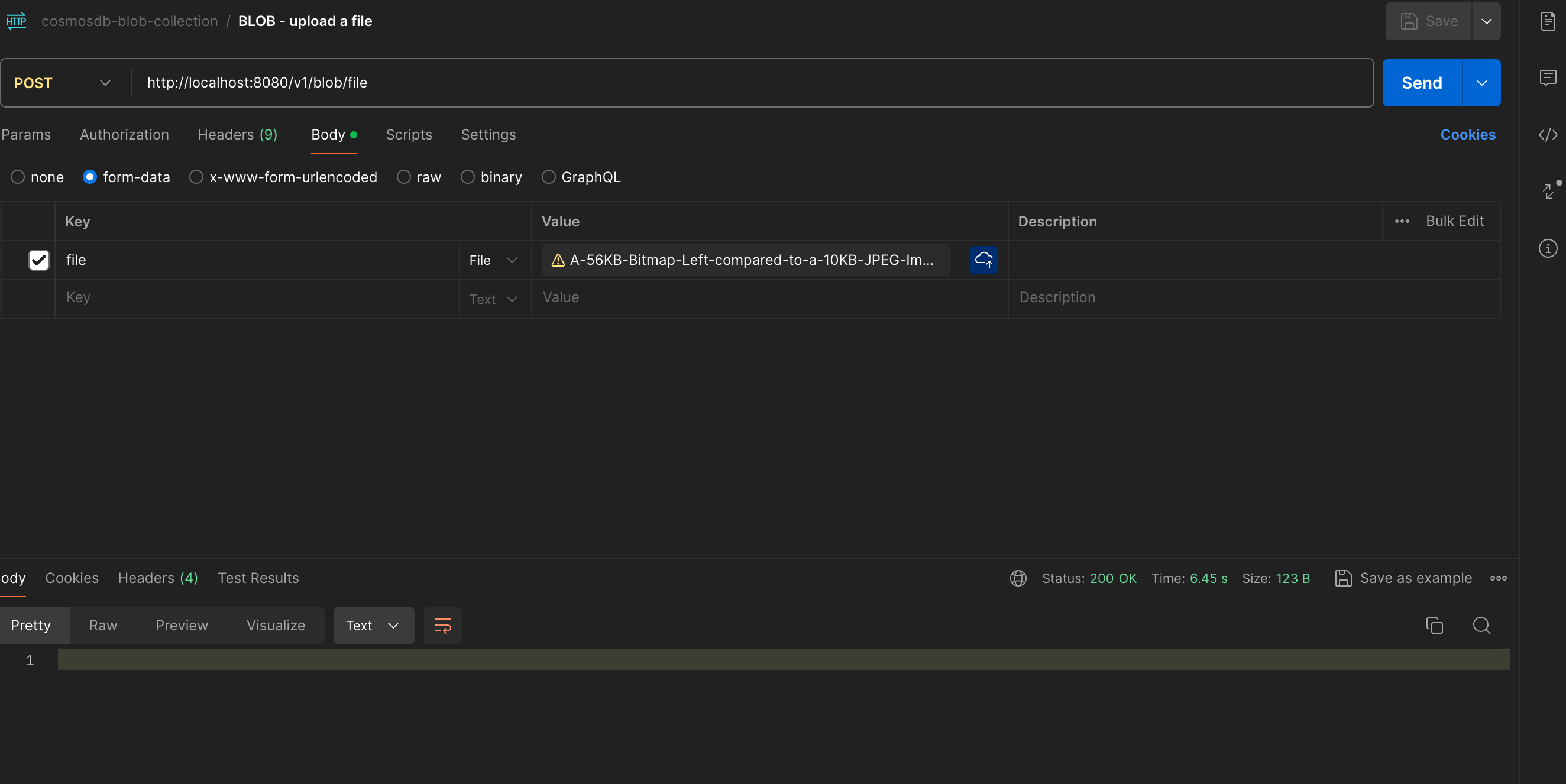

⚠️ This project assumes you have created a shared access signature from the storage account you have created. See: Creating SAS Token

- Open up the cosmosdb-blob-collection.json in Postman.

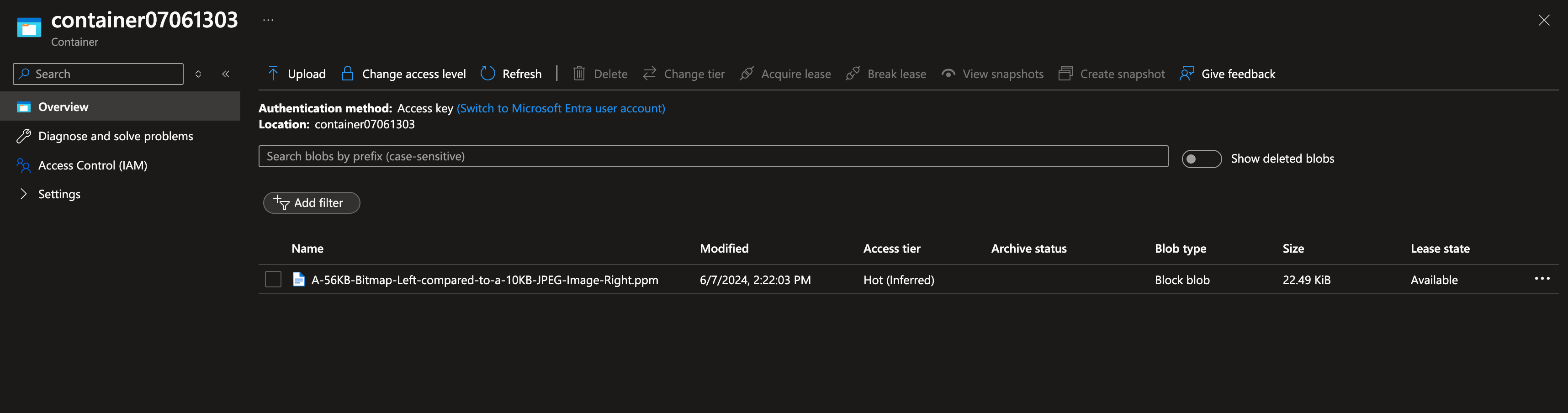

⚠️ First fire the `BLOB - create container` request; this will create a container for you, and it is a must.

⚠️ Make sure to select a proper file, then upload the image using the `BLOB - upload a file` request.

⚠️ You will be able to see the file, as I did, in the Azure blob container (that you created).

- https://parveensingh.com/cosmosdb-consistency-levels/

- https://learn.microsoft.com/en-us/azure/cosmos-db/use-cases

- Make sure to create your own application.properties from application-template.properties and put it under `src/main/resources`

- Make sure to update the property `com.cosmos.connectionStringWithSA`

- If you would like to upload images greater than 1MB, then you need to update the following properties:

  - `spring.servlet.multipart.max-file-size`: the maximum file size in the backend
  - `spring.servlet.multipart.max-request-size`: accepted request size


- Not required for data under 10GB

- It is a placement handle that tells Cosmos DB how to write and find the data

- We can write queries without a partition key, with a `fanout query`

  - `fanout query`: you change your statements each time to filter the results (for example, one for a customer's age, then the customer's city). The database first goes through a more common node, but each time the filter parameter changes, it starts to look at each and every node!

- Since fanout queries don't scale, we will have bottlenecks or hit the limit on the maximum number of queries per single node

- We can scale `READ`, `WRITE` and `STORAGE`:

  - `STORAGE`: use X number of nodes, and use fanout queries to retrieve the data.
    - (-) You will have `READ` bottlenecks (as we will ask each node where to find our data!)
  - `READ`: to scale, replicate the data on each of these nodes.
    - (-) You will have `WRITE` bottlenecks (as we will need to write to each and every node!).
  - `WRITE`: the only way to scale writes is to `SHARD` or `PARTITION` the data.

- To scale more easily in these kinds of scenarios, it is suggested to have a `partition_key`.

- Best practices to choose a `partition_key`:

  - Understand the ratio of reads and writes:
    - if your application is reading more than writing to the database, optimize for frequency.
    - if your application is writing more than reading from the database, load balance.
  - Example of a bad partition key:
    - Suppose you have an IoT system and 10000 requests come in to your database at the same time.
      - If you choose `creation_timestamp` as the partition key, you end up with write bottlenecks: the timestamps are very similar, so you keep writing to the same node, and the key is not meaningful for lookups.
      - If you choose `device_id` as the partition key, the database knows exactly which node to put the data on, so reads and writes will be easier.
  - Look at the dataset and try to find the most commonly used field (it shouldn't be a unique id or a non-changing field)
    - In the IoT example, check the `where` clause of the most frequently run queries; if `device_id` shows up there, it is a good partition key for your use case.
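The IoT example can be illustrated with a toy simulation in plain Java. This is a deliberately simplified sketch: the partition count is an arbitrary assumption, and `String.hashCode()` stands in for the real placement logic, which Cosmos DB handles internally and differently. The point is only to show how many partitions absorb a burst of writes for each choice of key.

```java
import java.util.HashSet;
import java.util.Set;
import java.util.function.IntFunction;

final class PartitionKeySketch {

    /** Arbitrary number of partitions, chosen for the illustration only. */
    static final int PARTITIONS = 8;

    /** Toy placement function: hash the partition key value onto a partition. */
    static int partitionFor(String partitionKeyValue) {
        return Math.floorMod(partitionKeyValue.hashCode(), PARTITIONS);
    }

    /** Counts how many distinct partitions receive writes during a burst of events. */
    static int partitionsHit(int events, IntFunction<String> keyOfEvent) {
        Set<Integer> hit = new HashSet<>();
        for (int event = 0; event < events; event++) {
            hit.add(partitionFor(keyOfEvent.apply(event)));
        }
        return hit.size();
    }

    public static void main(String[] args) {
        // 10000 events arriving at the same moment share one creation_timestamp value.
        int byTimestamp = partitionsHit(10_000, event -> "2024-01-01T00:00:00Z");
        // The same events spread over 100 hypothetical devices.
        int byDevice = partitionsHit(10_000, event -> "device-" + (event % 100));

        System.out.println("partitions hit with creation_timestamp: " + byTimestamp);
        System.out.println("partitions hit with device_id: " + byDevice);
    }
}
```

With the timestamp as key, the whole burst lands on a single partition, which is the write bottleneck described above; with the device id, the writes are spread over several partitions.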

⚠️ To be honest, I wasn't able to see the data in Data Explorer. However, I was able to see the data when I ran the Postman collection locally.

⚠️ This project assumes you have created a resource group.

⚠️ This project assumes you have created a Cosmos DB table database.

- Go to your resource group and select Create

- In the marketplace, search for `cosmos db table` and create the database with any given account name.

- Once you create entities using Postman, the database will be created automatically.

⚠️ You're expected to provide the following properties in your application.properties file.

spring.cloud.azure.cosmos.endpoint=https://contosoaccounttest.documents.azure.com:443/

spring.cloud.azure.cosmos.key=your-cosmosdb-primary-key

spring.cloud.azure.cosmos.database=contosoaccounttest

- Activate the Cosmos DB repositories with `@EnableCosmosRepositories`

- Create a repository with `CosmosRepository` (see also `ReactiveCosmosRepository` for reactive operations).

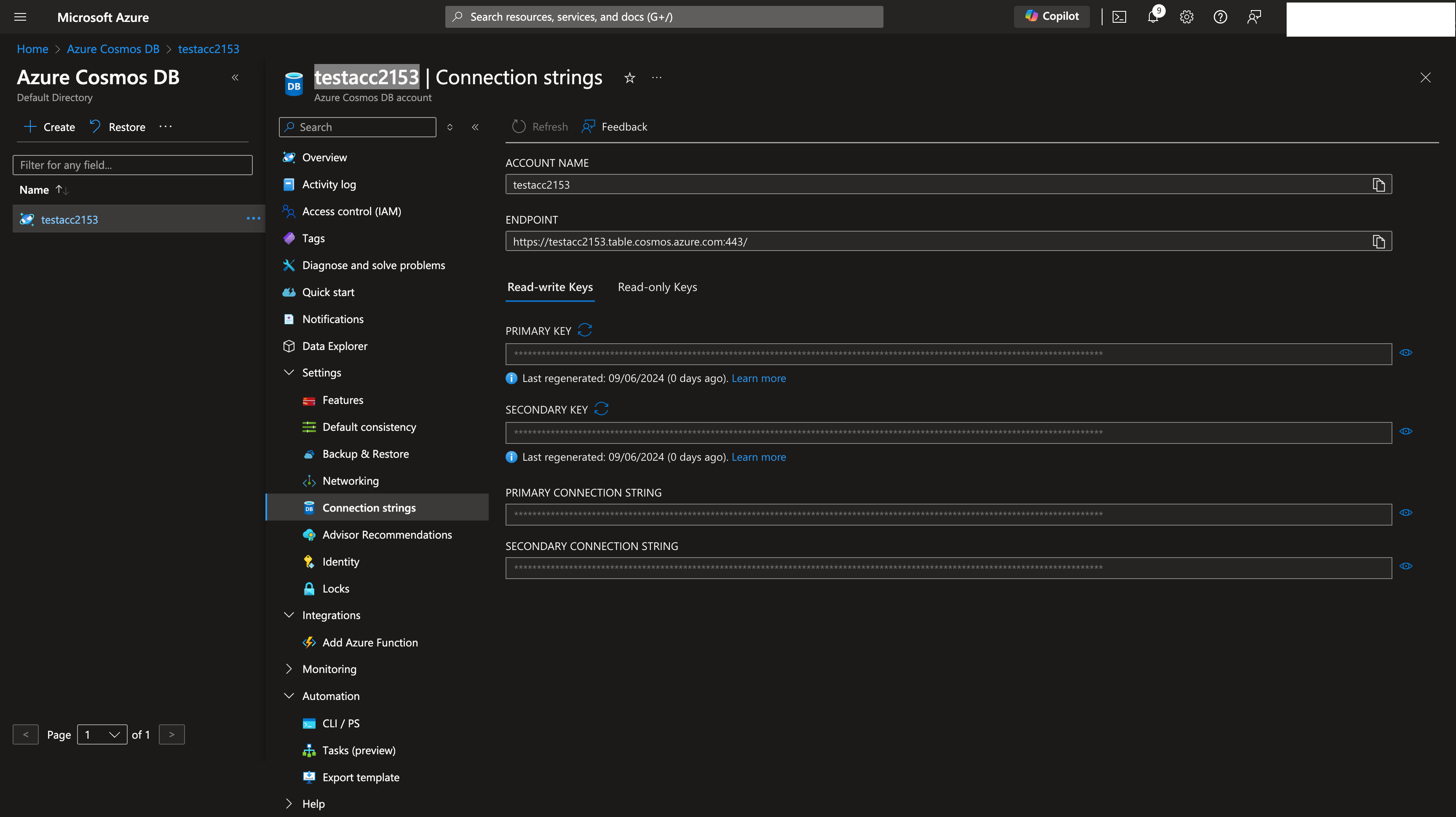

- Go to DB Account > Settings > Connection Strings

- Note down the endpoint, the key (primary key) and the name of the database that you have created, and replace the values of the properties above.

- Please find the sample data under resources

- There are 4 endpoints in place for you to test:

  - `create customer`: to create a customer
  - `get customer`: to get the created customer
  - `get all customers`: to get all customers
  - `get all customers by country`: to filter customers by country

spring.cloud.azure.cosmos.endpoint=https://contosoaccounttest.documents.azure.com:443/

spring.cloud.azure.cosmos.key=your-cosmosdb-primary-key

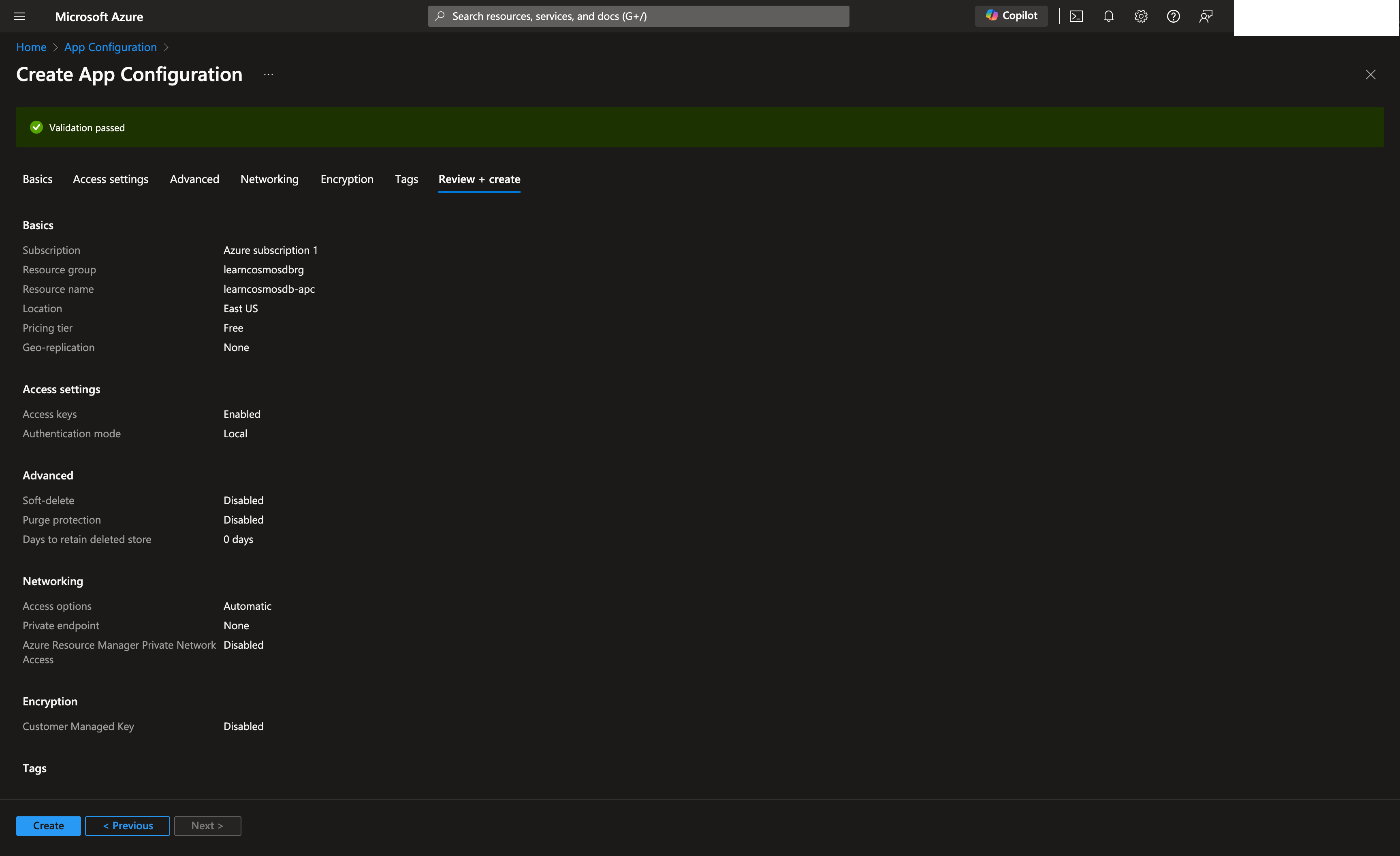

spring.cloud.azure.cosmos.database=contosoaccounttest- The app config service is there to keep your credential information, connection strings, and anything related to your service.

- Once you set it up, you can also use the credentials from the Key Vault service, or move existing credentials from your app config service to Key Vault, to add an extra level of security and encryption.

- More importantly, you can use it to store your feature flags!

- To create the service, simply reuse the resource group you created and select mostly the default settings:

⚠️ Imports with yaml files are not very convenient (aka. NOT WORKING!) ⚠️

- Simply go to Operations > Import > Source: Configuration file

- Select the configuration file to be uploaded (json or properties)

- Select the language (in our case spring)

- Select the prefix, in our case we need /application

- Go to Operations > Configuration Explorer

- After that you can create key values using the following format:

/application/spring.cloud.azure.cosmos.endpoint

/application/spring.cloud.azure.cosmos.key

/application/spring.cloud.azure.cosmos.database

(you can also add other properties in the same way.)
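The key format above is simply the chosen prefix followed by the property name. A minimal sketch of that mapping in plain Java, assuming the `/application` prefix selected during import (the helper name and the sample values are hypothetical, and nothing is sent to Azure):

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class AppConfigKeys {

    /** Prepends the App Configuration prefix to every property name. */
    static Map<String, String> withPrefix(String prefix, Map<String, String> properties) {
        Map<String, String> keyValues = new LinkedHashMap<>();
        properties.forEach((name, value) -> keyValues.put(prefix + "/" + name, value));
        return keyValues;
    }

    public static void main(String[] args) {
        Map<String, String> local = new LinkedHashMap<>();
        local.put("spring.cloud.azure.cosmos.endpoint", "https://contosoaccounttest.documents.azure.com:443/");
        local.put("spring.cloud.azure.cosmos.database", "contosoaccounttest");

        // Prints the keys as they would be entered in Configuration Explorer.
        withPrefix("/application", local).keySet().forEach(System.out::println);
    }
}
```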

- You will need to add the following dependency: implementation("com.azure.spring:spring-cloud-azure-starter-appconfiguration-config")

⚠️ You will need to rename your application.properties file to bootstrap.properties (because the Azure properties really don't like application.yaml or application.properties files!) ⚠️

- Get the connection properties under Settings > Connection string; you can use read/write or read-only keys.

- Et voilà! You will be using your application without any extra property defined in your local environment, AND it is FREE 🤩!

- User assigned

- System assigned

For this, I will reuse the analogy that is used in describing this concept.

Say that you have a car and a garage, and you need to park your car in this garage of yours. There are 3 ways to authorise yourself to this car park:

- Key

- Car mirror buttons (if any) (System assigned)

- Remote control (User assigned)

So as you can see, it fits perfectly to explain this concept. I will use system assigned identity, and let Azure take care of the authentication of my application.

- Create system assigned identity on azure (or via shell)

- Go to your app > Settings > Identity

- Status > On > Click on : Save

- Now save your object (principal) id somewhere.

- Grant access to your blob

- Now switch to your blob (or create one with default settings)

- Access Control (IAM)

- Add > Add Role Assignment > Select `Storage Blob Data Contributor`

- In the Members section > Managed Identity > + Select members > select the app for which you have just created the managed identity (this one!)

- You can verify by going to Access control > Role Assignments > At the bottom > Storage Blob Data Contributor

- Let's try with postman!

The sample data provided in this repository, including but not limited to names, addresses, phone numbers, and email addresses, are entirely fictional. Any resemblance to real persons, living or dead, actual addresses, phone numbers, or email addresses is purely coincidental.

I do not bear any responsibility for any coincidental matches to real-world data and am not responsible for any related data issues. This data is meant solely for testing and demonstration purposes.