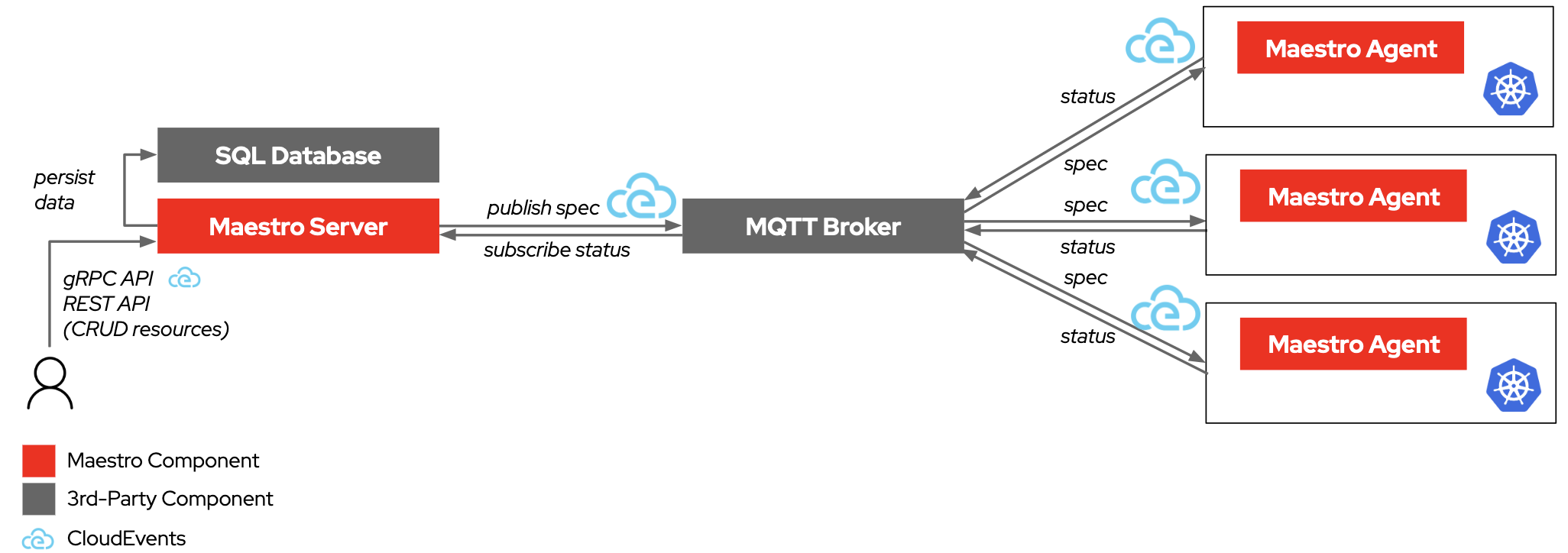

Maestro is a system that uses CloudEvents to transport Kubernetes resources to target clusters and to transport the resource status back. The resources and their status are stored in a database. The system is composed of two parts: the Maestro server and the Maestro agent.

- The Maestro server is responsible for storing the resources and their status, and for sending the resources to the message broker in the CloudEvents format. It provides RESTful APIs and gRPC APIs to manage the resources.

- The Maestro agent is responsible for receiving the resources, applying them to the target clusters, and reporting the status of the resources back.
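The flow described above can be pictured with a minimal Python sketch. It is an illustration, not Maestro's implementation. It wraps a Kubernetes manifest in an envelope carrying the four attributes that CloudEvents 1.0 requires (`specversion`, `id`, `source`, `type`). The function name, the `source` value, the event type string, and the payload layout are all hypothetical; Maestro's actual event types and encoding may differ.

```python
import json
import uuid


def wrap_manifest(manifest: dict, consumer_name: str, version: int) -> dict:
    """Wrap a manifest in a CloudEvents-style envelope (illustrative only)."""
    return {
        # Attributes required by the CloudEvents 1.0 specification.
        "specversion": "1.0",
        "id": str(uuid.uuid4()),
        "source": "maestro-server",             # hypothetical source name
        "type": "example.maestro.resource.v1",  # hypothetical event type
        # Optional attribute describing the payload encoding.
        "datacontenttype": "application/json",
        # Hypothetical payload layout: target consumer, version, manifest.
        "data": {
            "consumer_name": consumer_name,
            "version": version,
            "manifest": manifest,
        },
    }


if __name__ == "__main__":
    event = wrap_manifest(
        {"apiVersion": "v1", "kind": "ConfigMap", "metadata": {"name": "demo"}},
        consumer_name="cluster1",
        version=1,
    )
    print(json.dumps(event, indent=2))
```

In this picture the server would publish such an event to the broker, and the agent subscribed for `cluster1` would unwrap `data.manifest` and apply it.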

# 1. build the project

$ go install gotest.tools/gotestsum@latest

$ make binary

# 2. run a postgres database locally in docker

$ make db/setup

$ make db/login

root@f076ddf94520:/# psql -h localhost -U maestro maestro

psql (14.4 (Debian 14.4-1.pgdg110+1))

Type "help" for help.

maestro=# \dt

Did not find any relations.

# 3. run a mqtt broker locally in docker

$ make mqtt/setup

The initial migration creates the base data model and provides a way to add future migrations.

# Run migrations

./maestro migration

# Verify they ran in the database

$ make db/login

root@f076ddf94520:/# psql -h localhost -U maestro maestro

psql (14.4 (Debian 14.4-1.pgdg110+1))

Type "help" for help.

maestro=# \dt

List of relations

Schema | Name | Type | Owner

--------+------------+-------+---------------------

public | resources | table | maestro

public | events | table | maestro

public | migrations | table | maestro

(3 rows)

make test

make test-integration

make e2e-test

make run

To verify that the server is working, use the curl command:

curl http://localhost:8000/api/maestro/v1/resources | jq

That should return a 401 response like this, because it needs authentication:

{

"kind": "Error",

"id": "401",

"href": "/api/maestro/errors/401",

"code": "API-401",

"reason": "Request doesn't contain the 'Authorization' header or the 'cs_jwt' cookie"

}
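When scripting against the API, the error body above can be recognized by its `kind` and `id` fields. The following Python sketch parses such a body; the helper name `is_auth_error` is made up for illustration, and the field names are taken from the sample response above.

```python
import json


def is_auth_error(body: str) -> bool:
    """Return True if a response body looks like the 401 error shown above."""
    try:
        doc = json.loads(body)
    except json.JSONDecodeError:
        # Not JSON at all, so it cannot be the structured error.
        return False
    if not isinstance(doc, dict):
        return False
    return doc.get("kind") == "Error" and doc.get("id") == "401"


if __name__ == "__main__":
    sample = """
    {
      "kind": "Error",
      "id": "401",
      "href": "/api/maestro/errors/401",
      "code": "API-401",
      "reason": "Request doesn't contain the 'Authorization' header or the 'cs_jwt' cookie"
    }
    """
    print(is_auth_error(sample))
```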

Authentication in the default configuration is done through Red Hat SSO, so you need to log in with a Red Hat customer portal user in the right account (created as part of the onboarding doc). You can then retrieve the token to use below from https://console.redhat.com/openshift/token

To authenticate, use the ocm tool against your local service. The ocm tool is available from https://console.redhat.com/openshift/downloads

ocm login --token=${OCM_ACCESS_TOKEN} --url=http://localhost:8000

The list is empty if no resource has been created yet:

ocm get /api/maestro/v1/resources

{

"items": [],

"kind": "ResourceList",

"page": 1,

"size": 0,

"total": 0

}
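A list response like the one above is an envelope with `items`, `page`, `size`, and `total`. Once resources exist, each item also carries `status.ReconcileStatus.Conditions`, as in the populated listing later in this document. A minimal Python sketch for summarizing such a response follows; the function name and the choice to treat a missing status or condition as "not applied" are assumptions made for this example.

```python
def applied_resources(resource_list: dict) -> list:
    """Return the ids of items whose 'Applied' reconcile condition is True."""
    applied = []
    for item in resource_list.get("items") or []:
        status = item.get("status") or {}
        reconcile = status.get("ReconcileStatus") or {}
        for cond in reconcile.get("Conditions") or []:
            # Condition status values are the strings "True" / "False".
            if cond.get("type") == "Applied" and cond.get("status") == "True":
                applied.append(item.get("id"))
                break
    return applied


if __name__ == "__main__":
    empty = {"items": [], "kind": "ResourceList", "page": 1, "size": 0, "total": 0}
    print(applied_resources(empty))
```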

ocm post /api/maestro/v1/resources << EOF

{

"consumer_name": "cluster1",

"version": 1,

"manifest": {

"apiVersion": "apps/v1",

"kind": "Deployment",

"metadata": {

"name": "nginx",

"namespace": "default"

},

"spec": {

"replicas": 1,

"selector": {

"matchLabels": {

"app": "nginx"

}

},

"template": {

"metadata": {

"labels": {

"app": "nginx"

}

},

"spec": {

"containers": [

{

"image": "nginxinc/nginx-unprivileged",

"name": "nginx"

}

]

}

}

}

}

}

EOF
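The request body above can also be generated rather than typed by hand. The Python sketch below builds the same structure for a single-container Deployment and prints it as JSON. `deployment_resource` is a hypothetical helper, and only the fields shown in the example above are emitted.

```python
import json


def deployment_resource(consumer_name: str, name: str, image: str,
                        namespace: str = "default", replicas: int = 1,
                        version: int = 1) -> dict:
    """Build a resource request body shaped like the example above."""
    labels = {"app": name}
    return {
        "consumer_name": consumer_name,
        "version": version,
        "manifest": {
            "apiVersion": "apps/v1",
            "kind": "Deployment",
            "metadata": {"name": name, "namespace": namespace},
            "spec": {
                "replicas": replicas,
                # The selector must match the pod template labels.
                "selector": {"matchLabels": dict(labels)},
                "template": {
                    "metadata": {"labels": dict(labels)},
                    "spec": {"containers": [{"image": image, "name": name}]},
                },
            },
        },
    }


if __name__ == "__main__":
    body = deployment_resource("cluster1", "nginx", "nginxinc/nginx-unprivileged")
    print(json.dumps(body, indent=2))
```

Because the example above feeds the body to `ocm post` on standard input, the printed JSON could presumably be piped to it in the same way.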

ocm get /api/maestro/v1/resources

{

"items": [

{

"consumer_name": "cluster1",

"created_at": "2023-11-23T09:26:13.43061Z",

"href": "/api/maestro/v1/resources/f428e21d-71cb-47a4-8d7f-82a65d9a4048",

"id": "f428e21d-71cb-47a4-8d7f-82a65d9a4048",

"kind": "Resource",

"manifest": {

"apiVersion": "apps/v1",

"kind": "Deployment",

"metadata": {

"name": "nginx",

"namespace": "default"

},

"spec": {

"replicas": 1,

"selector": {

"matchLabels": {

"app": "nginx"

}

},

"template": {

"metadata": {

"labels": {

"app": "nginx"

}

},

"spec": {

"containers": [

{

"image": "nginxinc/nginx-unprivileged",

"name": "nginx"

}

]

}

}

}

},

"status": {

"ContentStatus": {

"availableReplicas": 1,

"conditions": [

{

"lastTransitionTime": "2023-11-23T07:05:50Z",

"lastUpdateTime": "2023-11-23T07:05:50Z",

"message": "Deployment has minimum availability.",

"reason": "MinimumReplicasAvailable",

"status": "True",

"type": "Available"

},

{

"lastTransitionTime": "2023-11-23T07:05:47Z",

"lastUpdateTime": "2023-11-23T07:05:50Z",

"message": "ReplicaSet \"nginx-5d6b548959\" has successfully progressed.",

"reason": "NewReplicaSetAvailable",

"status": "True",

"type": "Progressing"

}

],

"observedGeneration": 1,

"readyReplicas": 1,

"replicas": 1,

"updatedReplicas": 1

},

"ReconcileStatus": {

"Conditions": [

{

"lastTransitionTime": "2023-11-23T09:26:13Z",

"message": "Apply manifest complete",

"reason": "AppliedManifestComplete",

"status": "True",

"type": "Applied"

},

{

"lastTransitionTime": "2023-11-23T09:26:13Z",

"message": "Resource is available",

"reason": "ResourceAvailable",

"status": "True",

"type": "Available"

},

{

"lastTransitionTime": "2023-11-23T09:26:13Z",

"message": "",

"reason": "StatusFeedbackSynced",

"status": "True",

"type": "StatusFeedbackSynced"

}

],

"ObservedVersion": 1,

"SequenceID": "1744926882802962432"

}

},

"updated_at": "2023-11-23T09:26:13.457419Z",

"version": 1

}

],

"kind": "",

"page": 1,

"size": 1,

"total": 1

}

This section uses OpenShift Local as the example for deploying Maestro. If you want to deploy Maestro in an OpenShift cluster, you need to set the external_apps_domain environment variable to point to your cluster.

$ export external_apps_domain=`oc -n openshift-ingress-operator get ingresscontroller default -o jsonpath='{.status.domain}'`

Use OpenShift Local to deploy to a local OpenShift cluster. Be sure to have CRC running locally:

$ crc status

CRC VM: Running

OpenShift: Running (v4.13.12)

RAM Usage: 7.709GB of 30.79GB

Disk Usage: 23.75GB of 32.68GB (Inside the CRC VM)

Cache Usage: 37.62GB

Cache Directory: /home/mturansk/.crc/cache

Log into CRC:

$ make crc/login

Logging into CRC

Logged into "https://api.crc.testing:6443" as "kubeadmin" using existing credentials.

You have access to 66 projects, the list has been suppressed. You can list all projects with 'oc projects'

Using project "ocm-mturansk".

Login Succeeded!

Deploy maestro:

The image is pushed to your OpenShift cluster's default registry and then deployed to the cluster. You need to follow this document to expose a default registry manually and log in to the registry with podman.

$ make deploy

$ oc get pod -n maestro-root

NAME READY STATUS RESTARTS AGE

maestro-85c847764-4xdt6 1/1 Running 0 62s

maestro-db-1-deploy 0/1 Completed 0 62s

maestro-db-1-kwv4h 1/1 Running 0 61s

maestro-mqtt-6cb7bdf46c-kcczm 1/1 Running 0 63s

Create a consumer:

$ ocm login --token=${OCM_ACCESS_TOKEN} --url=https://maestro.${external_apps_domain} --insecure

$ ocm post /api/maestro/v1/consumers << EOF

{

"name": "cluster1"

}

EOF

{

"created_at":"2023-12-08T11:35:08.557450505Z",

"href":"/api/maestro/v1/consumers/3f28c601-5028-47f4-9264-5cc43f2f27fb",

"id":"3f28c601-5028-47f4-9264-5cc43f2f27fb",

"kind":"Consumer",

"name":"cluster1",

"updated_at":"2023-12-08T11:35:08.557450505Z"

}

Deploy maestro agent:

$ export consumer_name=cluster1

$ make deploy-agent

$ oc get pod -n maestro-agent-root

NAME READY STATUS RESTARTS AGE

maestro-agent-5dc9f5b4bf-8jcvq 1/1 Running 0 13s

Create a resource:

$ ocm post /api/maestro/v1/resources << EOF

{

"consumer_name": "cluster1",

"version": 1,

"manifest": {

"apiVersion": "apps/v1",

"kind": "Deployment",

"metadata": {

"name": "nginx",

"namespace": "default"

},

"spec": {

"replicas": 1,

"selector": {

"matchLabels": {

"app": "nginx"

}

},

"template": {

"metadata": {

"labels": {

"app": "nginx"

}

},

"spec": {

"containers": [

{

"image": "nginxinc/nginx-unprivileged",

"name": "nginx"

}

]

}

}

}

}

}

EOF

You should see that the pod has been created in the default namespace.

$ oc get pod -n default

NAME READY STATUS RESTARTS AGE

nginx-5d6b548959-829c7 1/1 Running 0 70s

- Add to openapi.yaml

- Generate the new structs/clients (make generate)