Author: Sam Stoltenberg

Do you want to GET RICH QUICK using data science and machine learning to predict stock prices? Going this route comes with many heartaches and many decision trees to traverse, such as:

- Do you drop sparse columns, or fill the missing data with 0?

- Do you one-hot encode an analyst rating such as "BUY" or "HOLD", or map a numerical value to each of the given ratings?

- What if there are irregularities in your price data? How do you fix them?
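To make the encoding question concrete, here is a small pandas sketch of both options for a hypothetical rating column. The ratings, the extra `"SELL"` value, and the -1/0/1 scale are illustrative assumptions, not the values used in this project.

```python
import pandas as pd

# Hypothetical analyst ratings for one symbol over four days
ratings = pd.Series(["BUY", "HOLD", "SELL", "BUY"], name="rating")

# Option 1: one-hot encode, giving one 0/1 column per distinct rating
one_hot = pd.get_dummies(ratings, prefix="rating", dtype=int)

# Option 2: map each rating onto an ordered numeric scale (assumed scale)
rating_scale = {"SELL": -1, "HOLD": 0, "BUY": 1}
numeric = ratings.map(rating_scale)

print(one_hot)
print(numeric.tolist())
```

One-hot encoding makes no assumption about the order of the ratings but adds a column per value; the numeric mapping keeps a single column and tells the network that BUY > HOLD > SELL, which may or may not be what you want it to learn.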

Our data, scraped with Selenium from an investment firm, consists of analyst opinions, performance statistics, prices, and company information for 7,000+ stock symbols from August 9, 2019 to the present. After cleaning the data and dropping irregularities, we end up with roughly 2,000 symbols.

We are predicting time series data, so we have to define things such as the number of previous days used to predict the next one. The data is then transformed into multiple matrices of `X_data` corresponding to `y_targets`: `X_data` holds all the data from the previous n day(s), and `y_targets` holds the data we are trying to predict. To know the values two days ahead, one would have to either predict all the data for the next day and then use those predictions to predict the day after, or structure the data so that each input is paired with a target two days ahead.
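A minimal NumPy sketch of this windowing, assuming a 2-D array with one row per day and one column per feature. `make_windows` and its `horizon` argument are hypothetical names; with `horizon=1` it should produce the same input/target pairing as Keras' `TimeseriesGenerator` with `length=n_days` (minus the batching), while `horizon=2` illustrates the second option of pairing each window with a target two days ahead.

```python
import numpy as np


def make_windows(data, n_days, horizon=1, target_col=0):
    """Build (X, y) pairs from an array of shape (n_timesteps, n_features).

    X[i] holds n_days consecutive rows; y[i] is the value of target_col
    `horizon` days after the last row of that window.
    """
    data = np.asarray(data)
    X, y = [], []
    for start in range(len(data) - n_days - horizon + 1):
        end = start + n_days
        X.append(data[start:end])
        y.append(data[end + horizon - 1, target_col])
    return np.array(X), np.array(y)


series = np.arange(10, dtype=float).reshape(-1, 1)  # 10 days, 1 feature
X, y = make_windows(series, n_days=3)               # predict the next day
X2, y2 = make_windows(series, n_days=3, horizon=2)  # predict two days ahead
print(X.shape, y[:3])
print(X2.shape, y2[:3])
```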

Our first networks had infinite loss because they predicted everything as 0, so we had to devise a method for finding the best network for the data. There are few plug-and-play methods for tuning neural networks, especially time series prediction networks. The method we settled on was Hyperband from kerastuner. Hyperband takes a build function, and inside that build function one can use hyperparameter choice functions (such as `hp.Choice`) that report back to Hyperband which value was chosen, so it can track what effect each value had on the network's validation loss. Our NetworkTuner can tune items such as:

- n_input (number of days to use in the prediction)

- Columns (which of the given columns to use in the prediction)

- Whether or not to scale the data between 0 and 1

- How many neurons to use in any given layer

- How much regularization, if any, to use, and which type
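Hyperband's core idea is successive halving: many configurations get a small training budget, and only the best fraction is trained further. Below is a simplified, pure-Python sketch of one such bracket, assuming a toy loss in place of real training. `successive_halving`, `toy_val_loss`, and the two-item search space are hypothetical; kerastuner's `Hyperband` runs several brackets with different starting sizes and manages training itself, so this is not its implementation.

```python
import random


def successive_halving(configs, evaluate, min_epochs=1, max_epochs=27, factor=3):
    """One simplified Hyperband bracket.

    Score every config at the current epoch budget, keep the best 1/factor,
    multiply the budget by factor, and repeat until one config is left or
    max_epochs is reached. Lower scores (e.g. val_loss) are better.
    """
    survivors = list(configs)
    epochs = min_epochs
    while True:
        # Rank surviving configs by their score at this budget
        ranked = sorted(range(len(survivors)),
                        key=lambda i: evaluate(survivors[i], epochs))
        if len(survivors) == 1 or epochs >= max_epochs:
            return survivors[ranked[0]]
        keep = max(1, len(survivors) // factor)
        survivors = [survivors[i] for i in ranked[:keep]]
        epochs = min(max_epochs, epochs * factor)


# Hypothetical search space in the style of the tunable items above
space = {"input_neurons": [2, 4, 8, 16], "n_days": [1, 2, 3]}
rng = random.Random(0)
configs = [{name: rng.choice(values) for name, values in space.items()}
           for _ in range(27)]


def toy_val_loss(config, epochs):
    # Stand-in for training: pretend 8 neurons and 2 days are ideal,
    # and that more epochs lower the loss slightly
    return (abs(config["input_neurons"] - 8)
            + abs(config["n_days"] - 2) + 1 / epochs)


best = successive_halving(configs, toy_val_loss)
print(best)
```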

Alongside Hyperband we also developed a cross-validation method, since kerastuner does not supply one out of the box for time series. Cross-validation ensures that the parameters are not tuned solely for one set of testing data. K validation sets are also held back throughout the tuning process to test the network at the end of tuning.
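A minimal sketch of a time-ordered k-fold split in the spirit of this cross-validation. The fold layout (contiguous blocks from `np.array_split`), the 20% test and validation fractions, and the function name are assumptions; the exact ratios `NetworkTuner` uses are not stated here.

```python
import numpy as np


def time_series_folds(n_samples, k_folds, test_frac=0.2, val_frac=0.2):
    """Split row indices 0..n_samples-1 into k contiguous folds, then split
    each fold chronologically into train, test, and held-back validation."""
    folds = []
    for fold in np.array_split(np.arange(n_samples), k_folds):
        n_test = max(1, int(len(fold) * test_frac))
        n_val = max(1, int(len(fold) * val_frac))
        n_train = len(fold) - n_test - n_val
        train = fold[:n_train]                  # earliest rows: fit on these
        test = fold[n_train:n_train + n_test]   # next rows: val_loss while tuning
        val = fold[n_train + n_test:]           # latest rows: held back until the end
        folds.append((train, test, val))
    return folds


for train, test, val in time_series_folds(100, k_folds=5):
    print(train[[0, -1]], test[[0, -1]], val[[0, -1]])
```

Nothing is shuffled, so within each fold the test and validation rows always come after the rows the network trains on, which avoids leaking future prices into training.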

- Obtain the data

- Scrub the data

- Explore the data

- Model the data

- Interpret the data

- Reference

- A Jupyter notebook `main.ipynb` detailing my EDA and neural network processes.

- A technical `presentation.pdf` of the project.

- A Python script `tune.py`, which is run from a shell with:

```shell
python tune.py [name_of_network]
# name_of_network being a predefined name correlating to a
# function that tunes that specific network on predefined
# hyper-parameters
```

- A Jupyter notebook `Pull and clean data.ipynb` for pulling and replacing all the pickles of data, refreshing data in the `stock_cleaned` SQL server, and refreshing the Firebase database.

- Folder `db` with files `firebase.py` and `database.py` for connecting to and posting to Google Firebase and our SQL server.

- Folder `modeling` with files:
  - `build.py` with class `NetworkBuilder`, which takes parameters that directly correspond to how a network is put together. This class is also used for tuning those same parameters.
  - `create.py` with class `NetworkCreator`, which does everything from preparing the time series data to creating an HTML report on how well the model performed on the train, test, and validation data.
  - `sequential.py` with class `CustomSequential`, which wraps a Keras `Sequential` model and overrides its `fit` function to implement a custom cross-validation method.
  - `tuner.py` with class `NetworkTuner` for tuning a neural network's architecture and data processing methods.

- Folder `old` (unorganized) with files:
  - `Old Modeling.ipynb`, a Jupyter notebook where I failed to predict on all the data.
  - `Old main.ipynb`, my original Jupyter notebook containing the scrubbing process and attempts at modeling.
  - `Old main2.ipynb`, a Jupyter notebook showing my attempt at predicting all the data from three sides before realizing it was impossible with my single GPU, and that company info is irrelevant there since it is unchanging.
  - `Pull and update data.ipynb`, an almost-working notebook for updating the data rather than pulling all of it and refreshing everything.
  - `scratch.ipynb`, a Jupyter notebook where I really dug into time series data, exactly what the generator was doing, and forecasting.

- Folder `reports` containing HTML reports of how each model performed, and which columns directly affected their performance.

- File `styles/custom.css` containing the CSS used to style the Jupyter notebooks.

- Folder `test_notebooks` (unorganized) with files:
  - `Firebase Test.ipynb`, a Jupyter notebook.
  - `Prediction_testing.ipynb`, a Jupyter notebook testing predictions with my old method of Greek-god-named models.
  - `dashboard_test.ipynb`, a Jupyter notebook with my first tests of Plotly graphs and my scraped data for my website.
  - `model_scratch_testing.ipynb`, a Jupyter notebook containing the actual function tests used in the early development of my `NetworkCreator` class.

- Reindex to valid dates from 2019-08-09 onwards, excluding the four days that have very little data: '2019-12-09', '2020-06-23', '2020-06-24', and '2020-06-25'

- Forward interpolate the data with a limit of three days. For example, if 6-25 had a valid price and the four days after it were null, it would fill the first three but not the fourth

- Drop symbols with null values

- Post to the `stock_cleaned` SQL server

- Pickle

- Make an apply column which is `num`/`den`

- Load in performance and clean

- Drop symbols not in price symbols

- Match index to price index

- Fill null `ExDividend` dates with 1970-01-01, then encode them as days since that date so they are numerical

- Decide which columns to fill, and which columns to fill and then drop the symbol if it still has null values

- Interpolate null values for both groups, then fill the remaining NaN values in the columns to fill

- Drop columns that have a negative minimum and still have many null values

- Drop symbols that still have null values in the columns with a negative minimum, as filling with 0 would not be adequate

- Add price to performance

- Apply splits

- Separate out penny stocks (stocks priced below $1)

- Post to the `stock_cleaned` SQL server

- Pickle the penny and non-penny performances

- Split out the symbols that are also in the performance symbols

- Fill null text values with `unknown`

- Pickle.

- Interpolate null values by symbol, then fill the rest with 0

- Map text values to numeric

- Convert all to float

- Post to the `stock_cleaned` SQL server

- Pickle

- One-hot encode the company data

- Combine the three data frames into one
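The price-cleaning steps at the top of this list can be sketched in pandas as follows. This is a minimal sketch under stated assumptions: `clean_prices` is a hypothetical helper, prices are a DataFrame with one column per symbol, valid dates are taken to be business days (`pd.bdate_range` ignores market holidays), and "forward interpolate" is read as `interpolate(limit=3, limit_direction="forward")`.

```python
import numpy as np
import pandas as pd

# Days with very little scraped data (from the list above)
BAD_DAYS = pd.to_datetime(["2019-12-09", "2020-06-23", "2020-06-24", "2020-06-25"])


def clean_prices(prices, start="2019-08-09", end=None):
    """prices: DataFrame indexed by date, one column per symbol."""
    end = end or prices.index.max()
    # Assumption: valid dates are business days minus the sparse days
    valid_dates = pd.bdate_range(start, end).difference(BAD_DAYS)
    prices = prices.reindex(valid_dates)
    # Fill at most three consecutive missing days in each gap
    prices = prices.interpolate(limit=3, limit_direction="forward")
    # Drop any symbol that still has gaps
    return prices.dropna(axis=1)


# Hypothetical prices: AAA has a four-day gap, BBB a two-day gap
days = pd.bdate_range("2020-06-29", "2020-07-09")
prices = pd.DataFrame({
    "AAA": [10.0, np.nan, np.nan, np.nan, np.nan, 15.0, 16.0, 17.0, 18.0],
    "BBB": [5.0, np.nan, np.nan, 8.0, 9.0, 10.0, 11.0, 12.0, 13.0],
}, index=days)
cleaned = clean_prices(prices, start="2020-06-29")
print(cleaned)
```

Because `limit=3` caps how many consecutive values are filled, a four-day gap keeps one null, and `dropna(axis=1)` then removes that symbol, as in the 6-25 example in the list.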

After the process is complete, we update Firebase for the website with the performance and penny-stock performance data, and possibly the company and analyst data if they are added later.
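Two of the performance steps in the cleaning list above, applying splits and encoding `ExDividend` dates, might look like this in pandas. The `splits` layout (`symbol`, `date`, `num`, `den`), the reading of `num`/`den` as new shares per old share, and both function names are assumptions for illustration.

```python
import pandas as pd

EPOCH = pd.Timestamp("1970-01-01")


def apply_splits(prices, splits):
    """Divide each symbol's pre-split prices by its split ratio.

    prices: DataFrame indexed by date, one column per symbol.
    splits: DataFrame with (assumed) columns symbol, date, num, den, where
            num/den is new shares per old share (4/1 for a 4-for-1 split).
    """
    adjusted = prices.copy()
    for split in splits.itertuples(index=False):
        ratio = split.num / split.den         # the `apply` column
        before = adjusted.index < split.date  # rows priced before the split
        adjusted.loc[before, split.symbol] /= ratio
    return adjusted


def days_since_epoch(dates):
    """Fill missing ExDividend dates with 1970-01-01, then encode as day counts."""
    filled = pd.to_datetime(dates).fillna(EPOCH)
    return (filled - EPOCH).dt.days


# Hypothetical symbol with a 4-for-1 split on 2021-03-03
days = pd.bdate_range("2021-03-01", "2021-03-04")
prices = pd.DataFrame({"XYZ": [400.0, 404.0, 100.0, 101.0]}, index=days)
splits = pd.DataFrame({"symbol": ["XYZ"], "date": [pd.Timestamp("2021-03-03")],
                       "num": [4], "den": [1]})
print(apply_splits(prices, splits)["XYZ"].tolist())
print(days_since_epoch(pd.Series(["1970-01-11", None])).tolist())
```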

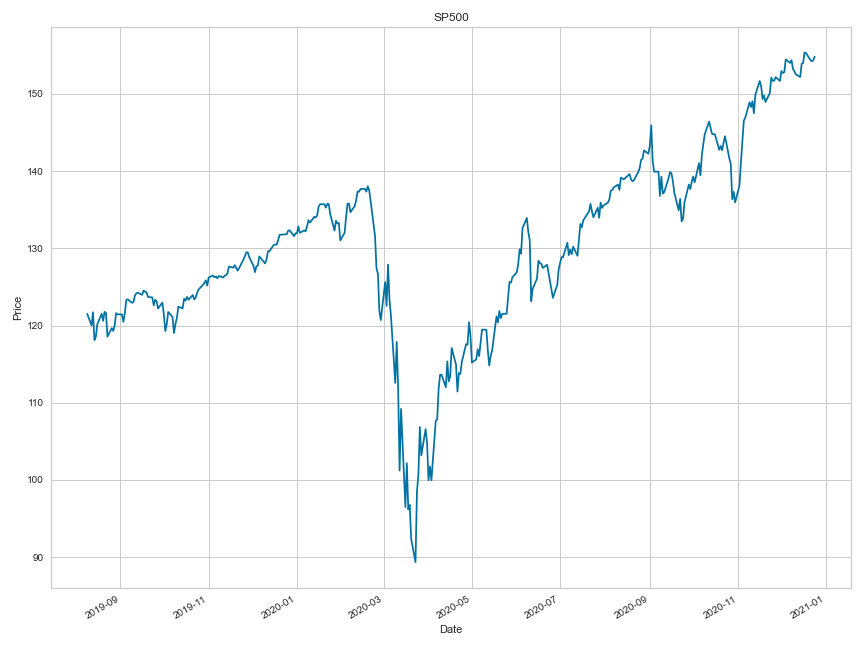

This is an average of our S&P 500 prices; you can clearly see the COVID-19 dip in March.

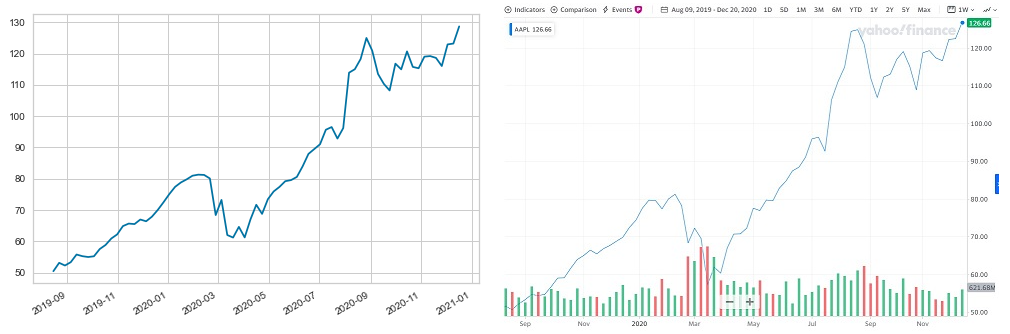

As you can see below, our data is not perfect since it is only collected once per day, but we have many more features than we know what to do with.

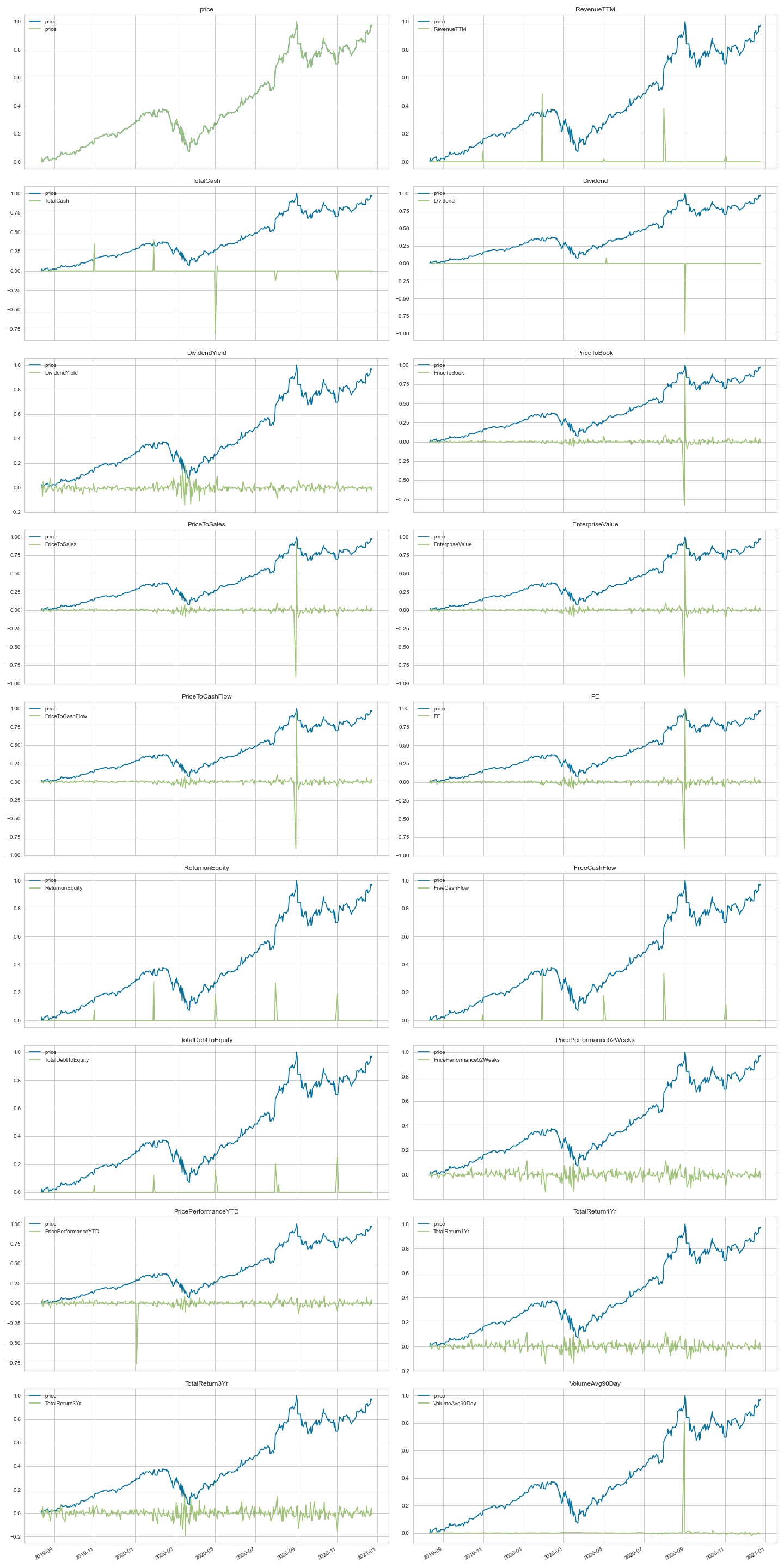

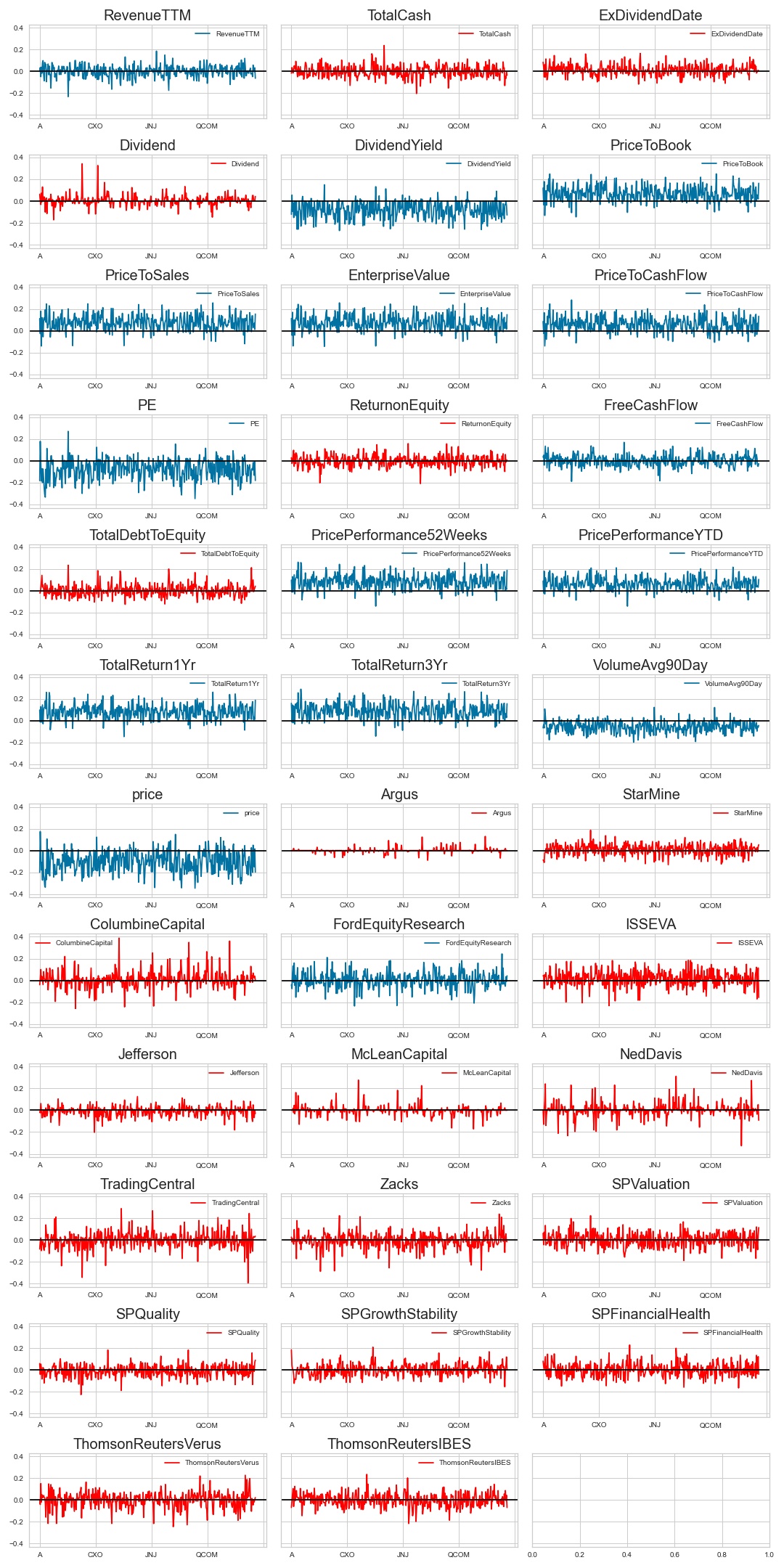

Here we difference each feature, so the value for the 2nd day becomes (2nd day) minus (1st day), and so on. We then plot that on the same scale as price to see if there are any indicators of price jumps, and to sanity-check our data.

- You can see where AAPL had a stock split when `VolumeAvg90Day` peaked.

- Features such as `ReturnonEquity` come from quarterly reports, so they only show differences at quarter boundaries.
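A small sketch of the differencing step, assuming one DataFrame per symbol with a hypothetical `Price` column. How the differenced features are put "on the same scale as price" is not spelled out; min-max rescaling into the price range is an assumption here.

```python
import pandas as pd


def diff_on_price_scale(df, price_col="Price"):
    """Difference every non-price feature (day n minus day n-1), then min-max
    rescale each differenced feature into the price column's range."""
    diffs = df.drop(columns=price_col).diff().iloc[1:]  # first row has no previous day
    price = df[price_col].iloc[1:]
    span = (diffs.max() - diffs.min()).replace(0, 1)    # avoid dividing by zero
    scaled = (diffs - diffs.min()) / span               # each column now in [0, 1]
    return price.min() + scaled * (price.max() - price.min())


# Hypothetical daily values for one symbol
df = pd.DataFrame({"Price": [10.0, 12.0, 11.0, 14.0],
                   "VolumeAvg90Day": [100.0, 150.0, 130.0, 200.0]})
print(diff_on_price_scale(df))
```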

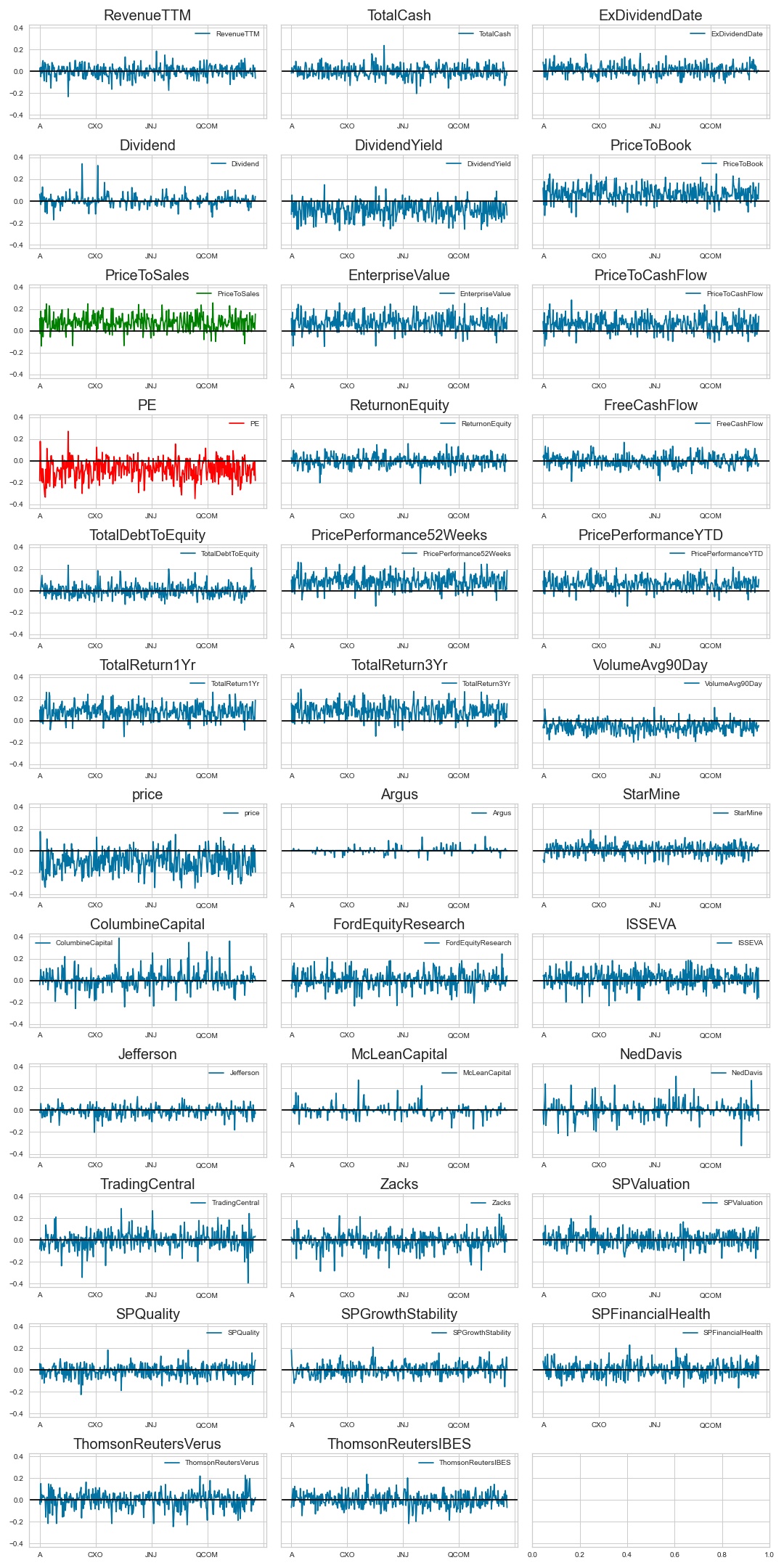

Pay special attention to the columns in red and green, as they show negative and positive correlation, respectively, with the next day's price.
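One way to compute a next-day correlation like this is to shift the price back one day and correlate every feature against it. `next_day_correlation` and the `Price` column name are hypothetical, and the project's own computation may differ.

```python
import pandas as pd


def next_day_correlation(df, price_col="Price"):
    """Correlate each feature on day t with the price on day t+1."""
    next_price = df[price_col].shift(-1)  # price one day ahead (last row is NaN)
    features = df.drop(columns=price_col)
    # Series.corr drops the NaN pair created by the shift
    return features.corrwith(next_price).sort_values()


# Hypothetical features: one rises with price, one falls
df = pd.DataFrame({"Price": [1.0, 2.0, 3.0, 4.0, 5.0],
                   "Up": [0.0, 1.0, 2.0, 3.0, 4.0],
                   "Down": [4.0, 3.0, 2.0, 1.0, 0.0]})
print(next_day_correlation(df))
```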

Here we took the overall prediction quality of each column and plotted the summed quality for each symbol. The quality was determined by how well a given feature correlated with changes in all the other features.

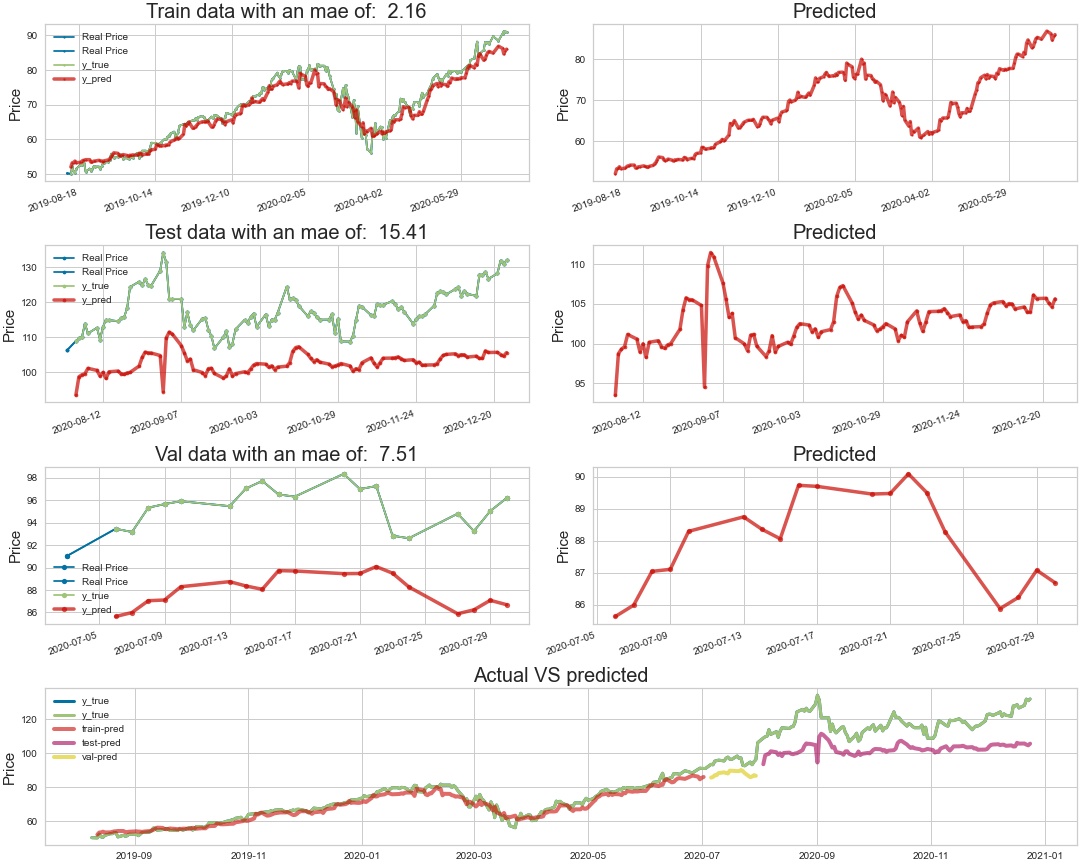

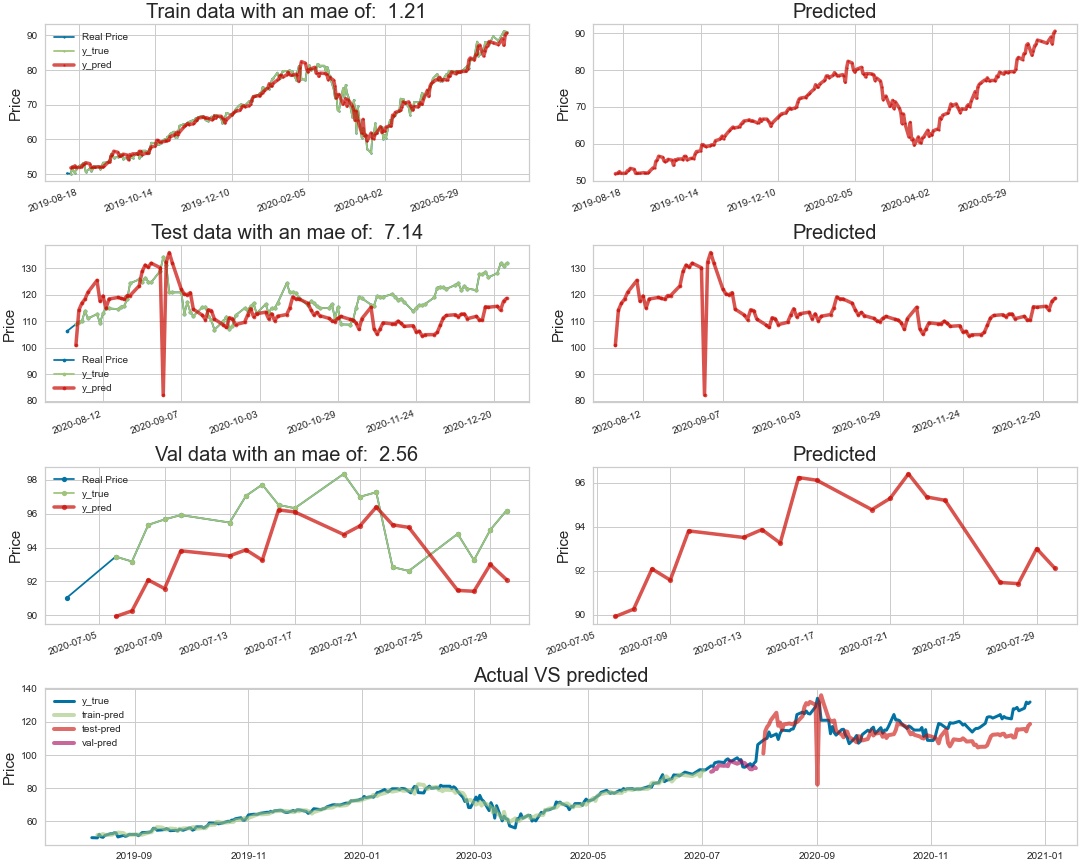

- The network is not doing too well at predicting the test or validation data

- The drop in quality on the testing data shows through, as AAPL had a stock split in September 2020

After some slight manual tuning of the network, here are the predictions.

- Much better than the base model

- You can see the same split-related drop in quality on the testing data; removing outliers might help.

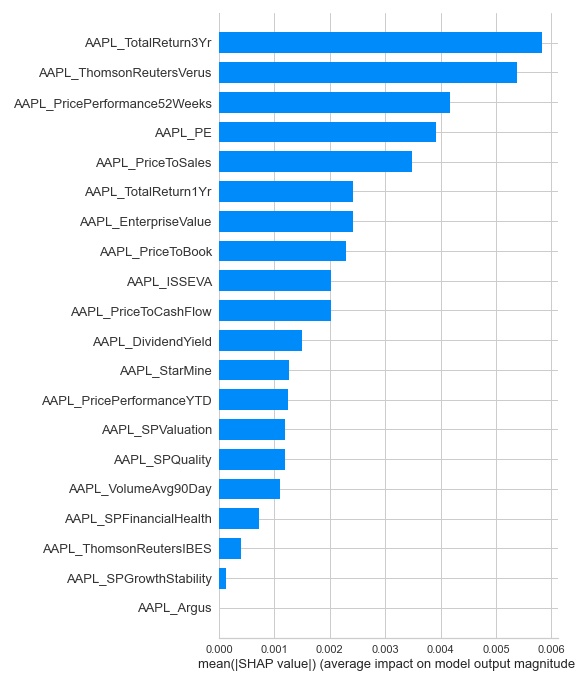

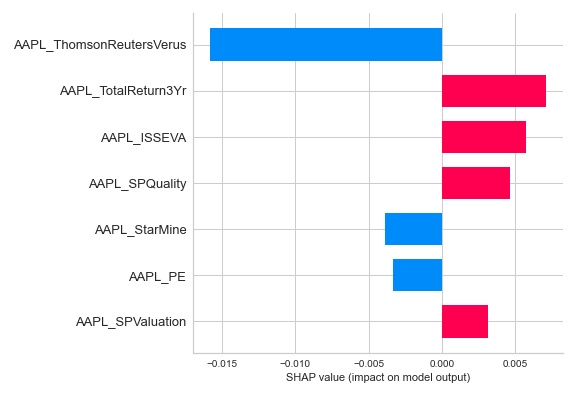

Here we use SHAP to measure how much each column is affecting the network.

- AAPL_PE is having the greatest effect on predicting the next day

Here we use SHAP to see how each column is affecting the network.

- An increase in AAPL_TotalReturn1Yr predicts that price will go up. This agrees with our correlation analysis, where AAPL_TR1YR correlated positively with price.

Coming soon...

Here we will do a simple walkthrough of the hyper-parameter tuning process.

Here is a small test for `tune.py`. You can see that many different parameters are defined, such as:

- `input_neurons`, which corresponds to how many neurons will be used in the input layer.
- `n_days`, which corresponds to how many days are used for predicting the next one, i.e. the length of the `TimeseriesGenerator`.

```python
import copy

import pandas as pd

# Import our NetworkTuner
from modeling.tuner import NetworkTuner

if __name__ == "__main__":
    # Define parameters to tune
    parameters = {
        'input_neurons': [2, 4, 8, 16],
        'input_dropout_rate': [.1, .3, .5],
        'use_input_regularizer': [0, 1, 2],
        'input_regularizer_penalty': [0.01, 0.05, 0.1, 0.3],
        'n_hidden_layers': [1, 3, 5, 8],
        'hidden_dropout_rate': [0.0, .3, .5, .9],
        'hidden_neurons': [16, 32, 64],
        'use_hidden_regularizer': [0, 1, 2],
        'hidden_regularizer_penalty': [0.01, 0.05, 0.1, 0.3],
        'patience': [5, 25, 50, 100],
        'batch_size': [32, 64, 128],
        'use_early_stopping': [0, 1],
        'n_days': [1, 2, 3]
    }

    # Build the test data frame
    _list = list(range(20))
    df = pd.DataFrame({
        'apple': copy.copy(_list),
        'orange': copy.copy(_list),
        'banana': copy.copy(_list),
        'pear': copy.copy(_list),
        'cucumber': copy.copy(_list),
        'tomato': copy.copy(_list),
        'plum': copy.copy(_list),
        'watermelon': copy.copy(_list)
    })

    # Define which columns are feature(s) and which are the target(s)
    X_cols = list(df.columns)
    y_cols = 'banana'

    # On the instantiation of NetworkTuner our data is split
    # into k many folds, and then each fold is split again into
    # training, testing, and validation data.
    # Instantiate our NetworkTuner
    nt = NetworkTuner(
        df=df, X_cols=X_cols,
        y_cols=y_cols, k_folds=5, max_n_days=3
    )

    # Call the tune function, passing the search space so that
    # tune() can forward it to the build function
    nt.tune(
        'Albert', max_epochs=100, **parameters
    )
```

When `nt.tune` is run, the following function is called from `modeling.tuner.NetworkTuner`:

```python
# Imports assumed at the top of modeling/tuner.py
from functools import partial

import kerastuner as kt


def tune(self, name, max_epochs=10, **parameters):
    """Running the tuner with kerastuner.Hyperband"""
    # Feeding parameters to tune into the build function
    # before feeding it into the Hyperband
    self.build_and_fit_model = partial(
        self.build_and_fit_model, **parameters
    )

    # Register Logger dir and instantiate kt.Hyperband
    # (Logger is the project's own logging class, defined elsewhere)
    Logger.register_directory(name)
    tuner = kt.Hyperband(self.build_and_fit_model,
                         objective='val_loss',
                         max_epochs=max_epochs,
                         factor=3,
                         directory='./tuner_directory',
                         project_name=name,
                         logger=Logger)

    # Start the search for best hyper-parameters; the NetworkTuner
    # itself is passed through to CustomSequential.fit
    tuner.search(self)

    # Get the best hyper-parameters
    best_hps = tuner.get_best_hyperparameters(num_trials=1)[0]

    # Display the best hyper-parameters
    print(f"""The hyperparameter search is complete.
The optimal hyper-parameters are:
{best_hps.values}
""")
```

The `NetworkBuilder` has a series of functions, such as the one below, for searching the different parameters and getting each selection from Hyperband. Here is a small cut-out of our input layer showing where Hyperband makes choices:

```python
input_neurons = hp.Choice('input_neurons', input_neurons)
model.add(LSTM(input_neurons))
```

As the tuner begins its search, we move to our `CustomSequential`, which the `NetworkTuner` uses as its primary network when tuning. `CustomSequential` overrides the `fit` function of `tensorflow.keras.models.Sequential` to implement a cross-validation split. A simplified version of `CustomSequential.fit` is defined as follows:

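```python
import numpy as np
from tensorflow.keras.models import Sequential


class CustomSequential(Sequential):
    # k_folds and n_days are assumed to be set on the instance when the
    # NetworkBuilder creates the model (not shown in this excerpt)

    def fit(self, nt, **kwargs):
        """
        Overrides model fit to call it k_folds times
        then averages the loss and val_loss to return back
        as the history.
        """
        histories = []
        h = None
        # Iterate over number of k_folds
        for k in range(1, self.k_folds + 1):
            train, test, val = nt.n_day_gens[self.n_days][k]
            # Split data and targets (first batch of each generator)
            X, y = train[0]
            X_t, y_t = test[0]
            # Calling Sequential.fit() with each fold; weights carry
            # over between folds in this simplified version
            h = super(CustomSequential, self).fit(
                X, y,
                validation_data=(X_t, y_t),
                **kwargs)
            histories.append(h.history)

        # Average each metric epoch-by-epoch across folds, truncating to
        # the shortest history in case early stopping ended a fold early
        n_epochs = min(len(hist['loss']) for hist in histories)
        for key in ('loss', 'val_loss'):
            h.history[key] = list(
                np.mean([hist[key][:n_epochs] for hist in histories], axis=0)
            )
        return h
```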

Data can be manipulated in many different ways, and networks can be tuned in many different ways. To accurately predict the stock market, one would have to stumble upon a lucky set of hyper-parameters and a training set that the big players have not already tried on their huge servers. The chosen parameters would also not work forever.

Over time, if you are trading in large volumes, the market would become "used" to your predictions: the market movers would start basing their predictions on yours, and yours would become useless.

Coming soon...

- Cluster on absolute correlation, taking the correlation between different symbols.

- Tune network on which columns are being used for predictions.

- Tune network with vs without difference data and/or scaling.

- Forecast tomorrow's prices

```text
\--- bin
|
\--- db
|
\--- img
|
\--- modeling
|    \--- tests
|         \--- _python
|         \--- create
|         \--- tuner
|
\--- old
|
\--- reports
|    \--- aapl_price_w_aapl_info
|    \--- aapl_price_w_all_price
|    \--- aapl_price_w_sector
|
\--- styles
|
\--- test_notebooks
```

```text
\--- bin
|    | __init__.py
|    | anomoly.py
|    | database-schema.py
|    | NN.py
|    | out.png
|    | correlation data csv files
|
\--- db
|    | __init__.py
|    | database.py
|    | firebase.py
|
\--- img
|    | flow.png
|
\--- modeling
|    \--- tests
|    |    \--- _python
|    |    |    | test_param_setting.py
|    |    |
|    |    \--- create
|    |    \--- tuner
|    |         | test_cv.py
|    |         \--- val_folds
|    |
|    | __init__.py
|    | build.py
|    | create.py
|    | sequential.py
|    | tuner.py
|
\--- old
|    | Old main.ipynb
|    | Old main2.ipynb
|    | Old model_creation.ipynb
|    | Old Modeling.ipynb
|    | Pull and update data.ipynb
|    | scratch.ipynb
|    | scratch.py
|
\--- reports
|    \--- aapl_price_w_aapl_info
|    \--- aapl_price_w_all_price
|    \--- aapl_price_w_sector
|
\--- styles
|    | custom.css
|
\--- test_notebooks
|    | dashboard_test.ipynb
|    | Firebase Test.ipynb
|    | model_scratch_testing.ipynb
|    | Prediction_testing.ipynb
|
| .gitignore
| main.ipynb
| presentation.pdf
| Pull and clean data.ipynb
| Readme.ipynb
| README.md
| run_tests.py
| todo.txt
| tune.py
```