- train_audios: Contains 182 folders; each folder contains multiple audio files of a particular bird species.

- train_metadata.csv: Contains metadata for each audio file.

- unlabeled_soundscapes: Contains multiple unlabeled audio recordings of bird sounds.

- eBird_Taxonomy_v2021.csv: Data on the relationships between different species.

- test_soundscapes: Only the hidden rerun copy of the test soundscape directory is populated.

- SampleSubmission.csv: A sample submission file in the correct format. The first column is the filename, and the remaining columns are scores for each bird species.

- Evaluation metric: a version of macro-averaged ROC-AUC that skips classes which have no true positive labels.
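For local evaluation, the metric can be sketched in NumPy. This is a minimal sketch, not the official scoring code: each class's ROC-AUC is computed as the fraction of (positive, negative) pairs ranked correctly (ties count half), classes with no positive labels are skipped, and the remaining AUCs are macro-averaged. Skipping classes that have no negatives is an extra assumption of this sketch, since AUC is undefined for them. The function names are hypothetical; `sklearn.metrics.roc_auc_score` would give the same per-class values.

```python
import numpy as np


def binary_auc(y_true: np.ndarray, y_score: np.ndarray) -> float:
    """ROC-AUC as P(random positive outscores random negative), ties counting 0.5."""
    pos = y_score[y_true == 1]
    neg = y_score[y_true == 0]
    # Compare every positive score against every negative score.
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


def macro_auc_skip_empty(y_true: np.ndarray, y_score: np.ndarray) -> float:
    """Macro-average per-class AUC over (n_samples, n_classes) 0/1 labels and scores."""
    aucs = []
    for c in range(y_true.shape[1]):
        n_pos = int(y_true[:, c].sum())
        if n_pos == 0:
            continue  # no true positives for this class: skipped, as the metric describes
        if n_pos == len(y_true):
            continue  # no negatives: AUC undefined (extra assumption of this sketch)
        aucs.append(binary_auc(y_true[:, c], y_score[:, c]))
    return float(np.mean(aucs))
```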

- Training audio clips vary in length, and the sample rate is 32,000 Hz

- For example, a 6-second clip read with soundfile has 6 × 32000 = 192000 samples

- So, for a single-channel (mono) file, the resulting array has shape (192000,)

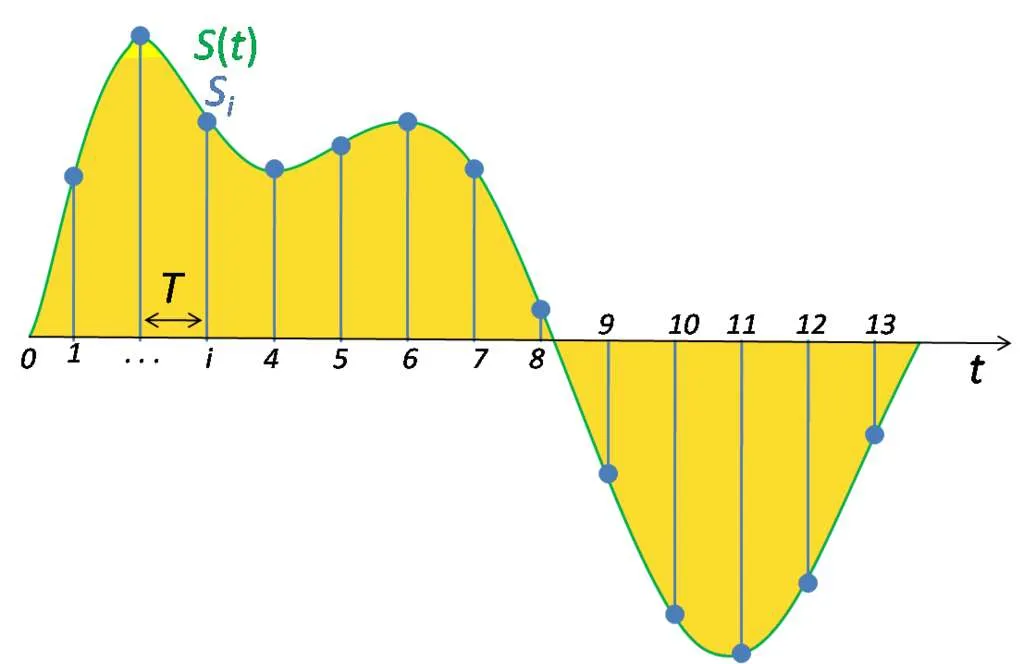

- Since predictions are required for every 5-second window, we split the audio into 5-second chunks.

- We then select one of the 5-second chunks and convert it into a mel spectrogram image of shape (3, 128, 201), i.e. 3 channels, 128 mel bins and 201 time frames.

- When batched, the shape becomes (bs, 3, 128, 201), where bs is the batch size.

- The model outputs a tensor of shape (bs, num_classes), i.e. (bs, 182).
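The shape bookkeeping above can be checked with plain NumPy. This sketch assumes a hop length of 800 samples, which these notes do not state but which is one choice that gives 201 frames for a 5-second window (160,000 samples), and it assumes the last partial window is zero-padded rather than dropped. The helper names are hypothetical, and the zero array stands in for a real mel spectrogram.

```python
import numpy as np

SAMPLE_RATE = 32000  # training audio sample rate
WINDOW_S = 5         # prediction window length in seconds
HOP_LENGTH = 800     # assumption: hop (in samples) that yields 201 frames per window
N_MELS = 128         # mel bins, matching the (3, 128, 201) shape above


def split_into_windows(audio: np.ndarray, sr: int = SAMPLE_RATE, window_s: int = WINDOW_S) -> np.ndarray:
    """Split a 1-D signal into non-overlapping windows, zero-padding the last partial window."""
    win = sr * window_s
    n_windows = max(1, int(np.ceil(len(audio) / win)))
    padded = np.zeros(n_windows * win, dtype=audio.dtype)
    padded[: len(audio)] = audio
    return padded.reshape(n_windows, win)


def n_frames(n_samples: int, hop_length: int = HOP_LENGTH) -> int:
    """Frames of a centered STFT (center=True, the librosa/torchaudio default) with even n_fft."""
    return 1 + n_samples // hop_length


windows = split_into_windows(np.zeros(12 * SAMPLE_RATE, dtype=np.float32))  # a 12-second clip
print(windows.shape)               # (3, 160000)
print(n_frames(windows.shape[1]))  # 201

# Placeholder for one mel spectrogram of a window: (N_MELS, frames).
spec = np.zeros((N_MELS, n_frames(windows.shape[1])), dtype=np.float32)
image = np.stack([spec, spec, spec])  # repeat to 3 channels -> (3, 128, 201)
batch = np.stack([image] * 4)         # a batch with bs = 4 -> (4, 3, 128, 201)
print(image.shape, batch.shape)       # (3, 128, 201) (4, 3, 128, 201)
```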

- Set up local evaluation for the competition metric

- Do more EDA on the available data

- Apply augmentation in the next submission.

To digitize a sound wave, we must turn the signal into a series of numbers so that we can feed it into our models. This is done by measuring the amplitude of the sound at fixed intervals of time.

Each such measurement is called a sample, and the sample rate is the number of samples per second. For instance, a common sample rate is 44,100 samples per second. That means a 10-second music clip would have 441,000 samples!
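The sampling idea can be illustrated by measuring a pure tone at fixed time intervals. The 440 Hz frequency is an arbitrary choice for illustration.

```python
import numpy as np

SAMPLE_RATE = 44100  # samples per second, as in the example above
DURATION_S = 10
FREQ_HZ = 440.0      # illustrative tone; any frequency below SAMPLE_RATE / 2 is representable

# Sample times are evenly spaced, 1 / SAMPLE_RATE seconds apart.
t = np.arange(SAMPLE_RATE * DURATION_S) / SAMPLE_RATE
# Measure the amplitude of the tone at each sample time.
samples = np.sin(2 * np.pi * FREQ_HZ * t)

print(len(samples))  # 441000
```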

- Read the metadata into a dataframe

- Split the data into training and validation sets

- Add a column with the path to each audio file

- Use this dataframe to convert each audio file to an image
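A minimal pandas sketch of these steps, assuming train_metadata.csv has primary_label and filename columns, with filename given relative to the audio folder. The per-species 80/20 split, the helper name prepare_metadata and the file paths in the usage comment are assumptions, not the notebook's actual choices. Species with very few recordings may end up with no validation rows.

```python
import os

import pandas as pd


def prepare_metadata(df: pd.DataFrame, audio_dir: str, valid_frac: float = 0.2, seed: int = 42):
    """Add a path column and split each species into train/valid dataframes.

    Assumes `df` has `primary_label` and `filename` columns, with `filename`
    relative to `audio_dir`.
    """
    df = df.copy()
    df["path"] = df["filename"].map(lambda f: os.path.join(audio_dir, f))
    # Sample roughly valid_frac of each species' clips for validation.
    valid = df.groupby("primary_label").sample(frac=valid_frac, random_state=seed)
    train = df.drop(index=valid.index)
    return train.reset_index(drop=True), valid.reset_index(drop=True)


# Hypothetical usage (paths are assumptions):
# meta = pd.read_csv("train_metadata.csv")
# train_df, valid_df = prepare_metadata(meta, "train_audios")
```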

- Read an audio file using the soundfile.read method

- Chop the audio into multiple 5-second segments

- Convert each segment to a spectrogram

- Stack all the spectrogram images into one array using numpy

- Save the stacked array to disk

- Use the train and valid dataframes to create datasets

- Read a row of the dataframe

- Load the corresponding saved numpy file, keeping at most a configurable maximum number of images

- During training, randomly select one of the images

- Convert it to a tensor and augment it

- Stack the image with 2 copies of itself to make it 3-channel

- Normalize the image

- Create batches of 64 images to pass to the model
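A PyTorch Dataset following these steps might look like the sketch below. The column names npy_path and label_idx, the default of 10 images per file, taking the first image during validation, per-image standardization and the absence of augmentation are all assumptions of this sketch; the class name is hypothetical.

```python
import numpy as np
import pandas as pd
import torch
from torch.utils.data import DataLoader, Dataset


class BirdSpecDataset(Dataset):
    """Hypothetical dataset: one dataframe row -> (3-channel spectrogram, one-hot target).

    Assumes an `npy_path` column pointing to a saved (n_windows, 128, 201) stack
    and a `label_idx` column holding the integer class index.
    """

    def __init__(self, df: pd.DataFrame, num_classes: int = 182, max_images: int = 10, train: bool = True):
        self.df = df.reset_index(drop=True)
        self.num_classes = num_classes
        self.max_images = max_images
        self.train = train

    def __len__(self) -> int:
        return len(self.df)

    def __getitem__(self, idx: int):
        row = self.df.iloc[idx]
        specs = np.load(row["npy_path"])[: self.max_images]  # keep at most max_images windows
        # Random window while training; the first window otherwise (assumption).
        i = np.random.randint(len(specs)) if self.train else 0
        image = torch.from_numpy(specs[i]).float()  # (128, 201)
        # Augmentation would be applied here (omitted in this sketch).
        image = image.unsqueeze(0).repeat(3, 1, 1)  # (3, 128, 201)
        # Per-image standardization (assumption; dataset-level statistics also work).
        image = (image - image.mean()) / (image.std() + 1e-6)
        target = torch.zeros(self.num_classes)
        target[int(row["label_idx"])] = 1.0
        return image, target


# Hypothetical usage:
# loader = DataLoader(BirdSpecDataset(train_df), batch_size=64, shuffle=True)
```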

- Use the tf_efficientnet_b0_ns model from timm

- Take a batch of images and targets

- If mixup is enabled, calculate the mixup loss; otherwise, calculate the normal loss
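The training step with optional mixup can be sketched as follows. In these notes the model comes from timm, e.g. `timm.create_model("tf_efficientnet_b0_ns", pretrained=True, num_classes=182)` (newer timm versions may warn that this name is deprecated), but the sketch accepts any `nn.Module` so it runs without downloading weights. The Beta(0.4, 0.4) mixing distribution and the use of BCEWithLogitsLoss are assumptions; the function names are hypothetical.

```python
import numpy as np
import torch
import torch.nn as nn


def mixup(images: torch.Tensor, targets: torch.Tensor, alpha: float = 0.4):
    """Blend each sample with a randomly chosen partner from the same batch."""
    lam = float(np.random.beta(alpha, alpha))
    perm = torch.randperm(images.size(0))
    mixed = lam * images + (1 - lam) * images[perm]
    return mixed, targets, targets[perm], lam


def training_step(model: nn.Module, images: torch.Tensor, targets: torch.Tensor,
                  criterion: nn.Module, use_mixup: bool = True) -> torch.Tensor:
    if use_mixup:
        mixed, y_a, y_b, lam = mixup(images, targets)
        logits = model(mixed)
        # Mixup loss: the same convex combination applied to the losses of both targets.
        return lam * criterion(logits, y_a) + (1 - lam) * criterion(logits, y_b)
    return criterion(model(images), targets)  # normal loss
```

Because binary cross-entropy is linear in the target, this weighted sum of two losses equals the loss against the mixed target `lam * y_a + (1 - lam) * y_b`, so either formulation works with BCEWithLogitsLoss.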

- Convert Audio To Spectrogram: https://www.kaggle.com/code/nischaydnk/split-creating-melspecs-stage-1

- PyTorch Lightning Inference: https://www.kaggle.com/code/nischaydnk/birdclef-2023-pytorch-lightning-inference

- PyTorch Lightning Training: https://www.kaggle.com/code/nischaydnk/birdclef-2023-pytorch-lightning-training-w-cmap

- Audio Deep Learning: https://www.kaggle.com/competitions/birdclef-2024/discussion/491668