Repository of pre-trained Language Models and NLP models.

unstructured library | Get the JSON and HTML versions of any PDF (legal, financial, medical…), even PDF with tables!

- Blog post: unstructured library | Get the JSON and HTML versions of any PDF (legal, financial, medical…), even PDF with tables!

- Notebook: Unstructured_PDF_to_JSON_and_HTML.ipynb

Speech-to-Text | Get transcription WITH SPEAKERS from large audio file in any language (OpenAI Whisper + NeMo Speaker Diarization)

- Blog post: Speech-to-Text | Get transcription WITH SPEAKERS from large audio file in any language (OpenAI Whisper + NeMo Speaker Diarization)

- Notebook: speech_to_text_transcription_with_speakers_Whisper_Transcription_+_NeMo_Diarization.ipynb
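Combining Whisper's timestamped transcription with NeMo's speaker diarization comes down to attaching a speaker label to each transcribed segment. Below is a minimal sketch. It assumes both outputs have already been converted to plain start/end times in seconds (for example, Whisper segments and the speaker turns parsed from the RTTM file written by NeMo). The `Segment`/`Turn` types and the maximum-overlap rule are illustrative choices, not necessarily what the notebook does:

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """A transcribed piece of text with start/end times in seconds (hypothetical type)."""
    start: float
    end: float
    text: str


@dataclass
class Turn:
    """A diarization turn: who speaks between start and end (hypothetical type)."""
    start: float
    end: float
    speaker: str


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length of the intersection of two time intervals (0 if they are disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(segments: list[Segment], turns: list[Turn]) -> list[tuple[str, str]]:
    """Label each segment with the speaker whose turns overlap it the most."""
    labelled = []
    for seg in segments:
        # Accumulate overlap per speaker, since one speaker may have several turns.
        totals: dict[str, float] = {}
        for turn in turns:
            ov = overlap(seg.start, seg.end, turn.start, turn.end)
            if ov > 0:
                totals[turn.speaker] = totals.get(turn.speaker, 0.0) + ov
        speaker = max(totals, key=totals.get) if totals else "UNKNOWN"
        labelled.append((speaker, seg.text))
    return labelled


if __name__ == "__main__":
    segments = [Segment(0.0, 4.0, "Hello, how are you?"), Segment(4.2, 7.5, "Fine, thanks.")]
    turns = [Turn(0.0, 4.1, "speaker_0"), Turn(4.1, 8.0, "speaker_1")]
    for speaker, text in assign_speakers(segments, turns):
        print(f"[{speaker}] {text}")
```

Segments that no diarization turn covers are labelled `UNKNOWN` rather than guessed.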

- Blog post: Video-to-Audio | A notebook and Web APP to get mp3 audio file from any YouTube video

- Notebook: youtube_video_to_audio.ipynb

- Web APP: Free YouTube URL Video-to-Audio

Speech-to-Text | Quickly get a transcription of a large audio file in any language with "Faster-Whisper"

- Blog post: Speech-to-Text | Quickly get a transcription of a large audio file in any language with "Faster-Whisper"

- Notebook: Speech_to_Text_with_faster_whisper_on_large_audio_file_in_any_language.ipynb

Document AI | Accuracy of fine-tuned layout models (LiLT and LayoutXLM base) on the DocLayNet base dataset (notebooks)

- Line level

- Paragraph level

Document AI | Inference at paragraph level by using the association of 2 Document Understanding models (LiLT and LayoutXLM base fine-tuned on DocLayNet base dataset)

- Notebook: Document AI | Inference at paragraph level by using the association of 2 Document Understanding models (LiLT and LayoutXLM base fine-tuned on DocLayNet base dataset)

- Notebook: Document AI | Inference APP at paragraph level by using the association of 2 Document Understanding models (LiLT and LayoutXLM base fine-tuned on DocLayNet base dataset)

Document AI | APP to compare the Document Understanding LiLT and LayoutXLM (base) models at paragraph level

- Blog Post: Document AI | APP to compare the Document Understanding LiLT and LayoutXLM (base) models at paragraph level

- Notebook: Document AI | Inference APP at paragraph level with 2 Document Understanding models (LiLT base and LayoutXLM base fine-tuned on DocLayNet base dataset)

Document AI | Inference APP and fine-tuning notebook for Document Understanding at paragraph level with LayoutXLM base

- Blog Post: Document AI | Inference APP and fine-tuning notebook for Document Understanding at paragraph level with LayoutXLM base

- Notebooks:

- Document AI | Inference at paragraph level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet dataset)

- Document AI | Inference APP at paragraph level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet base dataset)

- Document AI | Fine-tune LayoutXLM base on DocLayNet base in any language at paragraph level (chunk of 512 tokens with overlap)
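LayoutXLM, like LiLT, accepts at most 512 tokens, so a long page has to be split into overlapping windows ("chunks with overlap"). Below is a minimal sketch of that windowing on a list of token ids. The overlap of 128 tokens (`stride`) is a hypothetical value, and the notebooks' exact settings may differ. In practice, Hugging Face fast tokenizers can produce such windows themselves with `truncation=True`, `max_length`, `stride` and `return_overflowing_tokens=True`:

```python
def sliding_windows(token_ids: list[int], max_len: int = 512, stride: int = 128) -> list[list[int]]:
    """Split token_ids into windows of at most max_len tokens.

    Consecutive windows share `stride` tokens, so tokens near a window
    boundary are also seen with context on both sides.
    """
    if not 0 <= stride < max_len:
        raise ValueError("stride must be in [0, max_len)")
    step = max_len - stride
    windows = []
    start = 0
    while True:
        windows.append(token_ids[start:start + max_len])
        if start + max_len >= len(token_ids):
            break  # this window already reaches the end of the sequence
        start += step
    return windows


if __name__ == "__main__":
    chunks = sliding_windows(list(range(1000)), max_len=512, stride=128)
    print([len(c) for c in chunks])  # [512, 512, 232]
```

The line-level notebooks apply the same idea with chunks of 384 tokens, which here would be `max_len=384`.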

Document AI | APP to compare the Document Understanding LiLT and LayoutXLM (base) models at line level

- Blog Post: Document AI | APP to compare the Document Understanding LiLT and LayoutXLM (base) models at line level

- Notebook: Document AI | Inference APP at line level with 2 Document Understanding models (LiLT base and LayoutXLM base fine-tuned on DocLayNet base dataset)

Document AI | Inference APP and fine-tuning notebook for Document Understanding at line level with LayoutXLM base

- Blog Post: Document AI | Inference APP and fine-tuning notebook for Document Understanding at line level with LayoutXLM base

- Notebooks:

- Document AI | Inference at line level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet dataset)

- Document AI | Inference APP at line level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet base dataset)

- Document AI | Fine-tune LayoutXLM base on DocLayNet base in any language at line level (chunk of 384 tokens with overlap)

- Blog Post: Document AI | Inference APP and fine-tuning notebook for Document Understanding at paragraph level

- Notebook: Document AI | Inference APP at paragraph level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)

- Notebook: Document AI | Inference at paragraph level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)

- Notebook: Document AI | Fine-tune LiLT on DocLayNet base in any language at paragraph level (chunk of 512 tokens with overlap)

- Blog Post: Document AI | Inference APP for Document Understanding at line level

- Notebook: Document AI | Inference APP at line level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)

- Blog Post: Document AI | Document Understanding model at line level with LiLT, Tesseract and DocLayNet dataset

- Notebook (update on 02/14/2023): Document AI | Inference at line level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)

- Notebook: Document AI | Fine-tune LiLT on DocLayNet base in any language at line level (chunk of 384 tokens with overlap)

- Blog post: Document AI | DocLayNet image viewer APP

- Notebook: DocLayNet image viewer APP

Document AI | Processing of DocLayNet dataset to be used by layout models of the Hugging Face hub (finetuning, inference)

- Blog post: Document AI | Processing of DocLayNet dataset to be used by layout models of the Hugging Face hub (finetuning, inference)

- Notebook: Processing of DocLayNet dataset to be used by layout models of the Hugging Face hub (finetuning, inference)
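One step of this processing is common to all layout models on the Hugging Face hub (LayoutLM, LayoutXLM, LiLT). They expect each bounding box as integer `(x0, y0, x1, y1)` coordinates on a 0-1000 scale, independent of the page's pixel size. Below is a minimal sketch of that normalization. It assumes pixel coordinates and a known page width and height (for example, 1025 x 1025 DocLayNet page images). The clamping to [0, 1000] is an extra safety choice of this sketch:

```python
def normalize_box(box: tuple[float, float, float, float], width: int, height: int) -> list[int]:
    """Scale a pixel box (x0, y0, x1, y1) to the 0-1000 grid used by layout models."""
    x0, y0, x1, y1 = box

    def scale(value: float, size: int) -> int:
        # Clamp to [0, 1000] in case OCR boxes slightly overflow the page.
        return min(1000, max(0, int(1000 * value / size)))

    return [scale(x0, width), scale(y0, height), scale(x1, width), scale(y1, height)]


if __name__ == "__main__":
    # A box on a 1025 x 1025 page image.
    print(normalize_box((102.5, 205.0, 512.5, 1025.0), 1025, 1025))
```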

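- Blog post: Speech-to-Text & IA | Transcreva qualquer áudio para o português com o Whisper (OpenAI)... sem nenhum custo! (English title: Speech-to-Text & AI | Transcribe any audio into Portuguese with Whisper (OpenAI)... at no cost!)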

- Notebook: Whisper in Portuguese

- Notebook: Whisper in French

- Notebook: Inference code for Whisper (example with Whisper Medium in Portuguese)

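- Blog post: IA & empresas | Diminua o tempo de inferência de modelos Transformer com BetterTransformer (English title: AI & companies | Reduce the inference time of Transformer models with BetterTransformer)

- Notebook: QA in Portuguese with BetterTransformer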

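- Blog post: NLP & Código para todos | Função de perda ponderada para classificação de texto (multiclasse) (English title: NLP & Code for everyone | Weighted loss function for (multiclass) text classification)

- Notebook: Text Classification on GLUE with a weighted loss function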
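With imbalanced classes, a weighted cross-entropy gives the rare classes more influence on the loss. Below is a minimal pure-Python sketch. It assumes inverse-frequency weights w_c = N / (K · n_c), which is one common choice and not necessarily the one used in the notebook. It also assumes a weighted mean normalized by the sum of the target weights, which is how PyTorch's `CrossEntropyLoss(weight=...)` reduces with `reduction='mean'`:

```python
import math
from collections import Counter


def inverse_frequency_weights(labels: list[int], num_classes: int) -> list[float]:
    """w_c = N / (K * n_c): rare classes get larger weights (absent classes get 0)."""
    counts = Counter(labels)
    n = len(labels)
    return [n / (num_classes * counts[c]) if counts[c] else 0.0 for c in range(num_classes)]


def log_softmax(logits: list[float]) -> list[float]:
    """Numerically stable log-softmax."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def weighted_cross_entropy(batch_logits: list[list[float]], targets: list[int], weights: list[float]) -> float:
    """Weighted mean of -log p(target), normalized by the sum of the target weights."""
    total, norm = 0.0, 0.0
    for logits, y in zip(batch_logits, targets):
        w = weights[y]
        total += -w * log_softmax(logits)[y]
        norm += w
    return total / norm


if __name__ == "__main__":
    train_labels = [0, 0, 0, 1]  # class 1 is three times rarer than class 0
    weights = inverse_frequency_weights(train_labels, num_classes=2)
    print(weights)
    print(weighted_cross_entropy([[2.0, 0.0], [0.0, 2.0]], [0, 1], weights))
```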

- Blog post: NLP nas empresas | Como eu treinei um modelo T5 em português na tarefa QA no Google Colab (English title: NLP in companies | How I trained a T5 model in Portuguese on the QA task in Google Colab)

- Notebook: Fine-tuning of the T5 base language model on a Question-Answering (QA) task with the Portuguese SQuAD 1.1 dataset

- QA App in the Hugging Face Spaces

NLP | Models and Web App for Named Entity Recognition (NER) in the Brazilian legal domain

- Blog post: NLP | Modelos e Web App para Reconhecimento de Entidade Nomeada (NER) no domínio jurídico brasileiro

- NER App in the Hugging Face Spaces

Fine-tuning of the specialized version of the BERTimbau language model on a token classification task (NER) with the LeNER-Br dataset

- notebook: HuggingFace_Notebook_token_classification_NER_LeNER_Br.ipynb (nbviewer of the notebook)

- BERT base NER model in the legal domain in Portuguese (LeNER-Br) in the Hugging Face model hub

- BERT large NER model in the legal domain in Portuguese (LeNER-Br) in the Hugging Face model hub

- notebook: Finetuning_language_model_BERtimbau_LeNER_Br.ipynb (nbviewer of the notebook)

- dataset: pierreguillou/lener_br_finetuning_language_model

- BERT base Language modeling in the legal domain in Portuguese (LeNER-Br) in the Hugging Face model hub

- BERT large Language modeling in the legal domain in Portuguese (LeNER-Br) in the Hugging Face model hub
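For NER, BERTimbau's WordPiece tokenizer splits many legal-domain words into several subword tokens, while LeNER-Br provides one label per word, so the labels must be aligned with the tokens. One common approach in Hugging Face's token-classification examples is to label only the first subtoken of each word. Special tokens and continuation subtokens then get -100, the default `ignore_index` of PyTorch's cross-entropy loss. Below is a minimal sketch of that approach. It assumes `word_ids` as returned by a fast tokenizer's `word_ids()` method (`None` for special tokens). The notebook itself may align labels differently:

```python
from typing import Optional

IGNORE_INDEX = -100  # default ignore_index of PyTorch's CrossEntropyLoss


def align_labels(word_ids: list[Optional[int]], word_labels: list[int], label_all_tokens: bool = False) -> list[int]:
    """Map one label per word onto the tokenizer's subword tokens.

    word_ids[i] is the index of the word that token i comes from, or None
    for special tokens such as [CLS] and [SEP].
    """
    aligned = []
    previous = None
    for wid in word_ids:
        if wid is None:
            aligned.append(IGNORE_INDEX)  # special token: no label
        elif wid != previous:
            aligned.append(word_labels[wid])  # first subtoken of a word
        else:
            # Continuation subtoken: either repeat the word's label or ignore it.
            aligned.append(word_labels[wid] if label_all_tokens else IGNORE_INDEX)
        previous = wid
    return aligned


if __name__ == "__main__":
    # [CLS] w0 w1 w1 w2 [SEP]: the second word was split into two subtokens.
    print(align_labels([None, 0, 1, 1, 2, None], [3, 0, 5]))
```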

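- Blog post: NLP nas empresas | Técnicas para acelerar modelos de Deep Learning para inferência em produção (English title: NLP in companies | Techniques to speed up Deep Learning models for inference in production)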

- notebooks:

- notebook OCR_DeepLearning_Tesseract_DocTR_colab.ipynb (nbviewer of the notebook)

- Blog post: NLP nas empresas | Reconhecimento de textos com Deep Learning em PDFs e imagens (English title: NLP in companies | Text recognition with Deep Learning in PDFs and images)

NLP in companies | How to create a BERT Question-Answering (QA) model with improved performance using AdapterFusion?

- notebook question_answering_adapter_fusion.ipynb (nbviewer of the notebook): fine-tuning an MLM (Masked Language Model) such as BERT (base or large) with the adapter-transformers library on the Question Answering (QA) task with AdapterFusion

- Blog post: NLP nas empresas | Como criar um modelo BERT de Question-Answering (QA) de desempenho aprimorado com AdapterFusion?

NLP in companies | How to fine-tune a natural language model like BERT for the Question-Answering (QA) task with an Adapter?

- notebooks question_answering_adapter.ipynb (nbviewer of the notebook) and question_answering_adapter_script.ipynb (nbviewer of the notebook): fine-tuning an MLM (Masked Language Model) such as BERT (base or large) with the adapter-transformers library on the Question Answering (QA) task

- Blog post: NLP nas empresas | Como ajustar um modelo de linguagem natural como BERT para a tarefa de Question-Answering (QA) com um Adapter?

NLP in companies | How to fine-tune a natural language model like BERT for the token classification task (NER) with an Adapter?

- notebook token_classification_adapter.ipynb (nbviewer of the notebook): fine-tuning an MLM (Masked Language Model) such as BERT (base or large) with the adapter-transformers library on the Token Classification (NER) task

- Blog post: NLP nas empresas | Como ajustar um modelo de linguagem natural como BERT para a tarefa de classificação de tokens (NER) com um Adapter?

NLP in companies | How to adapt a natural language model like BERT to a new linguistic domain with an Adapter?

- notebook language_modeling_adapter.ipynb (nbviewer of the notebook): fine-tuning an MLM (Masked Language Model) such as BERT (base or large) with the adapter-transformers library

- Blog post: NLP nas empresas | Como ajustar um modelo de linguagem natural como BERT a um novo domínio linguístico com um Adapter?
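An adapter is a small bottleneck module inserted into the Transformer layers. During fine-tuning, only the adapter (and task head) weights are trained, while the pre-trained BERT weights stay frozen. Below is a minimal pure-Python sketch of a generic bottleneck adapter (down-projection, ReLU, up-projection, residual connection) and of its parameter count. It assumes a hidden size of 768 (BERT base) and a reduction factor of 16. adapter-transformers offers several configurations (e.g. Houlsby, Pfeiffer) that differ in placement and details, so this is not its exact implementation:

```python
def adapter_param_count(hidden_size: int, reduction_factor: int) -> int:
    """Weights + biases of one bottleneck adapter (down- and up-projection)."""
    bottleneck = hidden_size // reduction_factor
    down = hidden_size * bottleneck + bottleneck
    up = bottleneck * hidden_size + hidden_size
    return down + up


def matvec(matrix: list[list[float]], vector: list[float], bias: list[float]) -> list[float]:
    """matrix @ vector + bias, with matrix given as a list of rows."""
    return [sum(m * v for m, v in zip(row, vector)) + b for row, b in zip(matrix, bias)]


def adapter_forward(
    h: list[float],
    w_down: list[list[float]], b_down: list[float],
    w_up: list[list[float]], b_up: list[float],
) -> list[float]:
    """h + (W_up · ReLU(W_down · h + b_down) + b_up): residual bottleneck."""
    z = [max(0.0, x) for x in matvec(w_down, h, b_down)]  # down-projection + ReLU
    return [hi + ui for hi, ui in zip(h, matvec(w_up, z, b_up))]  # up-projection + residual


if __name__ == "__main__":
    print(adapter_param_count(768, 16))  # 74544 trainable parameters per adapter
```

With two adapters per layer (Houlsby placement) in the 12 layers of BERT base, this comes to about 1.8 million trainable parameters, against roughly 110 million for the full model. This is why one BERT can cheaply host several task or domain adapters.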

NLP | Question Answering model in any language based on BERT large (case study in Portuguese)

- notebook question_answering_BERT_large_cased_squad_v11_pt.ipynb (nbviewer of the notebook): training code of a Portuguese BERT large cased QA (Question Answering) model, fine-tuned on SQuAD v1.1

- Blog post: NLP | Como treinar um modelo de Question Answering em qualquer linguagem baseado no BERT large, melhorando o desempenho do modelo utilizando o BERT base? (estudo de caso em português) (English title: NLP | How to train a Question Answering model in any language based on BERT large, improving the model's performance by using BERT base? (case study in Portuguese))

- Model in the Model Hub of Hugging Face: Portuguese BERT large cased QA (Question Answering), fine-tuned on SQuAD v1.1
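At inference time, an extractive QA model such as this one outputs a start logit and an end logit for each context token. The answer is the span `(start, end)` with the highest `start + end` score, subject to `start <= end` and a maximum answer length. Below is a minimal sketch of that selection. It assumes plain lists of logits and a hypothetical `max_answer_len` of 30 tokens. A real pipeline must also exclude question and padding positions, and it maps tokens back to characters with offset mappings:

```python
def best_span(start_logits: list[float], end_logits: list[float], max_answer_len: int = 30) -> tuple[int, int, float]:
    """Return (start, end, score) maximizing start_logits[s] + end_logits[e]
    with s <= e < s + max_answer_len."""
    best = (0, 0, float("-inf"))
    for s, s_score in enumerate(start_logits):
        # Starting e at s enforces that the answer never ends before it starts.
        for e in range(s, min(s + max_answer_len, len(end_logits))):
            score = s_score + end_logits[e]
            if score > best[2]:
                best = (s, e, score)
    return best


if __name__ == "__main__":
    start = [0.1, 3.0, 0.2, 0.0]
    end = [0.0, 0.5, 2.5, 4.0]
    print(best_span(start, end))                    # longest allowed answers
    print(best_span(start, end, max_answer_len=2))  # at most 2 tokens
```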

NLP | How to add a domain-specific vocabulary (new tokens) to a subword tokenizer already trained like BERT WordPiece

Summary: In some cases, it may be crucial to enrich the vocabulary of an already trained natural language model with vocabulary from a specialized domain (medicine, law, etc.) in order to perform new tasks (classification, NER, summarization, translation, etc.). While the Hugging Face library allows you to easily add new tokens to the vocabulary of an existing tokenizer like BERT WordPiece, those tokens must be whole words, not subwords. This article explains why and how to obtain these new tokens from a specialized corpus.

- notebook nlp_how_to_add_a_domain_specific_vocabulary_new_tokens_to_a_subword_tokenizer_already_trained_like_BERT_WordPiece.ipynb (nbviewer of the notebook) (notebook in colab)

- Blog post: NLP | How to add a domain-specific vocabulary (new tokens) to a subword tokenizer already trained like BERT WordPiece
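The idea can be illustrated with WordPiece's greedy longest-match-first tokenization. A frequent domain word that the existing vocabulary splits into many subwords is a good candidate to be added as a whole-word token. Below is a minimal sketch. It assumes a toy vocabulary and hypothetical thresholds (`min_pieces`, `min_freq`), and it is not the selection procedure used in the notebook. With a real Hugging Face tokenizer, the selected words would then be passed to `tokenizer.add_tokens(...)`, followed by `model.resize_token_embeddings(len(tokenizer))`:

```python
from collections import Counter


def wordpiece(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedy longest-match-first WordPiece, as in BERT ('##' marks continuation pieces)."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end] if start == 0 else "##" + word[start:end]
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk]  # BERT maps the whole word to [UNK] if any part is unmatched
        pieces.append(piece)
        start = end
    return pieces


def candidate_tokens(corpus: list[str], vocab: set[str], min_pieces: int = 3, min_freq: int = 2) -> list[str]:
    """Frequent corpus words that the current vocabulary fragments into many subwords."""
    freq = Counter(word for text in corpus for word in text.lower().split())
    return sorted(
        w for w, n in freq.items()
        if n >= min_freq and w not in vocab and len(wordpiece(w, vocab)) >= min_pieces
    )


if __name__ == "__main__":
    vocab = {"the", "court", "hab", "##ea", "##s", "a"}
    corpus = ["The court granted habeas", "Habeas corpus in the court"]
    print(wordpiece("habeas", vocab))         # split into 3 subwords
    print(candidate_tokens(corpus, vocab))    # words worth adding as whole tokens
```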

NLP | Question Answering model in any language based on BERT base (case study in Portuguese)

- notebook colab_question_answering_BERT_base_cased_squad_v11_pt.ipynb (nbviewer of the notebook): training code of a Portuguese BERT base cased QA (Question Answering) model, fine-tuned on SQuAD v1.1

- Blog post: NLP | Modelo de Question Answering em qualquer idioma baseado no BERT base (estudo de caso em português)

- Model in the Model Hub of Hugging Face: Portuguese BERT base cased QA (Question Answering), fine-tuned on SQuAD v1.1

I trained one Portuguese Bidirectional Language Model (PBLM) with the MultiFiT configuration on one NVIDIA V100 GPU on GCP.

WARNING: a bidirectional LM using the MultiFiT configuration is a good model for text classification, but with only 46 million parameters it is far from being an LM that can compete with GPT-2 or BERT on NLP tasks such as text generation. This is my next step ;-)

Note: the training times shown in the tables on this page are the sum of the time needed to create the fastai DataBunch (forward and backward) and the training time of the bidirectional model over 10 epochs. The download and preparation times of the Wikipedia corpus are not included.

MultiFiT configuration (4 QRNN layers with 1550 hidden units per layer / SentencePiece tokenizer (15 000 tokens))

- notebook lm3-portuguese.ipynb (nbviewer of the notebook): code used to train a Portuguese Bidirectional LM on a 100-million corpus extracted from Wikipedia, using the MultiFiT configuration.

- link to download the pre-trained parameters and vocabulary in models

| PBLM | accuracy | perplexity | training time |
|---|---|---|---|
| forward | 39.68% | 21.76 | 8h |
| backward | 43.67% | 22.16 | 8h |
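Perplexity is the exponential of the mean cross-entropy loss (in nats) over the predicted tokens. The table's perplexities therefore correspond to validation losses of about ln(21.76) ≈ 3.08 (forward) and ln(22.16) ≈ 3.10 (backward). Put another way, the model is on average about as uncertain as if it were choosing uniformly among 22 tokens. Below is a minimal sketch of both directions of that relation, assuming the probabilities given to the correct next tokens are available:

```python
import math


def perplexity(target_probs: list[float]) -> float:
    """exp of the mean negative log-likelihood of the correct next tokens."""
    nll = [-math.log(p) for p in target_probs]
    return math.exp(sum(nll) / len(nll))


def loss_from_perplexity(ppl: float) -> float:
    """Inverse relation: mean cross-entropy (nats) = ln(perplexity)."""
    return math.log(ppl)


if __name__ == "__main__":
    print(round(loss_from_perplexity(21.76), 2))  # forward PBLM
    print(round(loss_from_perplexity(22.16), 2))  # backward PBLM
```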

- Applications:

- notebook lm3-portuguese-classifier-TCU-jurisprudencia.ipynb (nbviewer of the notebook): code used to fine-tune a Portuguese Bidirectional LM and a Text Classifier on the "(reduzido) TCU jurisprudência" dataset.

- notebook lm3-portuguese-classifier-olist.ipynb (nbviewer of the notebook): code used to fine-tune a Portuguese Bidirectional LM and a Sentiment Classifier on the "Brazilian E-Commerce Public Dataset by Olist" dataset.

[ WARNING ] The code of the notebook lm3-portuguese-classifier-olist.ipynb must be updated to use the SentencePiece model and vocabulary already trained for the Portuguese Language Model in the notebook lm3-portuguese.ipynb, as was done in the notebook lm3-portuguese-classifier-TCU-jurisprudencia.ipynb (see the explanations at the top of this notebook).

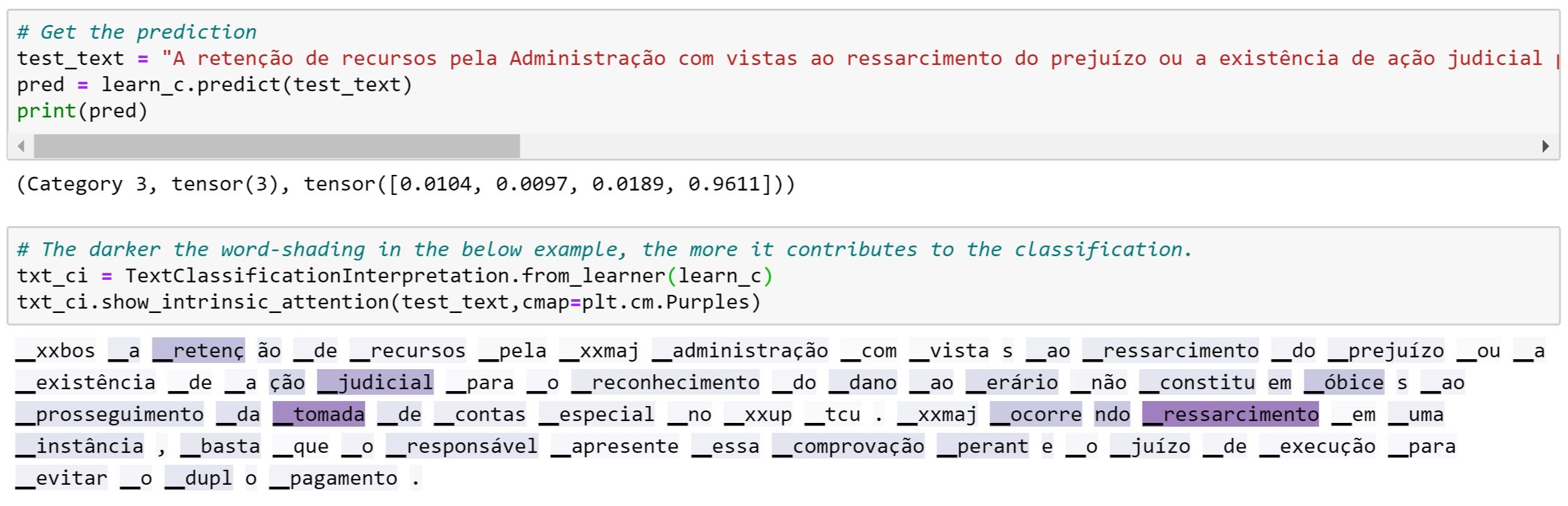

Here's an example of using the classifier to predict the category of a TCU legal text:
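In the ULMFiT approach, the forward and backward language models are each fine-tuned into a classifier, and the predictions of the two classifiers are then combined. Below is a minimal sketch of that last step. It assumes each classifier outputs class probabilities and that they are combined by a simple average; the notebooks may combine them differently. The label names are purely illustrative:

```python
def ensemble(probs_fwd: list[float], probs_bwd: list[float]) -> list[float]:
    """Average the class probabilities of the forward and backward classifiers."""
    if len(probs_fwd) != len(probs_bwd):
        raise ValueError("both classifiers must score the same classes")
    return [(f + b) / 2 for f, b in zip(probs_fwd, probs_bwd)]


def predict(probs_fwd: list[float], probs_bwd: list[float], labels: list[str]) -> str:
    """Return the label with the highest averaged probability."""
    avg = ensemble(probs_fwd, probs_bwd)
    return labels[max(range(len(avg)), key=avg.__getitem__)]


if __name__ == "__main__":
    # The forward model leans "neg", the backward model leans "pos" more strongly.
    print(predict([0.6, 0.4], [0.2, 0.8], ["neg", "pos"]))
```

Averaging lets a confident direction outweigh a hesitant one. The two directions read the text in opposite orders, so their errors are partly independent.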

I trained three French Bidirectional Language Models (FBLM) on one NVIDIA V100 GPU on GCP; the best one is the model trained with the MultiFiT configuration.

| French Bidirectional Language Models (FBLM) | direction | accuracy | perplexity | training time |
|---|---|---|---|---|
| MultiFiT with 4 QRNN + SentencePiece (15 000 tokens) | forward | 43.77% | 16.09 | 8h40 |
| | backward | 49.29% | 16.58 | 8h10 |
| ULMFiT with 3 QRNN + SentencePiece (15 000 tokens) | forward | 40.99% | 19.96 | 5h30 |
| | backward | 47.19% | 19.47 | 5h30 |
| ULMFiT with 3 AWD-LSTM + spaCy (60 000 tokens) | forward | 36.44% | 25.62 | 11h |
| | backward | 42.65% | 27.09 | 11h |

1. MultiFiT configuration (4 QRNN layers with 1550 hidden units per layer / SentencePiece tokenizer (15 000 tokens))

- notebook lm3-french.ipynb (nbviewer of the notebook): code used to train a French Bidirectional LM on a 100-million corpus extracted from Wikipedia, using the MultiFiT configuration.

- link to download the pre-trained parameters and vocabulary in models

| FBLM | accuracy | perplexity | training time |
|---|---|---|---|
| forward | 43.77% | 16.09 | 8h40 |
| backward | 49.29% | 16.58 | 8h10 |

- Application: notebook lm3-french-classifier-amazon.ipynb (nbviewer of the notebook): code used to fine-tune a French Bidirectional LM and a Sentiment Classifier on the "French Amazon Customer Reviews" dataset.

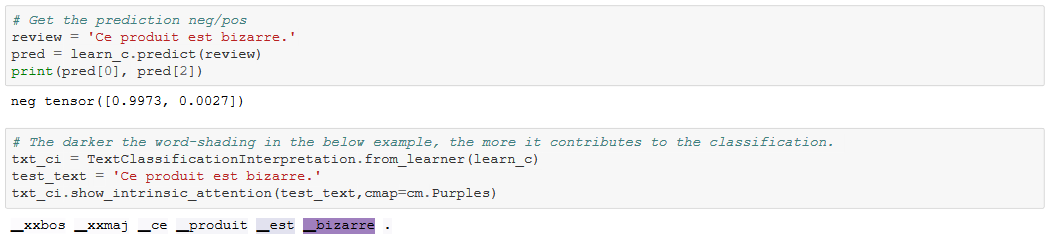

Here's an example of using the classifier to predict the feeling of comments on an amazon product:

2. ULMFiT configuration (3 QRNN layers / SentencePiece tokenizer (15 000 tokens))

- notebook lm2-french.ipynb (nbviewer of the notebook): code used to train a French Bidirectional LM on a 100-million corpus extracted from Wikipedia

- link to download the pre-trained parameters and vocabulary in models

| FBLM | accuracy | perplexity | training time |
|---|---|---|---|
| forward | 40.99% | 19.96 | 5h30 |
| backward | 47.19% | 19.47 | 5h30 |

- Application: notebook lm2-french-classifier-amazon.ipynb (nbviewer of the notebook): code used to fine-tune a French Bidirectional LM and a Sentiment Classifier on the "French Amazon Customer Reviews" dataset.

3. ULMFiT configuration (3 AWD-LSTM layers / spaCy tokenizer (60 000 tokens))

- notebook lm-french.ipynb (nbviewer of the notebook): code used to train a French Bidirectional LM on a 100-million corpus extracted from Wikipedia

- link to download the pre-trained parameters and vocabulary in models

| FBLM | accuracy | perplexity | training time |
|---|---|---|---|
| forward | 36.44% | 25.62 | 11h |
| backward | 42.65% | 27.09 | 11h |

- Application: notebook lm-french-classifier-amazon.ipynb (nbviewer of the notebook): code used to fine-tune a French Bidirectional LM and a Sentiment Classifier on the "French Amazon Customer Reviews" dataset.