This is a serverless application that uses AnimateDiff to run a Text-to-Video task on RunPod.

See also SDXL_Serverless_Runpod for the Text-to-Image task.

Serverless means you are charged only for the time the application is actually running, not for idle time. This suits an application like this one, which is used infrequently but needs to respond quickly.

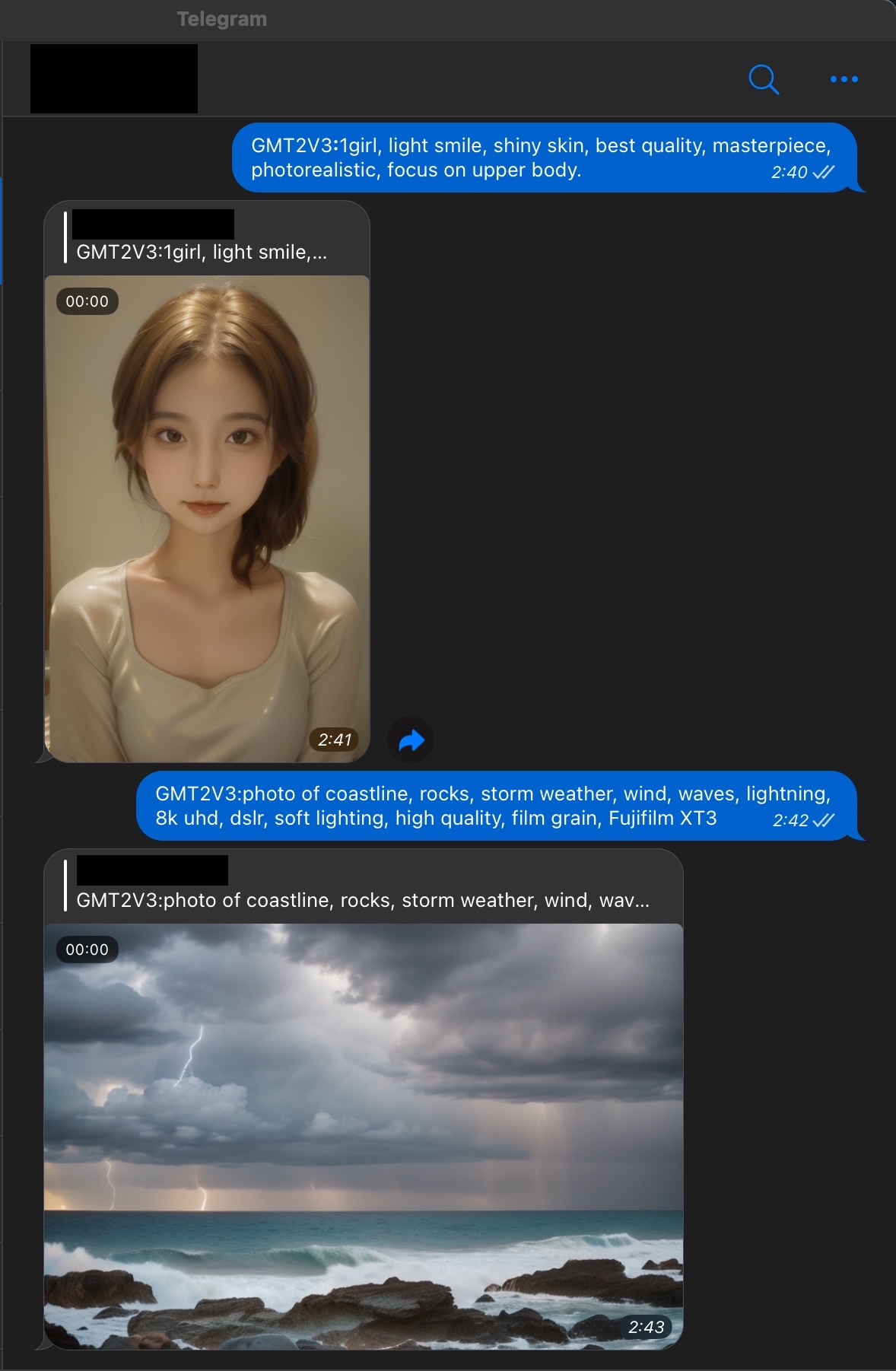

In principle, this application can be called by any other application. Two examples are provided:

- A simple Python script

- A Telegram bot

See Usage below for more details.

Input Prompt: (random seed: 445608568)

1girl, focus on face, offshoulder, light smile, shiny skin, best quality, masterpiece, photorealistic

Result: (Original | PanLeft, 28 steps, 768x512, around 60 seconds on RTX 3090, $0.015 😱 on RunPod)

example_result_person_445608568.mp4

Input Prompt: (random seed: 195577361)

photo of coastline, rocks, storm weather, wind, waves, lightning, 8k uhd, dslr, soft lighting, high quality, film grain, Fujifilm XT3

Result: (Original | ZoomOut, 28 steps, 768x512, around 60 seconds on RTX 3090, $0.015 😱 on RunPod)

example_result_scene_195577361.mp4
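As a rough sanity check, the run time and cost quoted for the examples above imply an effective per-second rate. The sketch below only does that arithmetic. The 60 seconds and $0.015 are the approximate figures reported above, not official RunPod pricing, and the function name is made up for illustration.

```python
def effective_rate_per_second(total_cost_usd: float, duration_s: float) -> float:
    """Return the implied cost per second of active time for one request."""
    if duration_s <= 0:
        raise ValueError("duration_s must be positive")
    return total_cost_usd / duration_s


# Approximate figures reported for the example results above.
rate = effective_rate_per_second(0.015, 60)
print(f"~${rate:.5f} per second, ~${rate * 3600:.2f} per hour of active time")
```

Because billing is per use, this rate applies only while a request is being processed.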

The time is measured from the moment the input prompt is sent to the moment the result video is received, and includes all of the following steps:

- Receiving the request from the client

- Serverless container startup

- Model loading

- Inference

- Sending the result video back to the client
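The end-to-end time described above can be measured on the client side by wrapping the whole request in a timer. This is a minimal sketch, assuming a hypothetical `send_request` callable that blocks until the result has been received. It is not taken from this repository's scripts.

```python
import time
from typing import Any, Callable, Tuple


def timed_call(send_request: Callable[[], Any]) -> Tuple[Any, float]:
    """Run send_request and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = send_request()  # blocks until the result is received
    elapsed = time.perf_counter() - start
    return result, elapsed


if __name__ == "__main__":
    # Stand-in for a real request; replace the lambda with the actual client call.
    result, elapsed = timed_call(lambda: time.sleep(0.1) or "done")
    print(f"received {result!r} after {elapsed:.2f} s")
```

Timing on the client side captures container startup and model loading as well as inference, which is why a cold start takes noticeably longer than the inference step alone.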

Prerequisites:

- Python >= 3.9

- Docker

- A local GPU is needed for testing but not for deployment. (Recommended: RTX 3090)

If you don't have a GPU, you can modify and test the code on Google Colab and then build and deploy the application on RunPod.

Example Notebook: link

```bash
# Install dependencies
pip install -r requirements.txt

# Download models
python scripts/download.py

# Optionally edit the config to customize inference, e.g., change the base model, LoRA model, Motion LoRA model, etc.
# Rename inference_v2(example).yaml to inference_v2.yaml

# Run inference test
python inference_util.py

# Run server.py as a local test
python server.py
```
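For readers unfamiliar with RunPod workers, the sketch below shows the general shape of a serverless handler built with the RunPod Python SDK. It is an illustration only and is not the contents of this repository's server.py. The input keys (`prompt`, `seed`, `steps`) and the placeholder return value are assumptions.

```python
def handler(job: dict) -> dict:
    """Handle one job. RunPod passes the client's payload under job["input"]."""
    job_input = job.get("input", {})
    prompt = job_input.get("prompt")  # assumed key name
    if not prompt:
        return {"error": "missing 'prompt' in input"}
    seed = job_input.get("seed")        # assumed key name
    steps = job_input.get("steps", 28)  # assumed key name and default
    # Placeholder: a real worker would run AnimateDiff inference here and
    # return the generated video (for example, encoded or as a URL).
    return {"prompt": prompt, "seed": seed, "steps": steps}


def start_worker() -> None:
    """Register the handler with the RunPod serverless runtime."""
    import runpod  # RunPod Python SDK, installed separately

    runpod.serverless.start({"handler": handler})
```

In a worker entry point, `start_worker()` would be called once at startup. Keeping `handler` a plain function makes it easy to exercise locally with a hand-built job dict before building the Docker image.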

If you encounter an error such as "gdown.exceptions.FileURLRetrievalError: Cannot retrieve the public link of the file." while downloading, reinstalling the gdown package with "pip install --upgrade --no-cache-dir gdown" and rerunning download.py may help.

- First, make sure you have installed Docker and have accounts on both DockerHub and RunPod.

- Then, decide on a name for your Docker image, e.g., "your_username/anidiff:v1", and set it in "./scripts/build.sh".

- Run the following command to build and push your Docker image to DockerHub.

```bash
bash scripts/build.sh
```

Sorry, detailed deployment instructions are not provided here yet, as the author has been quite busy recently. Many detailed guides on deploying a Docker image to RunPod can be found on Google.

Feel free to contact me if you encounter any problems after searching on Google.

```bash
# Make sure to set the API key and endpoint ID before running the script.
python test_client.py
```
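To show what such a client call involves, here is a minimal sketch that posts a prompt to a RunPod serverless endpoint through the synchronous `/runsync` route, using only the standard library. It is not the contents of test_client.py. The environment variable names and the payload keys (`prompt`, `seed`, `steps`) are assumptions and must match whatever the deployed handler expects.

```python
import json
import os
import urllib.request


def build_request(endpoint_id: str, api_key: str, payload: dict) -> urllib.request.Request:
    """Build a POST request for the endpoint's synchronous /runsync route."""
    url = f"https://api.runpod.ai/v2/{endpoint_id}/runsync"
    body = json.dumps({"input": payload}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def run_sync(endpoint_id: str, api_key: str, payload: dict, timeout: float = 300.0) -> dict:
    """Send the request and return the decoded JSON response."""
    request = build_request(endpoint_id, api_key, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def main() -> None:
    # Hypothetical variable names; set them to your own key and endpoint ID.
    api_key = os.environ.get("RUNPOD_API_KEY")
    endpoint_id = os.environ.get("RUNPOD_ENDPOINT_ID")
    if not api_key or not endpoint_id:
        print("Set RUNPOD_API_KEY and RUNPOD_ENDPOINT_ID first.")
        return
    payload = {"prompt": "photo of coastline, rocks, storm weather", "steps": 28}
    print(run_sync(endpoint_id, api_key, payload))


if __name__ == "__main__":
    main()
```

Reading the credentials from the environment keeps the API key out of the source file. The generous timeout allows for a cold start, when the container and models must be loaded before inference begins.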

- Support for separate base models for different subjects. (Person and Scene)

- Support for LoRA models. (Edit yaml file and place your model in "./models/DreamBooth_LoRA")

- Support for Motion LoRA models. (Also editable in yaml file, see here for details and downloads.)

- More detailed instructions

- One-click deploy (If anyone is interested...)

Thanks to AnimateDiff and RunPod.