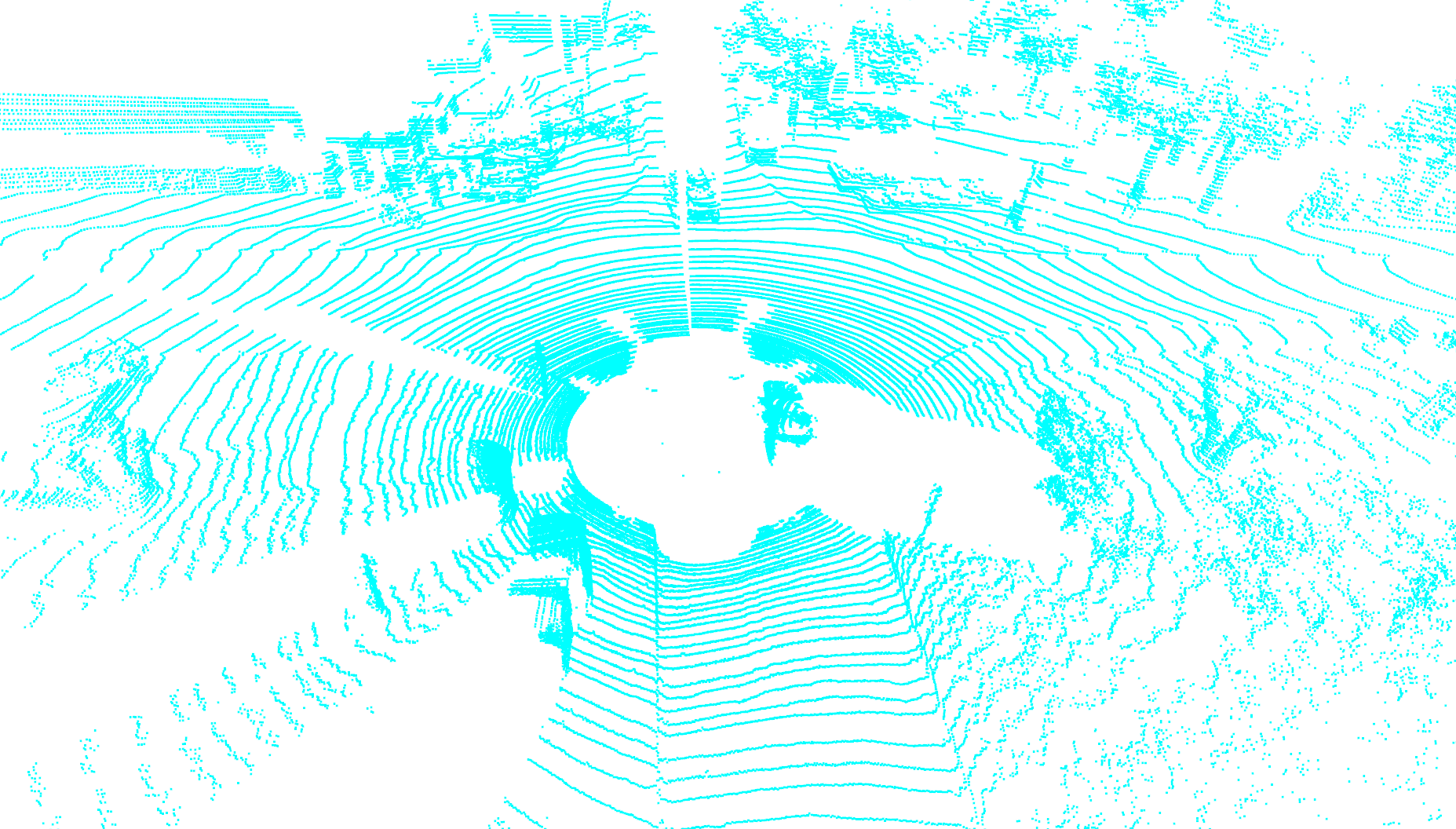

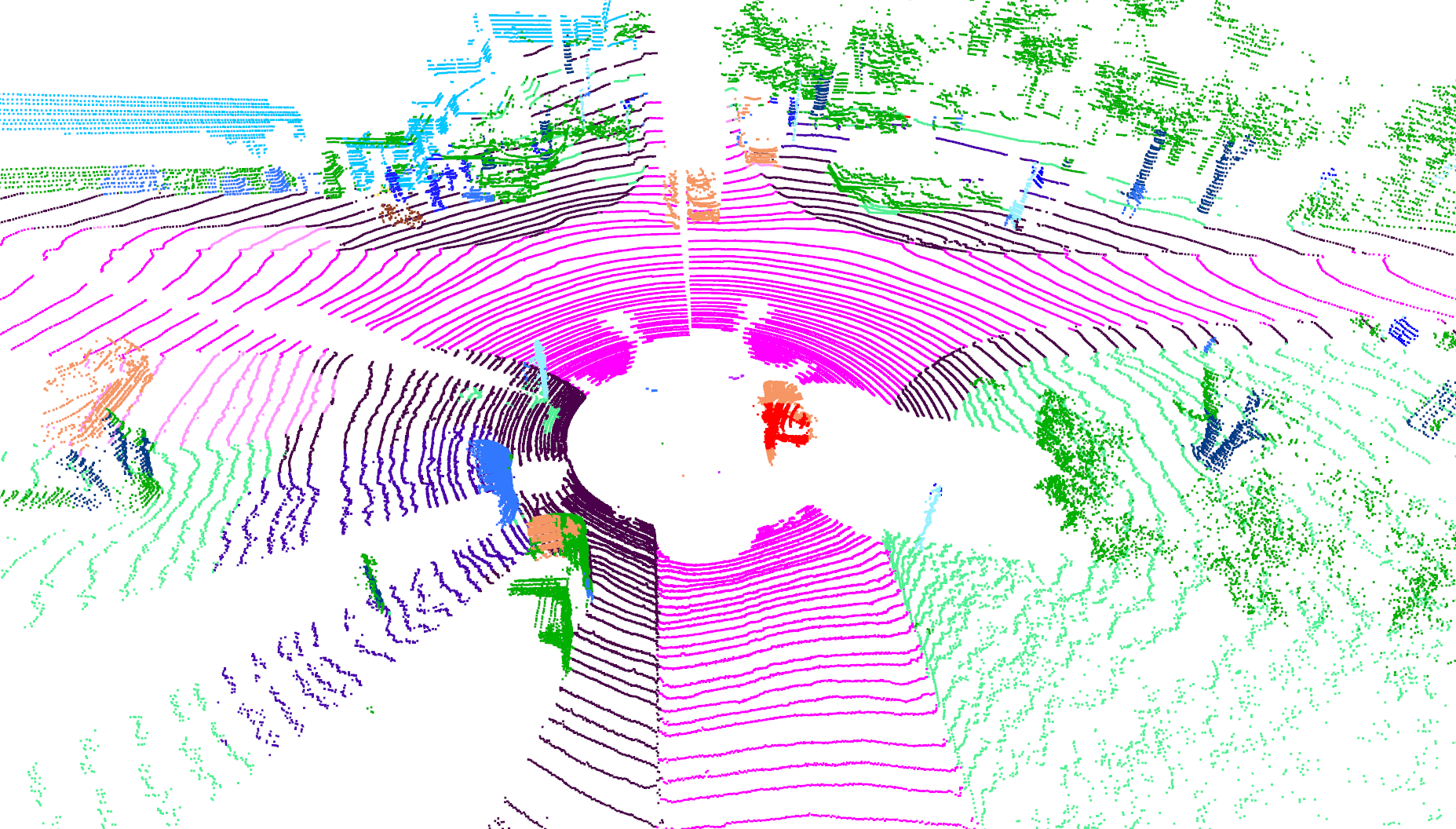

LiDAR scan visualization of the SemanticKITTI dataset (left) and the prediction result of PolarNet (right).

Official PyTorch implementation of PolarNet, a neural network for online LiDAR scan segmentation (CVPR 2020).

PolarNet: An Improved Grid Representation for Online LiDAR Point Clouds Semantic Segmentation. Yang Zhang\*, Zixiang Zhou\*, Philip David, Xiangyu Yue, Zerong Xi, Boqing Gong, Hassan Foroosh. Conference on Computer Vision and Pattern Recognition, 2020. (\*Equal contribution)

[2021-03] Our paper on the panoptic segmentation task has been accepted at CVPR 2021. The code is now available at Panoptic-PolarNet.

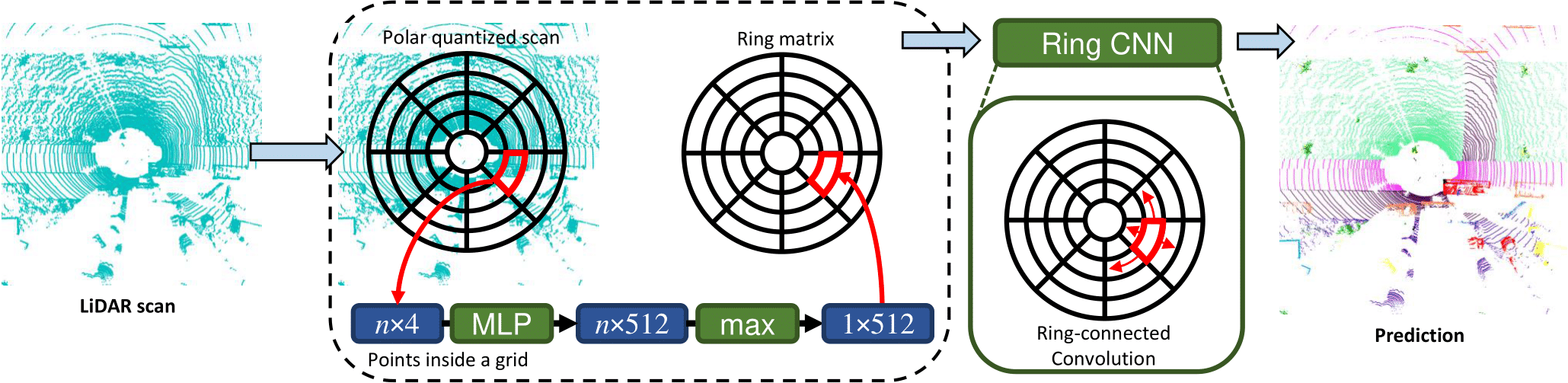

PolarNet is a lightweight neural network that aims to provide near-real-time online semantic segmentation for a single LiDAR scan. Unlike existing methods that require KNN to build a graph and/or 3D/graph convolution, we achieve fast inference by avoiding both. As shown below, we quantize points into grid cells using their polar coordinates. We then learn a fixed-length representation for each cell and feed these representations to a 2D neural network to produce point segmentation results.
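
The quantization step described above can be illustrated with a minimal NumPy sketch. It is not the repository's implementation: the function name `polar_grid_indices`, the radial range of 0 to 50 m, and the height range of -3 to 1.5 m are assumptions chosen for illustration, and only the grid size 320 x 240 x 32 is taken from the training command mentioned in this README.

```python
import numpy as np


def polar_grid_indices(xyz, grid_size=(320, 240, 32),
                       rho_range=(0.0, 50.0), z_range=(-3.0, 1.5)):
    """Map Cartesian points of shape (N, 3) to integer polar grid indices (N, 3).

    The ranges are illustrative assumptions, not values confirmed by the source.
    """
    xyz = np.asarray(xyz, dtype=np.float64)
    rho = np.hypot(xyz[:, 0], xyz[:, 1])      # distance from the sensor in the x-y plane
    phi = np.arctan2(xyz[:, 1], xyz[:, 0])    # azimuth angle in [-pi, pi]
    polar = np.stack([rho, phi, xyz[:, 2]], axis=1)

    lower = np.array([rho_range[0], -np.pi, z_range[0]])
    upper = np.array([rho_range[1], np.pi, z_range[1]])
    size = np.asarray(grid_size)

    # Clip out-of-range points to the boundary so every point lands in some cell.
    clipped = np.clip(polar, lower, upper)
    cell = (upper - lower) / size
    idx = np.floor((clipped - lower) / cell).astype(np.int64)
    # Points exactly on the upper boundary are assigned to the last cell.
    return np.minimum(idx, size - 1)


points = np.array([[10.0, 0.0, -1.0],
                   [0.0, 5.0, 0.5],
                   [-100.0, 0.0, 9.0]])
grid_idx = polar_grid_indices(points)
```

Each row of `grid_idx` holds the (radius, angle, height) cell of one point. In this sketch, points that fall in the same cell would then be pooled into a single fixed-length feature before the 2D network.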

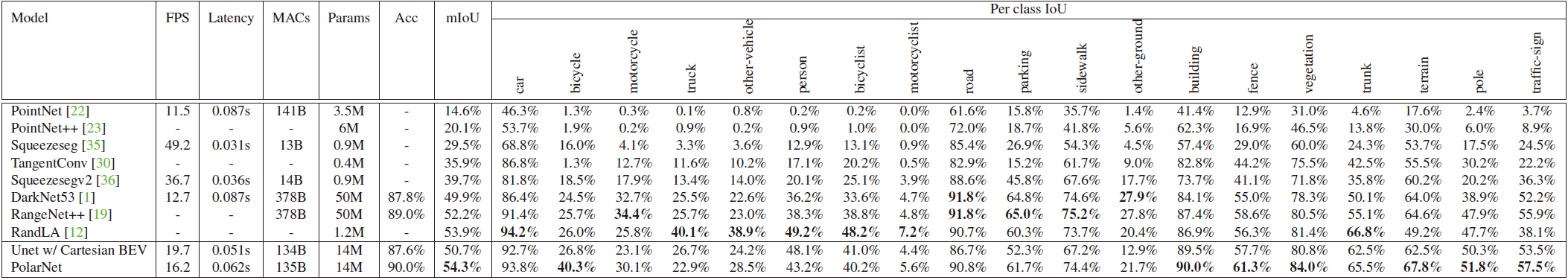

We achieved leading mIoU performance on the following LiDAR scan datasets: SemanticKITTI, A2D2 and Paris-Lille-3D.

| Model | SemanticKITTI | A2D2 | Paris-Lille-3D |
|---|---|---|---|
| Squeezesegv2 | 39.7% | 16.4% | 36.9% |
| DarkNet53 | 49.9% | 17.2% | 40.0% |
| RangeNet++ | 52.2% | - | - |
| RandLA | 53.9% | - | - |
| 3D-MiniNet | 55.8% | - | - |
| Squeezesegv3 | 55.9% | - | - |
| PolarNet | 57.2% | 23.9% | 53.3% |
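
For readers unfamiliar with the metric in the table, mIoU is the per-class intersection-over-union averaged over classes. The following is a minimal sketch of that definition. The function name `mean_iou` and the toy labels are hypothetical, and benchmark evaluation scripts may apply extra rules, such as ignoring an unlabeled class, that are not modeled here.

```python
import numpy as np


def mean_iou(pred, target, num_classes):
    """Mean intersection-over-union over classes that occur in pred or target."""
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()
    # confusion[i, j] counts points with ground-truth class i predicted as class j.
    confusion = np.bincount(target * num_classes + pred,
                            minlength=num_classes ** 2)
    confusion = confusion.reshape(num_classes, num_classes)
    true_pos = np.diag(confusion)
    union = confusion.sum(axis=0) + confusion.sum(axis=1) - true_pos
    valid = union > 0                      # skip classes absent from both arrays
    return float((true_pos[valid] / union[valid]).mean())


target = np.array([0, 0, 1, 1, 2, 2])
pred = np.array([0, 1, 1, 1, 2, 0])
miou = mean_iou(pred, target, num_classes=3)   # per-class IoU: 1/3, 2/3, 1/2
```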

This code is tested on Ubuntu 16.04 with Python 3.5, CUDA 9.2 and PyTorch 1.3.1.

1, Install the following dependencies, either with `pip install -r requirements.txt` or manually.

- numpy

- pytorch

- tqdm

- yaml

- Cython

- numba

- torch-scatter

- dropblock

- (Optional) nuscenes-devkit

2, Download the Velodyne point clouds and the label data of the SemanticKITTI dataset here.

3, Extract everything into the same folder. The folder structure inside the zip files of the label data matches the folder structure of the LiDAR point cloud data.

4, The data file structure should look like this:

    ./
    ├── train_SemanticKITTI.py
    ├── ...
    └── data/
        └── sequences/
            ├── 00/
            │   ├── velodyne/    # Unzip from KITTI Odometry Benchmark Velodyne point clouds.
            │   │   ├── 000000.bin
            │   │   ├── 000001.bin
            │   │   └── ...
            │   └── labels/      # Unzip from SemanticKITTI label data.
            │       ├── 000000.label
            │       ├── 000001.label
            │       └── ...
            ├── ...
            └── 21/
                └── ...
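
Before training, it can help to confirm that the extracted files match the layout above. The sketch below is not part of the repository, and the helper names `count_frames` and `load_scan` are hypothetical. It counts scans and labels in one sequence folder and reads one frame, relying on the standard SemanticKITTI file format: each `.bin` file stores float32 values as (x, y, z, intensity), and each `.label` file stores one uint32 per point whose lower 16 bits are the semantic class.

```python
from pathlib import Path

import numpy as np


def count_frames(seq_dir):
    """Return (number of .bin scans, number of .label files) in one sequence folder."""
    seq_dir = Path(seq_dir)
    scans = sorted((seq_dir / "velodyne").glob("*.bin"))
    labels = sorted((seq_dir / "labels").glob("*.label"))
    return len(scans), len(labels)


def load_scan(bin_path, label_path=None):
    """Load one scan as an (N, 4) float32 array, plus semantic labels if given."""
    points = np.fromfile(str(bin_path), dtype=np.float32).reshape(-1, 4)
    if label_path is None:
        return points, None
    raw = np.fromfile(str(label_path), dtype=np.uint32)
    semantic = raw & 0xFFFF               # upper 16 bits hold the instance id
    if semantic.shape[0] != points.shape[0]:
        raise ValueError("scan and label file have different point counts")
    return points, semantic
```

For example, `count_frames("data/sequences/00")` should return two equal numbers. Sequences that belong to the test split ship without labels, so a label count of zero is expected there.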

Run `python train_SemanticKITTI.py` to train a SemanticKITTI segmentation PolarNet from scratch after dataset preparation. The code will automatically train, validate, and early-stop the training process.

Note that we trained our model on a single TITAN Xp with 12 GB of GPU memory. Training on a GPU with less memory will likely cause an out-of-memory (OOM) error, which is reported as an exception. In that case, you might want to train the model with a smaller quantization grid/feature map via `python train_SemanticKITTI.py --grid_size 320 240 32`.
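
To get a rough feel for how the grid size affects the workload, the number of grid cells can be compared directly. This is a back-of-the-envelope sketch only: the larger grid used for comparison is a hypothetical example rather than a value confirmed by this README, and actual GPU memory use also depends on the network and batch size.

```python
def num_cells(grid_size):
    """Total number of cells in a grid, given its size along each axis."""
    total = 1
    for n in grid_size:
        total *= n
    return total


reduced = num_cells((320, 240, 32))     # grid size from the command above
reference = num_cells((480, 360, 32))   # hypothetical larger grid, for comparison only
ratio = reference / reduced             # how many times more cells the larger grid has
```

Under these assumptions the reduced grid has 2,457,600 cells, 2.25 times fewer than the hypothetical larger one.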

We also provide a pretrained SemanticKITTI PolarNet weight.

`python test_pretrain_SemanticKITTI.py`

Results will be stored in the `./out` folder. Test performance can be evaluated by uploading the label results to the SemanticKITTI competition website here.

Remember to shift the label numbers back to the original dataset format before submitting! Instructions can be found in the semantic-kitti-api repo.

`python remap_semantic_labels.py -p </your result path> -s test --inverse`

You should be able to reproduce the SemanticKITTI results reported in our paper.
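
Conceptually, the inverse remapping replaces each training class id with the corresponding label id of the original dataset. The sketch below illustrates the idea with a NumPy lookup table. It is not the semantic-kitti-api script: the function name `remap_labels` is hypothetical, and the four-entry mapping is a small illustrative stand-in for the full table defined in that repository's configuration.

```python
import numpy as np

# Illustrative stand-in mapping: training id -> original dataset label id.
inverse_map = {0: 0, 1: 10, 2: 11, 3: 15}


def remap_labels(pred, mapping):
    """Translate an array of training ids into original label ids via a lookup table."""
    lut = np.zeros(max(mapping) + 1, dtype=np.uint32)
    for train_id, original_id in mapping.items():
        lut[train_id] = original_id
    return lut[np.asarray(pred)]


pred = np.array([1, 0, 3, 2, 1])
restored = remap_labels(pred, inverse_map)   # array([10, 0, 15, 11, 10], dtype=uint32)
```

For an actual submission, use the provided `remap_semantic_labels.py` script rather than a hand-written table.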

Download the Paris-Lille-3D and nuScenes datasets and put them in the data folder like this:

    data/
    ├── NuScenes/
    │   ├── trainval/
    │   │   ├── lidarseg/
    │   │   ├── maps/
    │   │   ├── ...
    │   │   └── v1.0-trainval/
    │   └── test/
    │       └── ...
    └── paris_lille/
        ├── coarse_classes.xml
        ├── Lille1.ply
        ├── ...
        └── Paris.ply

Training and evaluation code works similarly to SemanticKITTI:

`python train_nuscenes.py --visibility`

`python train_PL.py`

Please cite our paper if this code benefits your research:

    @InProceedings{Zhang_2020_CVPR,
        author = {Zhang, Yang and Zhou, Zixiang and David, Philip and Yue, Xiangyu and Xi, Zerong and Gong, Boqing and Foroosh, Hassan},
        title = {PolarNet: An Improved Grid Representation for Online LiDAR Point Clouds Semantic Segmentation},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
        month = {June},
        year = {2020}
    }