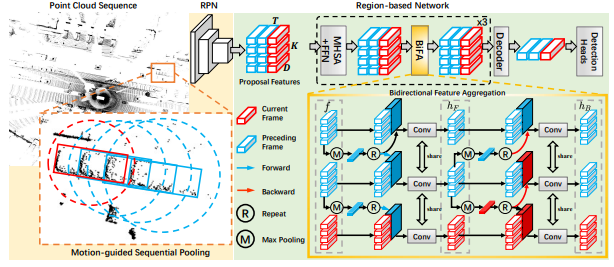

MSF: Motion-guided Sequential Fusion for Efficient 3D Object Detection from Point Cloud Sequences (CVPR 2023) [paper]

Authors: Chenhang He, Ruihuang Li, Yabin Zhang, Shuai Li, Lei Zhang.

This project is built on OpenPCDet.

The performance of 4-frame MSF on the Waymo validation split is as follows:

| | % Training | Car AP/APH | Ped AP/APH | Cyc AP/APH | Log file |
|---|---|---|---|---|---|
| Level 1 | 100% | 81.50/81.02 | 85.10/82.19 | 78.46/77.66 | Download |
| Level 2 | 100% | 73.95/73.50 | 78.03/75.21 | 76.21/75.43 | |

Training MSF is similar to training MPPNet. First, train the RPN model:

    bash scripts/dist_train.sh ${NUM_GPUS} --cfg_file cfgs/waymo_models/centerpoint_4frames.yaml

The checkpoints will be saved in ../output/waymo_models/centerpoint_4frames/default/ckpt. Then save the RPN model's predictions on the training and val splits:

    # training
    bash scripts/dist_test.sh ${NUM_GPUS} --cfg_file cfgs/waymo_models/centerpoint_4frames.yaml \
    --ckpt ../output/waymo_models/centerpoint_4frames/default/ckpt/checkpoint_epoch_36.pth \
    --set DATA_CONFIG.DATA_SPLIT.test train

    # val
    bash scripts/dist_test.sh ${NUM_GPUS} --cfg_file cfgs/waymo_models/centerpoint_4frames.yaml \
    --ckpt ../output/waymo_models/centerpoint_4frames/default/ckpt/checkpoint_epoch_36.pth \
    --set DATA_CONFIG.DATA_SPLIT.test val

The predictions on the train and val splits will be saved in

../output/waymo_models/centerpoint_4frames/default/eval/epoch_36/train/default/result.pkl and

../output/waymo_models/centerpoint_4frames/default/eval/epoch_36/val/default/result.pkl.
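Before training MSF, it may help to confirm that both prediction files exist. The sketch below is a hypothetical pair of bash helpers, not part of the MSF or OpenPCDet code: `latest_ckpt` picks the highest-epoch `checkpoint_epoch_<N>.pth` in a ckpt directory, and `rpn_results_ready` checks for the train and val `result.pkl` files that belong to a given checkpoint. It assumes the output layout shown above (`ckpt/checkpoint_epoch_36.pth` mapping to `eval/epoch_36/<split>/default/result.pkl`) holds for any epoch.

```bash
# Hypothetical helpers (not part of MSF/OpenPCDet). Assumes the layout
#   <root>/ckpt/checkpoint_epoch_<N>.pth -> <root>/eval/epoch_<N>/<split>/default/result.pkl
# generalizes from the epoch-36 example in this README.

# Print the checkpoint with the highest numeric epoch in a ckpt directory.
latest_ckpt() {
  local ckpt_dir="$1" best="" best_epoch=-1 f epoch
  for f in "$ckpt_dir"/checkpoint_epoch_*.pth; do
    [ -e "$f" ] || continue                  # glob matched nothing
    epoch="${f##*checkpoint_epoch_}"         # drop everything before the epoch
    epoch="${epoch%.pth}"                    # drop the extension
    case "$epoch" in ''|*[!0-9]*) continue ;; esac
    if [ "$epoch" -gt "$best_epoch" ]; then  # numeric, so 36 beats 4
      best_epoch="$epoch"
      best="$f"
    fi
  done
  [ -n "$best" ] || return 1
  printf '%s\n' "$best"
}

# Map a checkpoint path to the result.pkl path of one split (train or val).
result_pkl_for() {
  local ckpt="$1" split="$2" root epoch
  root="$(dirname "$(dirname "$ckpt")")"     # .../default
  epoch="$(basename "$ckpt" .pth)"
  epoch="${epoch#checkpoint_epoch_}"
  printf '%s/eval/epoch_%s/%s/default/result.pkl\n' "$root" "$epoch" "$split"
}

# Succeed only if both the train and val predictions exist for the checkpoint.
rpn_results_ready() {
  local ckpt="$1" split pkl ok=0
  for split in train val; do
    pkl="$(result_pkl_for "$ckpt" "$split")"
    if [ ! -f "$pkl" ]; then
      echo "missing: $pkl" >&2
      ok=1
    fi
  done
  return "$ok"
}
```

For example, `ckpt="$(latest_ckpt ../output/waymo_models/centerpoint_4frames/default/ckpt)" && rpn_results_ready "$ckpt"` succeeds only when both prediction dumps for the most recent RPN checkpoint are present; any missing file is reported on stderr.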

After that, train MSF with:

    bash scripts/dist_train.sh ${NUM_GPUS} --cfg_file cfgs/waymo_models/msf_4frames.yaml --batch_size 2

To evaluate the trained MSF model:

    # Single GPU
    python test.py --cfg_file cfgs/waymo_models/msf_4frames.yaml --batch_size 1 \
    --ckpt ../output/waymo_models/msf_4frames/default/ckpt/checkpoint_epoch_6.pth

    # Multiple GPUs
    bash scripts/dist_test.sh ${NUM_GPUS} --cfg_file cfgs/waymo_models/msf_4frames.yaml --batch_size 1 \
    --ckpt ../output/waymo_models/msf_4frames/default/ckpt/checkpoint_epoch_6.pth

Citation:

    @InProceedings{He_2023_CVPR,
        author    = {He, Chenhang and Li, Ruihuang and Zhang, Yabin and Li, Shuai and Zhang, Lei},
        title     = {MSF: Motion-Guided Sequential Fusion for Efficient 3D Object Detection From Point Cloud Sequences},
        booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
        month     = {June},
        year      = {2023},
        pages     = {5196-5205}
    }