One-click deployment of a private ChatGPT proxy, powered by Next.js, with SSE support!


Introduction

This project is built on Next.js and implements the proxy with Next.js rewrites, so the core logic is only two lines of code. Combined with Zeabur or Vercel, it lets you easily host your own private proxy service.
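As a rough illustration of how rewrites can act as a proxy, the sketch below shows a single Next.js rewrite rule. The `/proxy/:path*` source path is an assumption for illustration only; the repository's own config file defines the real routes. A TypeScript config file (`next.config.ts`) is accepted by Next.js 15 and later; on older versions the same object goes in `next.config.js`.

```typescript
// next.config.ts: minimal sketch of a rewrite-based proxy (paths are assumptions).
// Requests to /proxy/<anything> are forwarded by Next.js to https://api.openai.com/<anything>.
const nextConfig = {
  async rewrites() {
    return [
      {
        source: "/proxy/:path*", // hypothetical route prefix on your deployment
        destination: "https://api.openai.com/:path*", // upstream OpenAI API
      },
    ];
  },
};

export default nextConfig;
```

With a rule like this, a request such as `POST /proxy/v1/chat/completions` on your deployment is forwarded by Next.js to `POST https://api.openai.com/v1/chat/completions`, so no custom server code is needed for ordinary requests.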

PS: The SSE part of the code comes from openai-api-proxy.
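The SSE route needs to stream the upstream response back to the client instead of waiting for it to finish. The following is not the code borrowed from openai-api-proxy; it is a minimal, framework-agnostic sketch built on the standard Fetch API (Node.js 18+ or an edge runtime), and the `/proxy-sse` prefix and the `toUpstreamUrl`/`handler` names are hypothetical.

```typescript
// Minimal SSE-friendly proxy handler (a sketch, not the project's actual code).
// Assumptions: the route is mounted under the hypothetical prefix "/proxy-sse",
// and the runtime provides the standard fetch/Request/Response globals.
const UPSTREAM = "https://api.openai.com";
const PREFIX = "/proxy-sse";

// Map an incoming URL to the corresponding OpenAI API URL by stripping the prefix.
export function toUpstreamUrl(requestUrl: string): string {
  const url = new URL(requestUrl);
  let path = url.pathname;
  if (path === PREFIX || path.startsWith(PREFIX + "/")) {
    path = path.slice(PREFIX.length);
  }
  return UPSTREAM + (path || "/") + url.search;
}

export async function handler(req: Request): Promise<Response> {
  const headers = new Headers(req.headers);
  headers.delete("host"); // let fetch set the correct Host for the upstream

  const hasBody = req.method !== "GET" && req.method !== "HEAD";
  const init = {
    method: req.method,
    headers,
    body: hasBody ? req.body : null,
    duplex: "half" as const, // Node's fetch requires this when the body is a stream
  };
  const upstream = await fetch(toUpstreamUrl(req.url), init);

  // fetch has already decoded the body, so drop headers that describe the encoded form.
  const outHeaders = new Headers(upstream.headers);
  outHeaders.delete("content-encoding");
  outHeaders.delete("content-length");

  // Passing upstream.body (a ReadableStream) straight through means each SSE
  // event is sent to the client as soon as it arrives, with no buffering here.
  return new Response(upstream.body, {
    status: upstream.status,
    statusText: upstream.statusText,
    headers: outHeaders,
  });
}
```

In a Next.js App Router project, `handler` could, for example, be exported as the `GET` and `POST` functions of a catch-all route such as `app/proxy-sse/[...path]/route.ts`; that file layout is an assumption, not necessarily how this repository is organized.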

Demo

Before you start, check the How to use section to decide whether this project suits your needs.


Deploy on Zeabur

Zeabur is the recommended platform. The steps are as follows:

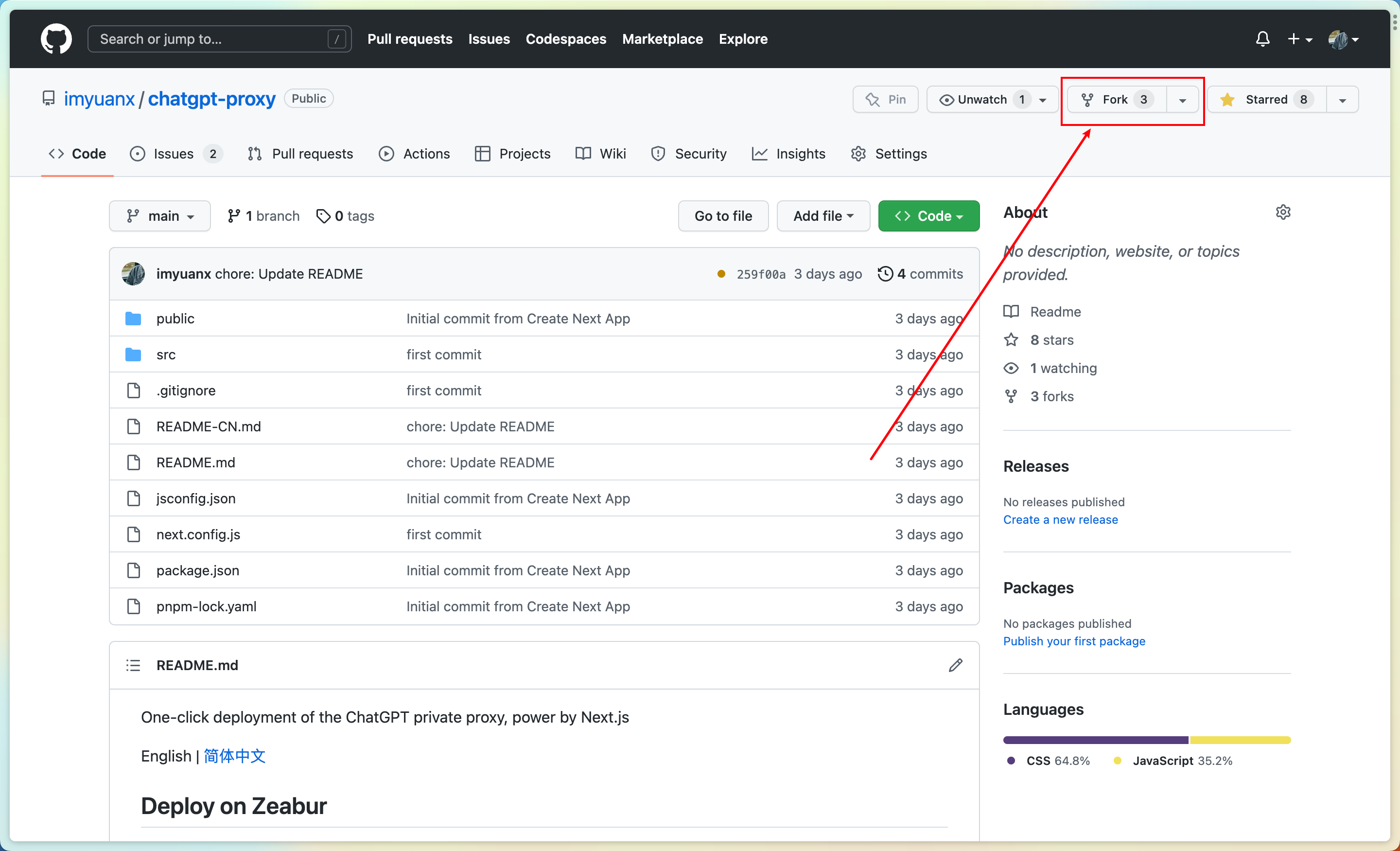

- Fork this repository to your own account

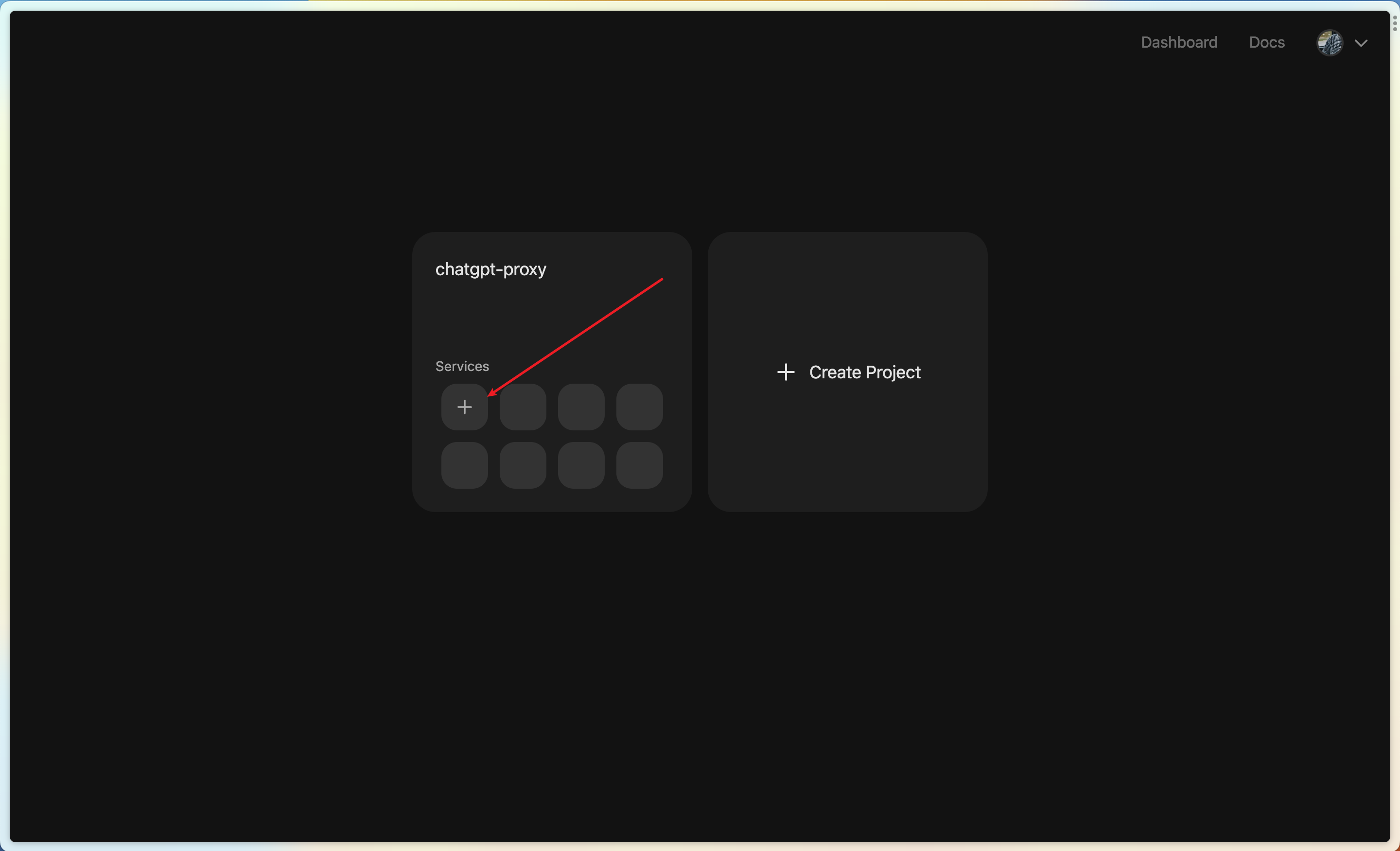

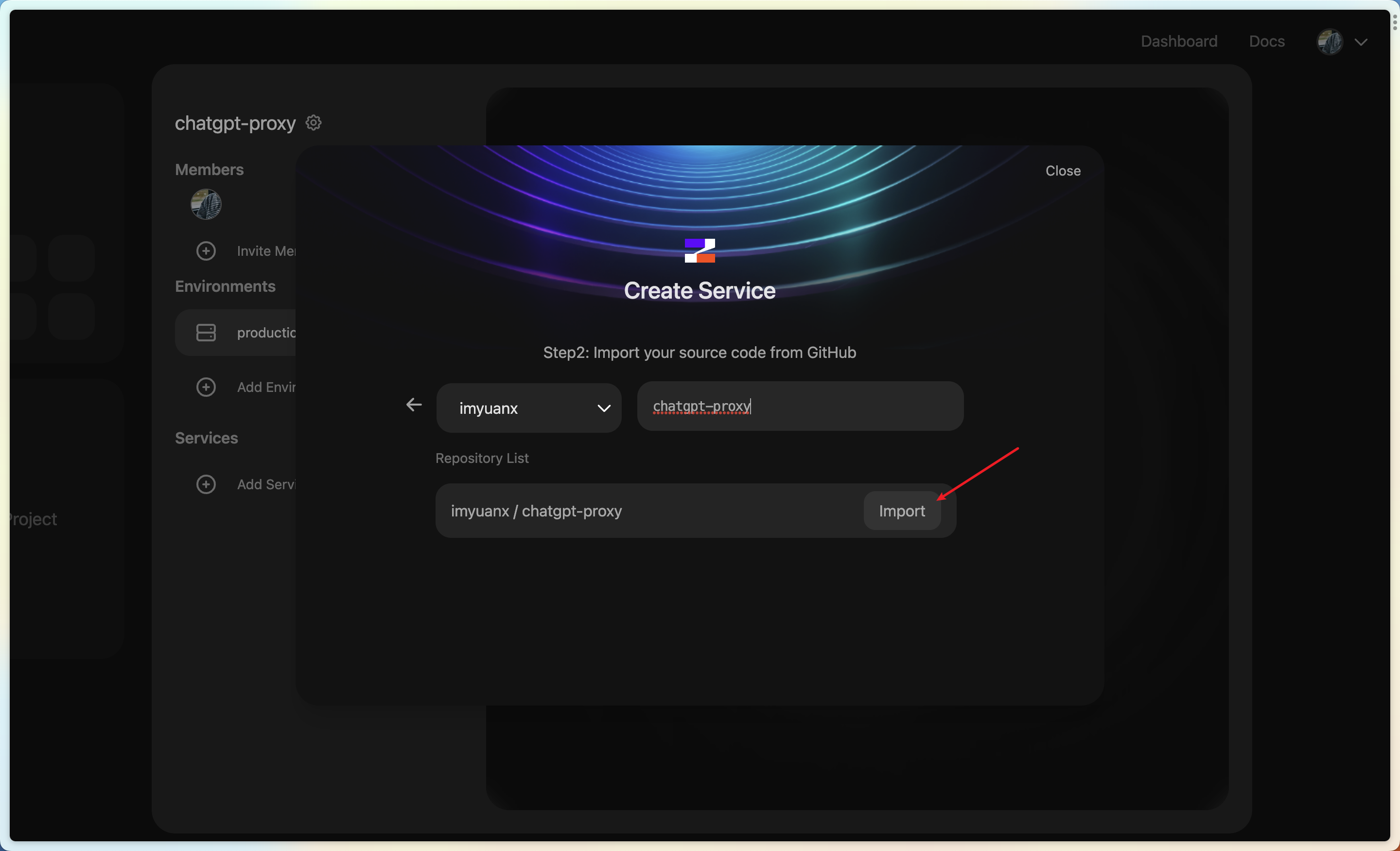

- Create a new service in the Zeabur console

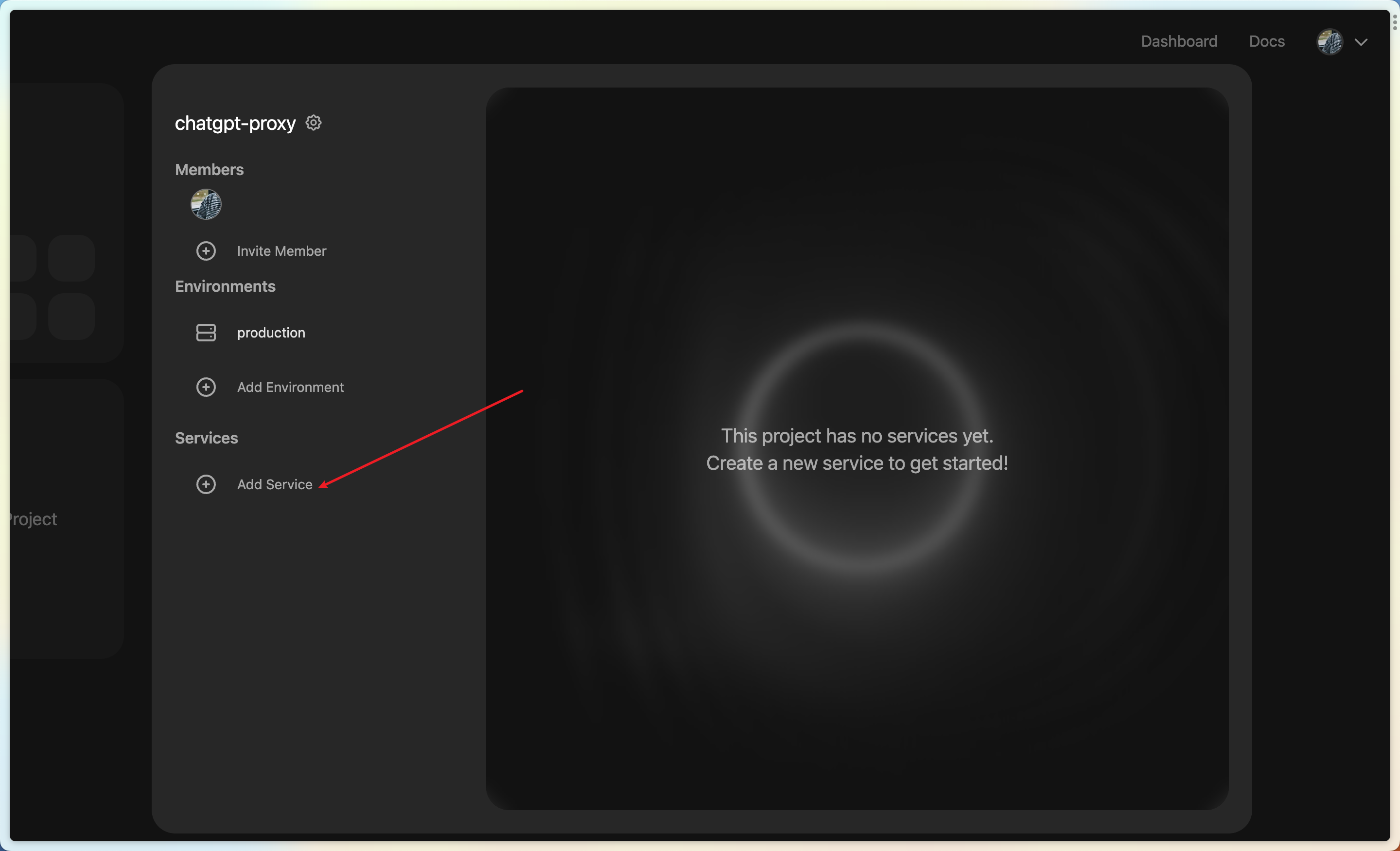

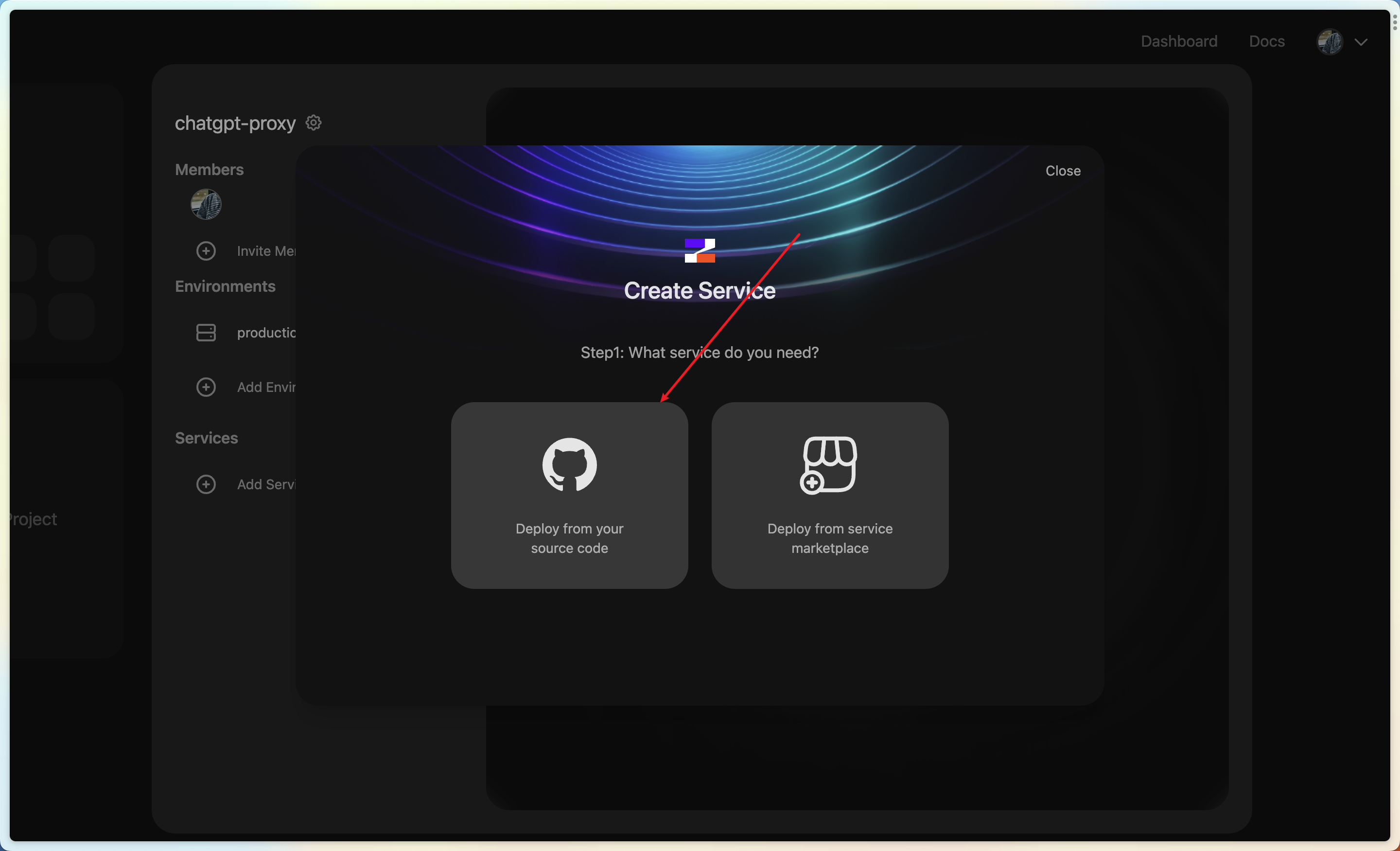

- Add a service and choose to deploy from source code

- Select your forked repo

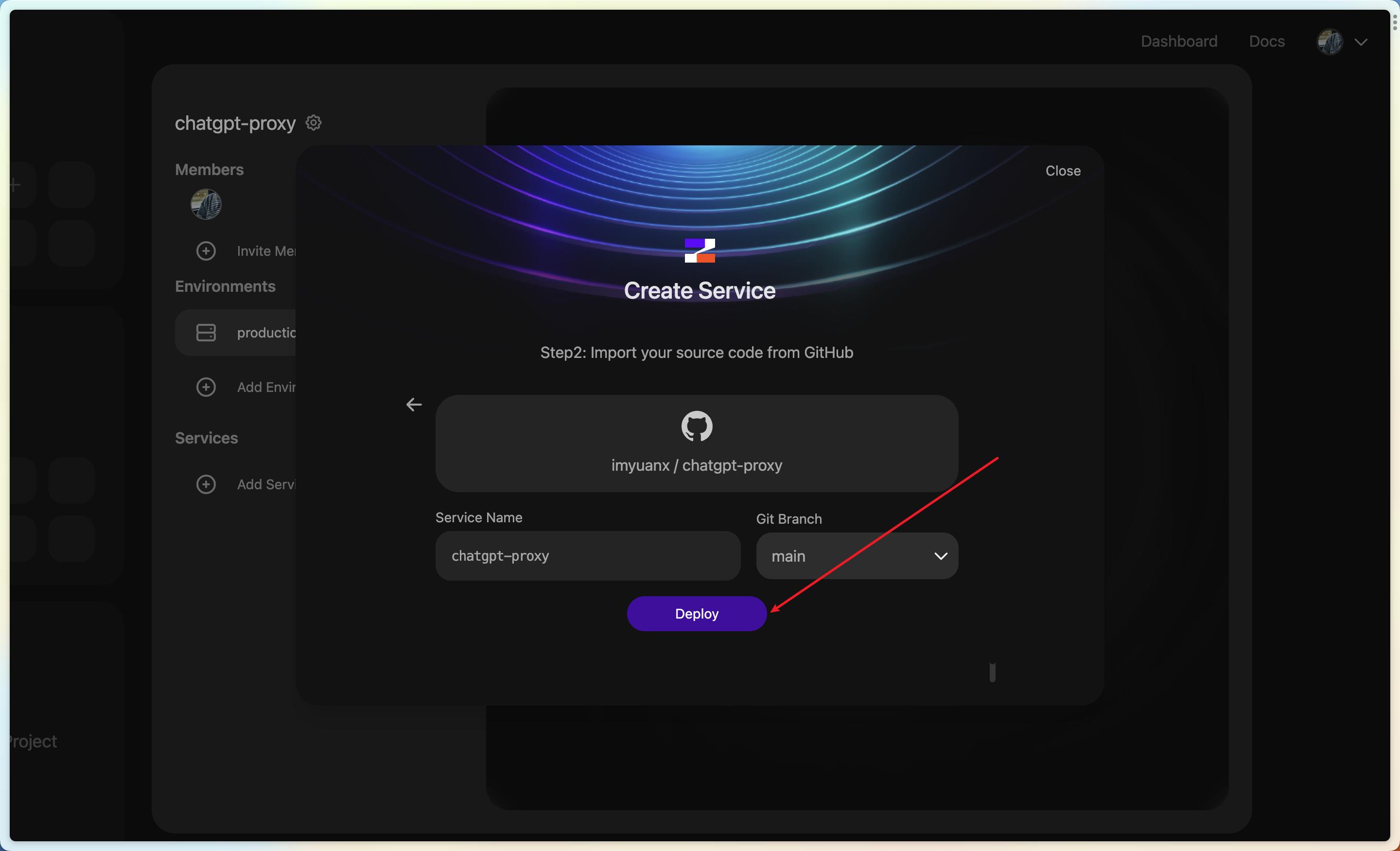

- Select the main branch and deploy

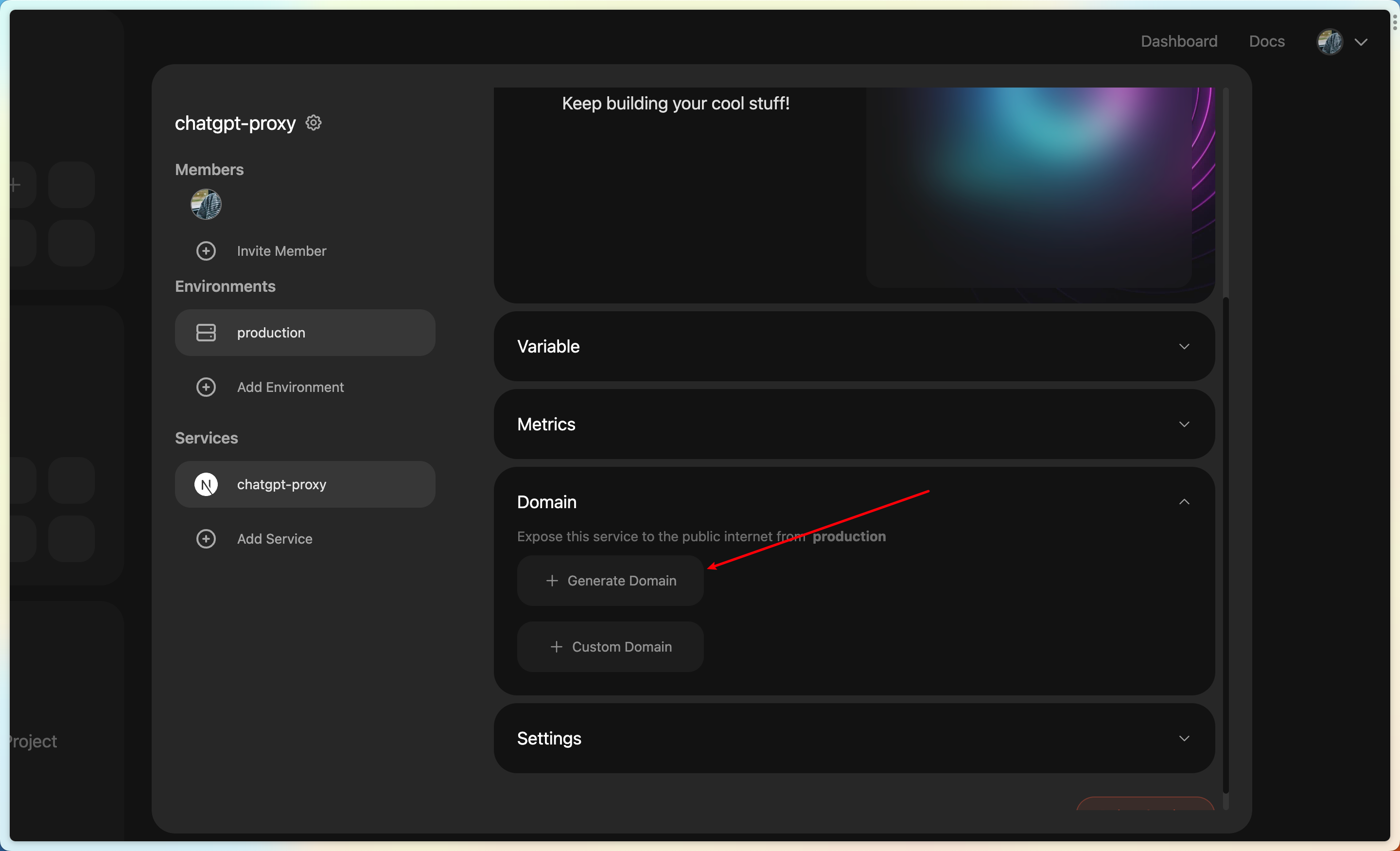

- After the deployment succeeds, generate a domain name

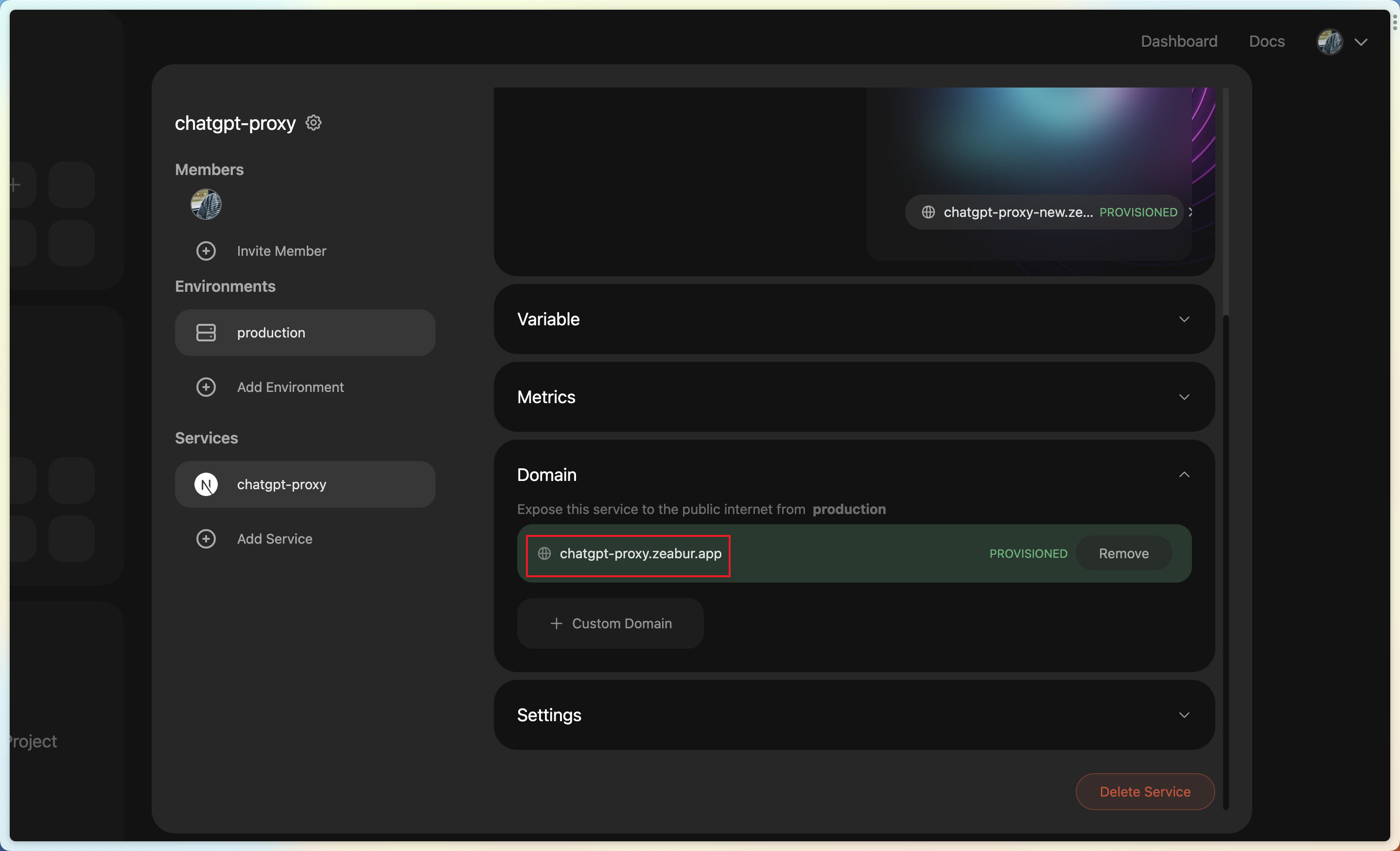

- Your service is now ready

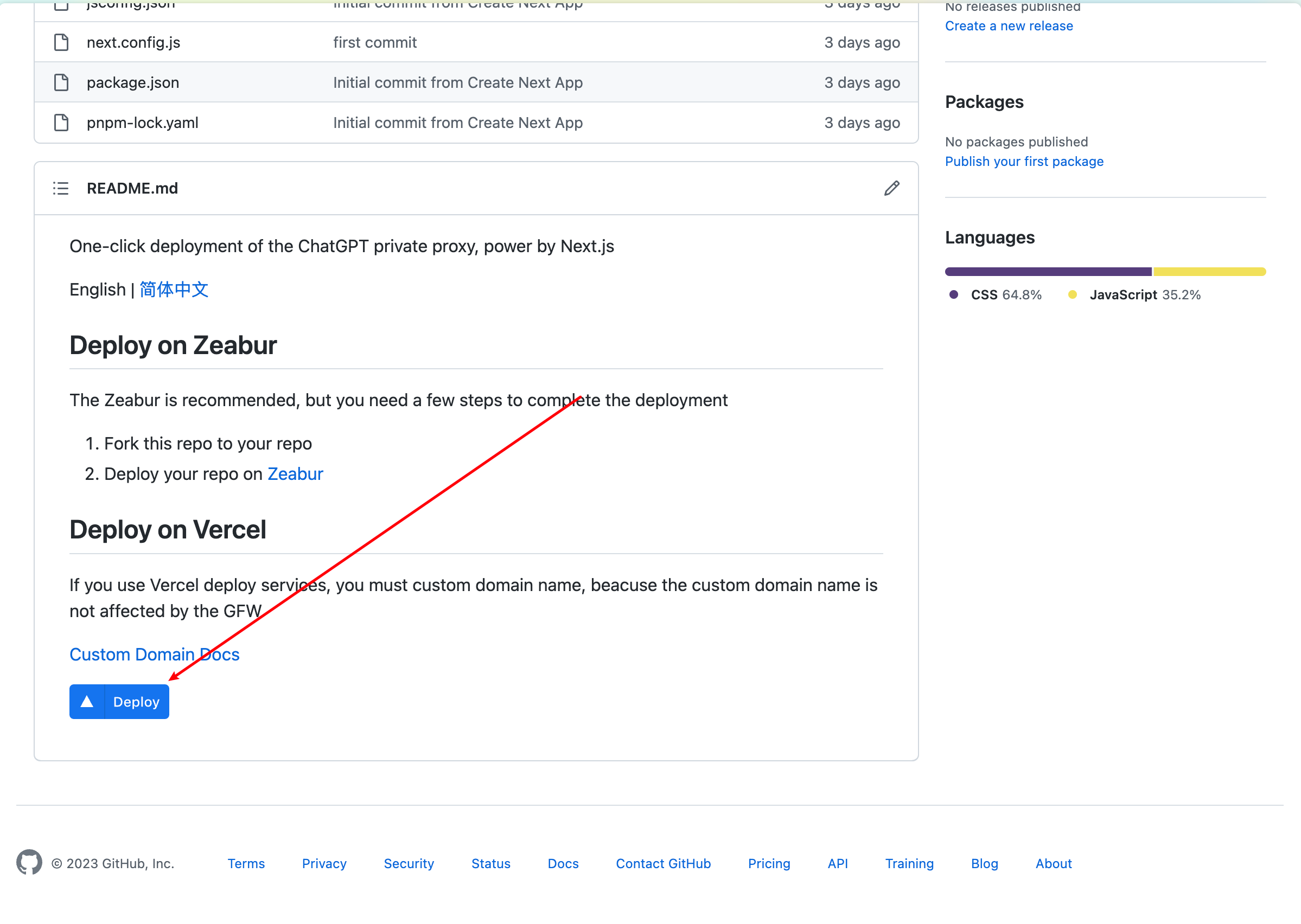

Deploy on Vercel

❗️ ⚠️ ❗️ Warning: This project may violate the "Proxies and VPNs" entry in the Never Fair Use section of the Vercel Terms of Use. Hosting this project on Vercel is strongly discouraged!

❗️ ⚠️ ❗️ Warning: If your account is penalized for deploying this project to Vercel, you bear the consequences yourself.

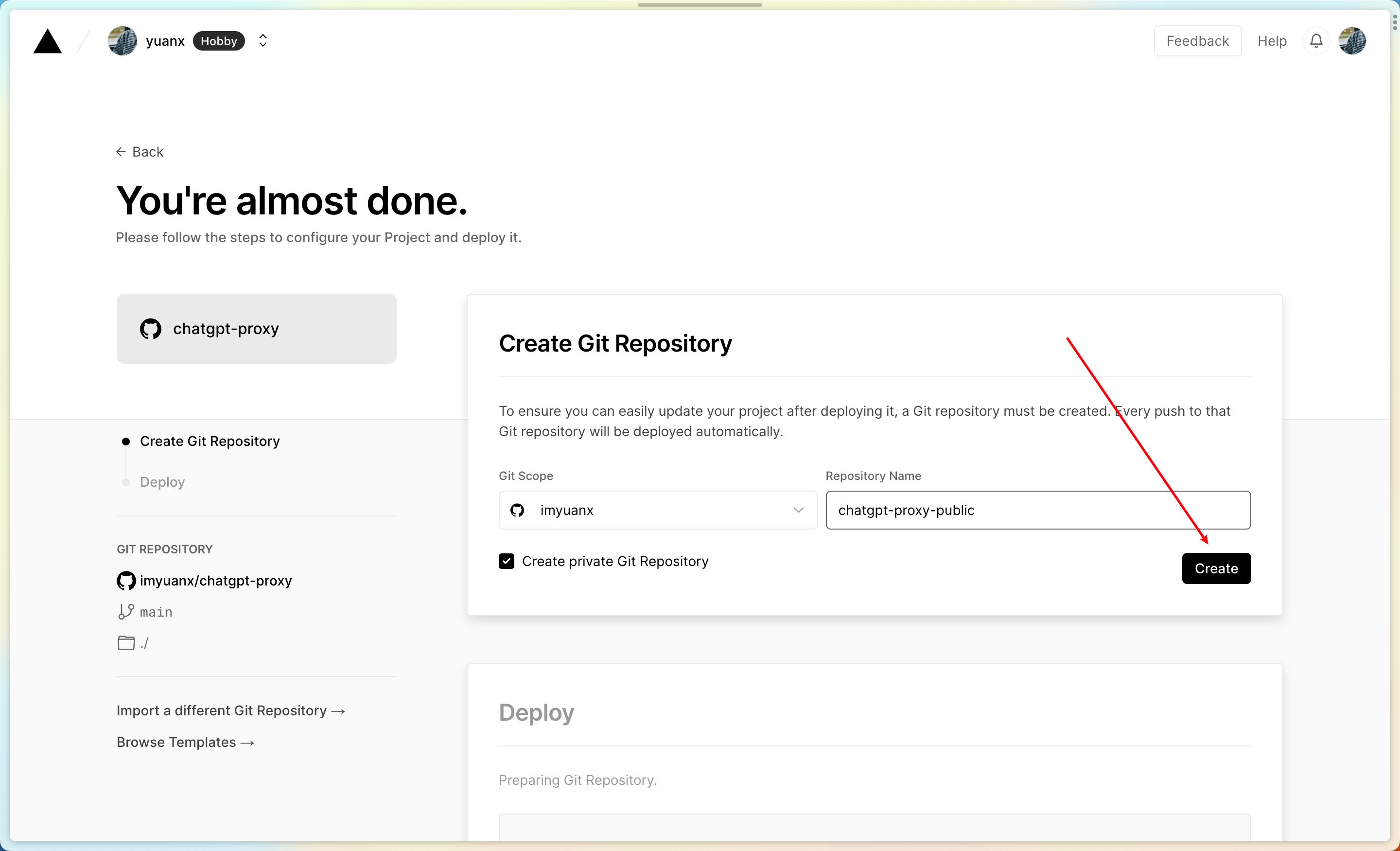

If you deploy on Vercel, you must use a custom domain name, because, unlike the default Vercel domain, a custom domain is not blocked by the GFW. The steps are as follows:

- Click the deploy button at the top

- Enter a custom repository name in the input field; the repository is forked to your account automatically during deployment

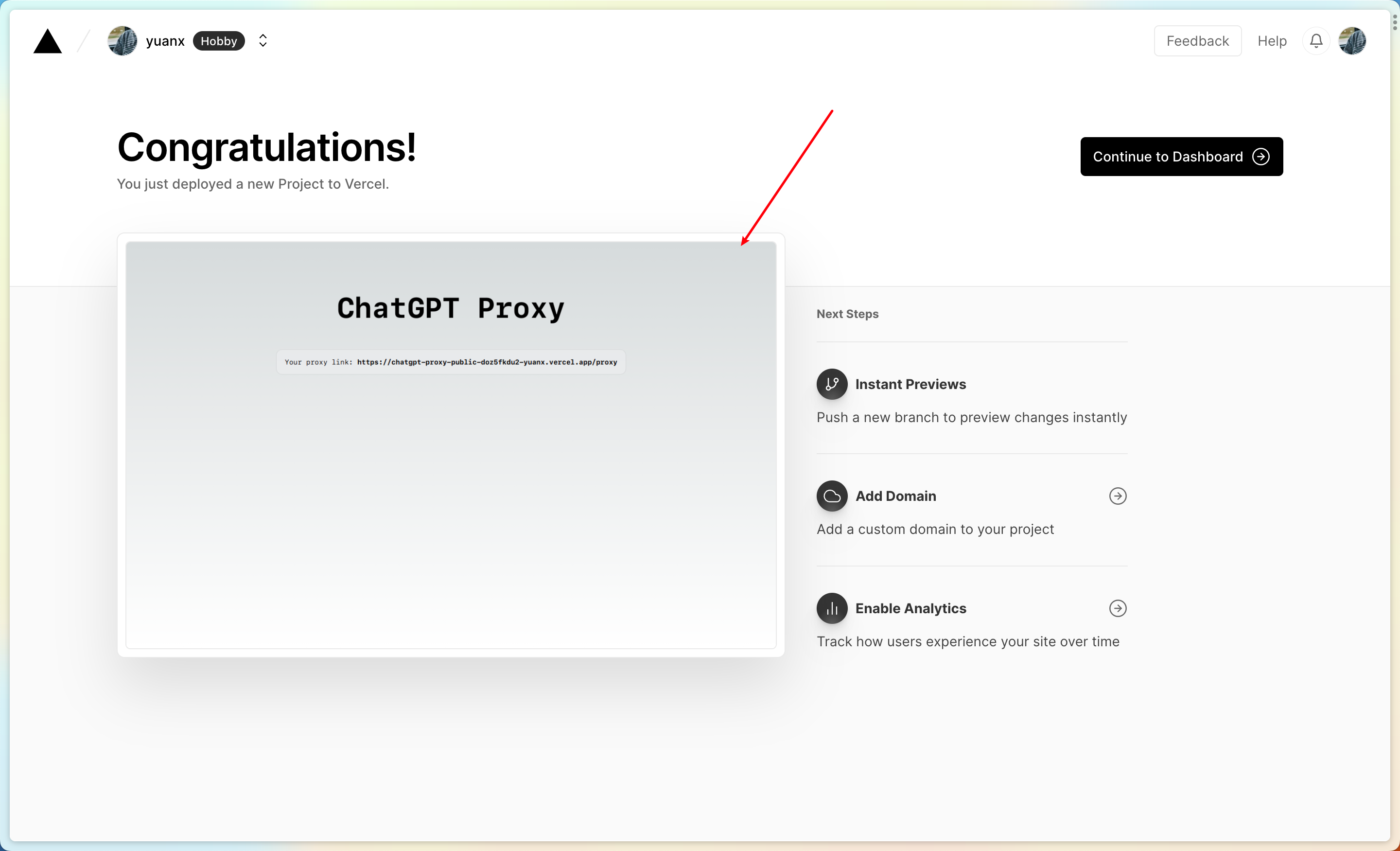

- After a successful deployment, your service is ready

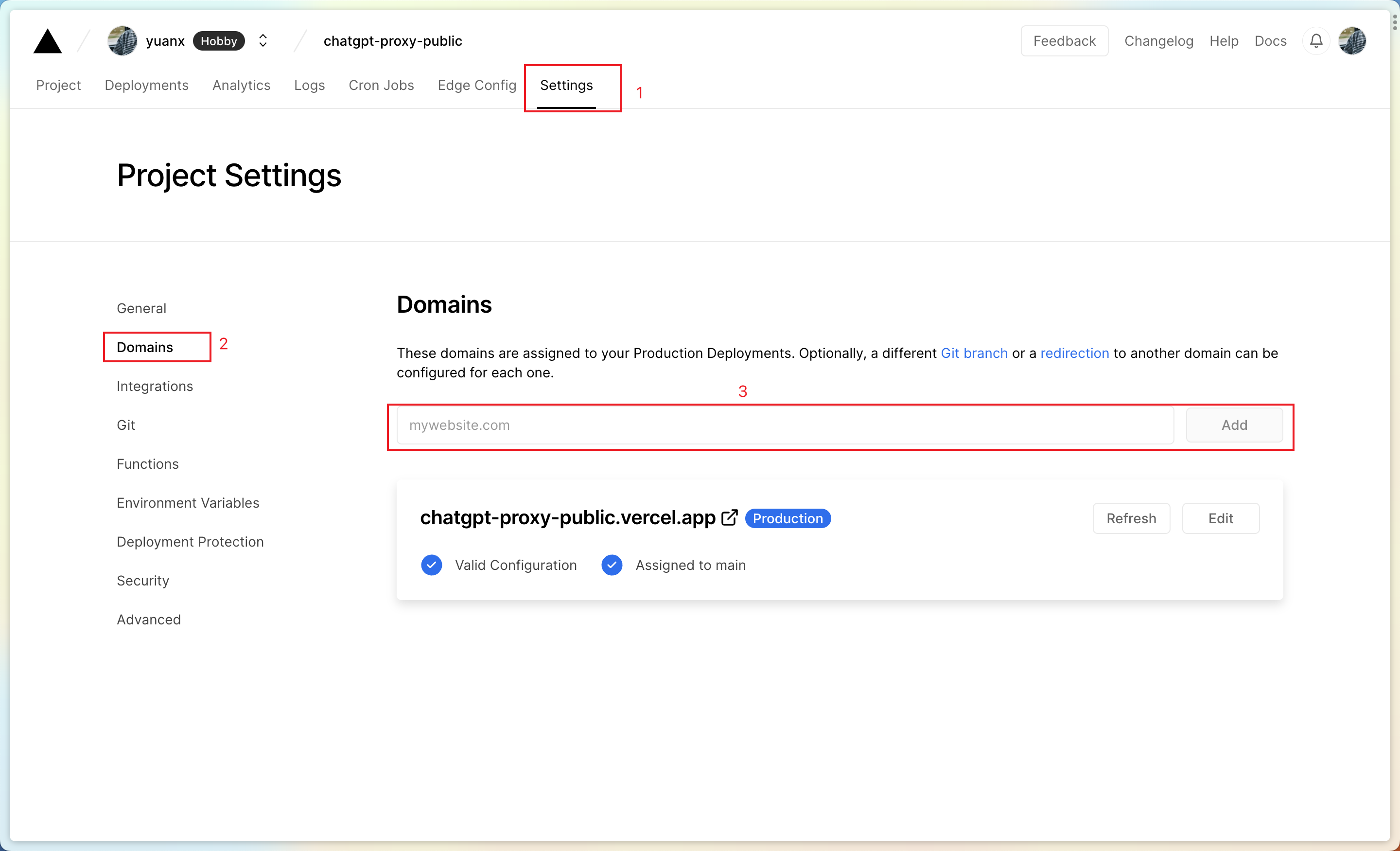

- You must add a custom domain name to your service; otherwise you will not be able to access it from mainland China

Deploy on your own server

You need a server that can access ChatGPT (the OpenAI API). If you don't have one, deploy on Zeabur or Vercel instead.

Some familiarity with Docker is required.

- Fork this repository to your own account and clone it locally

- Switch to your forked project directory and run `docker build -t chatgpt-proxy .`

- Then run `docker run --name chatgpt-proxy -d -p 8000:3000 chatgpt-proxy`, which maps port 8000 on the host to port 3000 inside the container

- Open http://127.0.0.1:8000 in your browser

How to use

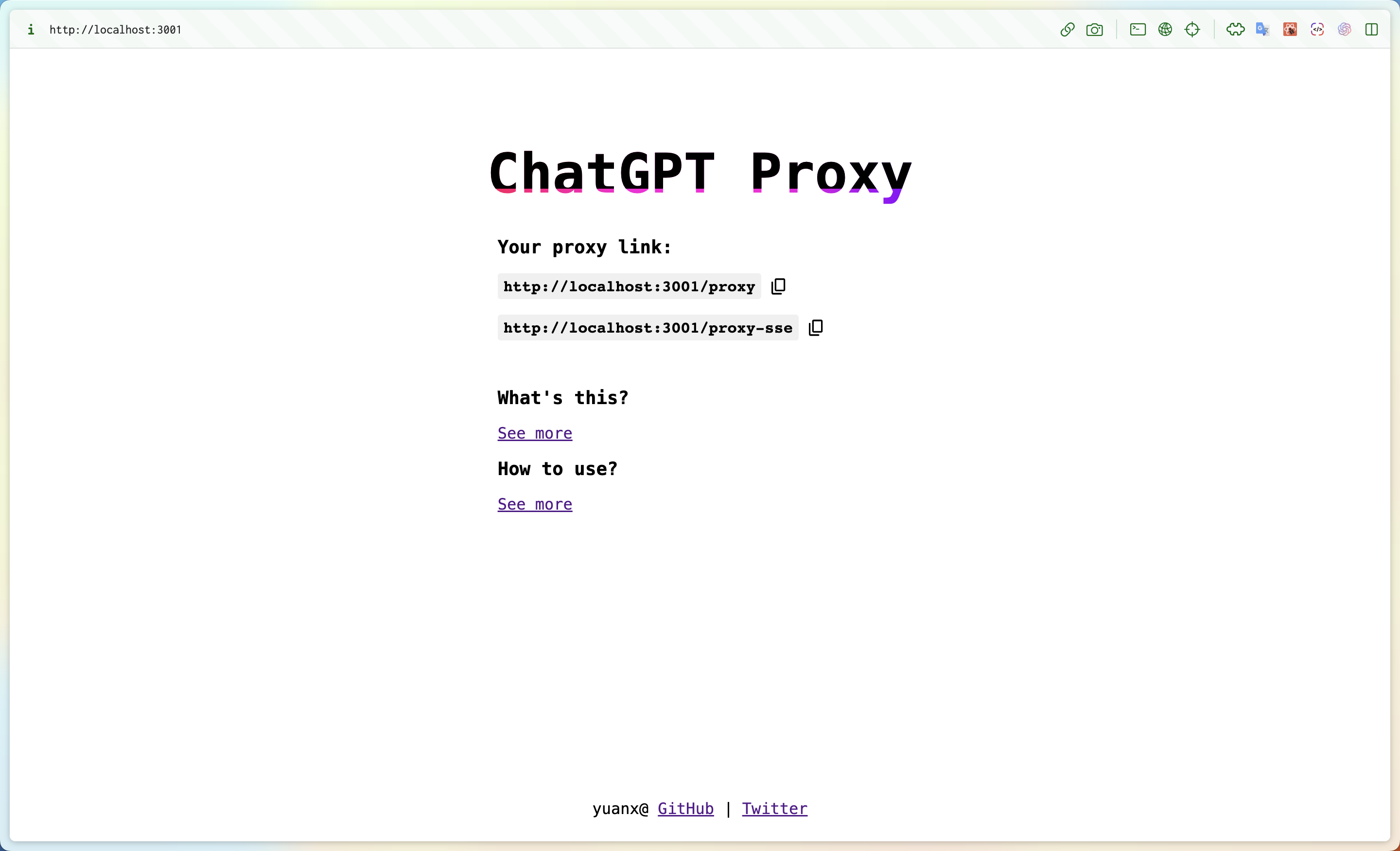

Whether you deploy on Zeabur or Vercel, you get a proxy service like this one after deployment.

Both resulting addresses forward all requests to https://api.openai.com and are accessible from mainland China; the .../proxy-sse address additionally supports SSE.
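To show how a client might use the SSE address, here is a hedged TypeScript sketch that sends a streaming Chat Completions request through the proxy and joins the streamed text. `PROXY_BASE` is a placeholder for your own domain, `streamChat` and `parseSseData` are hypothetical helpers, and the model name is only an example; the request path and the `choices[0].delta.content` field follow the public OpenAI API.

```typescript
// Hypothetical client sketch: stream a Chat Completions response via the proxy.
// PROXY_BASE is a placeholder; replace it with your own deployment's SSE address.
const PROXY_BASE = "https://your-domain.example.com/proxy-sse";

// Return the payload of every "data:" line in a block of SSE text,
// skipping empty payloads and the final "[DONE]" marker.
export function parseSseData(text: string): string[] {
  return text
    .split("\n")
    .filter((line) => line.startsWith("data:"))
    .map((line) => line.slice("data:".length).trim())
    .filter((data) => data !== "" && data !== "[DONE]");
}

export async function streamChat(apiKey: string, prompt: string): Promise<string> {
  const res = await fetch(`${PROXY_BASE}/v1/chat/completions`, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${apiKey}`,
    },
    body: JSON.stringify({
      model: "gpt-3.5-turbo", // example model name; use any model your key can access
      stream: true, // ask the API for server-sent events
      messages: [{ role: "user", content: prompt }],
    }),
  });
  if (!res.ok || !res.body) throw new Error(`Request failed: HTTP ${res.status}`);

  const reader = res.body.getReader();
  const decoder = new TextDecoder();
  let buffer = "";
  let answer = "";

  // Append the text deltas from each complete "data:" line to the answer.
  const consume = (text: string): void => {
    for (const data of parseSseData(text)) {
      const chunk = JSON.parse(data);
      answer += chunk.choices?.[0]?.delta?.content ?? "";
    }
  };

  for (;;) {
    const { done, value } = await reader.read();
    if (done) break;
    buffer += decoder.decode(value, { stream: true });
    // Only parse up to the last newline; a partial line stays in the buffer.
    const cut = buffer.lastIndexOf("\n");
    if (cut >= 0) {
      consume(buffer.slice(0, cut));
      buffer = buffer.slice(cut + 1);
    }
  }
  consume(buffer + decoder.decode()); // flush any trailing bytes
  return answer;
}
```

Calling `streamChat(apiKey, "Hello")` would resolve to the full reply once the stream ends. Non-streaming requests (without `stream: true`) can go through either address.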

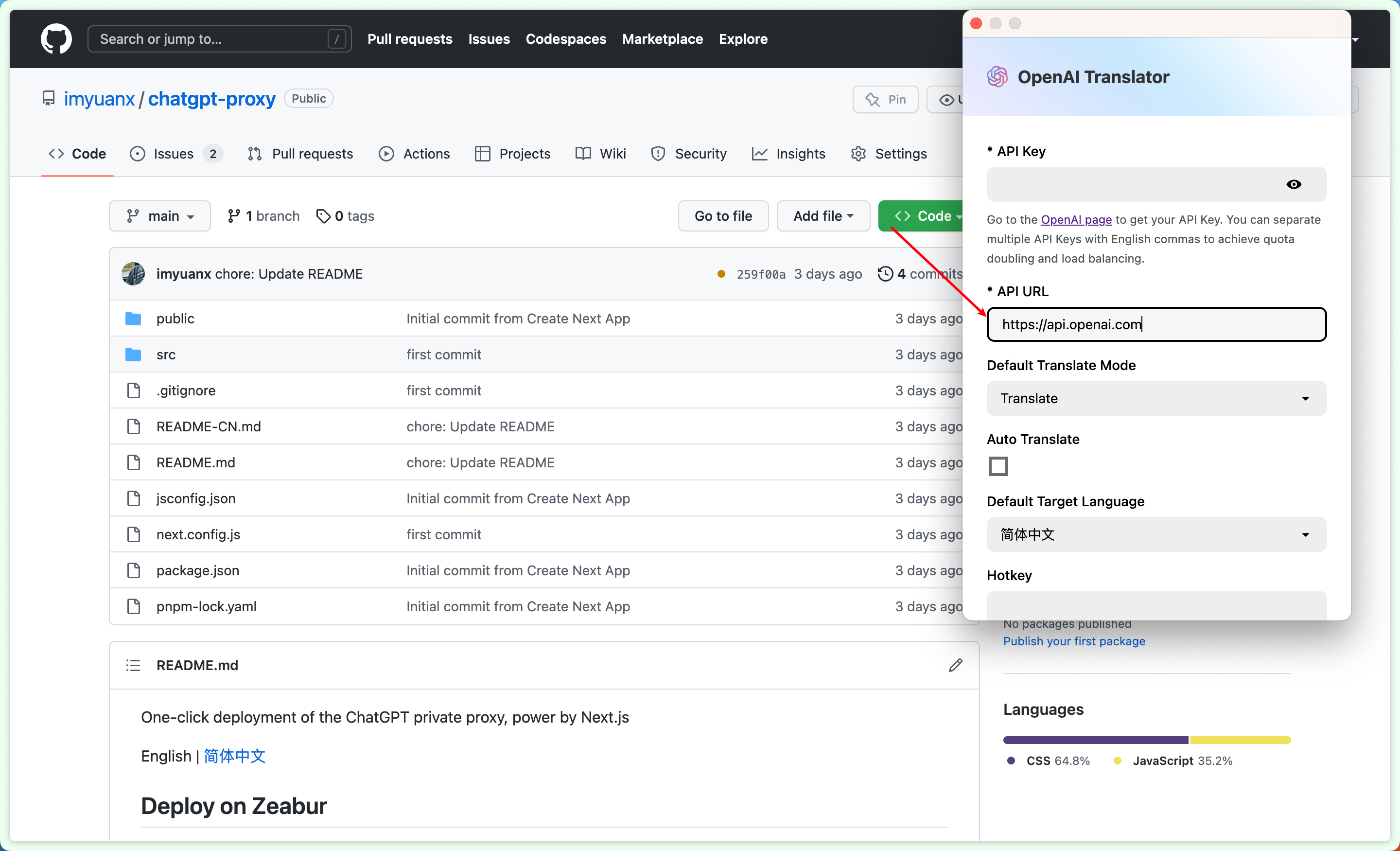

You can use the proxy in any application that supports a custom API address to call the OpenAI API from within China.

For example, openai-translator: