Ondrej Sika (sika.io) | ondrej@sika.io

Ondrej Sika <ondrej@ondrejsika.com>

https://github.com/ondrejsika/docker-training

My Docker course with code examples.

Write me an email at ondrej@sika.io

- https://github.com/ondrejsika/kubernetes-training-examples

- https://github.com/ondrejsika/bare-metal-kubernetes

./pull-images.sh

If you want to update the list of used images in the file images.txt, run ./save-image-list.sh and remove locally built images.

Docker is an open-source project that automates the deployment of applications inside software containers ...

Docker containers wrap up a piece of software in a complete filesystem that contains everything it needs to run: code, runtime, system tools, system libraries – anything you can install on a server.

A VM is an abstraction of physical hardware. Each VM has a full server hardware stack from virtualized BIOS to virtualized network adapters, storage, and CPU.

That stack allows you to run any OS on your host, but it costs some performance.

Containers are an abstraction in the Linux kernel: just process, memory, network, … namespaces.

Containers run on the same kernel as the host, so it is not possible to use a different OS or kernel version, but containers are much faster than VMs.

- Performance

- Management

- Application (image) distribution

- Security

- One kernel / "Linux only"

- Almost everywhere

- Development, Testing, Production

- Better (easier, faster) deployment process

- Separates running applications

- Kubernetes (Kubernetes Training)

- Swarm

Docker CE is ideal for individual developers and small teams looking to get started with Docker and experimenting with container-based apps.

Docker Engine - Enterprise is designed for enterprise development of a container runtime with security and an enterprise grade SLA in mind.

Docker Enterprise is designed for enterprise development and IT teams who build, ship, and run business critical applications in production at scale.

Source: https://docs.docker.com/install/overview/

A set of 12 rules for writing modern applications.

- Official installation - https://docs.docker.com/engine/installation/

- My install instructions (in Czech) - https://ondrej-sika.cz/docker/instalace/

- Bash Completion on Mac - https://blog.alexellis.io/docker-mac-bash-completion/

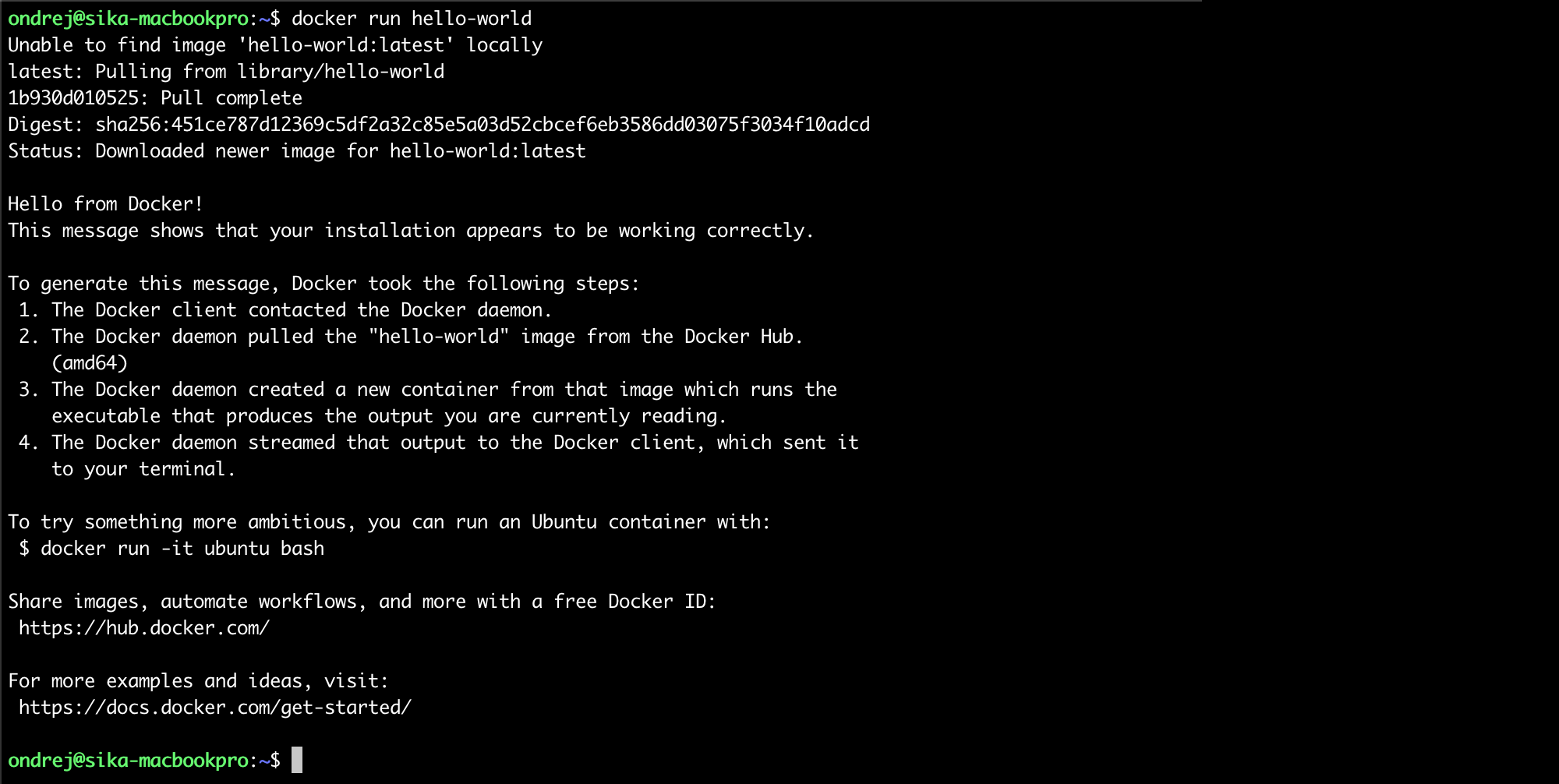

docker run hello-world

You can use a remote Docker host over SSH. Just export the variable DOCKER_HOST with ssh://root@docker.sikademo.com and the commands of your local Docker client will be executed on the docker.sikademo.com server.

export DOCKER_HOST=ssh://root@docker.sikademo.com

docker version

docker info
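If you only want a single command to go to the remote host, you can set DOCKER_HOST just for that command instead of exporting it for the whole shell session. A minimal sketch; remote_docker is a hypothetical helper name (not part of Docker) and the host is the example host from above:

```shell
# remote_docker: hypothetical helper, not a Docker command.
# Runs one docker command against a remote engine over SSH by setting
# DOCKER_HOST only for that single invocation.
remote_docker() {
  local target
  target=$1          # SSH target, e.g. root@docker.sikademo.com
  shift
  DOCKER_HOST="ssh://$target" docker "$@"
}
```

For example, remote_docker root@docker.sikademo.com ps lists containers on docker.sikademo.com, while a plain docker ps in the same shell still talks to your local daemon (assuming DOCKER_HOST is not exported).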

You can also connect to Docker using a TCP socket; see the chapter Connect Shell to the Machine.

An image is an inert, immutable, file that's essentially a snapshot of a container. Images are created with the build command, and they'll produce a container when started with run. Images are stored in a Docker registry.

- docker version - print version
- docker info - system-wide information
- docker system df - Docker disk usage
- docker system prune - clean up unused data

- docker pull <image> - download an image
- docker image ls - list all images
- docker image ls -q - quiet output, just IDs
- docker image ls <image> - list image versions
- docker image rm <image> - remove image
- docker image history <image> - show image history
- docker image inspect <image> - show image properties

A Docker image name also contains the location of its source. These names can be used:

- debian - official images on Docker Hub
- ondrejsika/debian - user (custom) images on Docker Hub
- reg.istry.cz/debian - image in my own registry
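The naming rules above can be written as a small shell function. This is a simplified sketch, not Docker's own parser: parse_image is a hypothetical name, it assumes the first path component is a registry only when it contains a dot or a colon or is localhost, it defaults to Docker Hub (docker.io) with the library/ namespace for official images and the latest tag, and it ignores digests (@sha256:...).

```shell
# parse_image: hypothetical helper, a simplified version of the rules for
# expanding short image names. Prints "<registry> <repository> <tag>".
parse_image() {
  local ref first registry repo last tag
  ref=$1
  first=${ref%%/*}
  registry=docker.io
  repo=$ref
  # The first component is a registry only if it looks like a host name
  case $ref in
    */*)
      case $first in
        *.*|*:*|localhost)
          registry=$first
          repo=${ref#*/}
          ;;
      esac
      ;;
  esac
  # The tag follows the last ':' in the last path component
  last=${repo##*/}
  case $last in
    *:*) tag=${last##*:}; repo=${repo%:*} ;;
    *)   tag=latest ;;
  esac
  # Official images on Docker Hub live in the "library" namespace
  if [ "$registry" = docker.io ]; then
    case $repo in
      */*) ;;
      *) repo=library/$repo ;;
    esac
  fi
  echo "$registry $repo $tag"
}
```

With this sketch, parse_image debian prints docker.io library/debian latest, and parse_image reg.istry.cz/debian:10 prints reg.istry.cz debian 10.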

If you use GitLab, it has a Docker registry built in.

You can run registry manually using this command:

docker run -d -p 5000:5000 --restart=always --name registry registry:2

See full deployment configuration here: https://docs.docker.com/registry/deploying/

docker run <image> [<command>]

Examples

docker run hello-world

docker run debian date

docker run -ti debian

- docker ps - list containers
- docker start <container>
- docker stop <container>
- docker restart <container>
- docker logs <container> - show STDOUT & STDERR
- docker rm <container> - remove container

- --name <name> - set the container name
- --rm - remove the container after it stops
- -d - run in detached mode
- -ti - map TTY and STDIN (e.g. for bash)
- -e <variable>=<value> - set ENV variable

By default, when the container's main process stops (or fails), the container stops and stays stopped.

You can choose another behavior using the argument --restart <restart policy>.

- --restart on-failure - restart only when the container exits with a non-zero exit code
- --restart always - always restart, even after a Docker daemon restart (and a server restart)
- --restart unless-stopped - similar to always, but a container you stopped manually stays stopped after a Docker daemon restart (and a server restart)
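The policies differ in when a container whose main process exited on its own (not via docker stop) is started again. A minimal sketch of that decision, written from the descriptions above rather than from Docker's code; would_restart is a hypothetical name, no is the default policy, and the on-failure:<max-retries> limit as well as the always / unless-stopped difference after a daemon restart are not modelled:

```shell
# would_restart: hypothetical helper mirroring the documented rules.
# Usage: would_restart <policy> <exit code>
would_restart() {
  local policy code
  policy=$1
  code=$2
  case $policy in
    no)
      echo no ;;
    on-failure)
      # only a non-zero exit code counts as a failure
      if [ "$code" -ne 0 ]; then echo yes; else echo no; fi ;;
    always|unless-stopped)
      # restarted regardless of the exit code
      echo yes ;;
    *)
      echo "unknown policy: $policy" >&2
      return 1 ;;
  esac
}
```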

- docker ps - list running containers
- docker ps -a - list all containers
- docker ps -a -q - list IDs of all containers

Example of -q

docker rm -f $(docker ps -a -q)

or my dra (docker remove all) alias

alias dra='docker ps -a -q | xargs docker rm -f'

dra
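When there are no containers, both commands above can end up calling docker rm -f without any ID (depending on your xargs implementation for the alias), and Docker prints an error. A sketch of a function version that checks for that case first; dra is still just the author's shortcut name, and if the alias is already defined, run unalias dra before defining the function:

```shell
# Function version of the dra ("docker remove all") shortcut.
dra() {
  local ids
  ids=$(docker ps -a -q)
  if [ -z "$ids" ]; then
    echo "no containers to remove"
    return 0
  fi
  # $ids is intentionally unquoted: each container ID becomes one argument
  docker rm -f $ids
}
```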

docker exec <container> <command>

Arguments

- -d - run in detached mode
- -e <variable>=<value> - set ENV variable
- -ti - map TTY and STDIN (e.g. for bash)
- -u <user> - run the command as a specific user

Example

docker run --name db11 -d postgres:11

docker exec -ti -u postgres db11 psql

docker run --name db12 -d postgres:12

docker exec -ti -u postgres db12 psql

docker logs [-f] <container>

Examples

docker logs my-debian

docker logs -f my-debian # following

You can use the native Docker logging or one of the other log drivers.

For example, if you want to log into syslog, you can use --log-driver syslog.

You can send logs directly to ELK (EFK) or Graylog using GELF. For ELK logging, use --log-driver gelf --log-opt gelf-address=udp://1.2.3.4:12201.

See the logging docs: https://docs.docker.com/config/containers/logging/configure/

Get detailed information about a container in JSON.

docker inspect <container>

- Volumes are persistent data storage for containers.

- Volumes can be shared between containers and data are written directly to host.

Examples

docker run -ti -v /data debian

docker run -ti -v my-volume:/data debian

docker run -ti -v $(pwd)/my-data:/data debian

If you want to mount your volumes read-only, add :ro to the volume argument.

Examples

docker run -ti -v my-volume:/data:ro debian

docker run -ti -v $(pwd)/my-data:/data:ro debian

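The first volume example (-v /data, an anonymous volume) doesn't make sense as read-only: the volume is created empty, so nothing could ever be written to it.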
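The -v forms above differ only in the part before the first colon. A sketch of how to tell them apart; classify_volume is a hypothetical name, it is a simplification for Linux paths (in this sketch only an absolute host path counts as a bind mount), and Windows paths and the --mount syntax are not handled:

```shell
# classify_volume: hypothetical helper that tells the -v forms apart.
classify_volume() {
  local spec src rest dst opts kind
  spec=$1
  case $spec in
    *:*) ;;
    *)
      # Only a container path: Docker creates a new anonymous volume
      echo "anonymous volume at $spec (rw)"
      return 0
      ;;
  esac
  src=${spec%%:*}            # before the first ':'
  rest=${spec#*:}            # container path and optional options
  dst=${rest%%:*}
  case $rest in
    *:*) opts=${rest#*:} ;;  # e.g. ro
    *)   opts=rw ;;          # read-write is the default
  esac
  case $src in
    /*) kind="bind mount" ;;    # absolute host path
    *)  kind="named volume" ;;  # anything else is a volume name
  esac
  echo "$kind $src -> $dst ($opts)"
}
```

For example, classify_volume my-volume:/data prints named volume my-volume -> /data (rw).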

If you want to forward a socket into a container, you can also use a volume. With sockets, the read-only option doesn't protect anything: the container can still connect to the socket and talk to the service behind it.

docker run -v /var/run/docker.sock:/var/run/docker.sock -ti docker

Docker can forward a specific port from a container to the host:

docker run -p <host port>:<cont. port> <image>

You can specify an address on the host as well

docker run -p <host address>:<host port>:<cont. port> <image>

Examples

docker run -ti -p 8080:80 nginx

docker run -ti -p 127.0.0.1:8080:80 nginx

The latter makes the port reachable only from localhost.
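How the -p value is read, sketched as a shell function. parse_publish is a hypothetical name; IPv6 addresses, port ranges and /udp suffixes are not handled. It also covers -p <cont. port> alone, which publishes the container port on a random free host port:

```shell
# parse_publish: hypothetical helper that splits a -p value.
parse_publish() {
  local spec rest addr host cont
  spec=$1
  addr=all                 # no address given: all host interfaces
  case $spec in
    *:*:*)                 # <host address>:<host port>:<cont. port>
      addr=${spec%%:*}
      rest=${spec#*:}
      host=${rest%%:*}
      cont=${rest#*:}
      ;;
    *:*)                   # <host port>:<cont. port>
      host=${spec%%:*}
      cont=${spec#*:}
      ;;
    *)                     # <cont. port> only: random free host port
      host=random
      cont=$spec
      ;;
  esac
  echo "addr=$addr host=$host cont=$cont"
}
```

parse_publish 127.0.0.1:8080:80 prints addr=127.0.0.1 host=8080 cont=80, which is the localhost-only case from the second example.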

A Dockerfile is the preferred way to create images.

A Dockerfile defines each layer of the image with an instruction.

To build an image, use the docker build command.

- FROM <image> - define the base image
- RUN <command> - run a command and save the result as a layer
- COPY <local path> <image path> - copy a file or directory into an image layer
- ADD <source> <image path> - like COPY, but local tar archives are extracted
- ENV <variable> <value> - set ENV variable
- USER <user> - switch user
- WORKDIR <path> - change working directory
- VOLUME <path> - define a volume
- ENTRYPOINT <command> - executable
- CMD <command> - parameters for the entrypoint (or the default command if there is no ENTRYPOINT)
- EXPOSE <port> - define on which port the container will be listening
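How ENTRYPOINT, CMD and the arguments of docker run combine into the command that is actually started, sketched for the exec (JSON array) form. effective_command is a hypothetical name; to keep it short, ENTRYPOINT and CMD are passed as plain space-separated strings, so arguments containing spaces are not supported:

```shell
# effective_command: hypothetical helper (exec form only).
# Usage: effective_command "<entrypoint>" "<cmd>" [docker run args...]
effective_command() {
  local entrypoint cmd
  entrypoint=$1
  cmd=$2
  shift 2
  # Arguments after the image name replace CMD; ENTRYPOINT stays
  if [ "$#" -gt 0 ]; then
    cmd="$*"
  fi
  # ENTRYPOINT (if any) comes first, followed by CMD or the run arguments
  echo "${entrypoint:+$entrypoint }$cmd"
}
```

Without an ENTRYPOINT, effective_command "" "echo hello world" ahoj svete prints ahoj svete, so Docker would try to run ahoj as the executable. With ENTRYPOINT echo, effective_command echo "hello world" ahoj svete prints echo ahoj svete.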

- Ignore files for the docker build process.
- Similar to .gitignore

Example of .dockerignore

Dockerfile

out

node_modules

.DS_Store

- docker build <path> -t <image> - build image
- docker build <path> -f <dockerfile> -t <image> - build image from a specific Dockerfile
- docker tag <source image> <target image> - add a new name (tag) to a docker image

See Simple Image example

wget https://raw.githubusercontent.com/ondrejsika/docker-training/master/examples/simple-image/app.py

wget https://raw.githubusercontent.com/ondrejsika/docker-training/master/examples/simple-image/requirements.txt

Or download files app.py, requirements.txt manually.

Let's create Dockerfile together.

There are a few steps to get a proper Dockerfile.

cat > Dockerfile.1 <<EOF

FROM debian:10

WORKDIR /app

RUN apt-get update

RUN apt-get install -y python3 python3-pip

RUN rm -rf /var/lib/apt/lists/*

COPY . .

RUN pip3 install -r requirements.txt

CMD python3 app.py

EXPOSE 80

EOF

Build

docker build -t simple-image:1 -f Dockerfile.1 .

Run

docker run --name simple-image -d -p 8000:80 simple-image:1

Stop & remove container

docker rm -f simple-image

Let's update the Dockerfile

cat > Dockerfile.2 <<EOF

FROM debian:10

WORKDIR /app

RUN apt-get update && \
    apt-get install -y python3 python3-pip && \
    rm -rf /var/lib/apt/lists/*

COPY . .

RUN pip3 install -r requirements.txt

CMD python3 app.py

EXPOSE 80

EOF

Build & Run

docker build -t simple-image:2 -f Dockerfile.2 .

docker run --name simple-image -d -p 8000:80 simple-image:2

Stop & remove container

docker rm -f simple-image

Let's update the Dockerfile again. Install only required packages, not recommended ones.

cat > Dockerfile.3 <<EOF

FROM debian:10

WORKDIR /app

RUN echo 'APT::Install-Recommends "0";\nAPT::Install-Suggests "0";' > /etc/apt/apt.conf.d/99recommends

RUN apt-get update && \
    apt-get install -y python3 python3-pip && \
    rm -rf /var/lib/apt/lists/*

COPY . .

RUN pip3 install -r requirements.txt

CMD python3 app.py

EXPOSE 80

EOF

Build & Run

docker build -t simple-image:3 -f Dockerfile.3 .

docker run --name simple-image -d -p 8000:80 simple-image:3

Stop & remove container

docker rm -f simple-image

Let's update the Dockerfile for better caching.

cat > Dockerfile.4 <<EOF

FROM debian:10

WORKDIR /app

RUN echo 'APT::Install-Recommends "0";\nAPT::Install-Suggests "0";' > /etc/apt/apt.conf.d/99recommends

RUN apt-get update && \
    apt-get install -y python3 python3-pip && \
    rm -rf /var/lib/apt/lists/*

COPY requirements.txt .

RUN pip3 install -r requirements.txt

COPY . .

CMD python3 app.py

EXPOSE 80

EOF

Build & Run

docker build -t simple-image:4 -f Dockerfile.4 .

docker run --name simple-image -d -p 8000:80 simple-image:4

Stop & remove container

docker rm -f simple-image
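Why the order in Dockerfile.4 helps: Docker reuses a cached layer only while all previous steps were cached too, and a COPY step counts as changed when the copied files change. A simplified sketch of that rule; cache_report is a hypothetical name and takes pairs of a changed flag (yes/no) and an instruction:

```shell
# cache_report: hypothetical helper illustrating the layer-cache rule.
# Usage: cache_report <changed> <instruction> [<changed> <instruction> ...]
cache_report() {
  local miss changed instr
  miss=0
  while [ "$#" -ge 2 ]; do
    changed=$1
    instr=$2
    shift 2
    if [ "$miss" -eq 0 ] && [ "$changed" = no ]; then
      echo "CACHED  $instr"
    else
      # after the first miss, every following step is rebuilt
      miss=1
      echo "REBUILD $instr"
    fi
  done
}
```

If you edit only app.py, Dockerfile.3's order (COPY . . before pip3 install) rebuilds the dependency install, while Dockerfile.4's order keeps it cached:

cache_report no "FROM debian:10" no "COPY requirements.txt ." no "RUN pip3 install -r requirements.txt" yes "COPY . ."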

Final touch: a better base image and an exec-form CMD.

cat > Dockerfile.5 <<EOF

FROM python:3.7-slim

WORKDIR /app

COPY requirements.txt .

RUN pip3 install -r requirements.txt

COPY . .

CMD ["python3", "app.py"]

EXPOSE 80

EOF

Build & Run

docker build -t simple-image:5 -f Dockerfile.5 .

docker run --name simple-image -d -p 8000:80 simple-image:5

Stop & remove container

docker rm -f simple-image

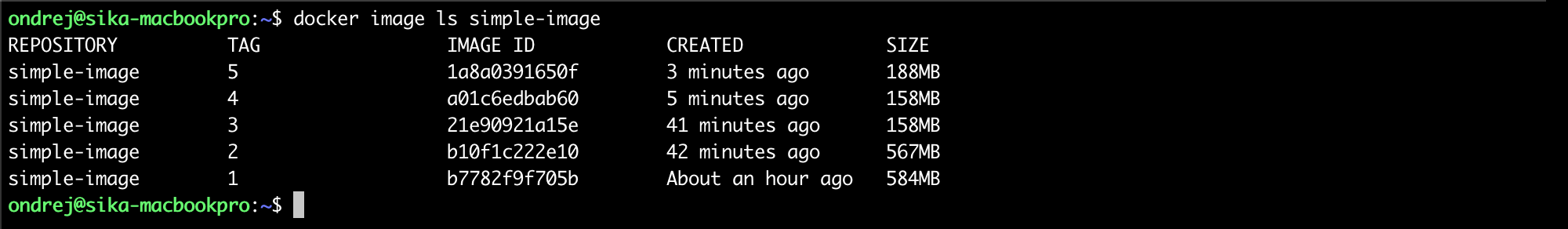

List images and see the difference in image sizes

docker image ls simple-image

Examples in Dockerfile

ARG FROM_IMAGE=debian:9
FROM $FROM_IMAGE

FROM debian
ARG PYTHON_VERSION=3.7
RUN apt-get update && \
    apt-get install -y python$PYTHON_VERSION

Build using

docker build --build-arg FROM_IMAGE=python .

docker build .

docker build --build-arg PYTHON_VERSION=3.6 .

See Build Args example.

FROM java-jdk as build
RUN gradle assemble

FROM java-jre
COPY --from=build /build/demo.jar .

# By default, the last stage is used
docker build -t <image> <path>

# Select output stage
docker build -t <image> --target <stage> <path>

Examples

docker build -t app .

docker build -t build --target build .

See Multistage Image example

wget https://raw.githubusercontent.com/ondrejsika/docker-training/master/examples/multistage-image/app.go

Or download file app.go manually.

cat > Dockerfile.1 <<EOF

FROM golang

WORKDIR /app

COPY app.go .

RUN go build app.go

CMD ["./app"]

EXPOSE 80

EOF

Build & Run

docker build -t multistage-image:1 -f Dockerfile.1 .

docker run --name multistage-image -d -p 8000:80 multistage-image:1

Stop & remove container

docker rm -f multistage-image

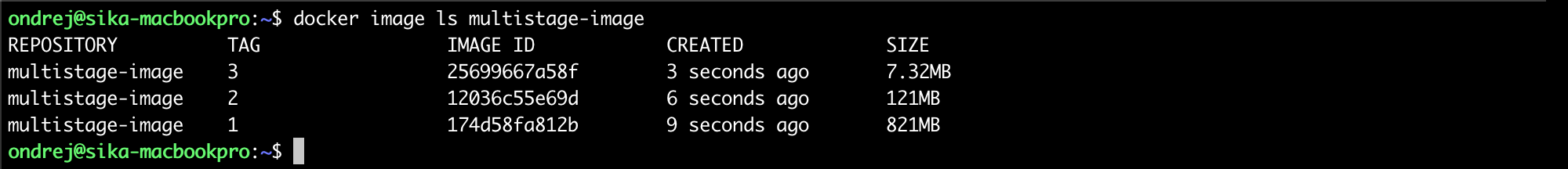

See the image size

docker image ls multistage-image

cat > Dockerfile.2 <<EOF

FROM golang as build

WORKDIR /build

COPY app.go .

RUN go build app.go

FROM debian:10

COPY --from=build /build/app .

CMD ["/app"]

EXPOSE 80

EOF

Build & Run

docker build -t multistage-image:2 -f Dockerfile.2 .

docker run --name multistage-image -d -p 8000:80 multistage-image:2

Stop & remove container

docker rm -f multistage-image

See the image size

docker image ls multistage-image

If you build your Go app as a static binary (no dynamic dependencies), you can create an image from scratch, without any OS.

cat > Dockerfile.3 <<EOF

FROM golang as build

WORKDIR /build

COPY app.go .

ENV CGO_ENABLED=0

RUN go build -a -ldflags \
    '-extldflags "-static"' app.go

FROM scratch

COPY --from=build /build/app .

CMD ["/app"]

EXPOSE 80

EOF

Build & Run

docker build -t multistage-image:3 -f Dockerfile.3 .

docker run --name multistage-image -d -p 8000:80 multistage-image:3

Stop & remove container

docker rm -f multistage-image

See the image size

docker image ls multistage-image

Docker has a new build tool called BuildKit, which can speed up your builds. For example, it builds multiple stages in parallel. You can also extend Dockerfile functionality with caches, mounts, ...

To enable BuildKit, just set environment variable DOCKER_BUILDKIT to 1.

Example

export DOCKER_BUILDKIT=1

docker build .

or

DOCKER_BUILDKIT=1 docker build .

If you are on Windows, you can set the variable in PowerShell

$env:DOCKER_BUILDKIT=1

and in CMD

SET DOCKER_BUILDKIT=1

You can enable BuildKit by default in the Docker daemon config file /etc/docker/daemon.json:

{ "features": { "buildkit": true } }

BuildKit shows interactive output by default. If you want plain output, for example in CI, use:

docker build --progress=plain .

BuildKit comes with a new Dockerfile syntax.

Here is a description of Dockerfile frontend experimental syntaxes - https://github.com/moby/buildkit/blob/master/frontend/dockerfile/docs/experimental.md

Example

cat > Dockerfile.1 <<EOF

# syntax = docker/dockerfile:experimental

FROM ubuntu

RUN rm -f /etc/apt/apt.conf.d/docker-clean; echo 'Binary::apt::APT::Keep-Downloaded-Packages "true";' > /etc/apt/apt.conf.d/keep-cache

RUN --mount=type=cache,target=/var/cache/apt --mount=type=cache,target=/var/lib/apt \
    apt-get update && apt-get install -y python gcc

EOF

Build

docker build -t buildkit-example-1 -f Dockerfile.1 .

Another Dockerfile, which uses the cached APT packages

cat > Dockerfile.2 <<EOF

# syntax = docker/dockerfile:experimental

FROM ubuntu

RUN rm -f /etc/apt/apt.conf.d/docker-clean; echo 'Binary::apt::APT::Keep-Downloaded-Packages "true";' > /etc/apt/apt.conf.d/keep-cache

RUN --mount=type=cache,target=/var/cache/apt --mount=type=cache,target=/var/lib/apt \
    apt-get update && apt-get install -y gcc

EOF

Build

docker build -t buildkit-example-2 -f Dockerfile.2 .

As you can see, the packages are already in the APT cache, so no download is needed.

More about BuildKit: https://docs.docker.com/develop/develop-images/build_enhancements/

Suppose you have a tool (echo) with a default configuration (in the command).

cat > Dockerfile.1 <<EOF

FROM debian:10

CMD ["echo", "hello", "world"]

EOF

Build

docker build -t echo:1 -f Dockerfile.1 .

Run

# default command

docker run --rm echo:1

# updated command (doesn't work, ahoj is not an executable)

docker run --rm echo:1 ahoj svete

# properly updated command

docker run --rm echo:1 echo ahoj svete

You can split the command array into an entrypoint and a command like this:

cat > Dockerfile.2 <<EOF

FROM debian:10

ENTRYPOINT ["echo"]

CMD ["hello", "world"]

EOF

Build

docker build -t echo:2 -f Dockerfile.2 .

Run

# default command (same)

docker run --rm echo:2

# updated command (works)

docker run --rm echo:2 ahoj svete

Docker supports these network drivers:

- bridge (default)

- host

- none

- custom (bridge)

docker run debian ip a

docker run --net host debian ip a

docker run --net none debian ip a

- docker network ls
- docker network create <network>
- docker network rm <network>

Example:

docker network create -d bridge my_bridge

Run & Add Containers:

# Run on network

docker run -d --net=my_bridge --name nginx nginx

docker run -d --net=my_bridge --name apache httpd

# Connect to network

docker run -d --name nginx2 nginx

docker network connect my_bridge nginx2

Test the network

docker run -ti --net my_bridge ondrejsika/host nginx

docker run -ti --net my_bridge ondrejsika/host apache

docker run -ti --net my_bridge ondrejsika/curl nginx

docker run -ti --net my_bridge ondrejsika/curl apache

Docker Compose is a tool for defining and running multi-container Docker applications.

With Docker Compose, you use a Compose file to configure your application's services.

Docker Compose is part of Docker Desktop (Mac, Windows). Only on Linux do you have to install it separately.

version: '3.7'
services:
  app:
    build: .
    ports:
      - 8000:80
  redis:
    image: redis

Here is a compose file reference: https://docs.docker.com/compose/compose-file/

Here is a nice tutorial for YAML: https://learnxinyminutes.com/docs/yaml/

Service is a container running and managed by Docker Compose.

Just pull & run image

services:
  app:
    image: redis

Simple, just build path

services:
  app:
    build: .

Extended form with every build configuration

services:
  app:
    build:
      context: ./app
      dockerfile: docker/Dockerfile
      args:
        BUILD_NO: 1
    image: reg.istry.cz/app

services:
  app:
    ports:
      - 8000:80

Volumes are very similar, but there is a small difference:

services:
  app:
    volumes:
      - /data1
      - data:/data2
      - ./data:/data3
volumes:
  data:

services:
  app:
    command: ["python", "app.py"]

services:
  app:
    environment:
      RACK_ENV: development
      SHOW: 'true'
      SESSION_SECRET:

x-base: &base
  image: debian
  command: ['env']
services:
  en:
    <<: *base
    environment:
      HELLO: Hello
  cs:
    <<: *base
    environment:
      HELLO: Hello

This is ignored by Docker Compose. It's used by Docker Swarm (Docker native cluster).

services:
  app:
    deploy:
      placement:
        constraints: [node.role == manager]

services:
  app:
    deploy:
      mode: replicated
      replicas: 4

Clone this repository, cd to the example and remove the Dockerfile & docker-compose.yml.

git clone https://github.com/ondrejsika/docker-training.git example--simple-compose

cd example--simple-compose/examples/simple-compose

rm Dockerfile docker-compose.yml

Now we can create the Dockerfile and the Compose file manually.

Create Dockerfile:

cat > Dockerfile <<EOF

FROM python:3.7-slim

WORKDIR /app

COPY requirements.txt .

RUN pip install -r requirements.txt

COPY . .

CMD ["python", "app.py"]

EXPOSE 80

EOF

Create docker-compose.yml:

cat > docker-compose.yml <<'EOF'
version: '3.7'
services:
  counter:
    build: .
    image: reg.istry.cz/examples/simple-compose/counter
    ports:
      - ${PORT:-80}:80
    depends_on:
      - redis
  redis:
    image: redis
EOF
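The ${PORT:-80} default is resolved by Docker Compose with the same meaning as in the shell: use PORT when it is set and not empty, otherwise 80 (so PORT=8000 docker-compose up -d publishes the app on port 8000). Because the shell understands the same syntax, the heredoc delimiter matters when the file is written with cat. A small sketch comparing both forms in a temporary directory:

```shell
# Compare an unquoted and a quoted heredoc delimiter.
unset PORT
tmp=$(mktemp -d)

# Unquoted EOF: the shell expands ${PORT:-80} while writing the file
cat > "$tmp/unquoted.yml" <<EOF
      - ${PORT:-80}:80
EOF

# Quoted 'EOF': the text is written as-is, Compose resolves it later
cat > "$tmp/quoted.yml" <<'EOF'
      - ${PORT:-80}:80
EOF

cat "$tmp/unquoted.yml"   # the default 80 is already baked in
cat "$tmp/quoted.yml"     # still contains ${PORT:-80}
```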

- docker-compose config - validate & see the final Compose YAML
- docker-compose ps - see all containers of the composition
- docker-compose exec <service> <command> - run something in a container
- docker-compose version - see the version of the docker-compose binary
- docker-compose logs [-f] [<service>] - see logs

Just build, don't run

docker-compose build

Build without cache

docker-compose build --no-cache

Build with args

docker-compose build --build-arg BUILD_NO=53

Docker Compose doesn't support BuildKit yet. They are working on it.

That's because Docker Compose is written in Python and the Python Docker client doesn't support it yet.

See:

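- -d - run in detached mode
- --force-recreate - always create new containers
- --build - build on every run
- --no-build - don't build, even if images don't exist
- --remove-orphans - remove containers for services not defined in the Compose file
- --abort-on-container-exit - stop all containers if any container stops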

- docker-compose start [<service>]
- docker-compose stop [<service>]
- docker-compose restart [<service>]
- docker-compose kill [<service>]

docker-compose down

docker-compose up --scale <service>=<n>

Docker Machine is a tool that lets you install Docker Engine on virtual hosts, and manage the hosts with docker-machine commands.

You can use Machine to create Docker hosts on your local Mac or Windows box, on your company network, in your data center, or on cloud providers like AWS or Digital Ocean.

Docker Machine is part of Docker Desktop (Mac, Windows). Only on Linux do you have to install it:

https://docs.docker.com/machine/install-machine/

- docker-machine ls - list machines
- docker-machine version - show version

docker-machine create [-d <driver>] <machine>

Example:

docker-machine create default

docker-machine create --driver digitalocean ci

List of drivers: https://docs.docker.com/machine/drivers/

docker-machine ip [<machine>]

Example:

docker-machine ip default

docker-machine ip

eval "$(docker-machine env [<machine>])"

Example

eval "$(docker-machine env default)"

eval "$(docker-machine env)"

eval "$(docker-machine env -u)"

docker-machine ssh [<machine>]

Example:

docker-machine ssh default

docker-machine ssh

- docker-machine start [<machine>]
- docker-machine stop [<machine>]
- docker-machine restart [<machine>]
- docker-machine kill [<machine>]

docker-machine rm <machine>

Example:

docker-machine rm default

A native clustering system for Docker. It turns a pool of Docker hosts into a single, virtual host using an API proxy system. It is Docker's first container orchestration project that began in 2014. Combined with Docker Compose, it's a very convenient tool to manage containers.

docker swarm init --advertise-addr <manager_ip>

docker swarm init --advertise-addr 192.168.99.100

docker swarm join --token <token> <manager_ip>:2377

Example:

docker swarm join \
    --token SWMTKN-1-49nj1cmql0...acrr2e7c \
    192.168.99.100:2377

- docker node ls - list nodes
- docker node rm <node> - remove a node from the swarm
- docker node ps [<node>] - list swarm tasks
- docker node update ARGS <node>

docker service create [ARGS] <image> [<command>]

Example:

docker service create --name ping debian ping oxs.cz

- docker service ls
- docker service inspect <service>
- docker service ps <service>
- docker service scale <service>=<n>
- docker service rm <service>

docker service scale <service>=<n>

Example:

docker service scale ping=5

Swarm (and also Kubernetes) can't build images; you have to build them and push them to a registry first.

# Build

docker-compose build

# Push

docker-compose push

docker stack deploy \
    --compose-file <compose-file> \
    <stack>

Example:

docker stack deploy \
    --compose-file docker-compose.yml \
    counter

Test from host:

curl `docker-machine ip manager`

curl `docker-machine ip manager`

curl `docker-machine ip worker1`

curl `docker-machine ip worker1`

curl `docker-machine ip worker2`

- docker stack ls
- docker stack services <stack>
- docker stack ps <stack>
- docker stack rm <stack>

- email: ondrej@sika.io

- web: https://ondrejsika.com

- twitter: @ondrejsika

- linkedin: /in/ondrejsika/

Do you like the course? Write me a recommendation on Twitter (with handle @ondrejsika) and LinkedIn (add me /in/ondrejsika and I'll send you a request for a recommendation). Thanks.

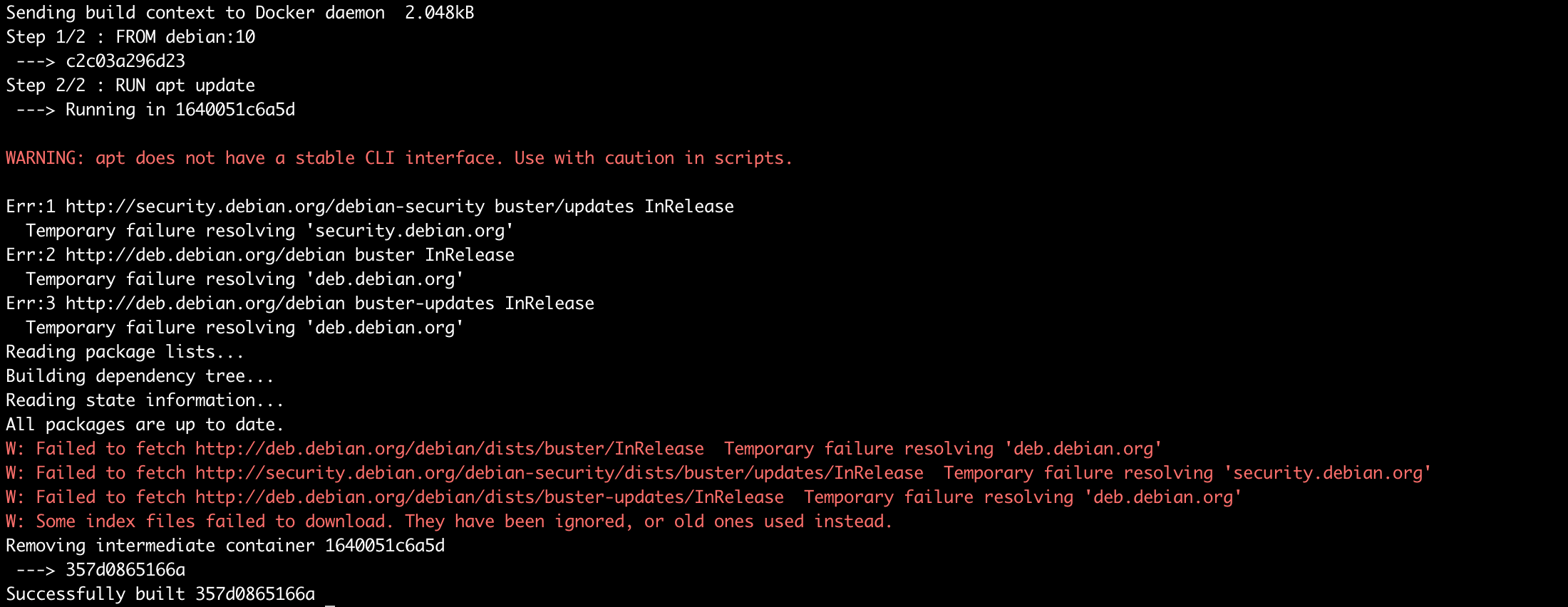

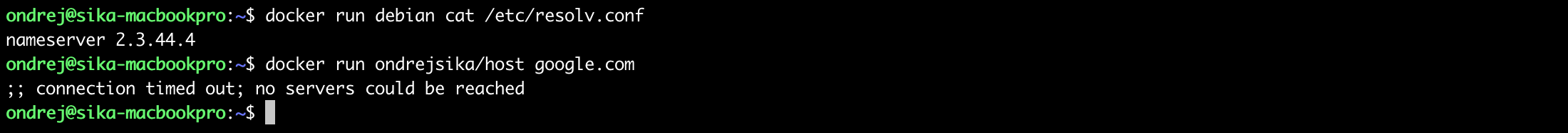

If you see something like that, it may be caused by DNS server trouble.

You can check your DNS server using:

docker run debian cat /etc/resolv.conf

Or check if it works:

docker run ondrejsika/host google.com

You can fix it by setting Google or Cloudflare DNS in /etc/docker/daemon.json:

{"dns": ["1.1.1.1", "8.8.8.8"]}

No. But they are working on it...