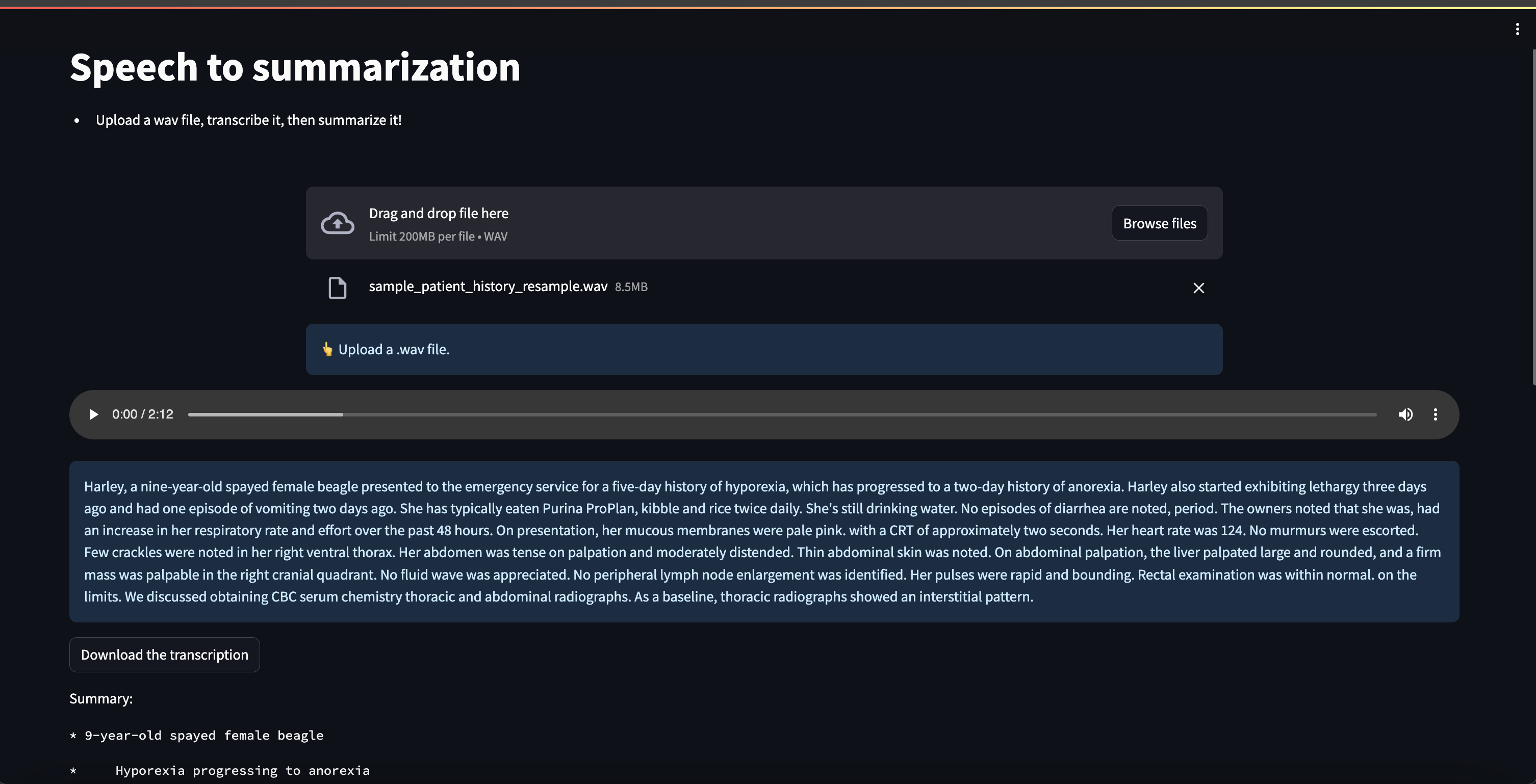

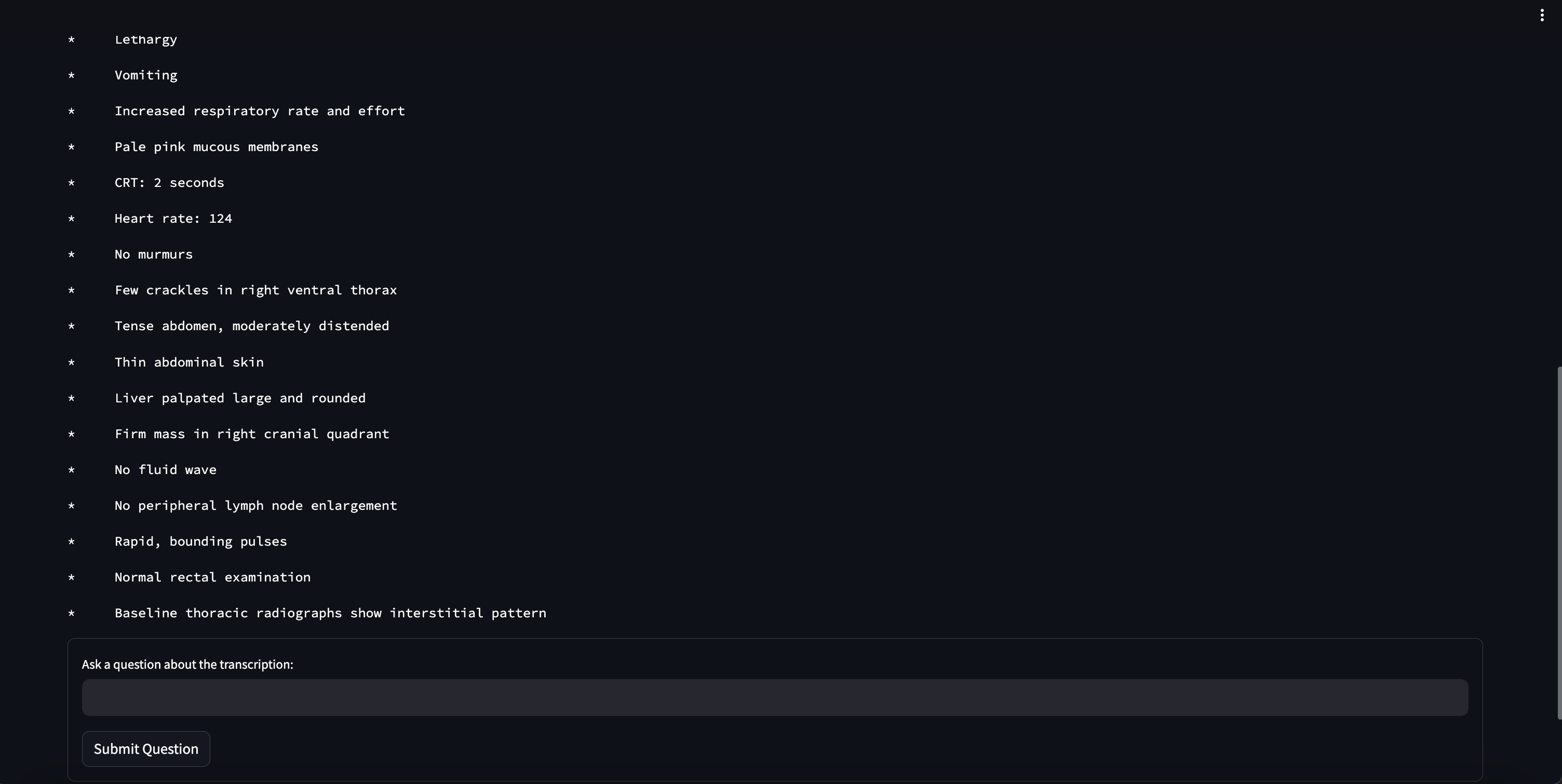

This repo will contain explorations on using LLMs and automatic speech recognition (ASR) to process different types of medical data. Here is an example of the Streamlit app. Below is a sample transcribed patient history. This can then be summarized to pull out pertinent information. The audio clip used here is

./audio/sample_patient_history.wav.
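Since this README later mentions adjusting the audio sample rate for Whisper, it can help to check a clip's header before transcribing it. The following is a minimal sketch using only Python's standard `wave` module. The function name `describe_wav` and the returned keys are hypothetical and are not taken from this repo. The sketch assumes an uncompressed PCM WAV file and a 16 kHz target rate, which is the rate Whisper models expect.

```python
import wave

# Assumption: Whisper models expect 16 kHz input audio.
WHISPER_SAMPLE_RATE = 16_000


def describe_wav(path):
    """Return basic header information for an uncompressed PCM WAV file."""
    with wave.open(path, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_width_bytes": wav.getsampwidth(),
            "sample_rate": rate,
            "duration_s": frames / rate,
            # True when the clip would need resampling before transcription
            "needs_resampling": rate != WHISPER_SAMPLE_RATE,
        }
```

For example, `describe_wav("./audio/sample_patient_history.wav")` would report whether the sample clip needs resampling before it is passed to the ASR model.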

The Docker image is defined in ./Dockerfile. Build it with the following command, run from the root directory:

docker build --build-arg WB_API_KEY=<your_api_key> . -f Dockerfile --rm -t llm-finetuning:latest

First navigate to this repo on your local machine. Then run the container:

docker run --gpus all --name medical-summarization-and-transcription -it --rm -p 8888:8888 -p 8501:8501 -p 8000:8000 --entrypoint /bin/bash -w /medical-summarization-and-transcription -v $(pwd):/medical-summarization-and-transcription llm-finetuning:latest

Inside the container, start JupyterLab:

jupyter lab --ip 0.0.0.0 --no-browser --allow-root --NotebookApp.token=''

On the host machine, open this URL:

localhost:8888/<YOUR TREE HERE>

Inside the container, start the Streamlit app:

streamlit run app.py

On the host machine, open:

localhost:8501

- Evaluate pre-trained models on the PubMed dataset: ./notebooks/dataset.ipynb

- Adjust audio sample rate to preprocess for the Whisper model: ./notebooks/speech_sample_rate.ipynb

- Speech to text to summarization Streamlit app: ./app.py
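The sample-rate adjustment mentioned in the list above can be illustrated with a small standalone sketch. It is not the code from the notebook, whose method is not shown here. It assumes a mono signal held as a list of numbers and a 16 kHz target, and it uses plain linear interpolation with no anti-aliasing filter, so a dedicated audio library is the better choice for real preprocessing. The name `resample_linear` is hypothetical.

```python
def resample_linear(samples, src_rate, dst_rate=16_000):
    """Resample a mono signal by linear interpolation between neighbours."""
    if src_rate <= 0 or dst_rate <= 0:
        raise ValueError("sample rates must be positive")
    if not samples or src_rate == dst_rate:
        return list(samples)

    # Number of output samples covering the same duration as the input.
    n_out = max(1, round(len(samples) * dst_rate / src_rate))
    step = src_rate / dst_rate  # source samples advanced per output sample
    last = len(samples) - 1

    out = []
    for i in range(n_out):
        pos = i * step
        left = min(int(pos), last)
        right = min(left + 1, last)  # clamp at the end of the signal
        frac = pos - left
        out.append(samples[left] * (1 - frac) + samples[right] * frac)
    return out
```

A one-second clip recorded at 44.1 kHz would come out as 16,000 samples, covering the same duration at the lower rate.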

- Evaluate pre-trained models on the PubMed dataset

- Train a model on the PubMed dataset

- Audio transcription: transcribe conversations between patients and doctors

- Experiment with the Phi-3-mini 4k and 128k Instruct models, and with the Llama 3 8B and 8B Instruct models.
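For the evaluation item in the list above, one possible way to score a generated summary against a reference is unigram overlap in the style of ROUGE-1. This README does not say which metric the repo uses, so the metric choice, the whitespace tokenization, and the name `rouge1_f1` are all assumptions made for illustration.

```python
from collections import Counter


def rouge1_f1(reference, candidate):
    """Unigram-overlap F1 between a reference and a candidate summary."""
    # Assumption: lowercase whitespace tokenization, no stemming.
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(candidate.lower().split())

    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((ref_counts & cand_counts).values())
    if overlap == 0:
        return 0.0

    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    return 2 * precision * recall / (precision + recall)
```

Averaging this score over a held-out set of abstracts would give a rough baseline for comparing models before and after fine-tuning.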