Labs for CSE7323: Mobile Apps for Sensing & Learning

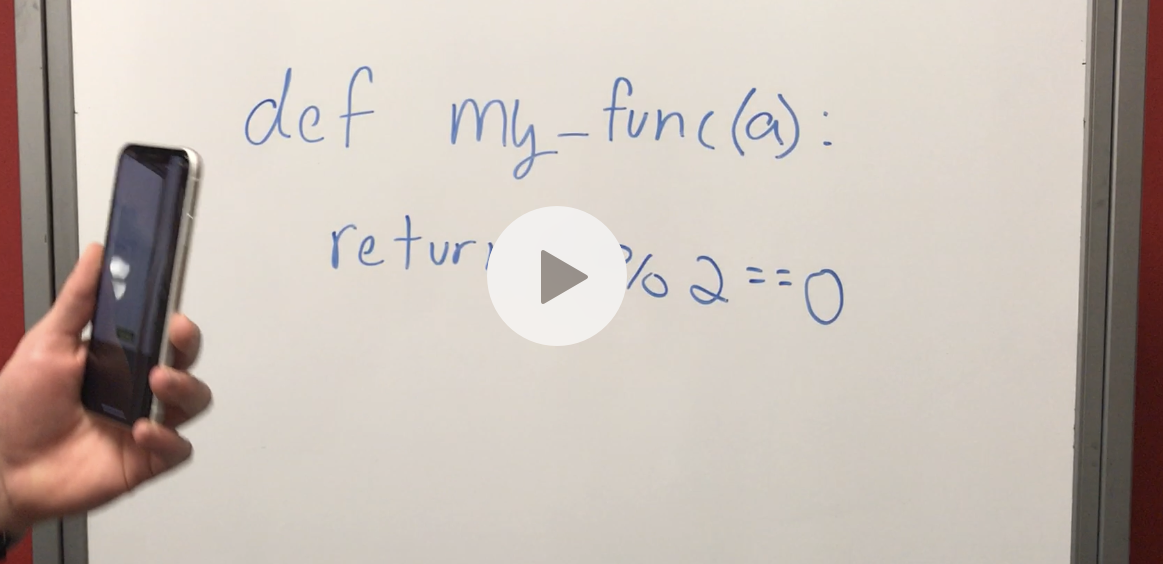

Our final project turns whiteboard code into the real thing! Using SwiftOCR, we parse and interpret Python code as it's written on a board. That code is then sent to a server, where a Docker container is created and the code is evaluated. The result is then shown to the user in real time.
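
The server-side step could look roughly like the sketch below. This is not the project's actual server code: the `python:3-slim` image, the memory limit, the timeout, and the function names `build_docker_command` and `run_in_container` are all assumptions made for illustration. The idea is simply to hand the recognized text to a throwaway container and return whatever it prints.

```python
import subprocess


def build_docker_command(code, image="python:3-slim", memory="128m"):
    """Build the argv for running `code` in a throwaway container.

    The image name and memory limit are illustrative assumptions.
    """
    return [
        "docker", "run",
        "--rm",               # delete the container when it exits
        "--network", "none",  # untrusted code gets no network access
        "--memory", memory,   # cap memory usage
        image,
        "python", "-c", code,
    ]


def run_in_container(code, timeout_seconds=10):
    """Run `code` in Docker and return its stdout, or stderr on failure.

    Raises subprocess.TimeoutExpired if the code runs too long.
    Requires a local Docker daemon.
    """
    completed = subprocess.run(
        build_docker_command(code),
        capture_output=True,
        text=True,
        timeout=timeout_seconds,
    )
    if completed.returncode == 0:
        return completed.stdout
    return completed.stderr
```

Passing the code as a list argument (rather than building a shell string) avoids shell quoting problems with whatever the OCR step produces.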

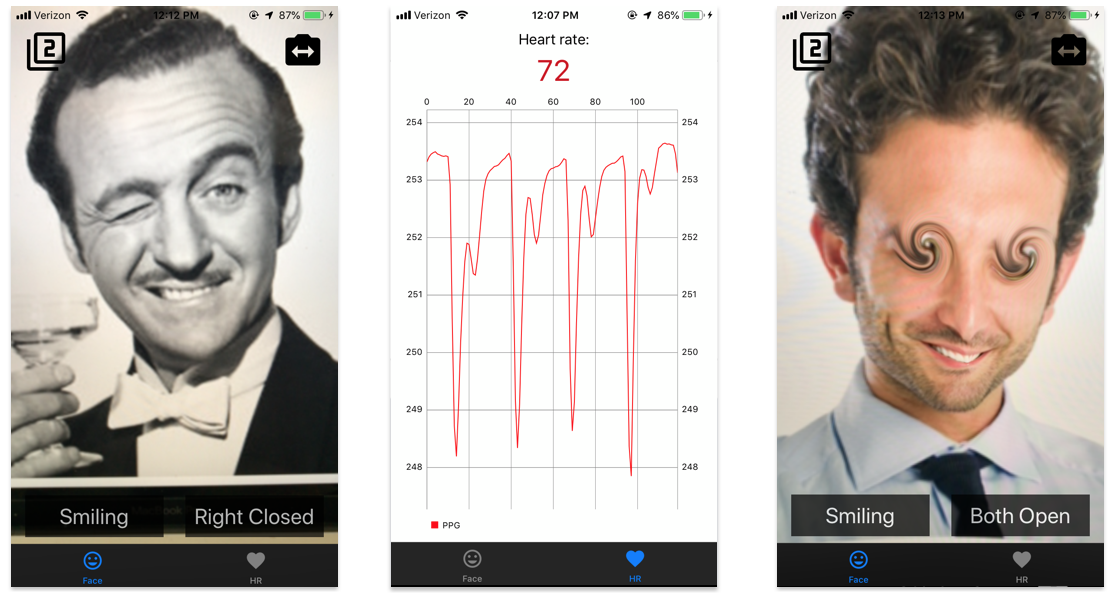

This lab is a little weird - we made use of the iPhone's cameras to do some fun things. The first half of the app is able to detect a face, determine which of the person's eyes are open, and even tell if the person is smiling! The other part of the app uses the phone's camera to measure heart rate. Just put your finger over the rear camera, and your heart rate is graphed live on the screen.
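
One common way to get a heart rate from a covered camera is to track the average brightness of each frame, which rises and falls slightly with every pulse. The sketch below shows that idea in Python rather than the app's own code; the function name `estimate_bpm` and the zero-crossing approach are assumptions, and the lab itself may do something different.

```python
def estimate_bpm(brightness, fps):
    """Estimate beats per minute from per-frame mean brightness values.

    `brightness` holds one number per camera frame and `fps` is the
    frame rate. Returns None when fewer than two beats are found.
    """
    if len(brightness) < 2:
        return None

    # Remove the constant offset so the pulse oscillates around zero.
    mean = sum(brightness) / len(brightness)
    centered = [b - mean for b in brightness]

    # Each upward zero crossing marks the start of one pulse cycle.
    crossings = [
        i for i in range(1, len(centered))
        if centered[i - 1] < 0 <= centered[i]
    ]
    if len(crossings) < 2:
        return None

    # Average number of frames between consecutive beats.
    frames_per_beat = (crossings[-1] - crossings[0]) / (len(crossings) - 1)
    return 60.0 * fps / frames_per_beat
```

Real camera data is noisy, so a practical version would likely smooth or band-pass filter the brightness values before counting crossings.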

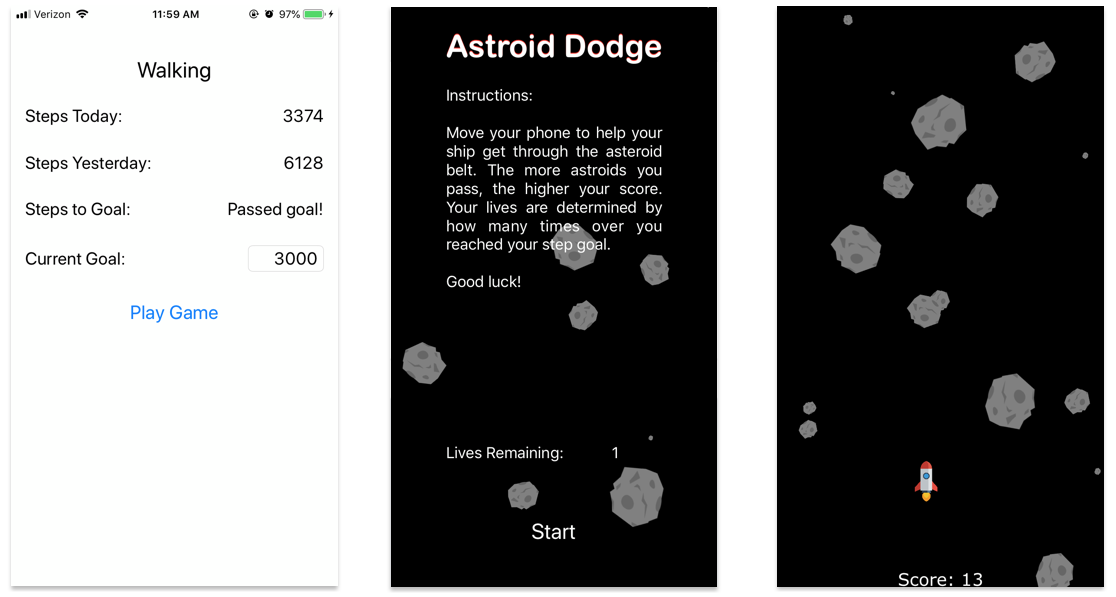

This app tracks your walking in the background and rewards you with a game! If you pass your step goal for the day, you get to play Asteroid Dodge: a game where you guide a spaceship through an asteroid field.

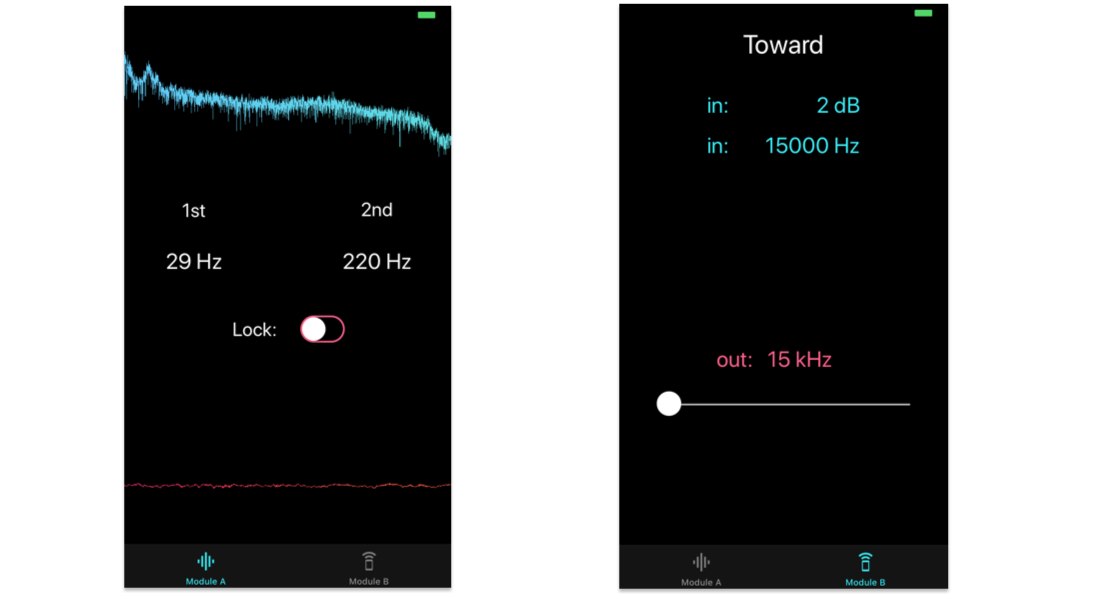

In this lab, we use a fast Fourier transform (FFT) to find the highest-magnitude frequencies picked up by the iPhone's microphone. We also make use of Doppler shifts to determine whether someone is moving their hand toward or away from the microphone.
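
Both ideas can be sketched in a few lines of plain Python. This is an illustration, not the lab's implementation: the function names, the window size, and the thresholds are assumptions, and the Doppler part assumes the phone plays a steady tone and listens for its reflection (a hand moving toward the microphone pushes reflected energy slightly above the tone, a hand moving away pushes it below).

```python
import cmath
import math


def fft(samples):
    """Recursive radix-2 FFT; len(samples) must be a power of two."""
    n = len(samples)
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


def magnitudes(samples):
    """Magnitudes of the bins from 0 Hz up to just below sample_rate / 2."""
    return [abs(c) for c in fft(samples)[: len(samples) // 2]]


def peak_frequencies(samples, sample_rate, count=2):
    """Return the `count` strongest local peaks, in Hz, strongest first."""
    mags = magnitudes(samples)
    bin_width = sample_rate / len(samples)
    peaks = [
        k for k in range(1, len(mags) - 1)
        if mags[k] > mags[k - 1] and mags[k] >= mags[k + 1]
    ]
    peaks.sort(key=lambda k: mags[k], reverse=True)
    return [k * bin_width for k in peaks[:count]]


def doppler_direction(mags, tone_bin, window=5, ratio=2.0, floor=0.05):
    """Compare energy just above and just below the emitted tone's bin.

    `window`, `ratio`, and `floor` are illustrative tuning values.
    Returns "toward", "away", or "none".
    """
    above = sum(mags[tone_bin + 1 : tone_bin + 1 + window])
    below = sum(mags[max(tone_bin - window, 0) : tone_bin])
    # Ignore sidebands that are tiny compared with the tone itself.
    if max(above, below) < floor * mags[tone_bin]:
        return "none"
    if above > ratio * below:
        return "toward"
    if below > ratio * above:
        return "away"
    return "none"
```

With `n` samples at a given sample rate, each FFT bin is `sample_rate / n` Hz wide, so a longer buffer gives finer frequency resolution at the cost of slower updates.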

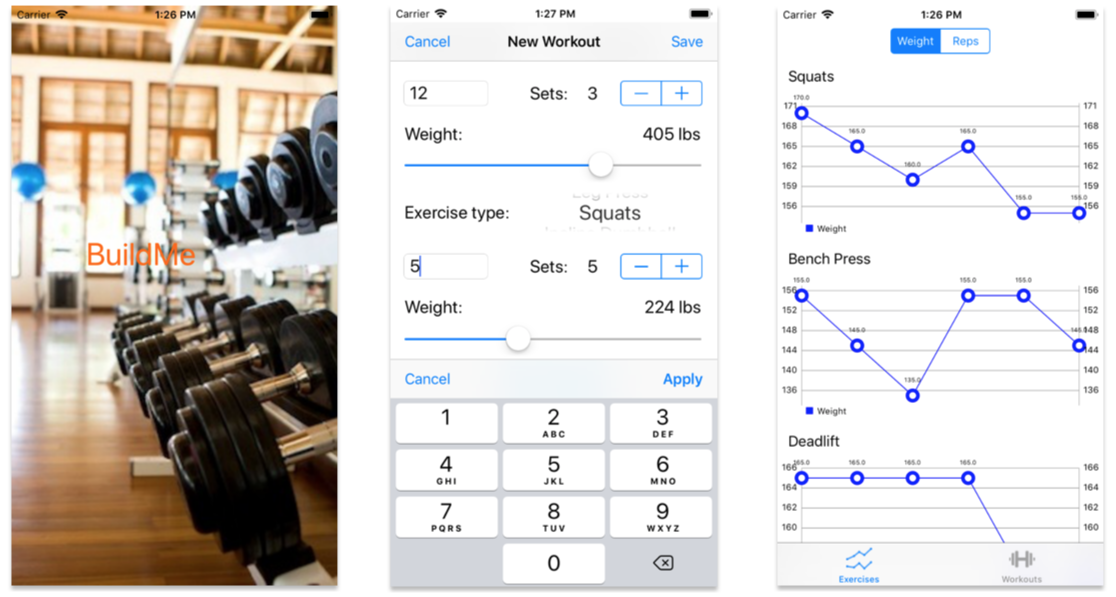

This app helps users track their workout progress.