10 example images from the MNIST dataset

| Seed = 1 | Seed = 2 |

|---|---|

|

|

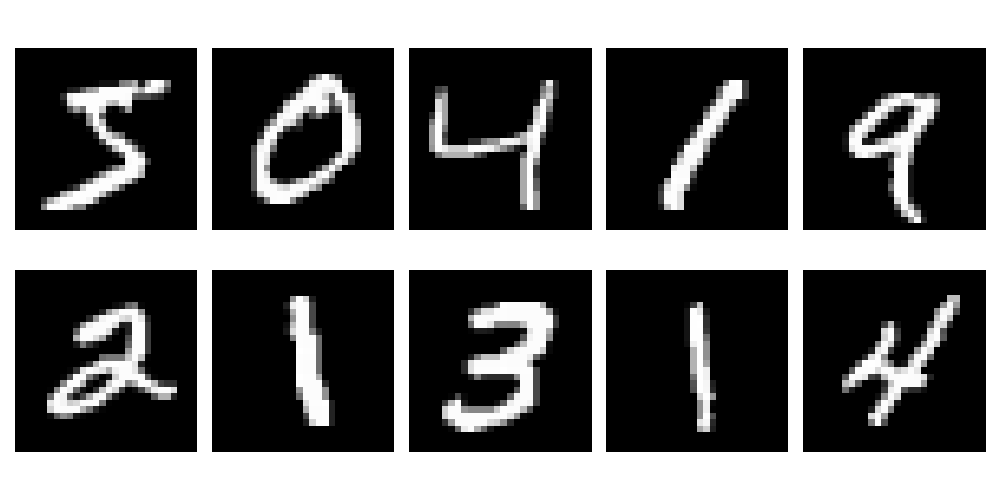

| Diffusion model trained on MNIST generating images |

Images after applying Gaussian noise for 200 timesteps

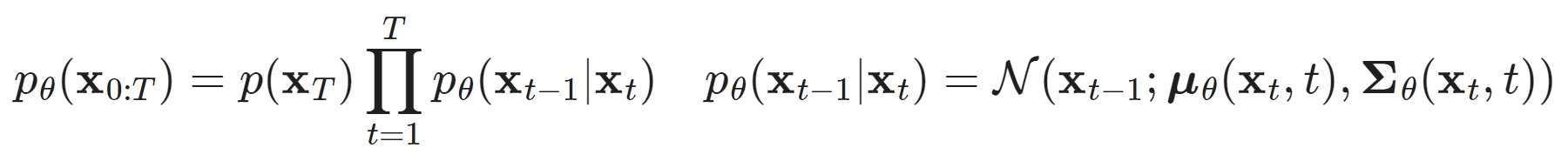

The forward process is a Markov chain that sequentially adds Gaussian noise to an image over a fixed number of timesteps:

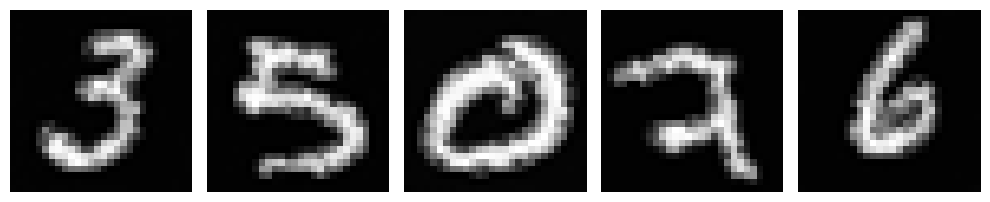
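The noising process can be sketched in plain Python. This is a minimal sketch, not this repository's code: it assumes a linear beta schedule with T = 1000 and $\beta$ running from 1e-4 to 0.02 (the defaults of the original DDPM paper), and treats an image as a flat list of pixel values scaled to [-1, 1]. The function names are hypothetical. `forward_step` applies one Markov step $x_t = \sqrt{1-\beta_t}\,x_{t-1} + \sqrt{\beta_t}\,\epsilon$, while `noise_to_step` uses the closed form $x_t = \sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon$ with $\bar\alpha_t = \prod_{s \le t}(1-\beta_s)$ to jump straight to a given step.

```python
import math
import random

# Assumption: linear schedule with T = 1000 and beta in [1e-4, 0.02] (DDPM defaults).
# This repository's actual schedule may differ.
T = 1000
BETA_START, BETA_END = 1e-4, 0.02


def linear_betas(num_steps=T, start=BETA_START, end=BETA_END):
    """Noise variances beta_1..beta_T, spaced linearly."""
    step = (end - start) / (num_steps - 1)
    return [start + i * step for i in range(num_steps)]


def alpha_bars(betas):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def forward_step(x_prev, beta, rng=random):
    """One Markov step: x_t = sqrt(1 - beta_t) * x_{t-1} + sqrt(beta_t) * eps."""
    return [math.sqrt(1.0 - beta) * x + math.sqrt(beta) * rng.gauss(0.0, 1.0)
            for x in x_prev]


def noise_to_step(x0, t, abars, eps=None, rng=random):
    """Closed form q(x_t | x_0): jump from x_0 directly to step t (1-indexed)."""
    if eps is None:
        eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = abars[t - 1]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
```

For example, `noise_to_step(x0, 200, alpha_bars(linear_betas()))` draws a sample with the same distribution as 200 sequential `forward_step` calls, without looping through every intermediate step.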

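Correctly reversing the noising process above would recreate the original sample image. However, that would require the reverse conditional distribution $q(x_{t-1} \mid x_t)$, which is intractable because it depends on the entire data distribution, so a neural network is trained to approximate it instead.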
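DDPM-style models learn the reverse process by training a network to predict the noise that was added at a randomly chosen timestep. The sketch below computes one such training loss (the simplified noise-prediction objective from the original DDPM paper). `predict_noise(x_t, t)` is a hypothetical stand-in for the network, the schedule uses the same assumed DDPM defaults, and whether this repository's `train.py` uses exactly this objective is an assumption.

```python
import math
import random


def make_alpha_bars(num_steps=1000, start=1e-4, end=0.02):
    """alpha_bar_t for an assumed linear beta schedule."""
    step = (end - start) / (num_steps - 1)
    out, prod = [], 1.0
    for i in range(num_steps):
        prod *= 1.0 - (start + i * step)
        out.append(prod)
    return out


def training_loss(x0, predict_noise, abars, rng=random):
    """Simplified DDPM loss: mean over pixels of (eps - eps_theta(x_t, t))^2."""
    t = rng.randint(1, len(abars))          # timestep sampled uniformly from 1..T
    eps = [rng.gauss(0.0, 1.0) for _ in x0]  # noise the model has to recover
    a = abars[t - 1]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    eps_hat = predict_noise(x_t, t)          # hypothetical network call
    return sum((e - h) ** 2 for e, h in zip(eps, eps_hat)) / len(x0)
```

A network that always predicted the exact added noise would reach a loss of zero; in practice the loss is averaged over mini-batches and minimized with gradient descent.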

Given a sufficiently trained model, we can then feed it random Gaussian noise to generate new images resembling the original dataset. Sample generated images of handwritten digits are shown below.
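Generation runs the learned reverse process starting from pure noise. A minimal sketch of DDPM ancestral sampling, assuming a hypothetical `predict_noise(x_t, t)` callable that returns the model's noise estimate, the same assumed linear schedule, and the variance choice $\sigma_t^2 = \beta_t$. The `seed` argument makes a run reproducible, so different seeds give different digits from the same model.

```python
import math
import random


def linear_betas(num_steps=1000, start=1e-4, end=0.02):
    """Assumed linear noise schedule beta_1..beta_T (DDPM defaults)."""
    step = (end - start) / (num_steps - 1)
    return [start + i * step for i in range(num_steps)]


def sample(predict_noise, num_pixels=28 * 28, betas=None, seed=None):
    """DDPM ancestral sampling: start at x_T ~ N(0, I) and denoise down to x_0."""
    if betas is None:
        betas = linear_betas()
    rng = random.Random(seed)

    # Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s).
    abars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        abars.append(prod)

    x = [rng.gauss(0.0, 1.0) for _ in range(num_pixels)]  # x_T: pure Gaussian noise
    for t in range(len(betas), 0, -1):
        beta, abar = betas[t - 1], abars[t - 1]
        eps_hat = predict_noise(x, t)  # model's estimate of the added noise
        # Mean of p(x_{t-1} | x_t): (x_t - beta_t / sqrt(1 - abar_t) * eps_hat) / sqrt(alpha_t)
        coef = beta / math.sqrt(1.0 - abar)
        x = [(xi - coef * ei) / math.sqrt(1.0 - beta) for xi, ei in zip(x, eps_hat)]
        if t > 1:  # add fresh noise on every step except the last
            sigma = math.sqrt(beta)  # assumed variance choice sigma_t^2 = beta_t
            x = [xi + sigma * rng.gauss(0.0, 1.0) for xi in x]
    return x  # flattened image; reshape to 28x28 for display
```

With a trained network wrapped as `predict_noise`, `sample(predict_noise, seed=1)` would return 784 values to reshape into a 28×28 digit.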

To train the model yourself, run `train.py` with the following arguments: `-e [num_epochs] -l [learning_rate] -b [batch_size]`. Training for 5 epochs took around 3 hours on my machine; the corresponding checkpoint file is at `./models/checkpoint_5ep.pth`.
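For reference, a command-line interface with the flags listed above could be parsed with `argparse` as follows. This is a hypothetical sketch, not the contents of `train.py`: the destination names reuse the placeholders above, while the help strings and the choice to make every flag required are assumptions.

```python
import argparse


def parse_args(argv=None):
    """Hypothetical parser mirroring the documented -e/-l/-b flags."""
    parser = argparse.ArgumentParser(description="Train a diffusion model on MNIST")
    parser.add_argument("-e", dest="num_epochs", type=int, required=True,
                        help="number of training epochs")
    parser.add_argument("-l", dest="learning_rate", type=float, required=True,
                        help="optimizer learning rate")
    parser.add_argument("-b", dest="batch_size", type=int, required=True,
                        help="mini-batch size")
    return parser.parse_args(argv)
```

An invocation would then look like `python train.py -e 5 -l 0.001 -b 64`, where the learning rate and batch size are illustrative values only, not the settings used for the provided checkpoint.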