Results for snimu/rebasin, a library that implements the methods described in the "Git Re-basin" paper by Ainsworth et al. for (almost) arbitrary models.

For acknowledgements, see here. For terminology, see here.

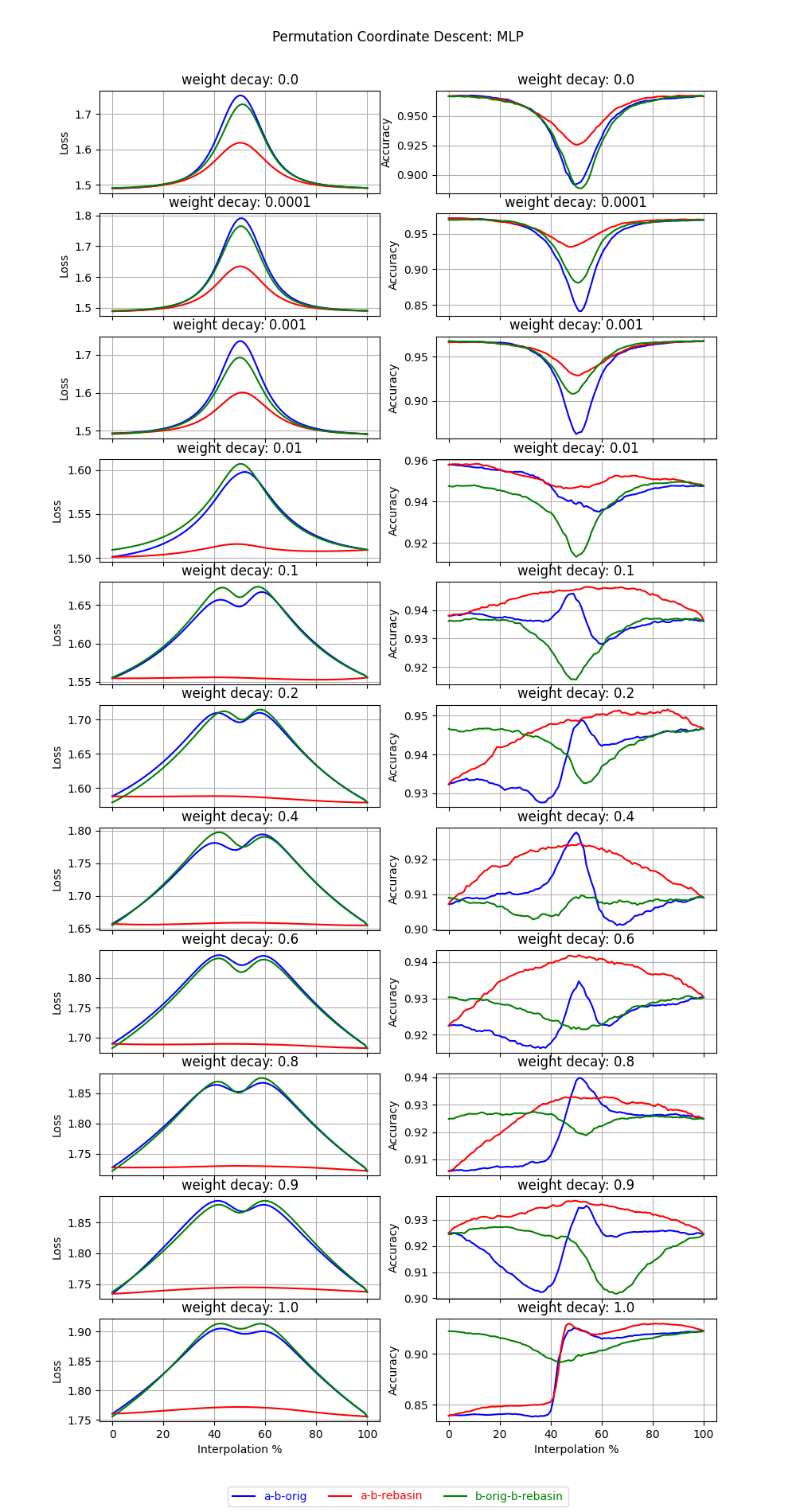

Results for a simple MLP. These are very positive!

The loss barrier was successfully removed in PermutationCoordinateDescent,

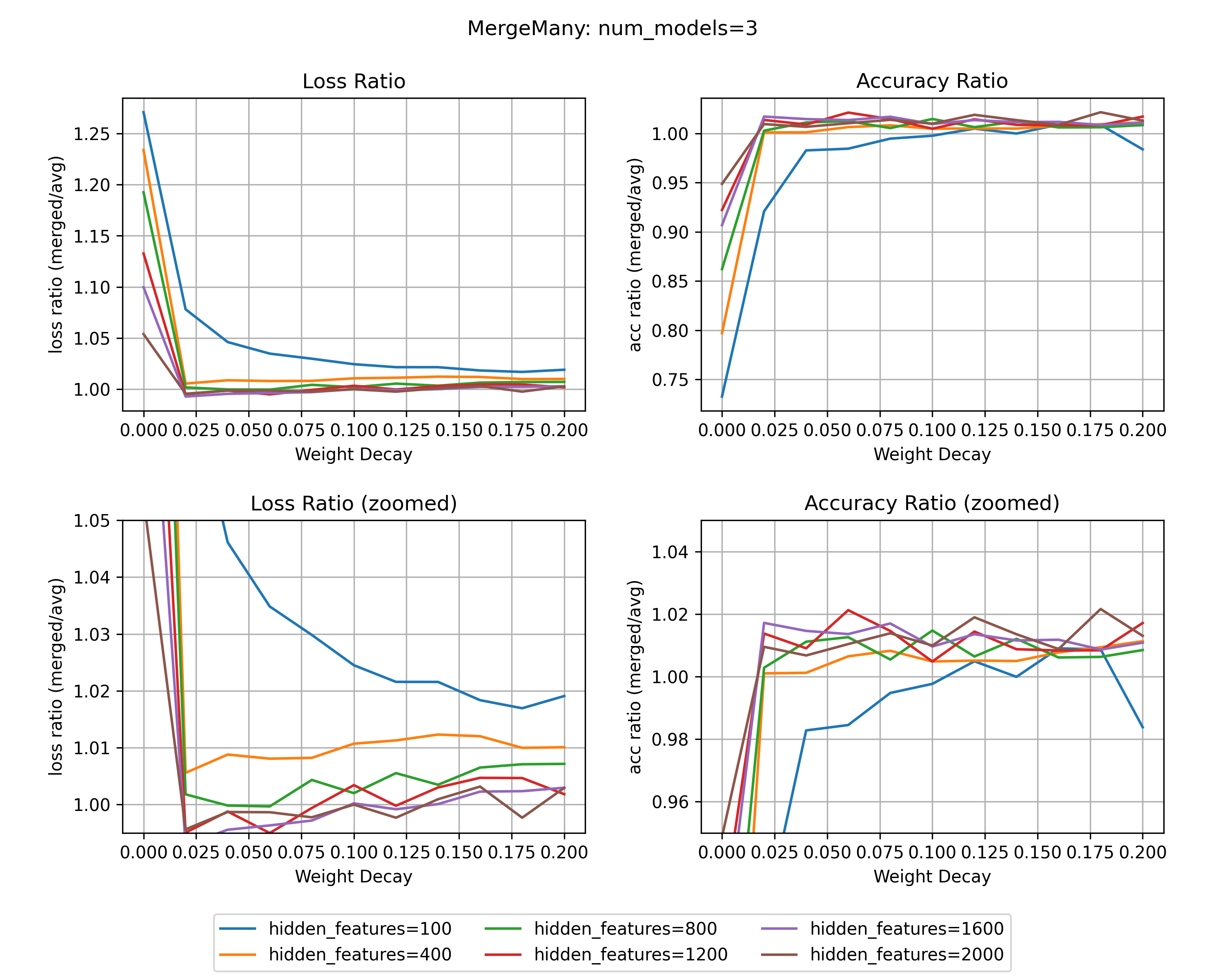

and MergeMany worked well, too.
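For readers unfamiliar with the term, a loss barrier can be measured by linearly interpolating between the weights of two models and comparing the worst loss along that path with the losses at the endpoints. The following is a minimal, self-contained sketch on a toy one-parameter problem, not the code used for these results. `interpolate`, `loss_barrier`, and `toy_loss` are hypothetical names, and subtracting the mean of the endpoint losses is one common convention that is assumed here.

```python
def interpolate(params_a, params_b, alpha):
    """Linear interpolation between two flat parameter lists."""
    return [(1.0 - alpha) * a + alpha * b for a, b in zip(params_a, params_b)]


def loss_barrier(loss_fn, params_a, params_b, steps=11):
    """Highest loss on the straight path from A to B, minus the mean endpoint loss."""
    if steps < 2:
        raise ValueError("need at least the two endpoints")
    alphas = [i / (steps - 1) for i in range(steps)]
    path_losses = [loss_fn(interpolate(params_a, params_b, a)) for a in alphas]
    endpoint_mean = 0.5 * (path_losses[0] + path_losses[-1])
    return max(path_losses) - endpoint_mean


def toy_loss(params):
    # Toy loss with two equally good minima at -1 and +1 (illustration only).
    return (params[0] ** 2 - 1.0) ** 2


barrier = loss_barrier(toy_loss, [-1.0], [1.0])
print(barrier)
```

In this toy case both endpoints have zero loss while the midpoint has a loss of 1, so the sketch reports a barrier of 1. A barrier that has been removed corresponds to a value close to 0.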

For detailed results, read mlp/RESULTS.md.

Here's a taste:

The L2-regularizer (weight_decay) improves the performance of PermutationCoordinateDescent!

In MergeMany, I found that a high weight_decay is similarly important, though here I also looked at the effect of the feature size:

Clearly, a larger feature size is beneficial, but the effect of weight_decay is stronger.

Results from forking tysam-code/hlb-CIFAR10. See snimu/hlb-CIFAR10 for the fork.

For results, read RESULTS.md. Raw data at hlb-CIFAR10/results.

Summary:

- PermutationCoordinateDescent seems to work fairly well, though not perfectly.
- MergeMany doesn't work as described in the paper, but when the models to be merged are trained on different datasets and the merged model is then retrained on yet another dataset, the results are promising.

Results from forking tysam-code/hlb-gpt. See snimu/hlb-gpt for the fork.

There is currently still an error in the code, so the results are not meaningful. I have therefore not written a RESULTS.md yet.

If you would like to look at the raw data from an experiment anyway, you can find it at hlb-gpt/results. I just want to repeat that this data was produced by erroneous code; otherwise, model_b_original and model_b_rebasin should have the same loss and accuracy, at least approximately.
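The sanity check described above rests on the fact that permuting the hidden units of a network, together with the matching weights of the next layer, leaves its function unchanged. The sketch below demonstrates this on a tiny pure-Python MLP. It is not the library's implementation, and `mlp` and `permute_hidden` are hypothetical helpers introduced only for illustration.

```python
import random


def mlp(x, w1, b1, w2, b2):
    """Two-layer MLP with a ReLU; each weight matrix is a list of rows, one per output unit."""
    hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(w2, b2)]


def permute_hidden(w1, b1, w2, perm):
    """Reorder the hidden units and reorder the next layer's inputs to match."""
    w1_p = [w1[i] for i in perm]                    # rows of the first layer
    b1_p = [b1[i] for i in perm]                    # biases of the first layer
    w2_p = [[row[i] for i in perm] for row in w2]   # columns of the second layer
    return w1_p, b1_p, w2_p


rng = random.Random(0)
n_in, n_hidden, n_out = 3, 5, 2
w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b1 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
w2 = [[rng.uniform(-1, 1) for _ in range(n_hidden)] for _ in range(n_out)]
b2 = [rng.uniform(-1, 1) for _ in range(n_out)]

perm = list(range(n_hidden))
rng.shuffle(perm)
w1_p, b1_p, w2_p = permute_hidden(w1, b1, w2, perm)

x = [rng.uniform(-1, 1) for _ in range(n_in)]
out_original = mlp(x, w1, b1, w2, b2)
out_permuted = mlp(x, w1_p, b1_p, w2_p, b2)
max_diff = max(abs(a - b) for a, b in zip(out_original, out_permuted))
print(max_diff)  # at most floating-point rounding separates the two outputs
```

If the two outputs differ by more than rounding error, the permutation was not applied consistently across layers, which is the kind of problem a mismatch between model_b_original and model_b_rebasin suggests.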

Results for torchvision.models.mobilenet_v3_large.

For results, read RESULTS.md.

Summary:

This model contains several BatchNorm2d layers, whose running_stats have to be recomputed after re-basing. On ImageNet, however, this takes a lot of compute, for which I don't have the budget. What I can say is that the results improve as I increase the percentage of the ImageNet data used for recalculating the running_stats, so if someone would like to recompute the running_stats using more data (ideally all of ImageNet), I would be very interested in the results.
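For anyone attempting this, the following is a minimal sketch of how BatchNorm running statistics can be recomputed in plain PyTorch. It is not the code in rebasin/tests/apply_to_real_models: `recompute_bn_stats` is a hypothetical helper, and the small model and random tensors in the usage part merely stand in for mobilenet_v3_large and ImageNet batches. The sketch relies on `reset_running_stats()` and on setting `momentum` to `None`, which makes PyTorch use a cumulative average over all batches seen.

```python
import torch
from torch import nn


@torch.no_grad()
def recompute_bn_stats(model, batches):
    """Reset all BatchNorm running statistics and re-estimate them from `batches`."""
    bn_layers = [m for m in model.modules()
                 if isinstance(m, nn.modules.batchnorm._BatchNorm)]
    if not bn_layers:
        return model

    saved_momenta = [m.momentum for m in bn_layers]
    for m in bn_layers:
        m.reset_running_stats()
        m.momentum = None  # None selects a cumulative (simple) average

    was_training = model.training
    model.train()  # running statistics are only updated in training mode
    for x in batches:
        model(x)

    # Restore the original momentum values and train/eval mode.
    for m, momentum in zip(bn_layers, saved_momenta):
        m.momentum = momentum
    model.train(was_training)
    return model


# Stand-in model and data (assumptions for illustration only).
model = nn.Sequential(nn.Conv2d(3, 4, kernel_size=3), nn.BatchNorm2d(4), nn.ReLU())
model.eval()
batches = [torch.randn(8, 3, 16, 16) for _ in range(3)]
recompute_bn_stats(model, batches)
print(int(model[1].num_batches_tracked))
```

Feeding a larger share of the dataset through `batches` corresponds to the "percentage of the ImageNet data" mentioned above. No gradients are computed, so the cost is that of forward passes only.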

If you do want to run that test, look at rebasin/tests/apply_to_real_models and run it with:

    python apply_to_real_models.py -m mobilenet_v3_large -d imagenet -s 99 -v

Make sure to install the requirements first.