Deploying your Java application in a Kubernetes cluster can feel like Alice in Wonderland. You keep going down the rabbit hole and don’t know how to make that ride comfortable. This repository explains how a Java application can be deployed, tested, debugged and monitored in Kubernetes. It also covers canary deployments and deployment pipelines.

We will use a simple Java application built using Spring Boot. The application publishes a REST endpoint that can be invoked at http://{host}:{port}/hello.

The source code is in the app directory.

-

java

-

maven

-

docker — Once docker is installed, enable Kubernetes in Docker. Docker → Preferences → Kubernetes → Enable Kubernetes

-

kubectl cli: https://kubernetes.io/docs/tasks/tools/install-kubectl/

-

Run application:

cd app
mvn spring-boot:run

-

Test application

curl http://localhost:8080/hello

-

Create m2.tar.gz:

mvn -Dmaven.repo.local=./m2 clean package
tar cvf m2.tar.gz ./m2

-

Create Docker image:

docker image build -t arungupta/greeting .

Explain multi-stage Dockerfile.
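A multi-stage Dockerfile keeps the Maven toolchain in a throwaway build stage and copies only the packaged archive into a smaller runtime image. The following is a minimal sketch, not the repository's actual Dockerfile: the base image tags, the /usr/src/app working directory and the file name Dockerfile.sketch are assumptions made for illustration, and target/app.war is the artifact name that appears later in this document.

```shell
# Write a hypothetical two-stage Dockerfile; image tags and paths are assumptions.
cat > Dockerfile.sketch <<'EOF'
# Stage 1: compile and package with Maven (this stage is discarded after the build)
FROM maven:3-jdk-8 AS build
WORKDIR /usr/src/app
COPY pom.xml .
COPY src ./src
RUN mvn clean package

# Stage 2: runtime image that holds only a JRE and the packaged application
FROM openjdk:8-jre
COPY --from=build /usr/src/app/target/app.war /app.war
EXPOSE 8080
CMD ["java", "-jar", "/app.war"]
EOF
```

A file like this could be built with docker image build -f Dockerfile.sketch -t <your-tag> . so that only the second stage ends up in the final image.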

Build Docker Image using Jib

-

Create Docker image:

mvn compile jib:build -Pjib

The benefits of using Jib over a multi-stage Dockerfile build include:

-

Don’t need to install Docker or run a Docker daemon

-

Don’t need to write a Dockerfile or build the archive of m2 dependencies

-

Much faster

-

Builds reproducibly

The above builds directly to your Docker registry. Alternatively, Jib can also build to a Docker daemon:

mvn compile jib:dockerBuild -Pjib

-

Download JDK 11 and scp it to an Amazon Linux instance

-

Install JDK 11:

sudo yum install jdk-11.0.1_linux-x64_bin.rpm

-

Create a custom JRE for the Spring Boot application:

cp target/app.war target/app.jar
jlink \
  --output myjre \
  --add-modules $(jdeps --print-module-deps target/app.jar),\
java.xml,jdk.unsupported,java.sql,java.naming,java.desktop,\
java.management,java.security.jgss,java.instrument

-

Build Docker image using this custom JRE:

docker image build --file Dockerfile.jre -t arungupta/greeting:jre-slim .

-

List the Docker images and show the difference in sizes:

[ec2-user@ip-172-31-21-7 app]$ docker image ls | grep greeting
arungupta/greeting   jre-slim   9eed25582f36   6 seconds ago   162MB
arungupta/greeting   latest     1b7c061dad60   10 hours ago    490MB

-

Run the container:

docker container run -d -p 8080:8080 arungupta/greeting:jre-slim

-

Access the application:

curl http://localhost:8080/hello
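The contents of Dockerfile.jre are not shown above. As a rough sketch of the idea, an image built around a jlink-generated runtime only needs a small base image, the myjre directory and the application archive. The base image, the /opt paths and the file name Dockerfile.jre.sketch below are assumptions, not the repository's actual file.

```shell
# Write a hypothetical Dockerfile that runs the app on the custom JRE.
# Assumption: the base image provides a C library compatible with the JRE built above.
cat > Dockerfile.jre.sketch <<'EOF'
FROM debian:stable-slim
# Custom runtime produced by jlink (--output myjre)
COPY myjre /opt/myjre
# Application archive created in the jlink step
COPY target/app.jar /opt/app.jar
EXPOSE 8080
CMD ["/opt/myjre/bin/java", "-jar", "/opt/app.jar"]
EOF
```

Because the image carries only the modules listed in --add-modules rather than a full JDK, it can be much smaller, which is the size difference the image listing above illustrates.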

Kubernetes can be easily enabled on a development machine using Docker for Mac as explained at https://docs.docker.com/docker-for-mac/#kubernetes.

-

Ensure that Kubernetes is enabled in Docker for Mac

-

Show the list of contexts:

kubectl config get-contexts

-

Configure kubectl CLI for Kubernetes cluster

kubectl config use-context docker-for-desktop

-

Deploy the application to local Kubernetes cluster

kubectl create -f greeting-deployment.yaml   # creates a Deployment whose ReplicaSet brings up 1 pod
kubectl get deployment -o=wide               # prints info about deployments
kubectl get svc -o=wide                      # no service is deployed yet, so only the default kubernetes service is listed
kubectl get pods -o=wide                     # note the existence of the running pod

-

Can you try and access the web endpoint 'hello' using your local web browser using the IP address from the get pods command?

-

Can you try and access the web endpoint 'hello' using curl command from your local host?

-

Can you try and access the web endpoint 'hello' using curl command from within the pod itself?

kubectl exec -it greeting-5dc49d9484-ptpxf -- /bin/bash
curl localhost:8080/hello

-

-

Deploy the load balancer service to local Kubernetes cluster to access app via port 80

kubectl create -f greeting-service.yaml
kubectl get svc -o=wide

-

Now try accessing localhost via web browser or through curl

curl http://$(kubectl get svc/greeting-service -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'):80/hello

-

-

Stop the container

docker container ls
docker stop 6c8271e1e208   # docker stop <CONTAINER ID>
# wait 5-10 seconds
docker container ls

-

What just happened? There’s a container that’s running again. This is the power of K8S. In our greeting-deployment.yaml, we’ve defined a ReplicaSet ensuring that 1 replica is always running

-

K8S saw that the container was stopped so it started another one for us.

-

-

Delete the app deployment and service

kubectl delete -f greeting-deployment.yaml
docker container ls        # what do you notice here?
kubectl get svc -o=wide
kubectl delete -f greeting-service.yaml
kubectl get svc -o=wide    # notice any difference here?

-

Summary: we just used K8S to launch a simple app, and had to manage 2 YAML files to do it. A complex app (or distributed system) may need many YAML configuration files to represent the system, and at that point managing the deployment file by file with kubectl may become cumbersome. This is where Helm comes in: it lets us deploy the app with a single command.
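The two manifests used in this section are not reproduced here. As an illustration of what they express, the sketch below writes a Deployment with replicas: 1 (the setting that makes Kubernetes replace a stopped container) and a LoadBalancer Service that maps port 80 to the application's port 8080. The resource names and the app=greeting label are taken from the commands in this document; everything else, including the file name greeting-sketch.yaml, is an assumption.

```shell
# Write a hypothetical combined manifest; field values are illustrative assumptions.
cat > greeting-sketch.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: greeting
spec:
  replicas: 1                # the ReplicaSet keeps exactly one pod running
  selector:
    matchLabels:
      app: greeting
  template:
    metadata:
      labels:
        app: greeting
    spec:
      containers:
      - name: greeting
        image: arungupta/greeting
        ports:
        - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: greeting-service
spec:
  type: LoadBalancer
  selector:
    app: greeting            # routes to pods carrying this label
  ports:
  - port: 80                 # port exposed by the load balancer
    targetPort: 8080         # port the Spring Boot application listens on
EOF
```

Even for one small application this is two resources to keep in sync, for example the label used by both the selector and the pod template.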

-

Install the Helm CLI:

brew install kubernetes-helm

If Helm CLI is already installed then use brew upgrade kubernetes-helm.

-

Check Helm version:

helm version

-

Install Helm in Kubernetes cluster:

helm init

If Helm has already been initialized on the cluster, then you may have to upgrade Tiller:

helm init --upgrade

-

Install the Helm chart:

cd ..
helm install --name myapp manifests/myapp

-

Check that the pod is running:

kubectl get pods

-

Check that the service is up:

kubectl get svc

-

Access the application:

curl http://$(kubectl get svc/myapp-greeting -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'):80/hello

-

Optional: Delete the application:

helm delete --purge myapp

You can debug a Docker container and a Kubernetes Pod if they’re running locally on your machine.

This was tested using Docker for Mac/Kubernetes. Use the previously deployed Helm chart.

-

Show service:

kubectl get svc
NAME               TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                         AGE
greeting-service   LoadBalancer   10.101.39.100    <pending>     80:30854/TCP                    8m
kubernetes         ClusterIP      10.96.0.1        <none>        443/TCP                         90d
myapp-greeting     LoadBalancer   10.108.104.178   localhost     8080:32189/TCP,5005:31117/TCP   4s

Note that the debug port (5005) is also forwarded.

-

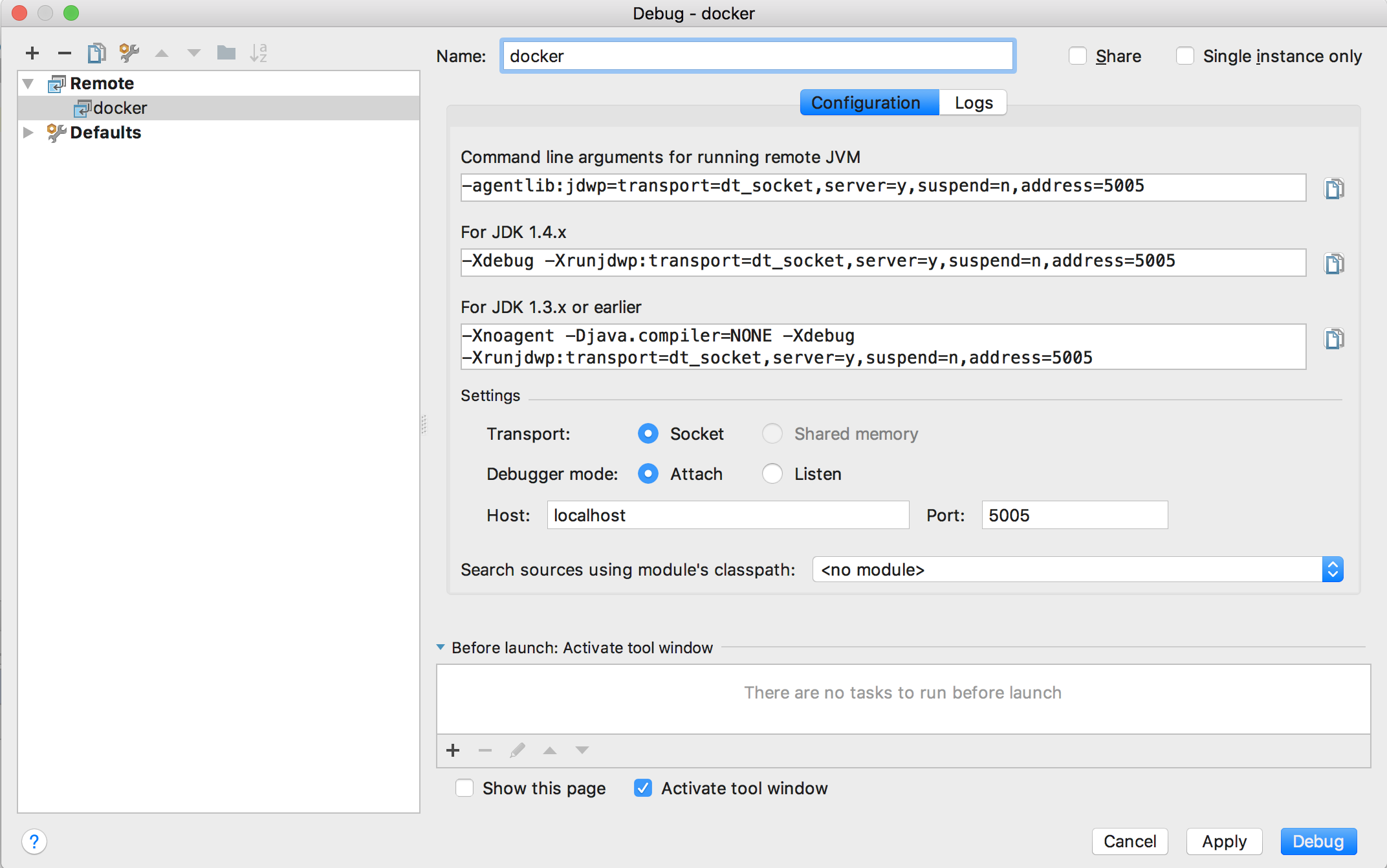

In IntelliJ,

Run,Debug,Remote: -

Click on

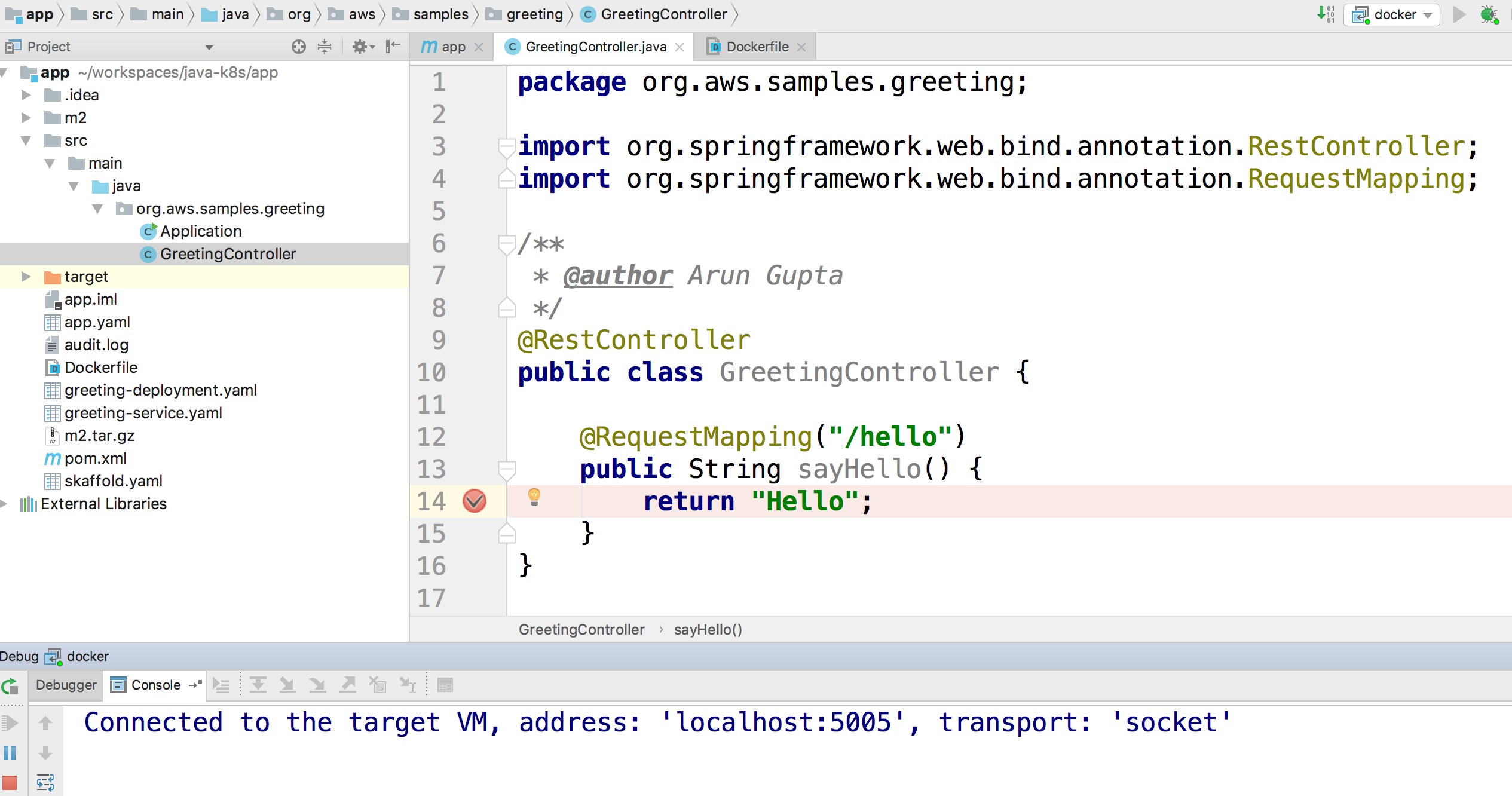

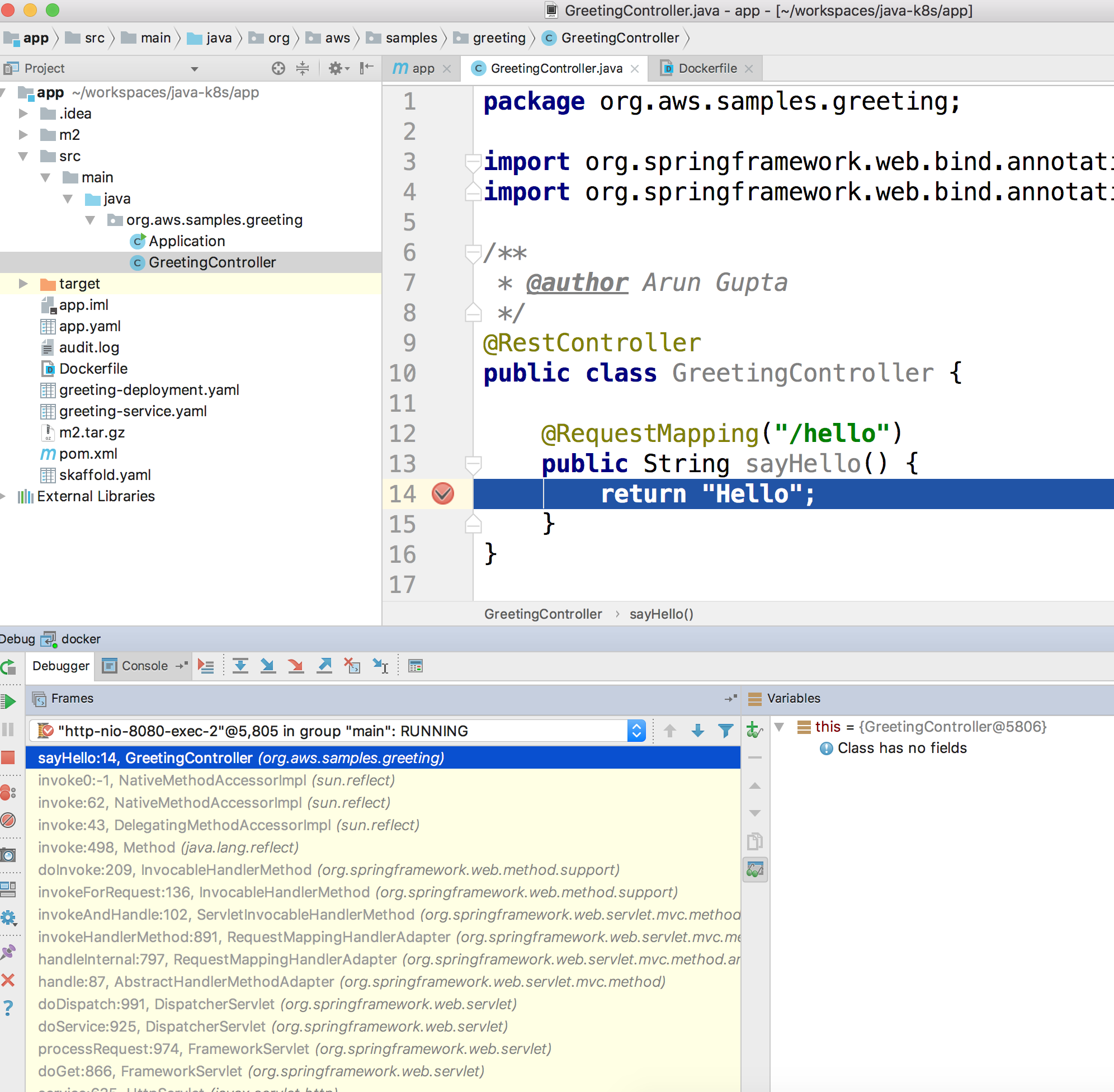

Debug, setup a breakpoint in the class: -

Access the application:

curl http://$(kubectl get svc/myapp-greeting \
  -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'):8080/hello

-

Show the breakpoint hit in IntelliJ:

-

Delete the Helm chart:

helm delete --purge myapp
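Forwarding port 5005 is only useful if the JVM inside the container was started with the JDWP debug agent listening on that port. How the greeting image enables the agent is not shown in this document; one common approach, sketched here as an assumption, is to pass the agent options through the JAVA_TOOL_OPTIONS environment variable, which the JVM reads at startup.

```shell
# Assumption: the JVM is started with the JDWP agent on port 5005.
#   transport=dt_socket : debug over a TCP socket
#   server=y            : the JVM listens for a debugger to attach
#   suspend=n           : the application starts without waiting for the debugger
# On JDK 9 and later, address=5005 binds to localhost only; address=*:5005
# is needed there to accept connections from outside the container.
JDWP_PORT=5005
export JAVA_TOOL_OPTIONS="-agentlib:jdwp=transport=dt_socket,server=y,suspend=n,address=${JDWP_PORT}"
echo "$JAVA_TOOL_OPTIONS"
```

With the variable exported, it could be handed to a container with docker container run -e JAVA_TOOL_OPTIONS ... or set in the env section of a pod spec.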

This was tested using Docker for Mac.

-

Run container:

docker container run --name greeting -p 8080:8080 -p 5005:5005 -d arungupta/greeting

-

Check container:

$ docker container ls -a
CONTAINER ID   IMAGE                COMMAND                  CREATED         STATUS         PORTS                                            NAMES
724313157e3c   arungupta/greeting   "java -jar app-swarm…"   3 seconds ago   Up 2 seconds   0.0.0.0:5005->5005/tcp, 0.0.0.0:8080->8080/tcp   greeting

-

Set up a breakpoint as explained above.

-

Access the application using curl http://localhost:8080/resources/greeting.

This application will be deployed to an Amazon EKS cluster. Let’s create the cluster first.

-

Install eksctl CLI:

brew install weaveworks/tap/eksctl

-

Download AWS IAM Authenticator:

curl -o heptio-authenticator-aws https://amazon-eks.s3-us-west-2.amazonaws.com/1.10.3/2018-07-26/bin/darwin/amd64/aws-iam-authenticator

Renaming the tool is a workaround that is required until eksctl-io/eksctl#169 is fixed. Add the directory where the CLI is downloaded to your PATH.

-

Create EKS cluster:

eksctl create cluster --name myeks --nodes 4 --region us-west-2
2018-10-25T13:45:38+02:00 [ℹ] setting availability zones to [us-west-2a us-west-2c us-west-2b]
2018-10-25T13:45:39+02:00 [ℹ] using "ami-0a54c984b9f908c81" for nodes
2018-10-25T13:45:39+02:00 [ℹ] creating EKS cluster "myeks" in "us-west-2" region
2018-10-25T13:45:39+02:00 [ℹ] will create 2 separate CloudFormation stacks for cluster itself and the initial nodegroup
2018-10-25T13:45:39+02:00 [ℹ] if you encounter any issues, check CloudFormation console or try 'eksctl utils describe-stacks --region=us-west-2 --name=myeks'
2018-10-25T13:45:39+02:00 [ℹ] creating cluster stack "eksctl-myeks-cluster"
2018-10-25T13:57:33+02:00 [ℹ] creating nodegroup stack "eksctl-myeks-nodegroup-0"
2018-10-25T14:01:18+02:00 [✔] all EKS cluster resource for "myeks" had been created
2018-10-25T14:01:18+02:00 [✔] saved kubeconfig as "/Users/argu/.kube/config"
2018-10-25T14:01:19+02:00 [ℹ] the cluster has 0 nodes
2018-10-25T14:01:19+02:00 [ℹ] waiting for at least 4 nodes to become ready
2018-10-25T14:01:50+02:00 [ℹ] the cluster has 4 nodes
2018-10-25T14:01:50+02:00 [ℹ] node "ip-192-168-161-180.us-west-2.compute.internal" is ready
2018-10-25T14:01:50+02:00 [ℹ] node "ip-192-168-214-48.us-west-2.compute.internal" is ready
2018-10-25T14:01:50+02:00 [ℹ] node "ip-192-168-75-44.us-west-2.compute.internal" is ready
2018-10-25T14:01:50+02:00 [ℹ] node "ip-192-168-82-236.us-west-2.compute.internal" is ready
2018-10-25T14:01:52+02:00 [ℹ] kubectl command should work with "/Users/argu/.kube/config", try 'kubectl get nodes'
2018-10-25T14:01:52+02:00 [✔] EKS cluster "myeks" in "us-west-2" region is ready

-

Check the nodes:

kubectl get nodes
NAME                                            STATUS   ROLES    AGE   VERSION
ip-192-168-161-180.us-west-2.compute.internal   Ready    <none>   52s   v1.10.3
ip-192-168-214-48.us-west-2.compute.internal    Ready    <none>   57s   v1.10.3
ip-192-168-75-44.us-west-2.compute.internal     Ready    <none>   57s   v1.10.3
ip-192-168-82-236.us-west-2.compute.internal    Ready    <none>   54s   v1.10.3

-

Get the list of configs:

kubectl config get-contexts
CURRENT   NAME                             CLUSTER                      AUTHINFO                         NAMESPACE
*         arun@myeks.us-west-2.eksctl.io   myeks.us-west-2.eksctl.io    arun@myeks.us-west-2.eksctl.io
          docker-for-desktop               docker-for-desktop-cluster   docker-for-desktop

As indicated by *, kubectl CLI configuration is updated to the recently created cluster.

-

Explicitly set the context:

kubectl config use-context arun@myeks.us-west-2.eksctl.io

-

Install Helm:

kubectl -n kube-system create sa tiller
kubectl create clusterrolebinding tiller --clusterrole cluster-admin --serviceaccount=kube-system:tiller
helm init --service-account tiller

-

Check the list of pods:

kubectl get pods -n kube-system
NAME                            READY   STATUS    RESTARTS   AGE
aws-node-774jf                  1/1     Running   1          2m
aws-node-jrf5r                  1/1     Running   0          2m
aws-node-n46tw                  1/1     Running   0          2m
aws-node-slgns                  1/1     Running   0          2m
kube-dns-7cc87d595-5tskv        3/3     Running   0          8m
kube-proxy-2ghg6                1/1     Running   0          2m
kube-proxy-hqxwg                1/1     Running   0          2m
kube-proxy-lrwrr                1/1     Running   0          2m
kube-proxy-x77tq                1/1     Running   0          2m
tiller-deploy-895d57dd9-txqk4   1/1     Running   0          15s

-

Redeploy the application:

helm install --name myapp manifests/myapp

-

Get the service:

kubectl get svc
NAME             TYPE           CLUSTER-IP       EXTERNAL-IP                                                              PORT(S)                         AGE
kubernetes       ClusterIP      10.100.0.1       <none>                                                                   443/TCP                         17m
myapp-greeting   LoadBalancer   10.100.241.250   a8713338abef211e8970816cb629d414-71232674.us-east-1.elb.amazonaws.com   8080:32626/TCP,5005:30739/TCP   2m

It shows that ports 8080 and 5005 are published and an Elastic Load Balancer is provisioned. It takes about three minutes for the load balancer to be ready.

-

Access the application:

curl http://$(kubectl get svc/myapp-greeting \
  -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'):8080/hello

-

Delete the application:

helm delete --purge myapp
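Because the Elastic Load Balancer can take a few minutes to start serving traffic, a scripted run of the steps above may want to retry the request instead of failing on the first attempt. The helper below is a small sketch of that idea; the function name wait_for_url and its default of 36 attempts spaced 5 seconds apart (about three minutes) are assumptions chosen for illustration.

```shell
# Retry an HTTP GET until it succeeds or the attempts run out.
# Usage: wait_for_url URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_url() {
  url="$1"
  attempts="${2:-36}"
  delay="${3:-5}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # -s: silent, -f: treat HTTP error statuses as failures,
    # --max-time: cap the duration of each individual try
    if curl -sf --max-time 5 "$url"; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "gave up waiting for $url" >&2
  return 1
}
```

It could be called as wait_for_url "http://$(kubectl get svc/myapp-greeting -o jsonpath='{.status.loadBalancer.ingress[0].hostname}'):8080/hello" once the service has been created.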

Istio is a layer 4/7 proxy that routes and load balances traffic over HTTP, WebSocket, HTTP/2, gRPC and supports application protocols such as MongoDB and Redis. Istio uses the Envoy proxy to manage all inbound/outbound traffic in the service mesh.

Istio has a wide variety of traffic management features that live outside the application code, such as A/B testing, phased/canary rollouts, failure recovery, circuit breaker, layer 7 routing and policy enforcement (all provided by the Envoy proxy). Istio also supports ACLs, rate limits, quotas, authentication, request tracing and telemetry collection using its Mixer component. The goal of the Istio project is to support traffic management and security of microservices without requiring any changes to the application; it does this by injecting a sidecar into your pod that handles all network communications.

More details at Getting Started with Istio on Amazon EKS.

-

Download Istio:

curl -L https://git.io/getLatestIstio | sh -
cd istio-1.*

-

Include the istio-1.*/bin directory in PATH

-

Install Istio on Amazon EKS:

helm install \
  --wait \
  --name istio \
  --namespace istio-system \
  install/kubernetes/helm/istio \
  --set tracing.enabled=true \
  --set grafana.enabled=true

-

Verify:

kubectl get pods -n istio-system
NAME                                        READY   STATUS    RESTARTS   AGE
grafana-75485f89b9-4lwg5                    1/1     Running   0          1m
istio-citadel-84fb7985bf-4dkcx              1/1     Running   0          1m
istio-egressgateway-bd9fb967d-bsrhz         1/1     Running   0          1m
istio-galley-655c4f9ccd-qwk42               1/1     Running   0          1m
istio-ingressgateway-688865c5f7-zj9db       1/1     Running   0          1m
istio-pilot-6cd69dc444-9qstf                2/2     Running   0          1m
istio-policy-6b9f4697d-g8hc6                2/2     Running   0          1m
istio-sidecar-injector-8975849b4-cnd6l      1/1     Running   0          1m
istio-statsd-prom-bridge-7f44bb5ddb-8r2zx   1/1     Running   0          1m
istio-telemetry-6b5579595f-nlst8            2/2     Running   0          1m
istio-tracing-ff94688bb-2w4wg               1/1     Running   0          1m
prometheus-84bd4b9796-t9kk5                 1/1     Running   0          1m

Check that both Tracing and Grafana add-ons are enabled.

-

Enable sidecar injection for all pods in the default namespace:

kubectl label namespace default istio-injection=enabled

-

From the repo’s main directory, deploy the application:

kubectl apply -f manifests/app.yaml

-

Check pods and note that it has two containers (one for the application and one for the sidecar):

kubectl get pods -l app=greeting
NAME                       READY   STATUS    RESTARTS   AGE
greeting-d4f55c7ff-6gz8b   2/2     Running   0          5s

-

Get list of containers in the pod:

kubectl get pods -l app=greeting -o jsonpath='{.items[*].spec.containers[*].name}'
greeting istio-proxy

-

Get response:

curl http://$(kubectl get svc/greeting \
  -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')/hello

-

Deploy application with two versions of greeting, one that returns Hello and another that returns Howdy:

kubectl delete -f manifests/app.yaml
kubectl apply -f manifests/app-hello-howdy.yaml

-

Check the list of pods:

kubectl get pods -l app=greeting
NAME                              READY   STATUS    RESTARTS   AGE
greeting-hello-69cc7684d-7g4bx    2/2     Running   0          1m
greeting-howdy-788b5d4b44-g7pml   2/2     Running   0          1m

-

Access the application multiple times to see different responses:

for i in {1..10}
do
  curl -q http://$(kubectl get svc/greeting -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')/hello
  echo
done

-

Set up an Istio rule to split traffic, sending 75% to the Hello version and 25% to the Howdy version of the greeting service:

kubectl apply -f manifests/app-rule-75-25.yaml

-

Invoke the service again to see the traffic split between two services.

-

Setup an Istio rule to divert 10% traffic to canary:

kubectl delete -f manifests/app-rule-75-25.yaml
kubectl apply -f manifests/app-canary.yaml

-

Access the application multiple times to see ~10% of the greeting messages with Howdy:

for i in {1..50}
do
  curl -q http://$(kubectl get svc/greeting -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')/hello
  echo
done

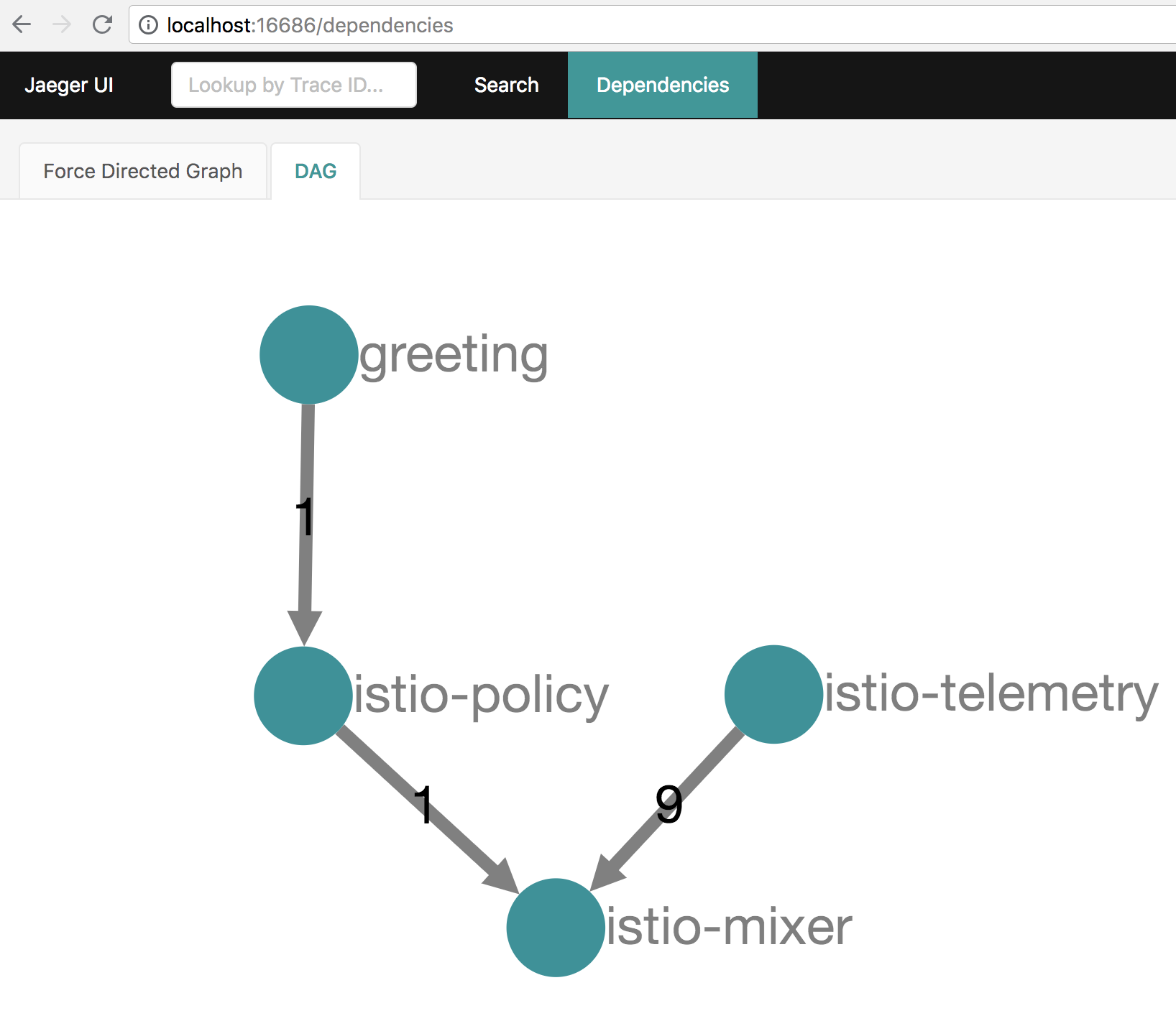
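The rule files applied above are not reproduced in this document. As a sketch of how a weighted split is usually expressed in Istio, the manifest below pairs a DestinationRule, which names two subsets of the greeting service, with a VirtualService that sends 75% of requests to one subset and 25% to the other. The subset names, the greeting: hello / greeting: howdy pod labels and the file name are assumptions; the hosts (and, for external traffic, gateways) fields depend on how requests enter the mesh, which is not shown here.

```shell
# Write a hypothetical 75/25 routing rule; labels and names are assumptions.
cat > app-rule-sketch.yaml <<'EOF'
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: greeting
spec:
  host: greeting
  subsets:
  - name: hello
    labels:
      greeting: hello        # hypothetical pod label
  - name: howdy
    labels:
      greeting: howdy        # hypothetical pod label
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: greeting
spec:
  hosts:
  - greeting
  http:
  - route:
    - destination:
        host: greeting
        subset: hello
      weight: 75
    - destination:
        host: greeting
        subset: howdy
      weight: 25
EOF
```

A canary rule has the same shape with different weights, for example 90 and 10. The weights of the routes in one rule are expected to add up to 100.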

Istio is deployed as a sidecar proxy into each of your pods; this means it can see and monitor all the traffic flows between your microservices and generate a graphical representation of your mesh traffic. We’ll use the application you deployed in the previous step to demonstrate this.

By default, tracing is disabled. --set tracing.enabled=true was used during Istio installation to ensure tracing was enabled.

Setup access to the tracing dashboard URL using port-forwarding:

kubectl port-forward \
  -n istio-system \
  pod/$(kubectl get pod \
    -n istio-system \
    -l app=jaeger \
    -o jsonpath='{.items[0].metadata.name}') 16686:16686 &

Access the dashboard at http://localhost:16686, click on Dependencies, DAG.

-

By default, Grafana is disabled. --set grafana.enabled=true was used during Istio installation to ensure Grafana was enabled. Alternatively, the Grafana add-on can be installed as:

kubectl apply -f install/kubernetes/addons/grafana.yaml

-

Verify:

kubectl get pods -l app=grafana -n istio-system
NAME                       READY   STATUS    RESTARTS   AGE
grafana-75485f89b9-n4skw   1/1     Running   0          10m

-

Forward Istio dashboard using Grafana UI:

kubectl -n istio-system \
  port-forward $(kubectl -n istio-system \
    get pod -l app=grafana \
    -o jsonpath='{.items[0].metadata.name}') 3000:3000 &

-

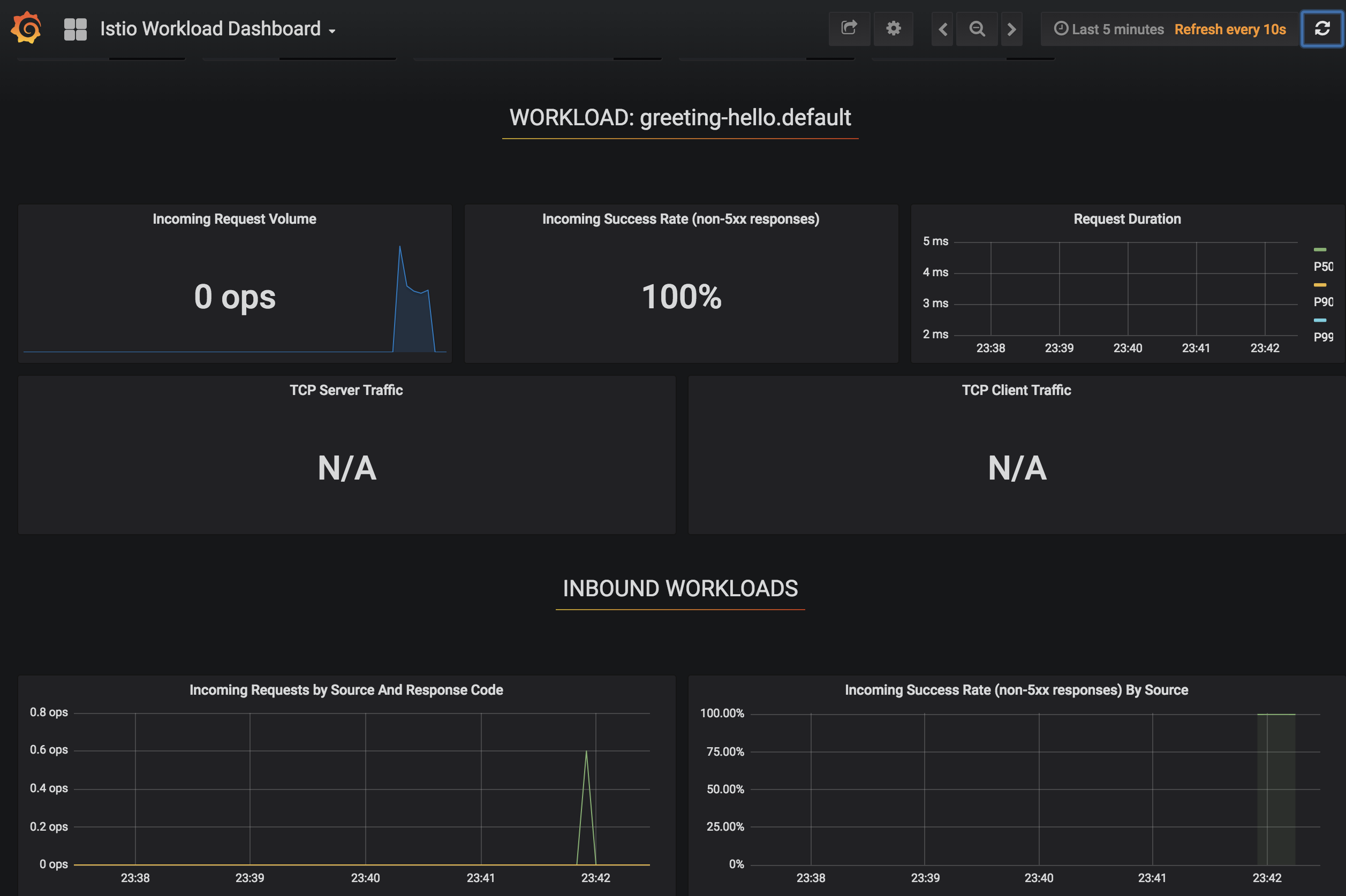

View the Istio dashboard at http://localhost:3000. Click on Home, Istio Workload Dashboard.

-

Invoke the endpoint:

curl http://$(kubectl get svc/greeting \
  -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')/hello

Delays and timeouts can be injected in services.

-

Deploy the application:

kubectl delete -f manifests/app.yaml
kubectl apply -f manifests/app-ingress.yaml

-

Add a 5-second delay to calls to the service:

kubectl apply -f manifests/greeting-delay.yaml

-

Invoke the service using a 2-second timeout:

export INGRESS_HOST=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].hostname}')
export INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http")].port}')
export GATEWAY_URL=$INGRESS_HOST:$INGRESS_PORT
curl --connect-timeout 2 http://$GATEWAY_URL/resources/greeting

The service will time out in 2 seconds.
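The contents of greeting-delay.yaml are not shown above. A fixed delay is typically injected with the fault section of an Istio VirtualService, roughly as in the sketch below. The resource and host names are assumptions, and the percent field reflects the v1alpha3 API of the Istio 1.0 era; newer releases express the same setting as percentage.value.

```shell
# Write a hypothetical delay-injection rule; names are assumptions.
cat > greeting-delay-sketch.yaml <<'EOF'
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: greeting
spec:
  hosts:
  - greeting
  http:
  - fault:
      delay:
        percent: 100         # apply the delay to every request
        fixedDelay: 5s       # hold each request for 5 seconds before forwarding
    route:
    - destination:
        host: greeting
EOF
```

When exercising a rule like this with curl, keep in mind that --max-time limits the whole request, while --connect-timeout limits only the connection phase.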

kube-monkey is an implementation of Netflix’s Chaos Monkey for Kubernetes clusters. It randomly deletes Kubernetes pods in the cluster encouraging and validating the development of failure-resilient services.

-

Create kube-monkey configuration:

kubectl apply -f manifests/kube-monkey-configmap.yaml

-

Run kube-monkey:

kubectl apply -f manifests/kube-monkey-deployment.yaml

-

Deploy an app that opts-in for pod deletion:

kubectl apply -f manifests/app-kube-monkey.yaml

This application agrees to kill up to 40% of pods. The schedule of deletion is defined by kube-monkey configuration and is defined to be between 10am and 4pm on weekdays.
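An application opts in to kube-monkey through labels on its Deployment. The manifest below is a sketch of what that opt-in might look like for the behavior described above; it is not the repository's app-kube-monkey.yaml. The label names follow the kube-monkey documentation as best understood and should be checked against the version you run; the replica count, the identifier value and the file name are assumptions.

```shell
# Write a hypothetical opt-in Deployment; label values are illustrative assumptions.
cat > app-kube-monkey-sketch.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: greeting
  labels:
    kube-monkey/enabled: enabled                 # opt in to pod deletion
    kube-monkey/identifier: greeting             # ties the pods to this Deployment
    kube-monkey/mtbf: "1"                        # mean time between failures, in days
    kube-monkey/kill-mode: "random-max-percent"  # kill a random share of pods...
    kube-monkey/kill-value: "40"                 # ...up to 40% of them
spec:
  replicas: 5
  selector:
    matchLabels:
      app: greeting
  template:
    metadata:
      labels:
        app: greeting
        kube-monkey/enabled: enabled
        kube-monkey/identifier: greeting
    spec:
      containers:
      - name: greeting
        image: arungupta/greeting
EOF
```

Running several replicas matters here: with a single pod, any deletion takes the service down until the replacement is ready.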

Skaffold is a command line utility that facilitates continuous development for Kubernetes applications. With Skaffold, you can iterate on your application source code locally then deploy it to a remote Kubernetes cluster.

-

Check context:

kubectl config get-contexts
CURRENT   NAME                               CLUSTER                       AUTHINFO                           NAMESPACE
          arun@eks-gpu.us-west-2.eksctl.io   eks-gpu.us-west-2.eksctl.io   arun@eks-gpu.us-west-2.eksctl.io
*         arun@myeks.us-east-1.eksctl.io     myeks.us-east-1.eksctl.io     arun@myeks.us-east-1.eksctl.io
          docker-for-desktop                 docker-for-desktop-cluster    docker-for-desktop

-

Change to use local Kubernetes cluster:

kubectl config use-context docker-for-desktop

-

Download Skaffold:

curl -Lo skaffold https://storage.googleapis.com/skaffold/releases/latest/skaffold-darwin-amd64 \
  && chmod +x skaffold

-

Open http://localhost:8080/resources/greeting in browser. This will show the page is not available.

-

Run Skaffold in the application directory:

cd app
skaffold dev

-

Refresh the page in browser to see the output.