Course project of CSE463 Machine Learning, UNIST

Our task is to train an MLP on $X_{tr}$ and apply the trained MLP to the test set. The Trn.txt file contains the training set: on each line, the first two columns represent an input. The Tst.txt file contains the test set: each line has two columns representing one test input.
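A minimal sketch of reading the two files with NumPy. It assumes whitespace-separated numeric columns and that any column of Trn.txt after the first two holds the target; the delimiter, the target layout, and the helper names `load_train` and `load_test` are assumptions, not taken from the project code. In-memory strings with made-up values stand in for the real files.

```python
import io
import numpy as np

def load_train(source):
    """Read training data: the first two columns are the input,
    the remaining columns are assumed to be the target."""
    data = np.loadtxt(source, ndmin=2)
    return data[:, :2], data[:, 2:]

def load_test(source):
    """Read test data: two columns per line, one test input per row."""
    return np.loadtxt(source, ndmin=2)

# Stand-ins for Trn.txt and Tst.txt (made-up values, for illustration only).
trn = io.StringIO("0.1 0.2 1\n0.3 0.4 0\n")
tst = io.StringIO("0.5 0.6\n")

X_tr, y_tr = load_train(trn)
X_te = load_test(tst)
print(X_tr.shape, y_tr.shape, X_te.shape)
```

Passing a path such as `"Trn.txt"` instead of the `StringIO` object works the same way, since `np.loadtxt` accepts both.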

Using:

- Xavier Normal Initialization for weight initialization [Glorot & Bengio, AISTATS 2010]

- Bias initialization as zero
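The initialization scheme can be sketched in NumPy as follows. Xavier normal initialization draws each weight from a zero-mean normal distribution with standard deviation $\sqrt{2 / (\text{fan\_in} + \text{fan\_out})}$. The function names, the seed, and the 2-to-100 layer shape used in the demo are illustrative choices, not the project's actual code.

```python
import numpy as np

def xavier_normal(fan_in, fan_out, rng):
    """Sample a (fan_in, fan_out) weight matrix from N(0, 2 / (fan_in + fan_out))."""
    std = np.sqrt(2.0 / (fan_in + fan_out))
    return rng.normal(0.0, std, size=(fan_in, fan_out))

def init_layer(fan_in, fan_out, rng):
    """Xavier-normal weights together with an all-zero bias vector."""
    return xavier_normal(fan_in, fan_out, rng), np.zeros(fan_out)

rng = np.random.default_rng(0)  # seed chosen arbitrarily for reproducibility
W, b = init_layer(2, 100, rng)  # e.g. 2 inputs feeding a 100-unit hidden layer
print(W.shape, b.shape, W.std())
```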

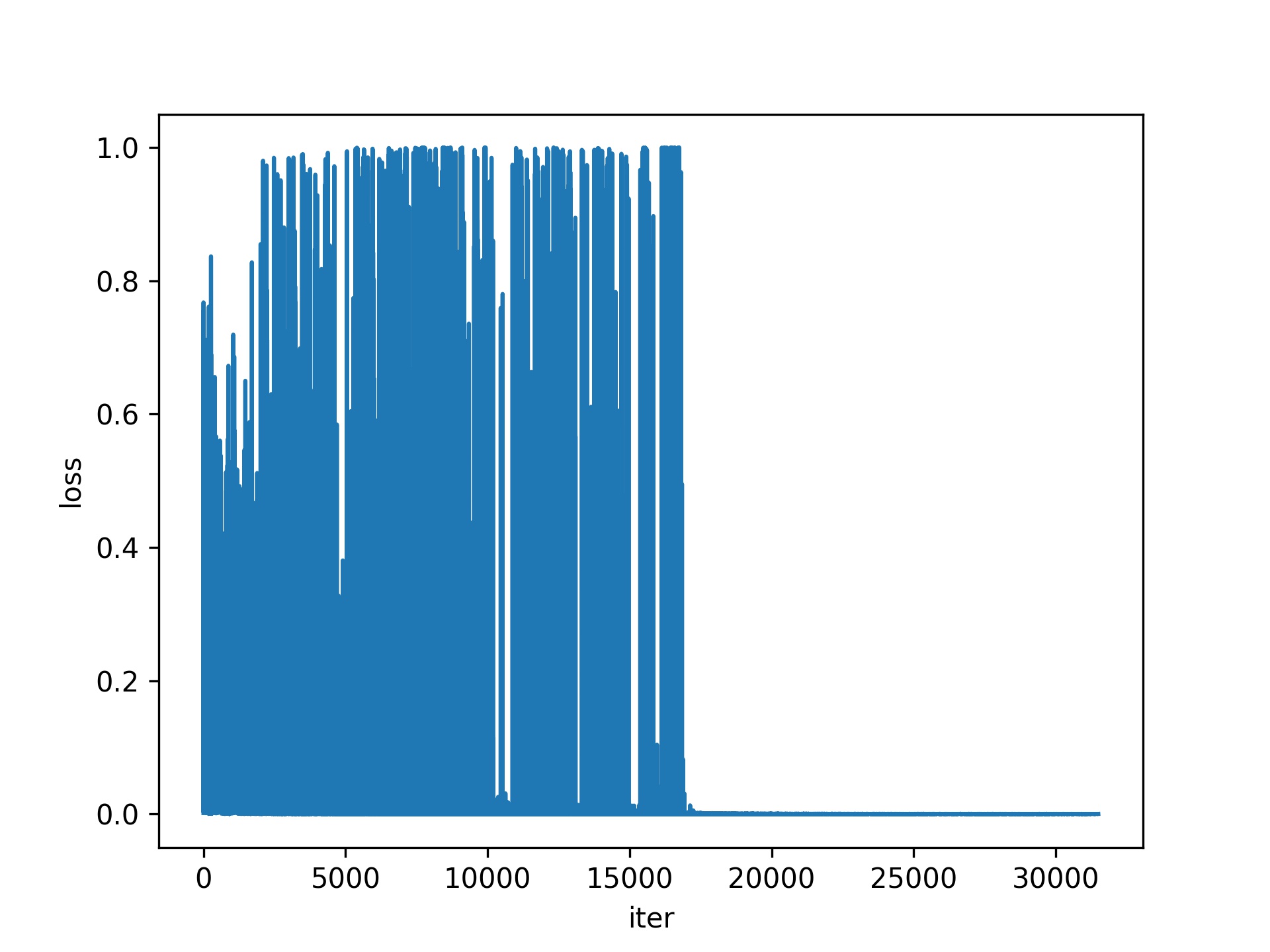

- Learning rate: 1
- Epochs: 50
- Batch size: 1 (fully SGD)
- Sigmoid as the activation function
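A rough sketch of what training with these settings could look like: per-sample SGD (batch size 1) through a sigmoid MLP. The loss function is not stated here, so squared error is assumed; the sigmoid output layer, the 2-100-50-1 layer sizes, and the toy data set are likewise assumptions made only to keep the example runnable.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid, used as the activation of every layer here."""
    return 1.0 / (1.0 + np.exp(-z))

def init_params(sizes, rng):
    """Xavier-normal weights and zero biases for consecutive layer sizes."""
    return [(rng.normal(0.0, np.sqrt(2.0 / (m + n)), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(params, x):
    """Return the activations of every layer, the input included."""
    acts = [x]
    for W, b in params:
        acts.append(sigmoid(acts[-1] @ W + b))
    return acts

def sgd_step(params, x, y, lr):
    """One update on a single sample. Returns the new parameters and the
    squared-error loss (assumed loss) measured before the update."""
    acts = forward(params, x)
    # dLoss/dz at the output layer for loss = 0.5 * (a - y)^2
    delta = (acts[-1] - y) * acts[-1] * (1.0 - acts[-1])
    new_params = []
    for i in reversed(range(len(params))):
        W, b = params[i]
        new_params.append((W - lr * np.outer(acts[i], delta), b - lr * delta))
        # Propagate the error through the old weights to the previous layer
        # (the value computed at i == 0 is not used).
        delta = (W @ delta) * acts[i] * (1.0 - acts[i])
    loss = 0.5 * float(np.sum((acts[-1] - y) ** 2))
    return new_params[::-1], loss

rng = np.random.default_rng(0)
params = init_params([2, 100, 50, 1], rng)

# Toy data (made up for illustration): label is 1 when x0 > x1.
X = rng.uniform(-1.0, 1.0, size=(100, 2))
Y = (X[:, 0] > X[:, 1]).astype(float).reshape(-1, 1)

epoch_losses = []
for epoch in range(50):                      # epochs: 50
    total = 0.0
    for i in rng.permutation(len(X)):        # batch size: 1
        params, loss = sgd_step(params, X[i], Y[i], lr=1.0)  # learning rate: 1
        total += loss
    epoch_losses.append(total / len(X))
print(epoch_losses[0], epoch_losses[-1])
```

With a batch size of 1, one epoch performs as many parameter updates as there are training samples, which is why loss curves for this setup are often plotted against iterations rather than epochs.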

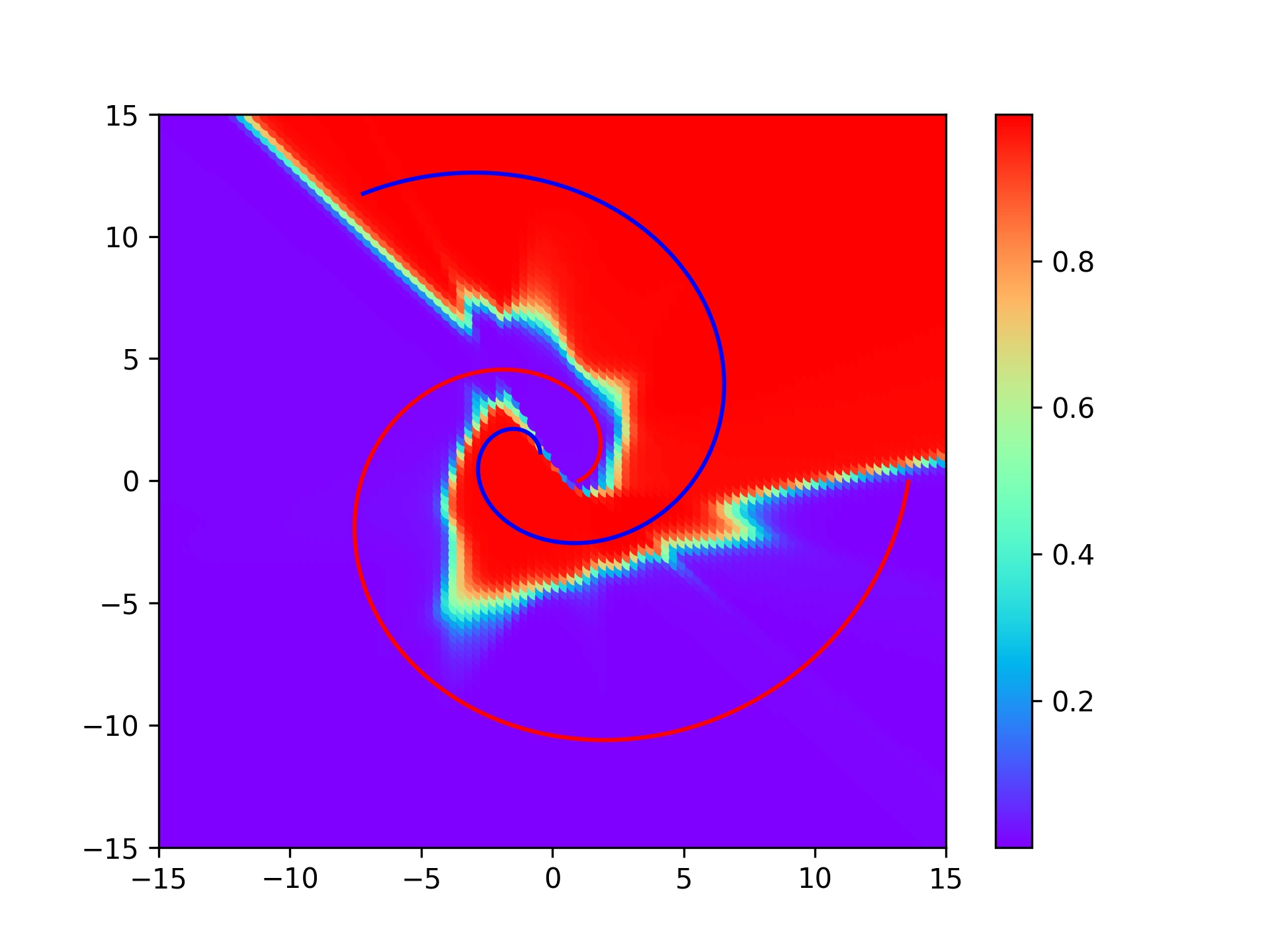

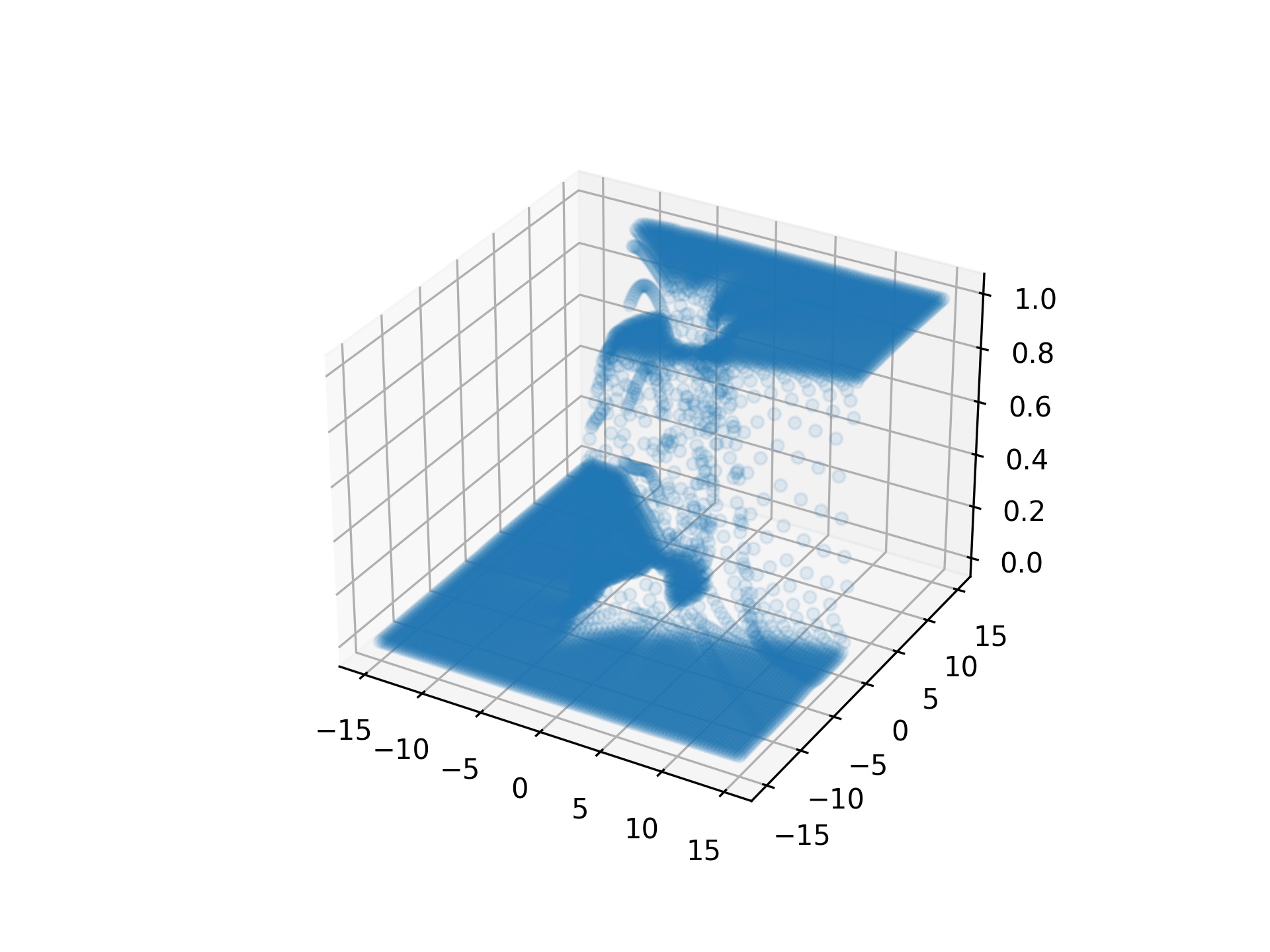

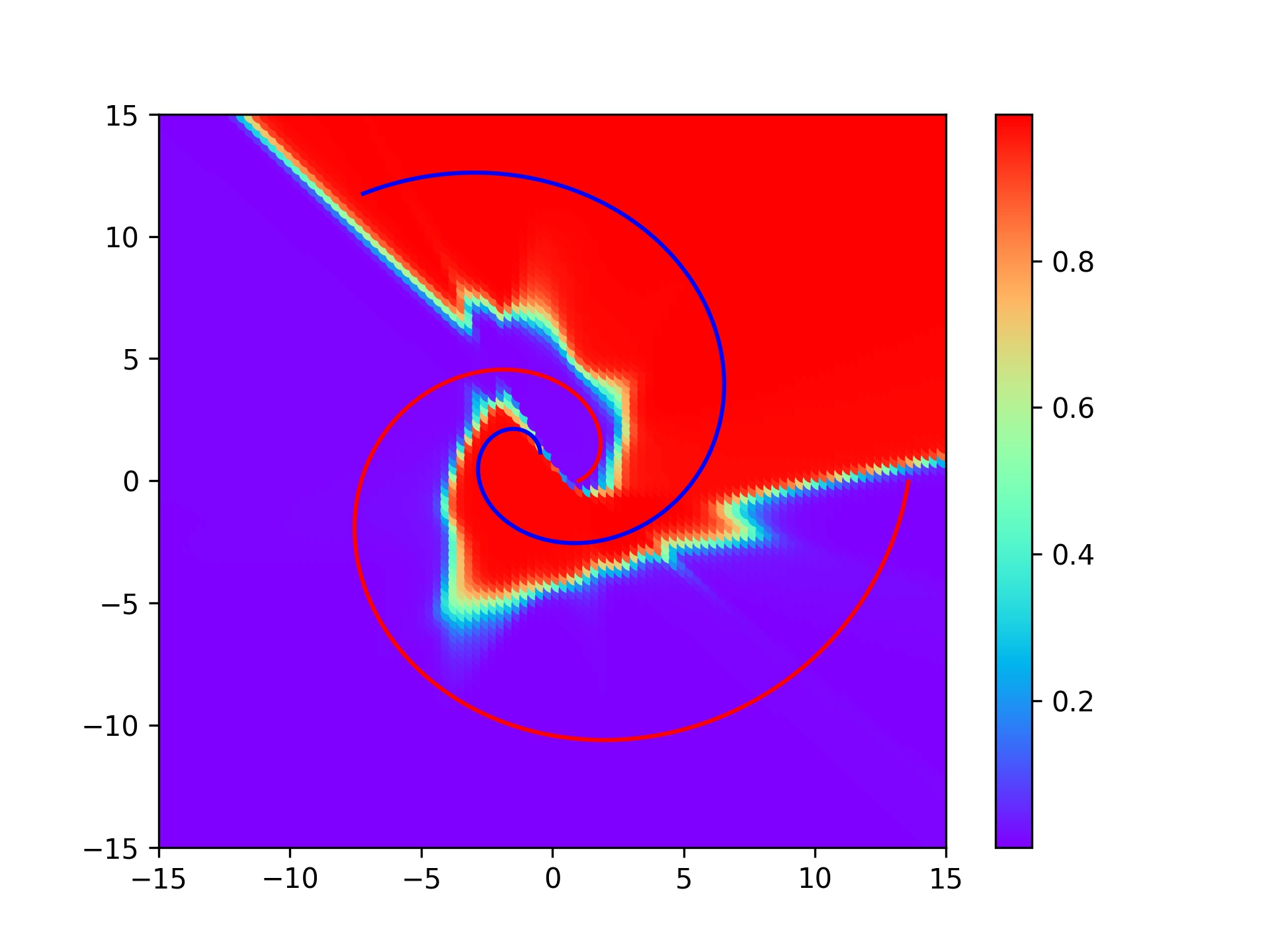

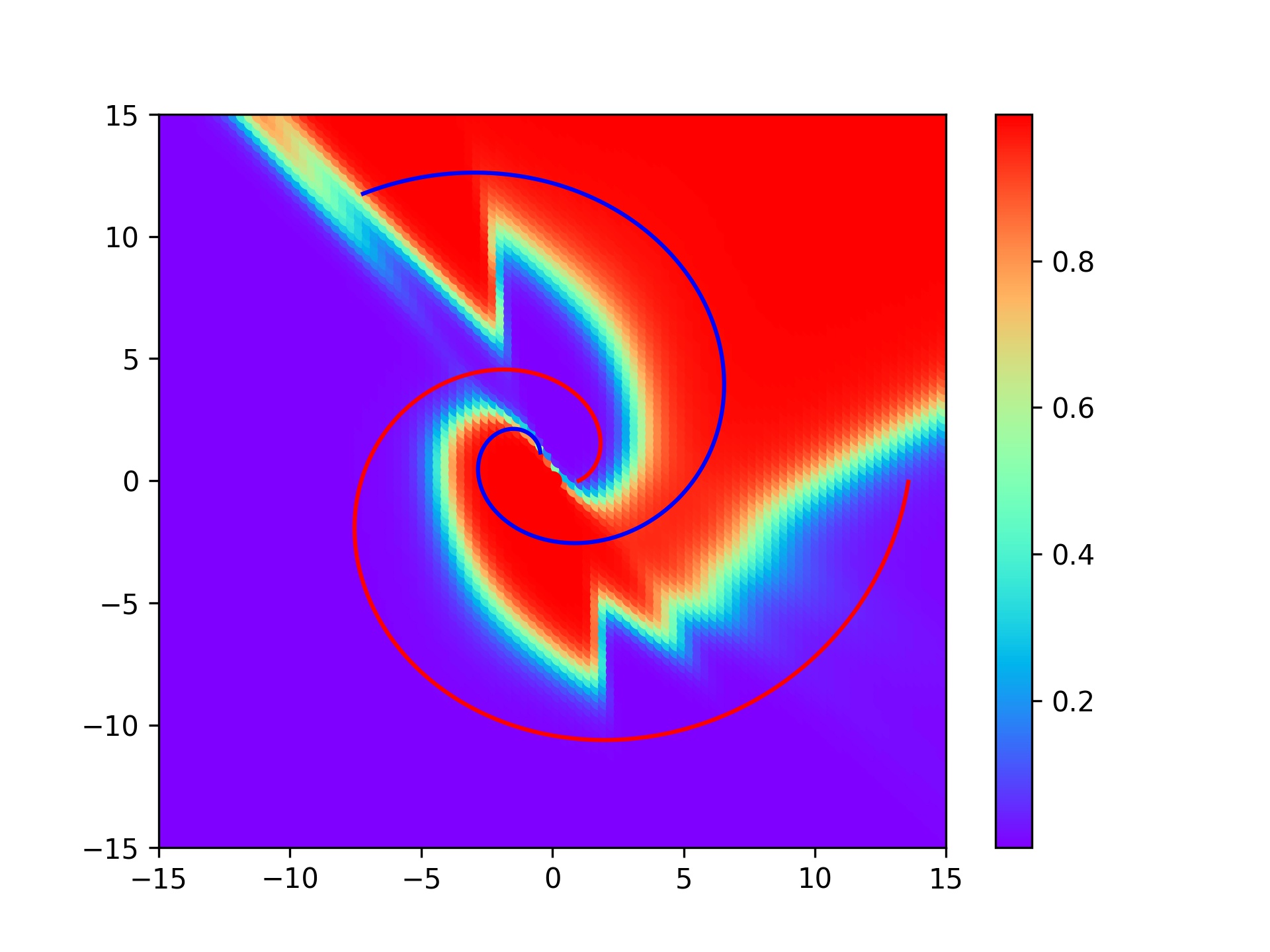

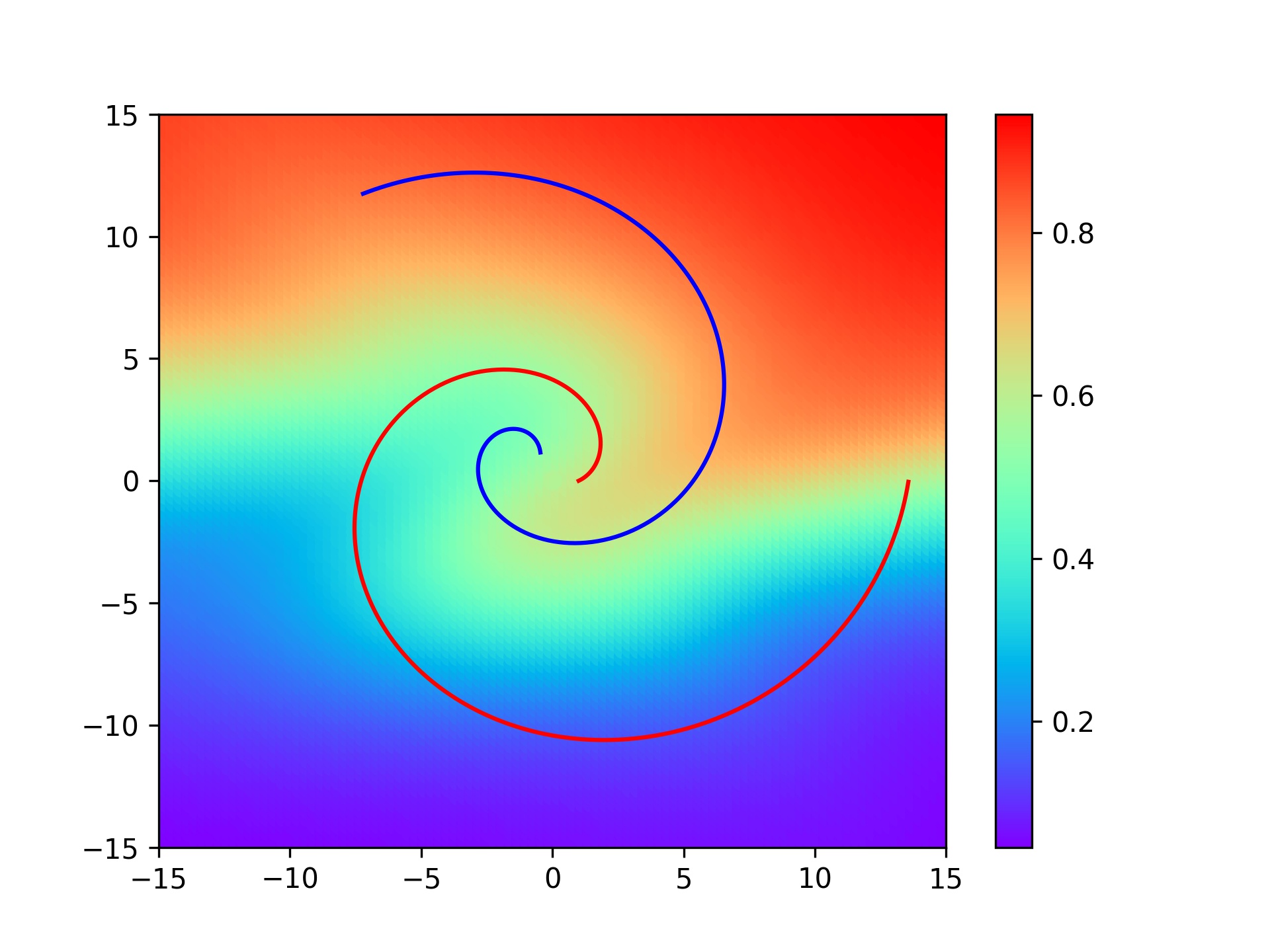

| Loss | 2D visualization | 3D visualization |
|---|---|---|
| | | |

In each experiment, only the hyperparameter being studied is varied; all other hyperparameters are kept at the same values.
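One way to organize such one-factor-at-a-time experiments is to keep a single base configuration and override only the setting under study. The sketch below uses the values reported in this README; the dictionary keys and the helper `make_configs` are hypothetical names, not part of the project code.

```python
# Base hyperparameters, as reported in this README.
BASE = {"lr": 1.0, "epochs": 50, "batch_size": 1, "h1": 100, "h2": 50}

# Each override changes only the hyperparameter(s) of one experiment.
OVERRIDES = [
    {"lr": 0.1}, {"lr": 0.01},
    {"epochs": 30}, {"epochs": 10},
    {"h1": 10, "h2": 5}, {"h1": 5, "h2": 2},
]

def make_configs(base, overrides):
    """Return the base config followed by one full config per override."""
    return [dict(base)] + [{**base, **o} for o in overrides]

configs = make_configs(BASE, OVERRIDES)
for cfg in configs:
    print(cfg)
```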

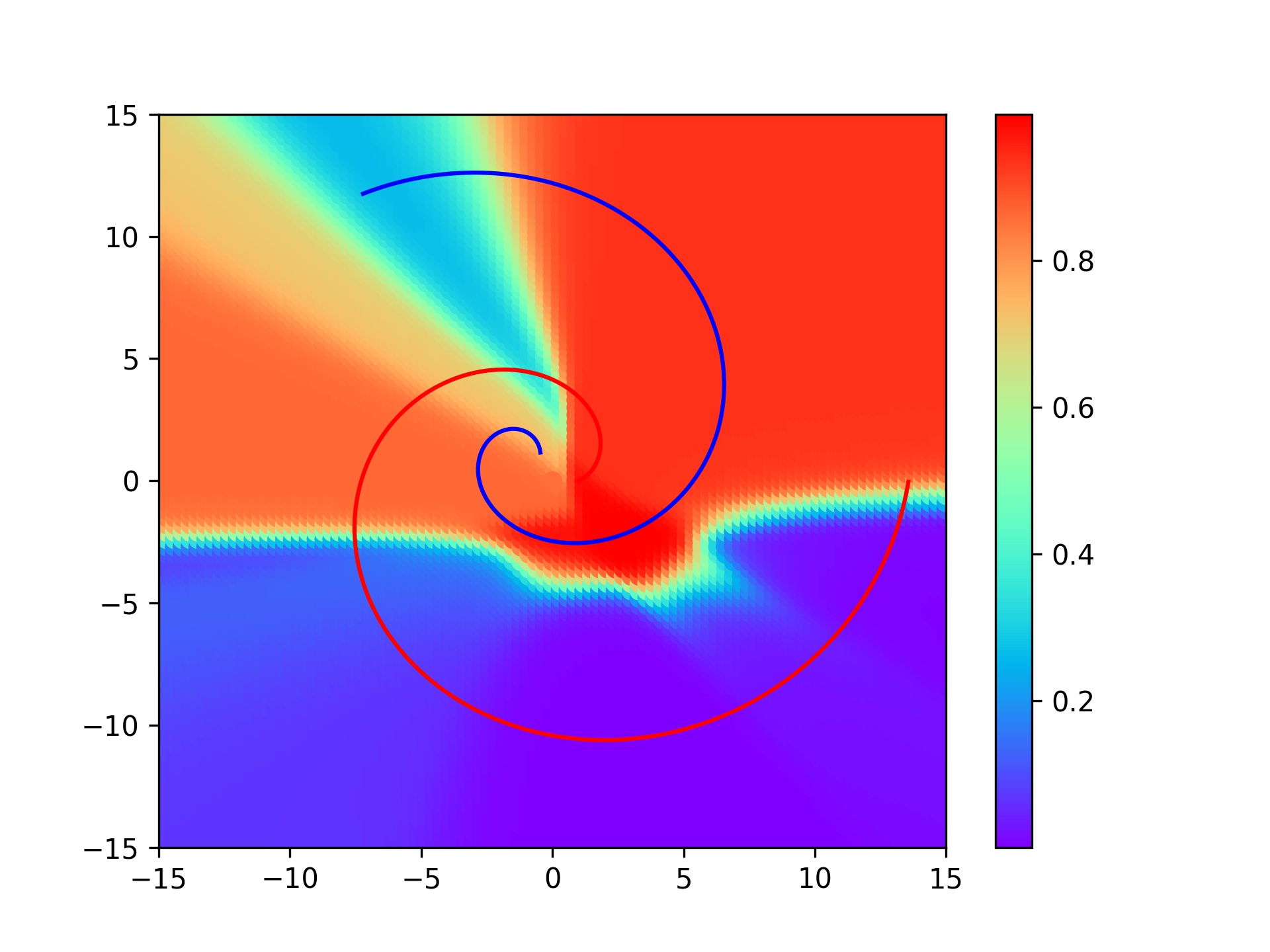

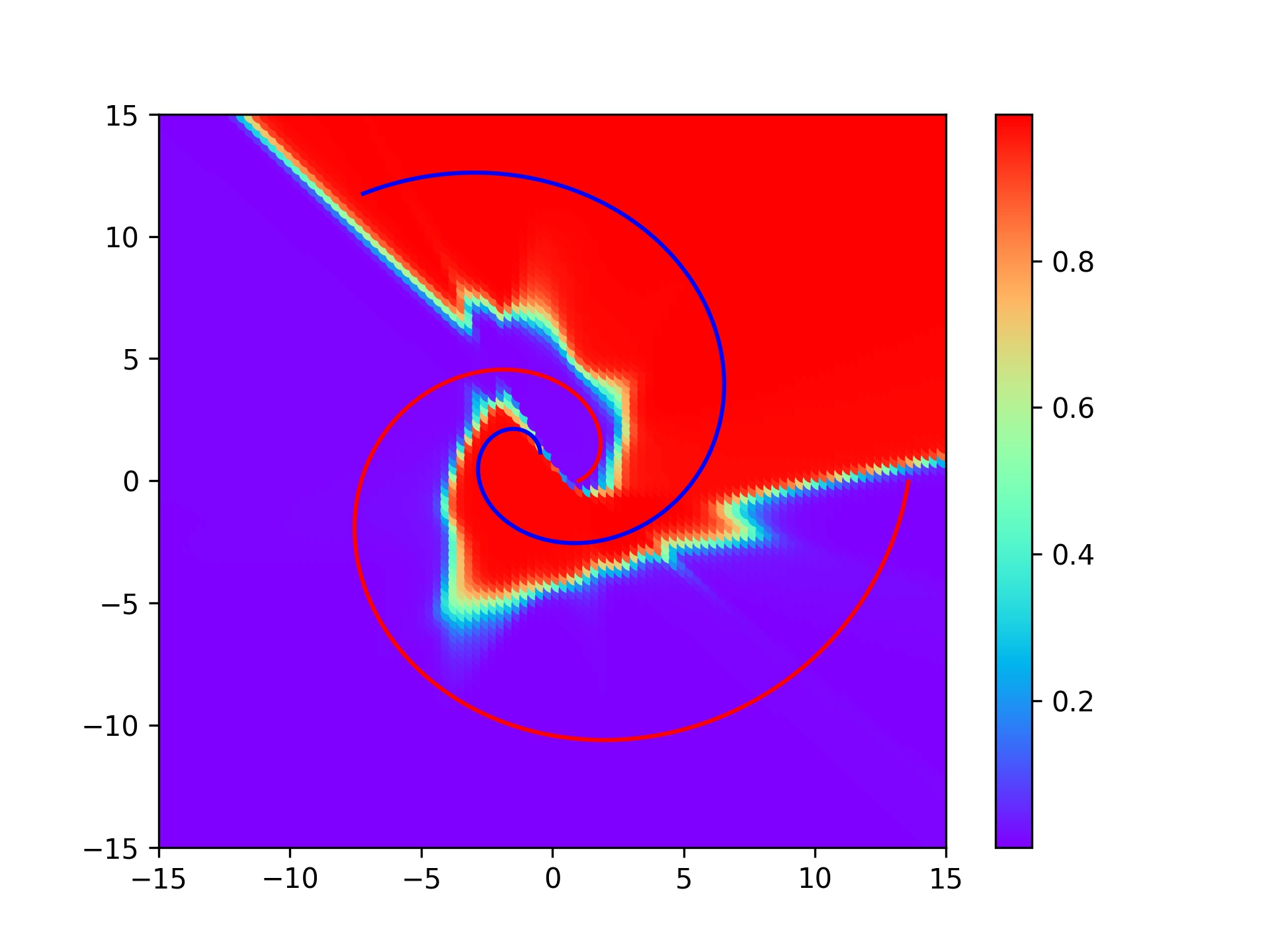

| lr = 1 (base) | lr = 0.1 | lr = 0.01 |
|---|---|---|
| | | |

Among the values tested, an appropriate learning rate appears to have been found.

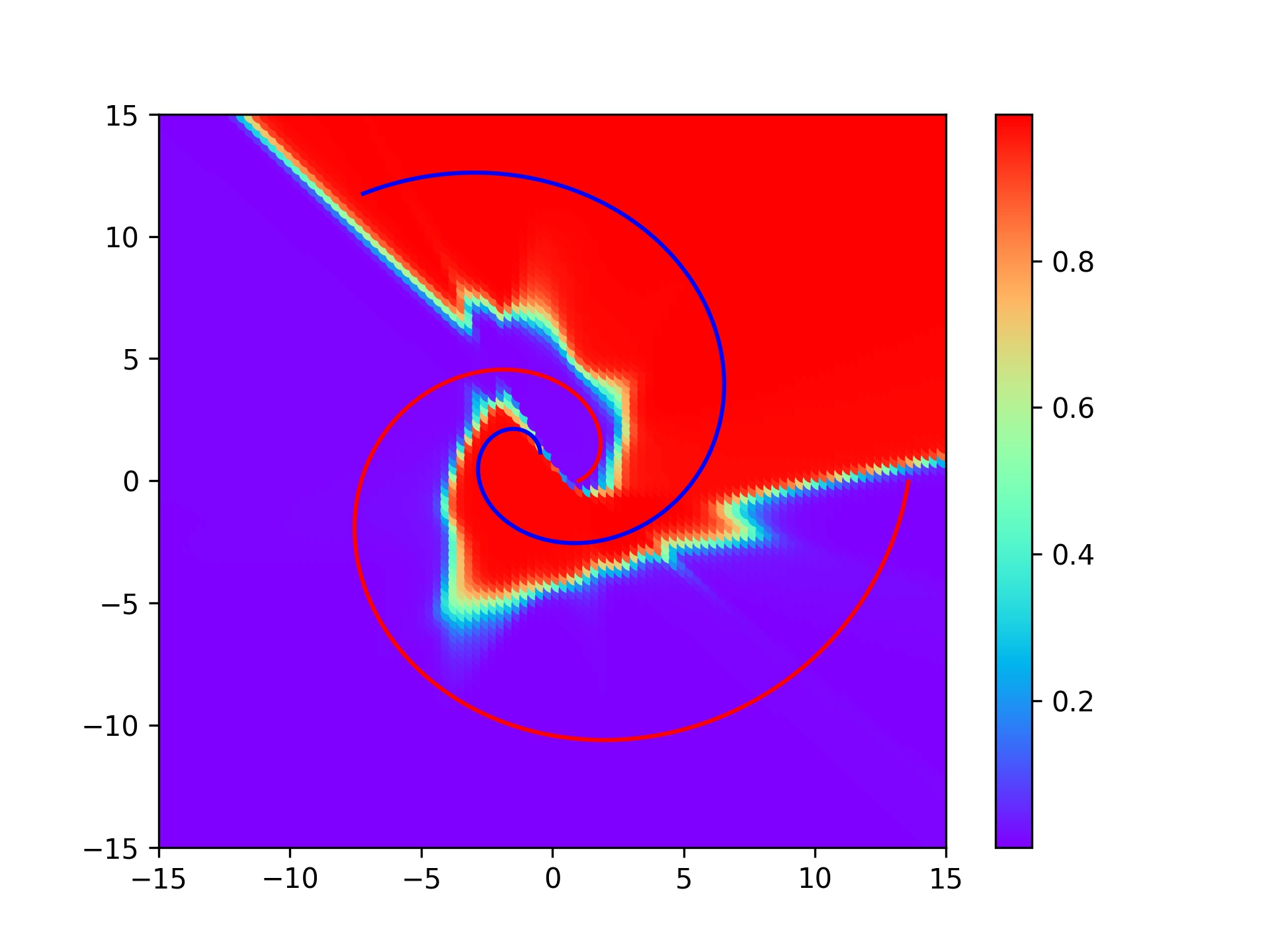

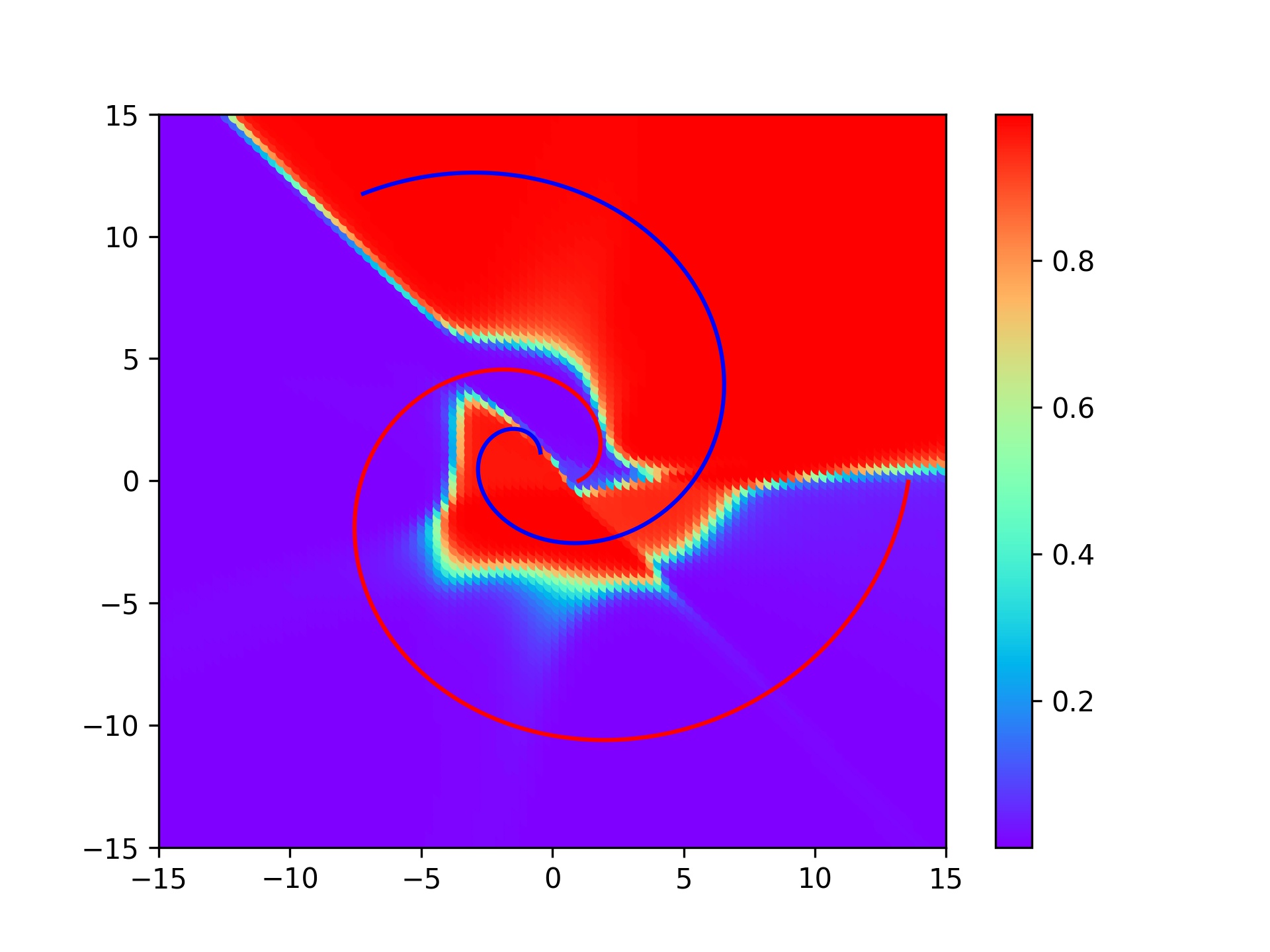

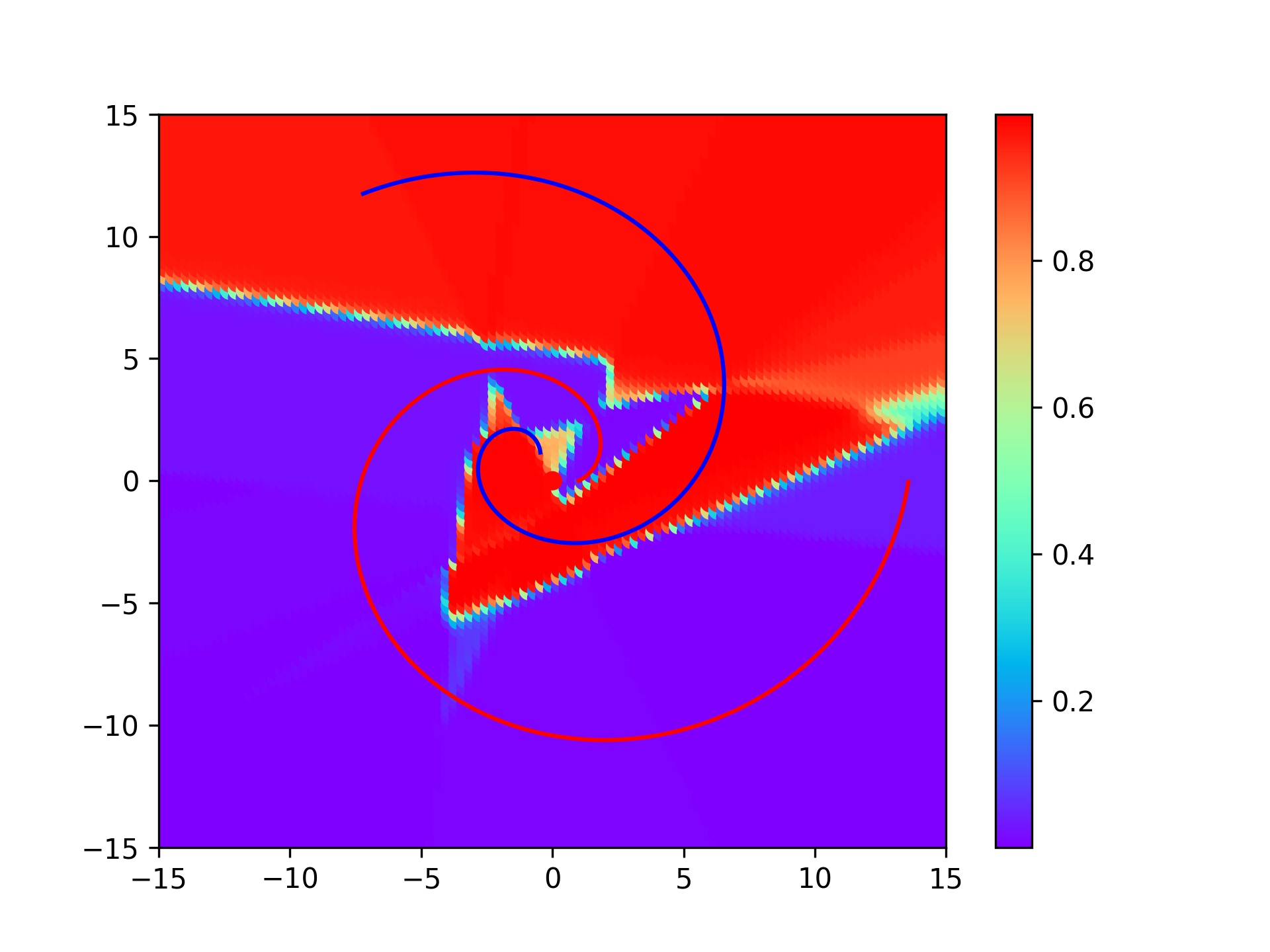

| Epochs = 50 (base) | Epochs = 30 | Epochs = 10 |
|---|---|---|
| | | |

According to the loss plot, the loss drops sharply and then shows no significant change after about 10000-20000 iterations, which indicates that training has been stable since roughly epoch 15-30. There is therefore no significant difference between 30 and 50 epochs. I think it is fine to stop training once the loss has been moderately reduced (around epochs 15-30).
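The stopping idea above could be automated with a simple plateau check on the per-epoch loss. This is only a sketch: the function name, the `min_delta` and `patience` thresholds, and the synthetic loss curve are assumptions, and the experiments here chose the stopping point by inspecting the plot instead.

```python
def first_plateau_epoch(epoch_losses, min_delta=1e-3, patience=3):
    """Return the 1-based epoch at which the loss has failed to improve by at
    least `min_delta` for `patience` consecutive epochs, or None if it never does."""
    stale = 0
    for idx in range(1, len(epoch_losses)):
        improvement = epoch_losses[idx - 1] - epoch_losses[idx]
        stale = stale + 1 if improvement < min_delta else 0
        if stale >= patience:
            return idx + 1  # convert 0-based index to 1-based epoch number
    return None

# Synthetic loss curve (made up): a sharp drop followed by a flat region.
losses = [1.0, 0.5, 0.2, 0.1, 0.1, 0.1, 0.1, 0.1]
print(first_plateau_epoch(losses))  # prints 7
```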

Number of Neurons (for Hidden Layer)

- h1: number of neurons in the 1st hidden layer
- h2: number of neurons in the 2nd hidden layer
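To give a sense of how strongly h1 and h2 affect model size, the sketch below counts the trainable parameters of a fully connected network. It assumes 2 inputs (the two input columns) and a single output unit; the output size and the helper name `count_params` are assumptions.

```python
def count_params(h1, h2, n_in=2, n_out=1):
    """Weights plus biases of a fully connected n_in -> h1 -> h2 -> n_out network."""
    sizes = [n_in, h1, h2, n_out]
    return sum(m * n + n for m, n in zip(sizes[:-1], sizes[1:]))

for h1, h2 in [(100, 50), (10, 5), (5, 2)]:
    print(h1, h2, count_params(h1, h2))
# 100 50 5401
# 10 5 91
# 5 2 30
```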

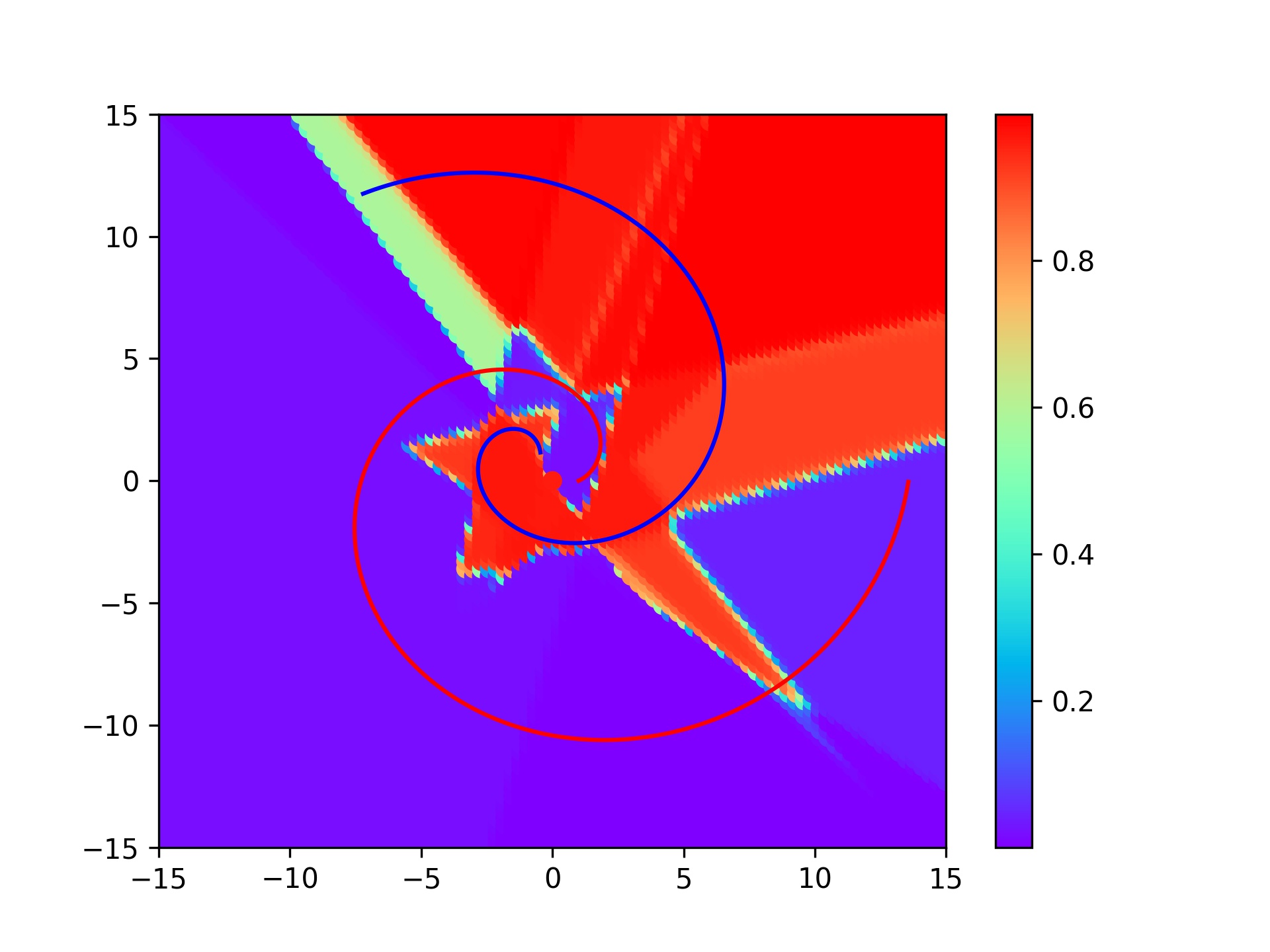

| h1 = 100, h2 = 50 (base) | h1 = 10, h2 = 5 | h1 = 5, h2 = 2 |
|---|---|---|
| | | |

Among the values tested, an appropriate number of neurons appears to have been found.