Anomaly Transformer: Time Series Anomaly Detection with Association Discrepancy

Unsupervised detection of anomaly points in time series is a challenging problem, which requires the model to learn informative representations and derive a distinguishable criterion. In this paper, we propose the Anomaly Transformer, whose contributions are threefold:

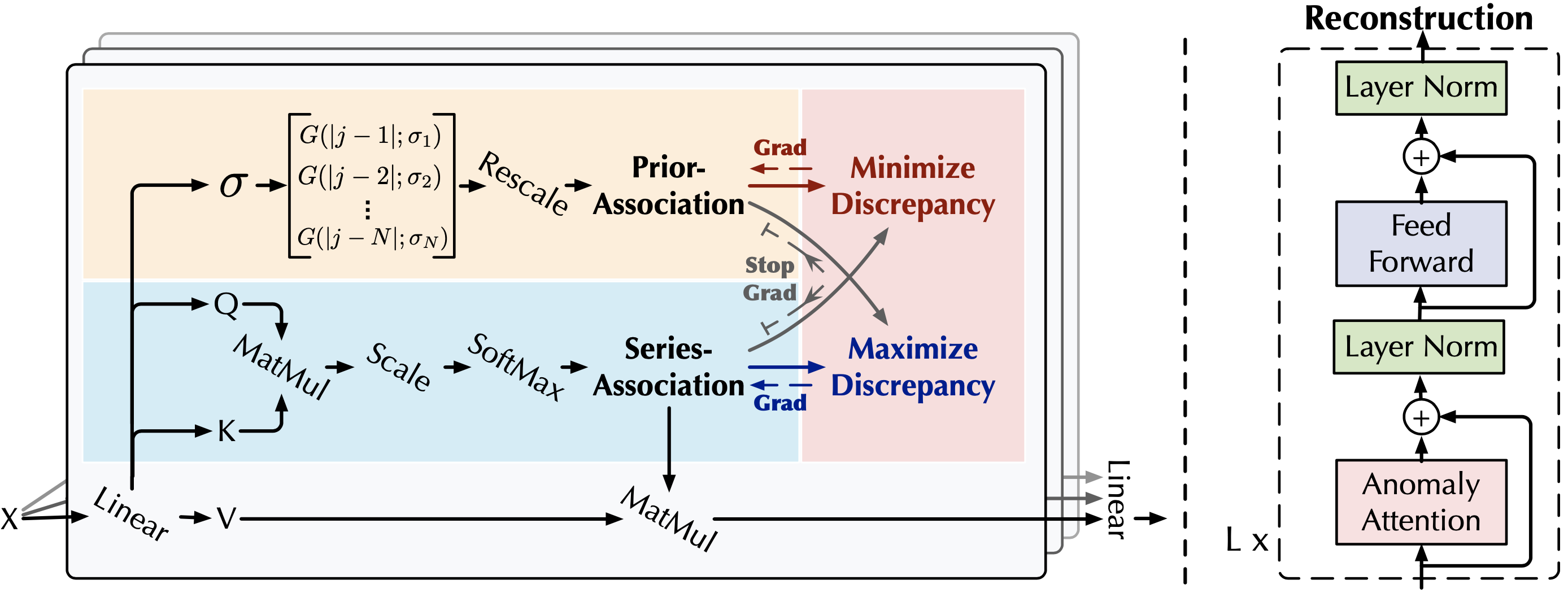

- An inherently distinguishable criterion, Association Discrepancy, for detection.

- A new Anomaly-Attention mechanism to compute the association discrepancy.

- A minimax strategy to amplify the normal-abnormal distinguishability of the association discrepancy.
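This README does not spell out the formulas, so here is a minimal NumPy sketch of the three ideas above as we read the paper. It is not the repository's code. The prior-association is a row-normalized Gaussian kernel over the distance |j - i| with one scale σ_i per time point. The series-association is ordinary self-attention, Softmax(QKᵀ/√d). The association discrepancy is their symmetric row-wise KL divergence averaged over layers. The anomaly score weights each point's squared reconstruction error by Softmax(-AssDis).

Several simplifications are assumptions:
- All function names are hypothetical.
- σ, Q and K are passed in as inputs rather than learned.
- The `EPS` constant is our own choice.
- The minimax step is only shown as the two phase loss values, because NumPy has no autograd.

```python
import numpy as np

EPS = 1e-8  # assumed guard against log(0); not taken from the repository


def softmax(z, axis=-1):
    """Numerically stable softmax along `axis`."""
    z = z - np.max(z, axis=axis, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=axis, keepdims=True)


def prior_association(sigma):
    """Gaussian kernel over the distance |j - i|, one scale sigma[i] per row.

    sigma: positive array of shape (N,). Returns an (N, N) row-stochastic matrix.
    """
    idx = np.arange(sigma.shape[0])
    dist = np.abs(idx[None, :] - idx[:, None]).astype(float)  # entry (i, j) is |j - i|
    s = sigma[:, None]
    kernel = np.exp(-dist ** 2 / (2.0 * s ** 2)) / (np.sqrt(2.0 * np.pi) * s)
    return kernel / kernel.sum(axis=1, keepdims=True)  # rescale each row to sum to 1


def series_association(q, k):
    """Self-attention weights Softmax(Q K^T / sqrt(d)) for q, k of shape (N, d)."""
    return softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)


def row_kl(p, q):
    """KL(p[i] || q[i]) for every row i of two row-stochastic matrices."""
    return np.sum(p * (np.log(p + EPS) - np.log(q + EPS)), axis=-1)


def association_discrepancy(priors, series):
    """Symmetric row-wise KL between prior and series associations, averaged over layers.

    priors, series: lists of (N, N) matrices, one pair per layer. Returns shape (N,).
    """
    per_layer = [row_kl(p, s) + row_kl(s, p) for p, s in zip(priors, series)]
    return np.mean(per_layer, axis=0)


def anomaly_score(x, x_hat, ass_dis):
    """Softmax(-AssDis) over time points times each point's squared reconstruction error.

    x, x_hat: shape (N, C); ass_dis: shape (N,). Returns shape (N,).
    """
    rec_err = np.sum((x - x_hat) ** 2, axis=1)  # ||x_i - x_hat_i||_2^2 per time point
    return softmax(-ass_dis, axis=0) * rec_err


def minimax_losses(x, x_hat, ass_dis, lam):
    """Loss values of the two phases: ||X - X_hat||_F^2 +/- lam * ||AssDis||_1.

    With autograd, the minimize phase (+lam) would detach the series association,
    so the discrepancy term pulls the prior toward it. The maximize phase (-lam)
    would detach the prior, so the discrepancy term pushes the series association
    away from it. NumPy has no gradients, so only the loss values are computed.
    """
    rec = np.sum((x - x_hat) ** 2)
    dis = np.sum(np.abs(ass_dis))
    return rec + lam * dis, rec - lam * dis  # (minimize phase, maximize phase)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d, c, layers = 8, 4, 2, 2
    x = rng.normal(size=(n, c))
    x_hat = x + 0.1 * rng.normal(size=(n, c))  # stand-in for a model reconstruction
    priors = [prior_association(np.full(n, 1.0 + i)) for i in range(layers)]
    series = [series_association(rng.normal(size=(n, d)), rng.normal(size=(n, d)))
              for _ in range(layers)]
    ad = association_discrepancy(priors, series)
    print(anomaly_score(x, x_hat, ad).shape)  # (8,)
    print(minimax_losses(x, x_hat, ad, lam=3.0))
```

Note the sign in the score. As we understand the paper, anomalous points tend to have a smaller association discrepancy, so Softmax(-AssDis) gives them a larger weight on their reconstruction error.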

Get started:

- Install Python 3.6 and PyTorch 1.4.0. (Note: newer PyTorch versions may cause errors.)

- Download the data. Four of the benchmarks can be obtained from Tsinghua Cloud, and all datasets are already pre-processed. For the SWaT dataset, apply for access by following its official tutorial.

- Train and evaluate. We provide experiment scripts for all benchmarks under the folder `./scripts`. You can reproduce the experimental results as follows:

bash ./scripts/SMD.sh

bash ./scripts/MSL.sh

bash ./scripts/SMAP.sh

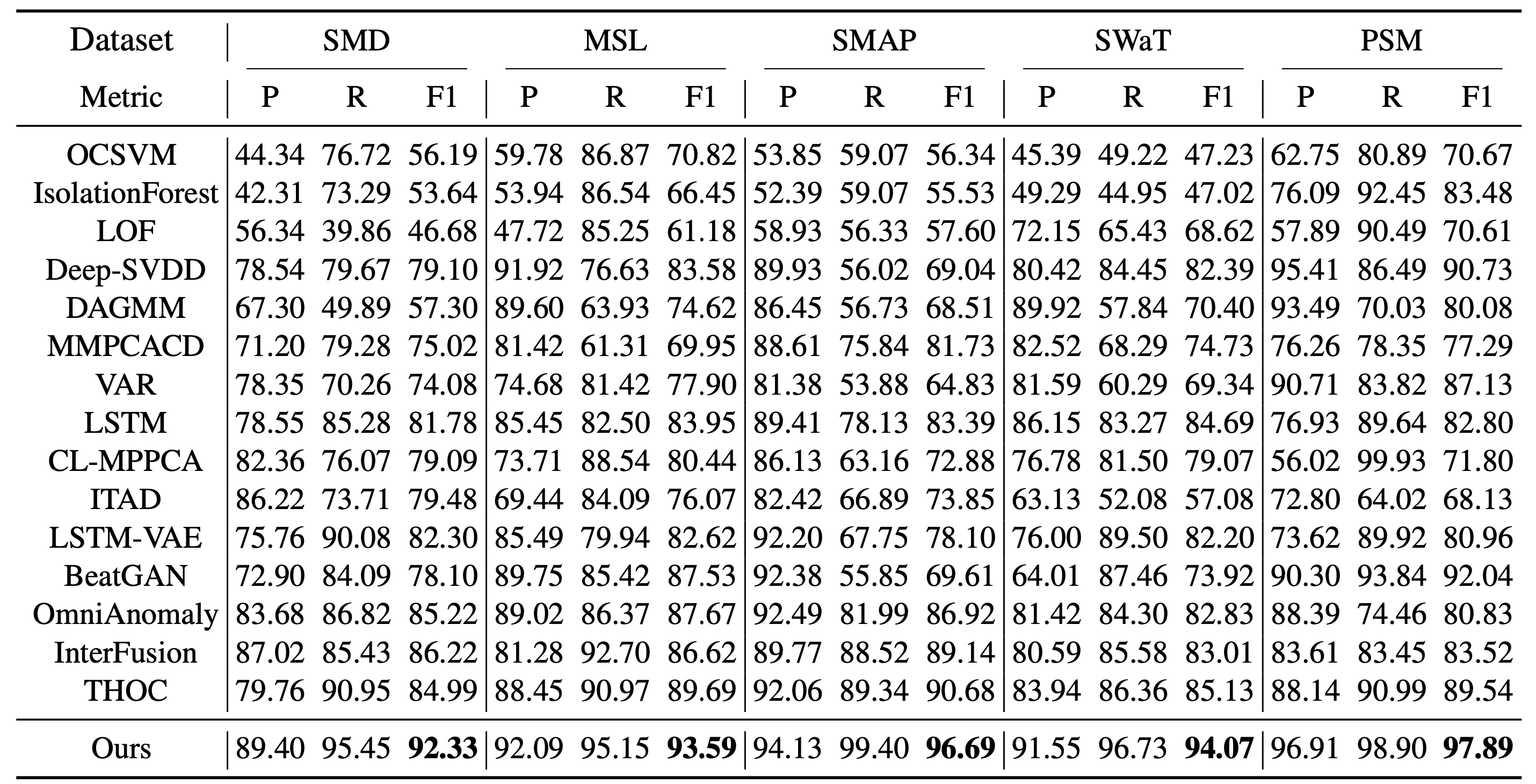

bash ./scripts/PSM.shWe compare our model with 15 baselines, including THOC, InterFusion, etc. Generally, Anomaly-Transformer achieves SOTA.

If you find this repo useful, please cite our paper.

```bibtex
@inproceedings{xu2022anomaly,
  title={Anomaly Transformer: Time Series Anomaly Detection with Association Discrepancy},
  author={Jiehui Xu and Haixu Wu and Jianmin Wang and Mingsheng Long},
  booktitle={International Conference on Learning Representations},
  year={2022},
  url={https://openreview.net/forum?id=LzQQ89U1qm_}
}
```

If you have any questions, please contact xjh20@mails.tsinghua.edu.cn or whx20@mails.tsinghua.edu.cn.