This repo contains code for two papers.

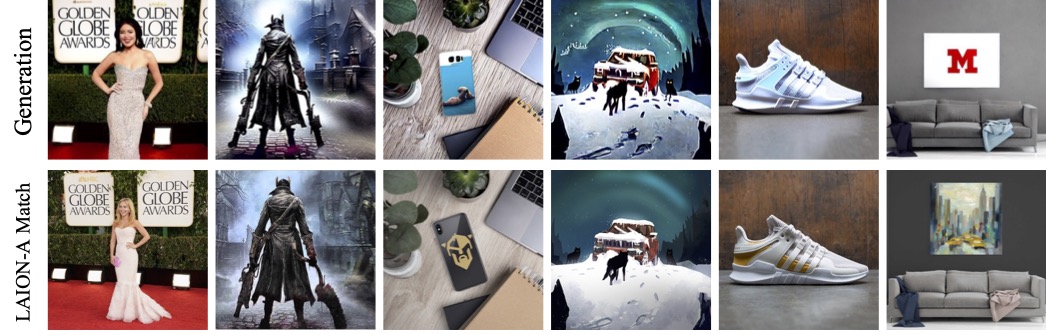

- Diffusion Art or Digital Forgery? Investigating Data Replication in Diffusion Models (CVPR'23) - paper link

- Understanding and Mitigating Copying in Diffusion Models (NeurIPS'23) - paper link

Install conda environment

    conda env create -f env.yaml
    conda activate diffrep

We used RTX-A6000 machines to train the models. For inference or to compute the metrics, smaller machines will do.

    accelerate launch diff_train.py \
      --pretrained_model_name_or_path stabilityai/stable-diffusion-2-1 \
      --instance_data_dir <training_data_path> \
      --resolution=256 --gradient_accumulation_steps=1 --center_crop --random_flip \
      --learning_rate=5e-6 --lr_scheduler constant_with_warmup \
      --lr_warmup_steps=5000 --max_train_steps=100000 \
      --train_batch_size=16 --save_steps=10000 --modelsavesteps 20000 --duplication <duplication_style> \
      --output_dir=<path_to_save_model> --class_prompt <conditioning_style> --instance_prompt_loc <path_to_captions_json>

- `<duplication_style>` options are `nodup`, `dup_both`, `dup_image`.
- `<conditioning_style>` options are `nolevel`, `classlevel`, `instancelevel_blip`, `instancelevel_random`.
- To train a model with mitigation, set `--trainspecial <traintime_mitigation_strategy>`. The available options are `allcaps`, `randrepl`, `randwordadd`, `wordrepeat`.
- For Gaussian noise addition in training, set `--rand_noise_lam` to a non-zero value in the range `[0,1]`.
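The `--trainspecial` option names suggest caption-level perturbations applied during training. The sketch below illustrates one plausible reading of each option. It is not taken from `diff_train.py`: the function name `perturb_caption`, the `prob` parameter, the `FILLER_WORDS` vocabulary, and the exact behavior of each strategy are assumptions made for illustration.

```python
import random

# Hypothetical filler vocabulary; this README does not say where the
# repository draws its random words from.
FILLER_WORDS = ["river", "amber", "window", "quiet", "marble", "signal"]


def perturb_caption(caption, strategy, rng, prob=1.0):
    """Return a perturbed copy of `caption` under assumed strategy semantics.

    `prob` is the chance that the perturbation is applied at all.
    """
    words = caption.split()
    if not words or rng.random() >= prob:
        return caption
    if strategy == "allcaps":
        # Assumed: upper-case the whole caption.
        return caption.upper()
    if strategy == "randrepl":
        # Assumed: replace the caption with random words of the same length.
        return " ".join(rng.choice(FILLER_WORDS) for _ in words)
    if strategy == "randwordadd":
        # Assumed: insert one random word at a random position.
        pos = rng.randint(0, len(words))
        return " ".join(words[:pos] + [rng.choice(FILLER_WORDS)] + words[pos:])
    if strategy == "wordrepeat":
        # Assumed: repeat one existing word at a random position.
        pos = rng.randint(0, len(words))
        return " ".join(words[:pos] + [rng.choice(words)] + words[pos:])
    raise ValueError(f"unknown strategy: {strategy}")


if __name__ == "__main__":
    rng = random.Random(0)
    for name in ("allcaps", "randrepl", "randwordadd", "wordrepeat"):
        print(name, "->", perturb_caption("a dog on a beach", name, rng))
```

Passing an explicit `random.Random` instance keeps the perturbations reproducible for a given seed.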

    python diff_inference.py --modelpath <path_to_finetuned_model> -nb <number_of_inference_generations>

This script computes similarity scores, FID scores, and a few other metrics, and logs them to wandb.

    python diff_retrieval.py --arch resnet50_disc --similarity_metric dotproduct --pt_style sscd --dist-url 'tcp://localhost:10001' --world-size 1 --rank 0 --query_dir <path_to_generated_data> --val_dir <path_to_training_data>
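As a rough illustration of what a `dotproduct` similarity metric between generated and training images involves, the sketch below finds each generated image's closest training image by dot product over feature vectors. It is not the code in `diff_retrieval.py`. The helper names are hypothetical, the features are plain Python lists standing in for descriptors from a copy-detection model such as SSCD, and L2 normalization before the dot product is an assumption.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def top_match_scores(query_feats, train_feats):
    """For each query vector, return (best_score, index_of_best_training_vector).

    With unit-length vectors the dot product equals cosine similarity,
    so a score near 1.0 flags a likely near-copy of a training image.
    """
    train_unit = [l2_normalize(t) for t in train_feats]
    results = []
    for q in query_feats:
        q_unit = l2_normalize(q)
        scores = [sum(a * b for a, b in zip(q_unit, t)) for t in train_unit]
        best = max(range(len(scores)), key=scores.__getitem__)
        results.append((scores[best], best))
    return results


if __name__ == "__main__":
    generated = [[1.0, 0.0], [1.0, 2.0]]   # toy "generated image" features
    training = [[2.0, 0.0], [0.0, 3.0]]    # toy "training image" features
    for score, idx in top_match_scores(generated, training):
        print(f"closest training index {idx}, similarity {score:.3f}")
```

At realistic dataset sizes this nested loop would be replaced by a batched matrix product on the GPU. The loop form is used here only to keep the logic visible.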

For train-time strategies, train with the `--trainspecial` option, then run inference and compute metrics with the `diff_inference.py` and `diff_retrieval.py` commands shown above.

For inference-time strategies:

    python sd_mitigation.py --rand_noise_lam 0.1 --seed 2
    python sd_mitigation.py --rand_augs rand_word_repeat --seed 2
    python sd_mitigation.py --rand_augs rand_word_add --seed 2
    python sd_mitigation.py --rand_augs rand_numb_add --seed 2
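To make the role of `--rand_noise_lam` concrete, here is a minimal sketch of scaled Gaussian noise added to a conditioning vector. It assumes the noise is applied element-wise to the text embedding and that the flag's value acts as a standard-deviation multiplier. Neither detail is confirmed by this README, and `add_gaussian_noise` is a hypothetical helper, not a function from `sd_mitigation.py`.

```python
import random


def add_gaussian_noise(embedding, lam, rng):
    """Return a copy of `embedding` with lam * N(0, 1) noise added per element.

    Assumption: `lam` plays the role of --rand_noise_lam and lies in [0, 1].
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam is expected to lie in [0, 1]")
    return [x + lam * rng.gauss(0.0, 1.0) for x in embedding]


if __name__ == "__main__":
    rng = random.Random(2)               # fixed seed, mirroring --seed 2
    text_embedding = [0.5, -1.2, 0.3]    # toy stand-in for a prompt embedding
    print(add_gaussian_noise(text_embedding, 0.1, rng))
```

With `lam = 0` the embedding is returned unchanged. Larger values push the conditioning further from the original prompt.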

Download the LAION-10k split here.

This repository is released under the Apache 2.0 license as found in the LICENSE file.

Citation for Diffusion Art or Digital Forgery? Investigating Data Replication in Diffusion Models

    @inproceedings{somepalli2023diffusion,
      title={Diffusion art or digital forgery? investigating data replication in diffusion models},
      author={Somepalli, Gowthami and Singla, Vasu and Goldblum, Micah and Geiping, Jonas and Goldstein, Tom},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      pages={6048--6058},
      year={2023}
    }

Citation for Understanding and Mitigating Copying in Diffusion Models

    @article{somepalli2023understanding,
      title={Understanding and Mitigating Copying in Diffusion Models},
      author={Somepalli, Gowthami and Singla, Vasu and Goldblum, Micah and Geiping, Jonas and Goldstein, Tom},
      journal={arXiv preprint arXiv:2305.20086},
      year={2023}
    }