University of Pennsylvania, CIS 565: GPU Programming and Architecture, Final Project

Sanura Jewell

- Tested on: Linux pop-os 5.11.0-7614-generic, i7-9750H CPU @ 2.60GHz 32GB, GeForce GTX 1650 Mobile / Max-Q 4GB

Yuxuan Zhu

- Tested on: Windows 10, i7-7700HQ @ 2.80GHz 16GB, GTX 1050 4096MB (Personal Laptop)

We created a WebGPU-based image super-resolution program. Under the hood, it runs a neural network based on ESRGAN. The input is any RGB image the user provides, and the output is the same image upscaled 4x along each dimension. The program runs in Chrome Canary with the flag `--enable-unsafe-webgpu`. All computation happens on the client side, so uploaded images never leave the user's machine. No privacy concerns! We currently support upscaling medium-sized images. For example, a typical GPU with 4 GB of memory can upscale images of about 400 px by 400 px. The size limit and runtime vary with the capabilities of the client's hardware.

- Download and install Chrome Canary/Chrome Dev/Firefox Nightly

- Enable WebGPU

  - In Chrome Canary/Dev, enter chrome://flags/#enable-unsafe-webgpu in the address bar and enable WebGPU

  - In Firefox Nightly, enter about:preferences#experimental in the address bar and check the box for "Web API: WebGPU"

- Visit https://sona1111.github.io/webgpu-super-resolution/

- Voilà, now you can increase the resolution of any image that you upload!

| Original (320 x 214) | ESRGAN_REAL (1280 x 856) |
|---|---|

| Original (448 x 299) | ESRGAN_ANIME (1792 x 1196) |
|---|---|

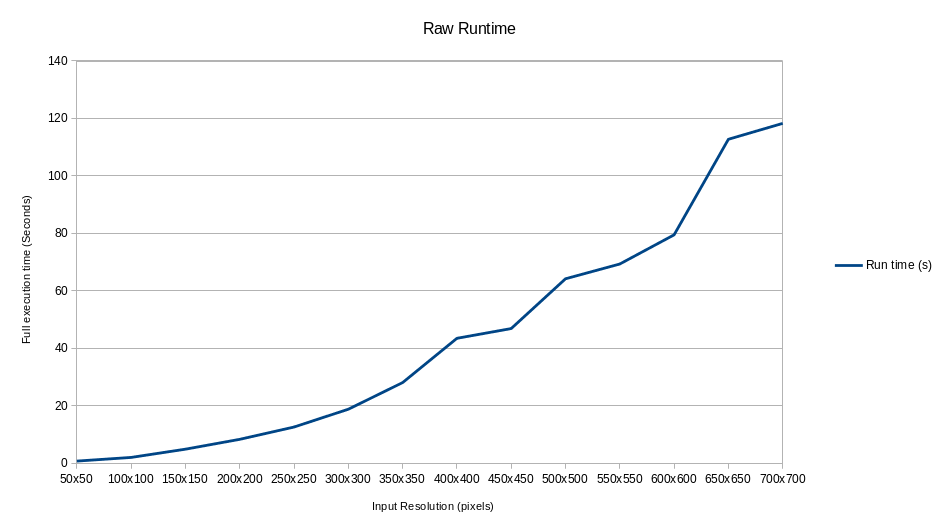

The graph below shows the runtime of our super-resolution program as a function of the input image dimension. The runtime grows quadratically with the side length, because the number of pixels in an image grows quadratically with the side length. In terms of pixel count, the time complexity of our program is therefore roughly linear: O(number of pixels).

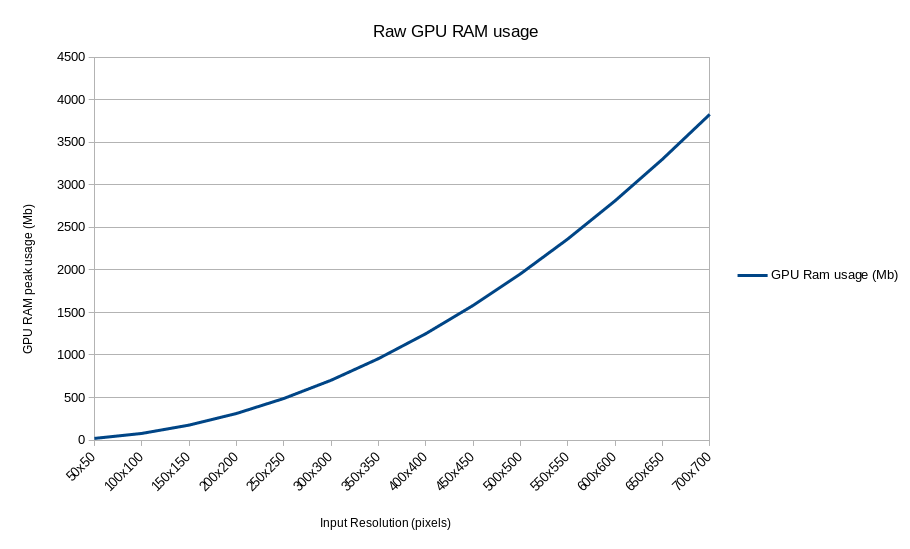

The graph below shows the GPU memory usage of our super resolution program with respect to the input image dimension. The memory usage also grows quadratically, so the space complexity of our program is also O(number of pixels).
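To make the quadratic growth concrete, here is a minimal back-of-the-envelope sketch of how the size of a single intermediate feature buffer scales with the input side length. The helper name `feature_map_bytes`, the 64-channel width, and the 4-byte float storage are assumptions chosen for illustration, not measurements from our program. Total GPU usage also depends on how many such buffers are alive at once, which this sketch does not model.

```python
def feature_map_bytes(width, height, channels=64, bytes_per_value=4):
    """Bytes needed for one feature buffer of shape channels x height x width.

    channels=64 and bytes_per_value=4 (float32) are assumed values for
    illustration only.
    """
    return width * height * channels * bytes_per_value


if __name__ == "__main__":
    for side in (100, 200, 400):
        mib = feature_map_bytes(side, side) / 2**20
        print(f"{side} x {side}: {mib:.2f} MiB per buffer")
```

Doubling the side length quadruples the buffer size, which matches the O(number of pixels) behavior described above.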


Since WebGPU is a new API still in development, few libraries exist to support a neural network inference engine. We found libraries such as WebGPU_BLAS, but it does not support efficient matrix multiplication for arbitrary matrix sizes, so we decided to implement everything from scratch. We implemented the following layers in WGSL.

- convolution layer

- leaky relu layer

- convolution layer fused with leaky relu

- residual with scaling layer

- 2 x up-sampling layer

- addition layer
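As a rough guide to what the element-wise layers in the list above compute, here is a CPU reference sketch in plain Python. It is not our WGSL code. Tensors are nested lists indexed `[channel][row][column]`, the function names are hypothetical, and the leaky ReLU slope of 0.2, the residual scale of 0.2, and nearest-neighbor up-sampling are assumed defaults rather than values stated in this document.

```python
def leaky_relu(x, slope=0.2):
    """Keep non-negative values, multiply negative values by `slope` (assumed 0.2)."""
    return [[[v if v >= 0 else slope * v for v in row] for row in ch] for ch in x]


def add(a, b):
    """Element-wise sum of two tensors with the same shape."""
    return [[[va + vb for va, vb in zip(ra, rb)]
             for ra, rb in zip(ca, cb)]
            for ca, cb in zip(a, b)]


def residual_scale(x, branch, scale=0.2):
    """Residual with scaling: x + scale * branch (scale assumed 0.2)."""
    return [[[vx + scale * vb for vx, vb in zip(rx, rb)]
             for rx, rb in zip(cx, cb)]
            for cx, cb in zip(x, branch)]


def upsample2x_nearest(x):
    """2x up-sampling: repeat every value twice along both spatial axes."""
    out = []
    for ch in x:
        rows = []
        for row in ch:
            wide = [v for v in row for _ in range(2)]
            rows.append(wide)
            rows.append(list(wide))  # duplicate the widened row
        out.append(rows)
    return out
```

Each of these maps one output element to a small, fixed set of input elements, which is why they translate naturally to one GPU thread per output value.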

Since we are implementing specifically for ESRGAN, we tailored our implementation to its parameters. For example, every convolution layer in ESRGAN operates on an input_channel x 3 x 3 patch. Instead of a triple for loop over the patch, we use a single for loop over the input channels and manually unroll the inner double loop into 9 segments, one per kernel position. Manual unrolling is also necessary because WGSL provides no loop-unrolling directive. After constructing the network architecture, we load the weights from Real-ESRGAN to perform inference.
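The unrolling described above can be sketched on the CPU as follows. This is an illustrative Python reference, not our WGSL shader. It computes a single output value for a single output channel, assumes the input has already been padded so that `(y, x)` is the top-left corner of the 3 x 3 patch, and uses hypothetical function names.

```python
def conv3x3_pixel_loops(inp, w, y, x):
    """Reference version: triple loop over (channel, kernel row, kernel column)."""
    acc = 0.0
    for c in range(len(inp)):
        for ky in range(3):
            for kx in range(3):
                acc += w[c][ky][kx] * inp[c][y + ky][x + kx]
    return acc


def conv3x3_pixel_unrolled(inp, w, y, x):
    """Single loop over input channels; the 3 x 3 taps are written out by hand."""
    acc = 0.0
    for c in range(len(inp)):
        p, k = inp[c], w[c]
        acc += k[0][0] * p[y][x]
        acc += k[0][1] * p[y][x + 1]
        acc += k[0][2] * p[y][x + 2]
        acc += k[1][0] * p[y + 1][x]
        acc += k[1][1] * p[y + 1][x + 1]
        acc += k[1][2] * p[y + 1][x + 2]
        acc += k[2][0] * p[y + 2][x]
        acc += k[2][1] * p[y + 2][x + 1]
        acc += k[2][2] * p[y + 2][x + 2]
    return acc


if __name__ == "__main__":
    # Two input channels of a 4 x 4 (already padded) image, simple test values.
    inp = [[[float(c + r + col) for col in range(4)] for r in range(4)]
           for c in range(2)]
    w = [[[0.5 * (ky - kx + c) for kx in range(3)] for ky in range(3)]
         for c in range(2)]
    print(conv3x3_pixel_loops(inp, w, 0, 0) == conv3x3_pixel_unrolled(inp, w, 0, 0))
```

Both versions accumulate the same nine products per channel in the same order. The unrolled form simply removes the inner loop counters and bounds checks.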

- Support for larger images

- Further improve runtime

- Include more variants of ESRGAN fine-tuned on different tasks