Paper URL at IFMLab: http://www.ifmlab.org/files/paper/graph_toolformer.pdf

Paper description in Chinese: 文章中文介绍 (an introduction to the paper, written in Chinese)

See the shared environment.yml file. Create a local environment on your machine with the command:

conda env create -f environment.yml

For packages that cannot be installed with the above conda command, consider installing them via pip instead.

(Also add \usepackage{hyperref} to your paper so that the URL in the reference entry is typeset correctly.)

@article{zhang2023graph,

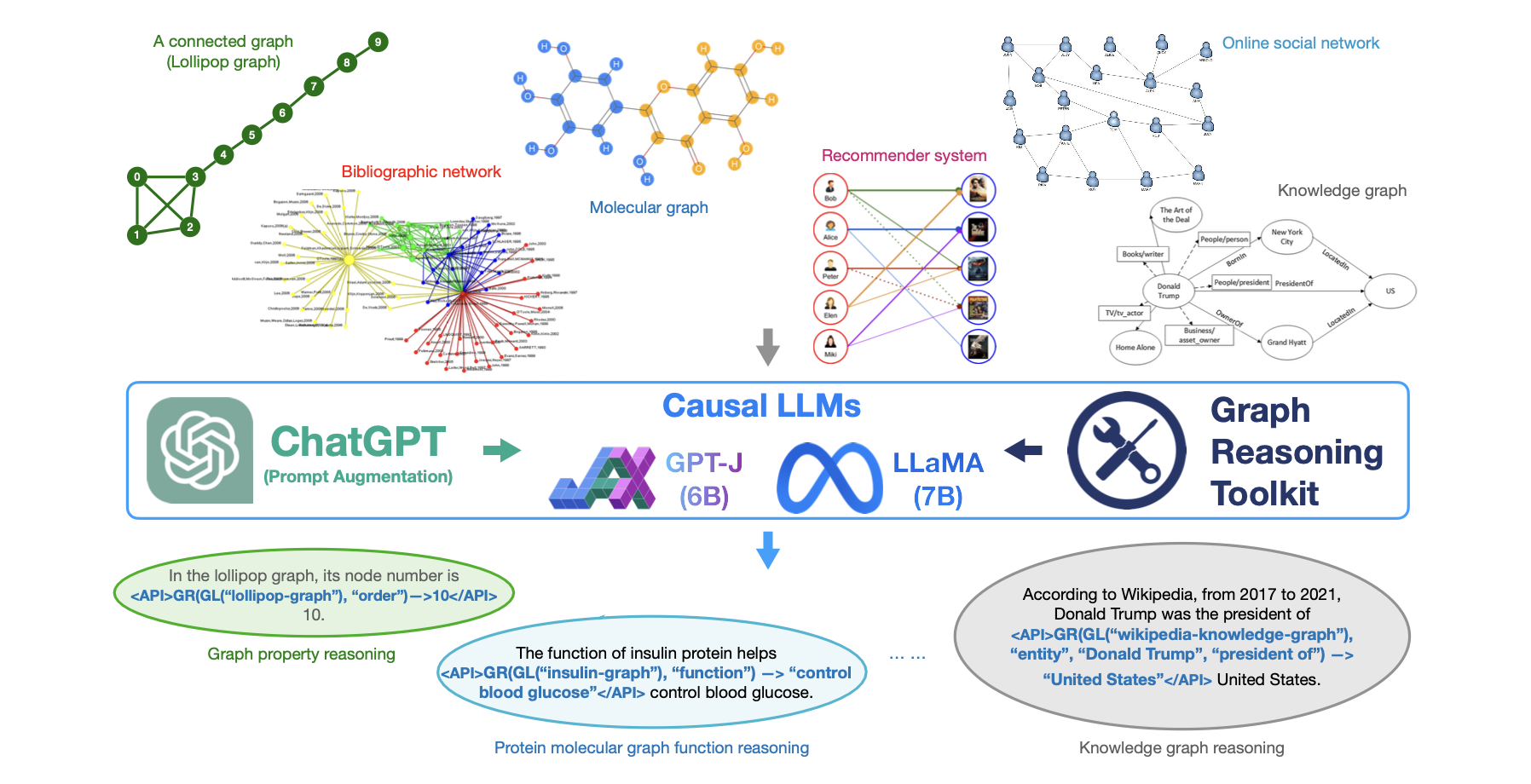

title={Graph-ToolFormer: To Empower LLMs with Graph Reasoning Ability via Prompt Augmented by ChatGPT},

author={Zhang, Jiawei},

note = {\url{http://www.ifmlab.org/files/paper/graph_toolformer.pdf}},

year={2023},

note = "[Online; Published 10-April-2023]"

}

- Polish the framework: 8/9 done
  - Add working memory module
  - Add query parser module
  - Add query executor module
  - Add graph dataset hub
  - Add graph model hub
  - Add graph reasoning task hub
  - Add LLM model hub
  - Add LLM trainer module
  - Add CLI for interaction
- Expand the framework: 0/4 done
  - Add and test more LLMs
  - Include more graph datasets
  - Add more pre-trained graph models
  - Include more graph reasoning tasks
- Release the framework and service: 0/4 done
  - Implement the CLI with GUI panel
  - Provide the demo for graph reasoning
  - Release web access and service
  - Add API for customized graph reasoning

After adding the required dataset, run the model with "python gtoolformer_gptj_script.py".

A simpler template of the code is also available at http://www.ifmlab.org/files/template/IFM_Lab_Program_Template_Python3.zip

(1) data.py (for data loading and basic data organization operators, defines abstract method load() )

(2) method.py (for complex operations on the data, defines abstract method run() )

(3) result.py (for saving/loading results from files, defines abstract methods load() and save() )

(4) evaluate.py (for result evaluation, defines abstract method evaluate() )

(5) setting.py (for experiment settings, defines abstract method load_run_save_evaluate() )

The base classes for these five parts are defined in ./code/base_class/. They are all abstract classes that define the templates and architecture of the code.

The inherited classes are provided in ./code. They inherit from the base classes and implement the abstract methods.
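To illustrate the split between abstract templates and inherited classes, here is a minimal sketch using Python's abc module. The class names (DatasetBase, MethodBase, InMemoryDataset, and so on) are hypothetical and chosen only for illustration; they are not the names used in ./code/base_class/. Only the abstract method names come from the five-part description above.

```python
from abc import ABC, abstractmethod


class DatasetBase(ABC):
    """Template for data.py: data loading and basic organization."""

    @abstractmethod
    def load(self):
        """Return the loaded data."""


class MethodBase(ABC):
    """Template for method.py: complex operations on the data."""

    def __init__(self):
        self.data = None  # assumed to be filled in before run() is called

    @abstractmethod
    def run(self):
        """Run the method on self.data and return its output."""


class ResultBase(ABC):
    """Template for result.py: saving and loading results."""

    @abstractmethod
    def save(self):
        """Write results to storage."""

    @abstractmethod
    def load(self):
        """Read results back from storage."""


class EvaluateBase(ABC):
    """Template for evaluate.py: result evaluation."""

    @abstractmethod
    def evaluate(self):
        """Return an evaluation score."""


class SettingBase(ABC):
    """Template for setting.py: ties the other four parts together."""

    @abstractmethod
    def load_run_save_evaluate(self):
        """Execute the full experiment."""


class InMemoryDataset(DatasetBase):
    """Hypothetical inherited class that implements load()."""

    def __init__(self, records):
        self.records = list(records)

    def load(self):
        return {"X": self.records}


def can_instantiate(cls, *args):
    """Return True if cls(*args) succeeds; abstract classes raise TypeError."""
    try:
        cls(*args)
        return True
    except TypeError:
        return False
```

With this layout, can_instantiate(DatasetBase) is False because load() is still abstract, while InMemoryDataset can be created and used directly. This is the usual reason for keeping templates abstract: a subclass that forgets to implement a required method fails at construction time rather than midway through an experiment.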

(1) DatasetLoader.py (for dataset loading)

(1) Method_Graph_Toolformer_GPTJ.py (Graph-ToolFormer implementation with GPT-J 6B, 8-bit)

(2) Module_GPTJ_8bit.py (the GPT-J module with 8-bit quantization)

(1) ResultSaving.py (for saving results to file)

(2) Result_Loader.py (for loading results from file)

(1) Evaluate_Metrics.py (implementation of the evaluation metrics used in this experiment, including ROUGE scores, BLEU scores, etc.)

(1) Setting_Regular.py (defines the interactions and data exchange among the above classes)
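The data exchange among these classes can be sketched as a small load, run, save, evaluate pipeline. Everything below is a toy stand-in written under assumptions: the class names, the attribute-passing convention, the echo "model", and the unigram-overlap F1 score (a simplified ROUGE-1-style measure) are hypothetical and do not reproduce the code in Setting_Regular.py or Evaluate_Metrics.py.

```python
import json
import os
import tempfile
from collections import Counter


class ToyDatasetLoader:
    """Hypothetical loader: holds (input, reference) pairs in memory."""

    def __init__(self, pairs):
        self.pairs = pairs

    def load(self):
        return {
            "inputs": [p[0] for p in self.pairs],
            "references": [p[1] for p in self.pairs],
        }


class EchoMethod:
    """Stand-in for a language model: returns each input as its prediction."""

    data = None

    def run(self):
        return {
            "predictions": list(self.data["inputs"]),
            "references": list(self.data["references"]),
        }


class JsonResultSaver:
    """Writes the method output to a JSON file."""

    data = None

    def __init__(self, path):
        self.path = path

    def save(self):
        with open(self.path, "w") as f:
            json.dump(self.data, f)


def unigram_f1(prediction, reference):
    """F1 over overlapping whitespace tokens (simplified ROUGE-1 style)."""
    pred, ref = Counter(prediction.split()), Counter(reference.split())
    overlap = sum((pred & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(pred.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


class UnigramF1Evaluate:
    """Averages unigram F1 over all prediction/reference pairs."""

    data = None

    def evaluate(self):
        scores = [
            unigram_f1(p, r)
            for p, r in zip(self.data["predictions"], self.data["references"])
        ]
        return sum(scores) / len(scores)


class ToySetting:
    """Wires the four parts together, as a setting class is described to do."""

    def __init__(self, dataset, method, result, evaluate):
        self.dataset, self.method = dataset, method
        self.result, self.evaluate = result, evaluate

    def load_run_save_evaluate(self):
        self.method.data = self.dataset.load()   # load
        output = self.method.run()               # run
        self.result.data = output
        self.result.save()                       # save
        self.evaluate.data = output
        return self.evaluate.evaluate()          # evaluate


def run_demo():
    pairs = [("the cat sat", "the cat sat"), ("a b c d", "a b x y")]
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "result.json")
        setting = ToySetting(
            ToyDatasetLoader(pairs), EchoMethod(),
            JsonResultSaver(path), UnigramF1Evaluate(),
        )
        score = setting.load_run_save_evaluate()
        with open(path) as f:
            saved = json.load(f)
    return score, saved
```

Calling run_demo() returns the mean score together with the saved JSON content. Because the setting object only relies on load(), run(), save(), and evaluate(), any one part can be swapped (for example, a different metric) without touching the others.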