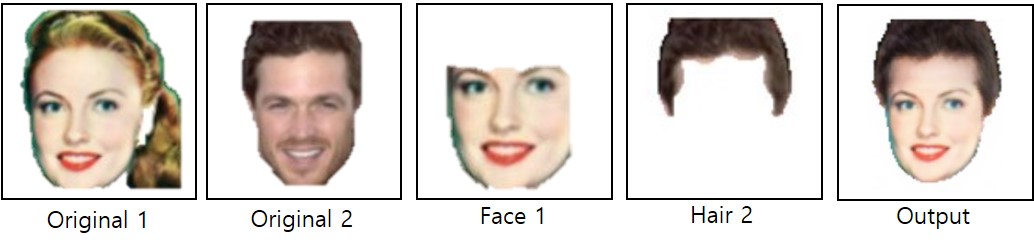

Inspired by PIVTONS, we created a method for viewing a face with different hairstyles.

Given two inputs, a segmented face and a segmented hair region, a generator outputs an image that combines the features of both inputs. This lets one view a face with a different hairstyle.

It is nearly impossible to treat this as a supervised learning problem, because a suitable dataset is very hard to obtain: it would need ground-truth photos of the same person wearing each target hairstyle.

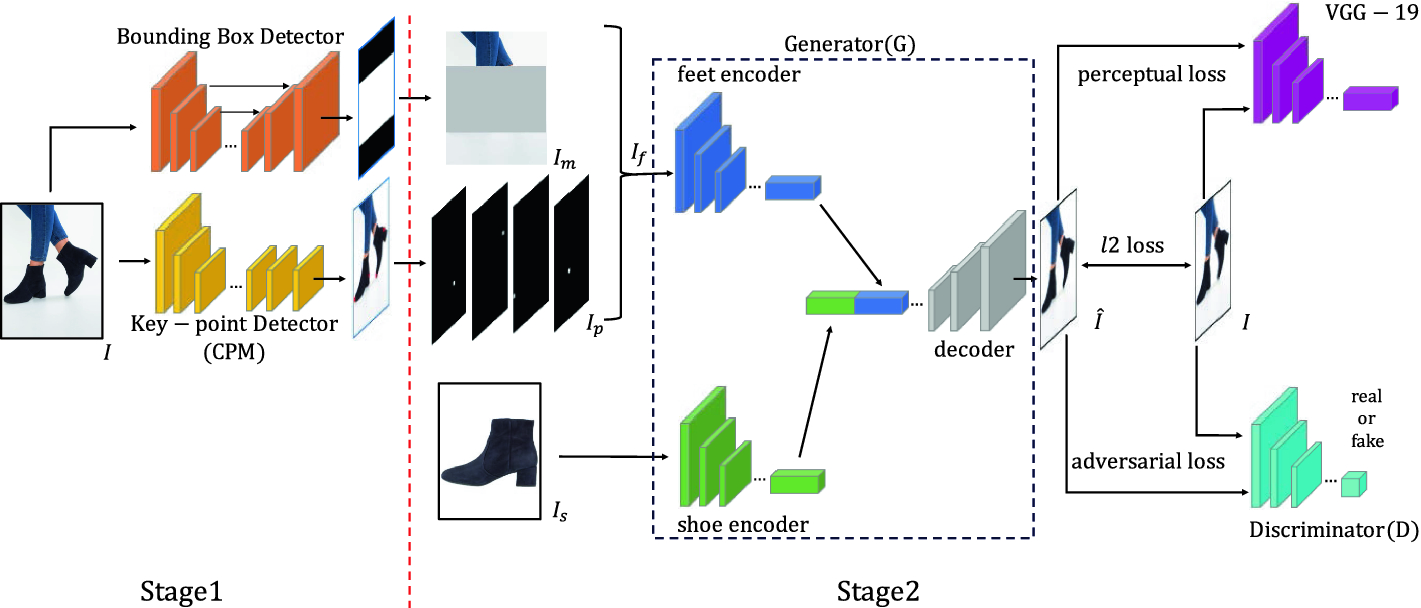

Instead, we adapt an image-completion training method with three losses (adversarial, perceptual and L2) to swap hair.
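The image-completion idea can be sketched as follows. During training, the face and hair segments are cut from the *same* photo, so the untouched photo is the ground truth the generator must reconstruct. This is a minimal sketch assuming grayscale images stored as nested lists and binary masks already produced by some face/hair segmenter. The helper names (`apply_mask`, `trim_face_mask`, `make_training_example`) and the `eyebrow_row` / ear-column bounds, which mirror the eyebrow and ear limits in the setup step, are hypothetical and not taken from the original code.

```python
from typing import List, Tuple

Image = List[List[float]]  # H x W grayscale pixels (illustration only)
Mask = List[List[int]]     # 1 = pixel belongs to the region, 0 = outside


def apply_mask(image: Image, mask: Mask) -> Image:
    """Keep pixels inside the mask and set every other pixel to 0."""
    return [
        [px if m else 0.0 for px, m in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


def trim_face_mask(face_mask: Mask, eyebrow_row: int,
                   left_ear_col: int, right_ear_col: int) -> Mask:
    """Keep only face-mask pixels at or below the eyebrows and between the
    ears (hypothetical bounds, e.g. from a landmark detector), so bangs are
    less likely to leak into the face input."""
    return [
        [m if r >= eyebrow_row and left_ear_col <= c <= right_ear_col else 0
         for c, m in enumerate(row)]
        for r, row in enumerate(face_mask)
    ]


def make_training_example(image: Image, face_mask: Mask, hair_mask: Mask,
                          eyebrow_row: int, left_ear_col: int,
                          right_ear_col: int) -> Tuple[Image, Image, Image]:
    """Return (face input, hair input, target). Both inputs come from the same
    photo, so the photo itself is the image the generator should complete."""
    face = apply_mask(image, trim_face_mask(face_mask, eyebrow_row,
                                            left_ear_col, right_ear_col))
    hair = apply_mask(image, hair_mask)
    return face, hair, image
```

At inference time there is no target: `face` would come from one photo and `hair` from another, and the generator's output is the hair-swapped result. Training on same-photo pairs is what removes the need for the paired dataset that supervised learning would require.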

The training method closely follows that of PIVTONS:

- We set up the problem by segmenting the faces and hair in the CelebA dataset. Face segments are bounded by the eyebrows at the top and by the ears at the sides. This reduces the chance of bangs being included in the face segment.

- Three models are used:
  - Generator - reconstructs an image of the full face from a segmented face and segmented hair, trying to fool the discriminator
  - Discriminator - binary classifier that tries to distinguish original images from fake (generator output) ones
  - Pre-trained model - network used to compute the perceptual loss by comparing the feature maps it produces for the generated and real images

- A training step consists of:
  - Calculating the adversarial loss - how well the discriminator is fooled by the generated image
  - Calculating the perceptual loss - the difference between the pre-trained model's feature maps of the real and generated images
  - Calculating the L2 loss - the squared pixel-wise difference between the real and generated images
  - Updating the generator based on all three losses
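The training step above can be sketched as a weighted sum of the three losses. This is a minimal sketch under assumptions the original does not state: the adversarial term uses the common non-saturating form `-log D(G(x))`, the perceptual and L2 terms are mean squared errors, the pre-trained model's feature maps are passed in already computed and flattened (the original does not name the network), and the weights `w_adv`, `w_perc`, `w_l2` are hypothetical hyperparameters. The original only describes the generator update; `discriminator_loss`, plain binary cross-entropy, is likewise an assumption about how the binary classifier is trained.

```python
import math
from typing import List, Sequence

EPS = 1e-12  # guards against log(0)


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean squared difference between two equally sized flat vectors."""
    if len(a) != len(b) or not a:
        raise ValueError("inputs must be non-empty and the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def adversarial_loss(d_fake: Sequence[float]) -> float:
    """Non-saturating generator loss (assumed form). d_fake holds the
    discriminator's probabilities that each generated image is real; the
    loss is small when the discriminator is fooled."""
    return -sum(math.log(max(p, EPS)) for p in d_fake) / len(d_fake)


def perceptual_loss(feats_real: List[List[float]],
                    feats_fake: List[List[float]]) -> float:
    """Average MSE over layers between the pre-trained model's flattened
    feature maps of the real and the generated image."""
    return sum(mse(r, f) for r, f in zip(feats_real, feats_fake)) / len(feats_real)


def generator_loss(d_fake: Sequence[float],
                   feats_real: List[List[float]], feats_fake: List[List[float]],
                   real_px: Sequence[float], fake_px: Sequence[float],
                   w_adv: float = 1.0, w_perc: float = 1.0,
                   w_l2: float = 1.0) -> float:
    """Weighted sum of the adversarial, perceptual and L2 (pixel) losses."""
    return (w_adv * adversarial_loss(d_fake)
            + w_perc * perceptual_loss(feats_real, feats_fake)
            + w_l2 * mse(real_px, fake_px))


def discriminator_loss(d_real: Sequence[float], d_fake: Sequence[float]) -> float:
    """Binary cross-entropy with real images labelled 1 and generated images 0."""
    real_term = -sum(math.log(max(p, EPS)) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(max(1.0 - p, EPS)) for p in d_fake) / len(d_fake)
    return real_term + fake_term
```

In a real implementation these would be tensor operations in a deep-learning framework so that gradients of `generator_loss` flow back into the generator's weights; the plain lists here only show how the three terms are combined. The L2 term keeps the output close to the original pixels, the perceptual term keeps higher-level structure (such as hair texture) consistent, and the adversarial term pushes the result toward realistic-looking images.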