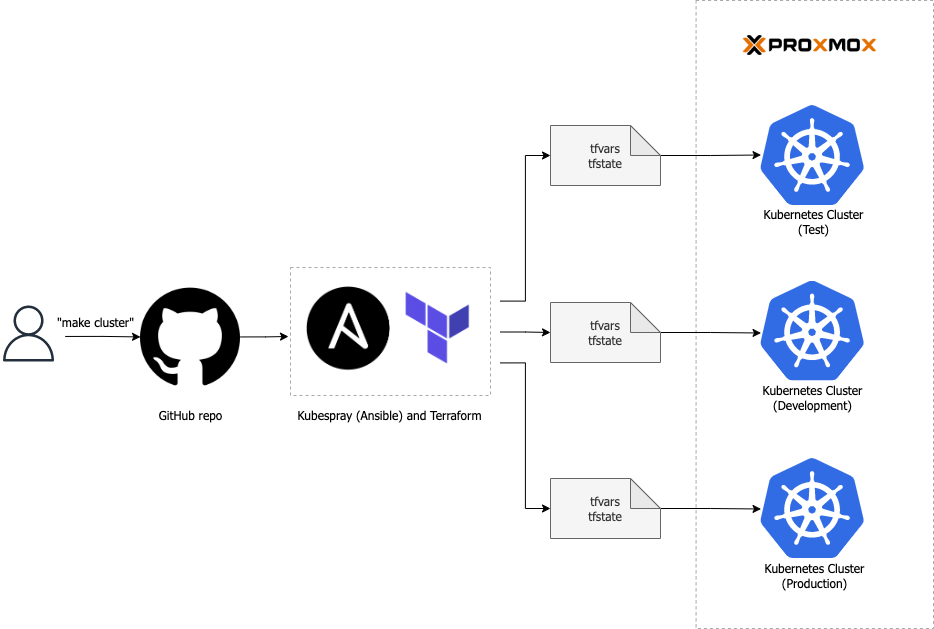

This project allows you to create a Kubernetes cluster on Proxmox VE using Terraform and Kubespray in a declarative manner.

Ensure the following software versions are installed:

- Proxmox VE `7.x` or `8.x`

- Terraform `>=1.3.3`

Kubespray will be set up automatically.

Before proceeding with the setup for Proxmox VE, make sure you have the following components in place:

- Internal network

- VM template

- SSH key pair

- Bastion host

Follow these steps to use the project:

- Clone the repo:

      $ git clone https://github.com/khanh-ph/proxmox-kubernetes.git

- Open the `example.tfvars` file in a text editor and update all the mandatory variables with your own values.

- Initialize the Terraform working directory:

      $ terraform init

- Generate an execution plan and review the output to ensure that the planned changes align with your expectations:

      $ terraform plan -var-file="example.tfvars"

- If you're satisfied with the plan and ready to apply the changes, run the following command:

      $ terraform apply -var-file="example.tfvars"
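As a rough illustration of the `example.tfvars` step, the entries marked as required in the variables table might be filled in along the lines of the sketch below. Every value here is a placeholder or an assumption (the host name, token name, node name, and storage pool name are hypothetical), so replace them with the details of your own Proxmox VE environment.

```hcl
# Sketch of the required entries in example.tfvars.
# All values are placeholders, not working credentials.

# Proxmox VE API endpoint and token (hypothetical host and token name)
pm_api_url          = "https://pve.example.internal:8006/api2/json"
pm_api_token_id     = "terraform@pve!k8s"
pm_api_token_secret = "00000000-0000-0000-0000-000000000000"

# true disables TLS verification; shown here on the assumption that
# the Proxmox VE server uses a self-signed certificate
pm_tls_insecure = true

# Proxmox node where the VMs are placed (hypothetical node name)
pm_host = "pve01"

# Storage pool for the OS disks (hypothetical pool name)
vm_os_disk_storage = "local-lvm"

# Base64-encoded SSH keys; substitute your own encoded values
ssh_private_key = "<base64-encoded private key>"
ssh_public_keys = "<base64-encoded public keys>"
```

Variables that have a default can be left out of the file until you need to override them.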

The project provides several Terraform variables that allow you to customize the cluster to suit your needs. Please see the following:

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| env_name | The stage of the development lifecycle for the k8s cluster. Example: prod, dev, qa, stage, test | `string` | `"test"` | no |
| location | The city or region where the cluster is provisioned | `string` | `null` | no |
| cluster_number | The instance count for the k8s cluster, to differentiate it from other clusters. Example: 00, 01 | `string` | `"01"` | no |
| cluster_domain | The cluster domain name | `string` | `"local"` | no |
| use_legacy_naming_convention | Whether to use legacy naming convention for the VM and cluster name. If your cluster was provisioned using version <= 3.x, set it to true | `bool` | `false` | no |
| pm_api_url | The base URL for Proxmox VE API. See https://pve.proxmox.com/wiki/Proxmox_VE_API#API_URL | `string` | n/a | yes |
| pm_api_token_id | The token ID to access Proxmox VE API. | `string` | n/a | yes |
| pm_api_token_secret | The UUID/secret of the token defined in the variable pm_api_token_id. | `string` | n/a | yes |
| pm_tls_insecure | Disable TLS verification while connecting to the Proxmox VE API server. | `bool` | n/a | yes |
| pm_host | The name of Proxmox node where the VM is placed. | `string` | n/a | yes |
| pm_parallel | The number of simultaneous Proxmox processes. E.g: creating resources. | `number` | `2` | no |
| pm_timeout | Timeout value (seconds) for proxmox API calls. | `number` | `600` | no |
| internal_net_name | Name of the internal network bridge | `string` | `"vmbr1"` | no |
| internal_net_subnet_cidr | CIDR of the internal network | `string` | `"10.0.1.0/24"` | no |
| ssh_private_key | SSH private key in base64, will be used by Terraform client to connect to the Kubespray VM after provisioning. We can set its sensitivity to false; otherwise, the output of the Kubespray script will be hidden. | `string` | n/a | yes |
| ssh_public_keys | SSH public keys in base64 | `string` | n/a | yes |
| vm_user | The default user for all VMs | `string` | `"ubuntu"` | no |
| vm_sockets | Number of the CPU socket to allocate to the VMs | `number` | `1` | no |
| vm_max_vcpus | The maximum CPU cores available per CPU socket to allocate to the VM | `number` | `2` | no |
| vm_cpu_type | The type of CPU to emulate in the Guest | `string` | `"host"` | no |
| vm_os_disk_storage | Default storage pool where OS VM disk is placed | `string` | n/a | yes |
| add_worker_node_data_disk | Whether to add a data disk to each worker node of the cluster | `bool` | `false` | no |
| worker_node_data_disk_storage | The storage pool where the data disk is placed | `string` | `""` | no |
| worker_node_data_disk_size | The size of worker node data disk in Gigabyte | `string` | `10` | no |
| vm_ubuntu_tmpl_name | Name of Cloud-init template Ubuntu VM | `string` | `"ubuntu-2404"` | no |
| bastion_ssh_ip | IP of the bastion host, could be either public IP or local network IP of the bastion host | `string` | `""` | no |
| bastion_ssh_user | The user to authenticate to the bastion host | `string` | `"ubuntu"` | no |
| bastion_ssh_port | The SSH port number on the bastion host | `number` | `22` | no |
| vm_k8s_control_plane | Control Plane VM specification | `object({ node_count = number, vcpus = number, memory = number, disk_size = number })` | `{` | no |
| vm_k8s_worker | Worker VM specification | `object({ node_count = number, vcpus = number, memory = number, disk_size = number })` | `{` | no |
| create_kubespray_host | Whether to provision the Kubespray as a VM | `bool` | `true` | no |
| kubespray_image | The Docker image to deploy Kubespray | `string` | `"quay.io/kubespray/kubespray:v2.25.0"` | no |
| kube_version | Kubernetes version | `string` | `"v1.29.5"` | no |
| kube_network_plugin | The network plugin to be installed on your cluster. Example: cilium, calico, kube-ovn, weave or flannel | `string` | `"calico"` | no |
| enable_nodelocaldns | Whether to enable nodelocal dns cache on your cluster | `bool` | `false` | no |
| podsecuritypolicy_enabled | Whether to enable pod security policy on your cluster (RBAC must be enabled either by having 'RBAC' in authorization_modes or kubeadm enabled) | `bool` | `false` | no |
| persistent_volumes_enabled | Whether to add Persistent Volumes Storage Class for corresponding cloud provider (supported: in-tree OpenStack, Cinder CSI, AWS EBS CSI, Azure Disk CSI, GCP Persistent Disk CSI) | `bool` | `false` | no |
| helm_enabled | Whether to enable Helm on your cluster | `bool` | `false` | no |
| ingress_nginx_enabled | Whether to enable Nginx ingress on your cluster | `bool` | `false` | no |
| argocd_enabled | Whether to enable ArgoCD on your cluster | `bool` | `false` | no |
| argocd_version | The ArgoCD version to be installed | `string` | `"v2.11.4"` | no |
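To show how the optional variables fit together, here is a minimal sketch of overrides that could be added to the same `.tfvars` file. The node counts, sizes, and the storage pool name are illustrative assumptions, not the project's defaults. The table does not state units for `memory` and `disk_size`, so the figures below assume MiB and GiB respectively; check the project's variable definitions before relying on them.

```hcl
# Optional overrides; every value is illustrative.
env_name       = "dev"
cluster_number = "02"

# Control plane and worker sizing, following the object type in the table.
# Units are an assumption: memory in MiB, disk_size in GiB.
vm_k8s_control_plane = {
  node_count = 1
  vcpus      = 2
  memory     = 4096
  disk_size  = 20
}

vm_k8s_worker = {
  node_count = 3
  vcpus      = 2
  memory     = 4096
  disk_size  = 20
}

# Attach an extra data disk to each worker node
add_worker_node_data_disk     = true
worker_node_data_disk_storage = "local-lvm" # hypothetical pool name
worker_node_data_disk_size    = "20"        # declared as a string in the table

# Swap the default network plugin and enable a few add-ons
kube_network_plugin   = "cilium"
helm_enabled          = true
ingress_nginx_enabled = true
```

After editing the file, rerun `terraform plan -var-file="example.tfvars"` to review the effect of the overrides before applying them.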

For more detailed instructions, refer to the following blog post: Create a Kubernetes cluster on Proxmox with Terraform & Kubespray