Note

For a fast start, use AWS Cloud9 with any instance size larger than 'small'.

us-east-1 and us-west-2 are the only supported regions. us-west-2 is the only region with Anthropic Claude 3 Opus.
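The region constraint above can be expressed as a small pre-flight check. This is a minimal sketch, not part of the repository: the function name `check_region` and the `wants_opus` flag are hypothetical, and the region sets simply restate the note above.

```python
# Regions named in the note above. The Opus set only captures the
# Claude 3 Opus restriction and is not a full model availability map.
SUPPORTED_REGIONS = {"us-east-1", "us-west-2"}
OPUS_REGIONS = {"us-west-2"}


def check_region(region: str, wants_opus: bool = False) -> str:
    """Return the region if it is usable, otherwise raise ValueError."""
    if region not in SUPPORTED_REGIONS:
        raise ValueError(
            f"{region} is not supported; use one of {sorted(SUPPORTED_REGIONS)}"
        )
    if wants_opus and region not in OPUS_REGIONS:
        raise ValueError("Claude 3 Opus is only available in us-west-2")
    return region
```

Calling `check_region("us-east-1", wants_opus=True)` raises an error, which is a cheap way to catch a wrong region before deploying.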

Clone this repo, then run setup.sh and deploy.sh:

git clone https://github.com/seandkendall/genai-bedrock-chatbot.git --depth 1

cd genai-bedrock-chatbot

./setup.sh

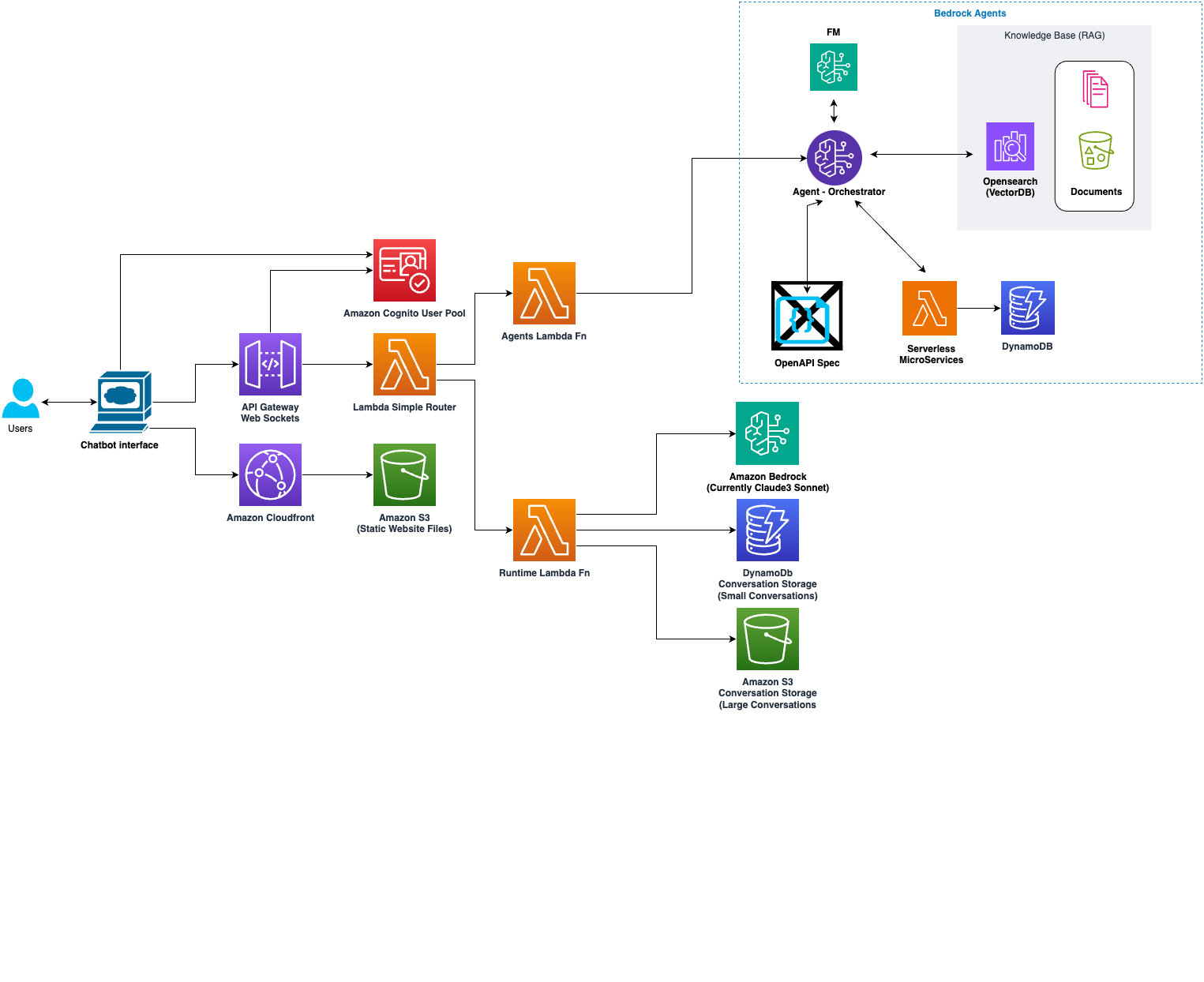

./deploy.sh

This is a serverless application that provides a chatbot interface using AWS services and the Anthropic Claude 3 language model. The application features two modes: Bedrock Agents and Bedrock. In Bedrock Agents mode, users can interact with Bedrock Agents powered by a knowledge base containing HSE (health and safety) data. In Bedrock mode, users can engage in open-ended conversations with the Claude 3 language model.

The application utilizes the following AWS services:

- AWS Lambda: Serverless functions for handling WebSocket connections, Bedrock Agent interactions, and Bedrock model invocations.

- AWS API Gateway WebSocket API: WebSocket API for real-time communication between the frontend and backend.

- AWS DynamoDB: Storing conversation histories and incident data.

- AWS S3: Hosting the website content and storing conversation histories.

- AWS CloudFront: Content Delivery Network for efficient distribution of the website.

- AWS Cognito: User authentication and management.

- AWS Bedrock: Bedrock Agents and Bedrock runtime for language model interactions.
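To make the WebSocket flow between API Gateway and Lambda more concrete, here is a minimal sketch of a handler that dispatches on the route key. The `requestContext.routeKey` and `requestContext.connectionId` fields are part of the API Gateway WebSocket event. The `mode` message field and the response bodies are assumptions for illustration and may not match the handlers in this repository.

```python
import json


def handler(event, context=None):
    """Dispatch an API Gateway WebSocket event by its route key."""
    request_context = event.get("requestContext", {})
    route = request_context.get("routeKey")
    connection_id = request_context.get("connectionId")

    if route == "$connect":
        return {"statusCode": 200, "body": "connected"}
    if route == "$disconnect":
        return {"statusCode": 200, "body": "disconnected"}

    # Any other route (typically "$default") carries a chat message.
    try:
        message = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return {"statusCode": 400, "body": "invalid JSON"}

    # Hypothetical field: "mode" selects Bedrock Agents or plain Bedrock.
    mode = message.get("mode", "bedrock")
    body = {"connectionId": connection_id, "mode": mode}
    return {"statusCode": 200, "body": json.dumps(body)}
```

In a real deployment the message branch would invoke Bedrock and push the reply back over the connection; that part is omitted here to keep the sketch self-contained.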


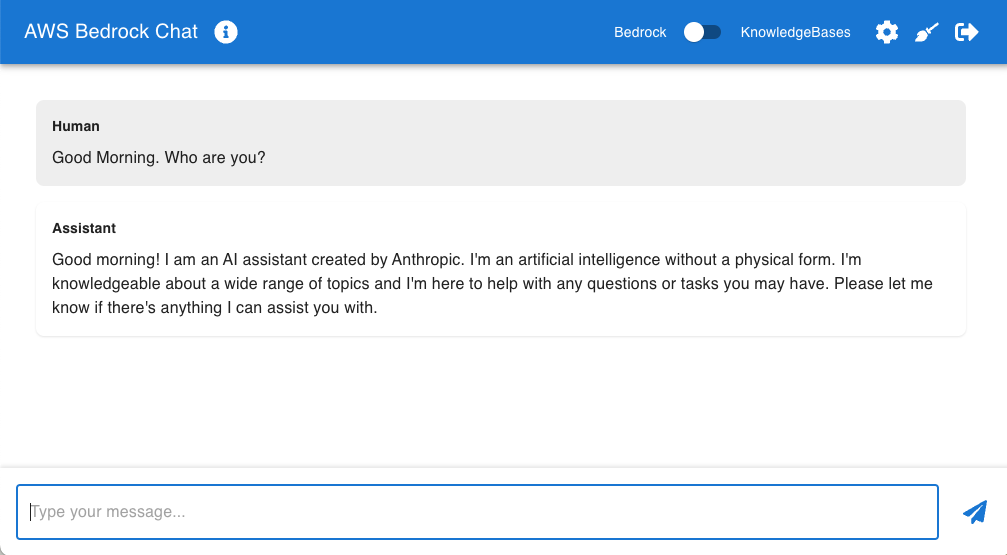

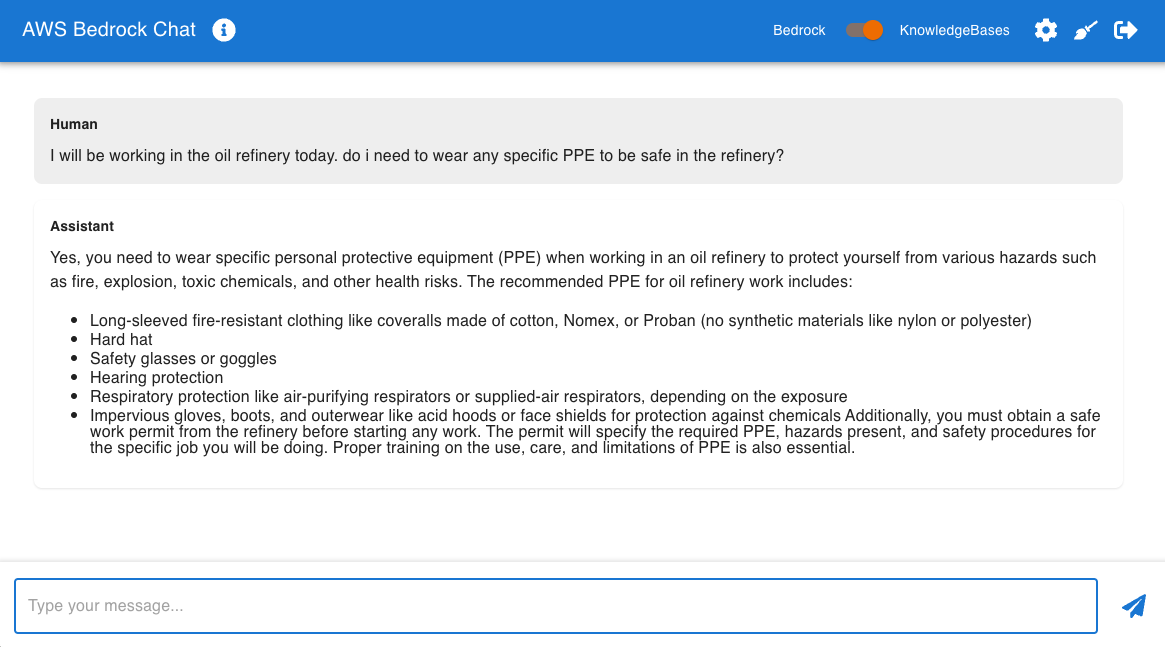

This is what the interface looks like. Here we are simply communicating with Anthropic Claude 3 Sonnet.


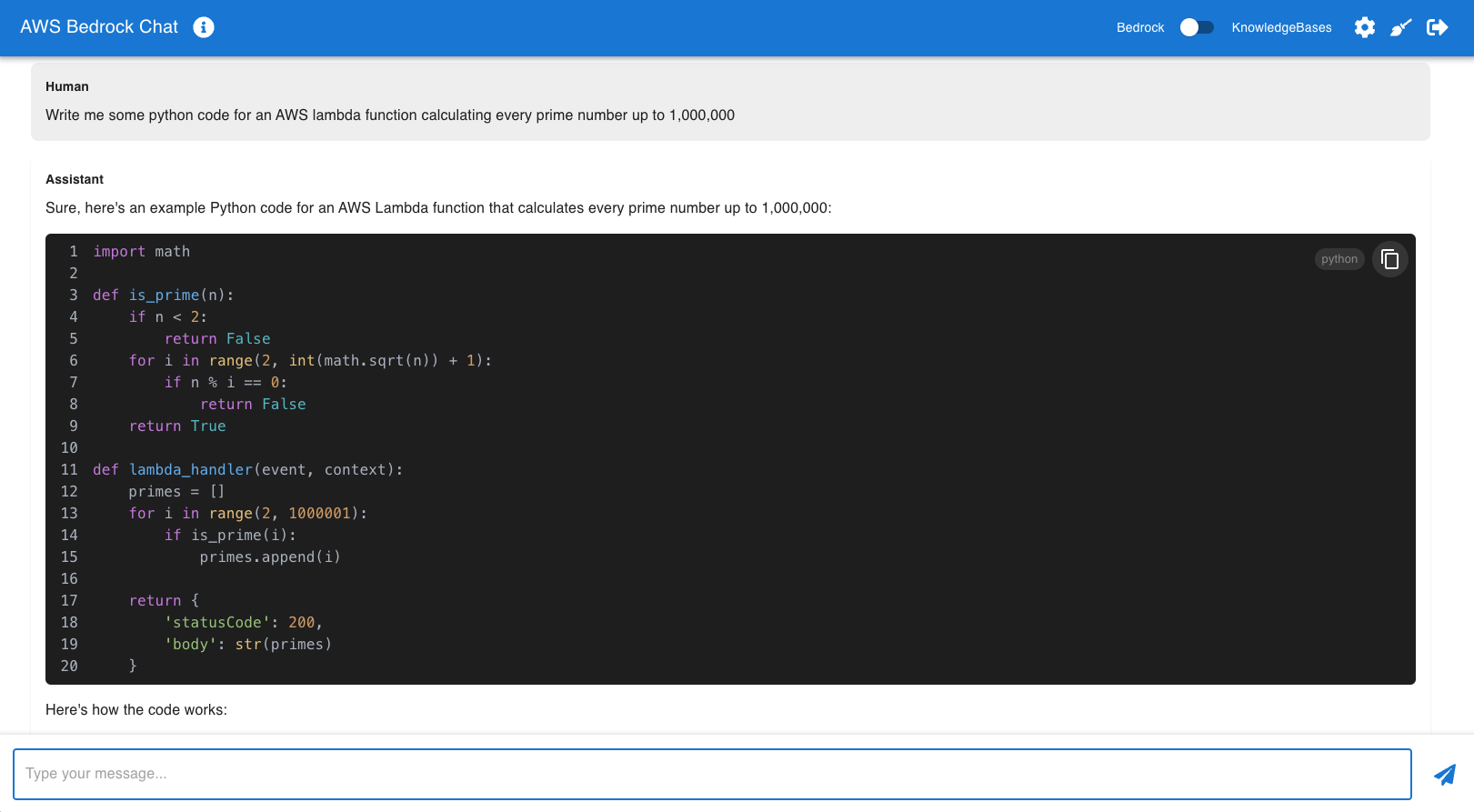

You can ask the chatbot to write code for you. Here we are using Anthropic Claude 3 Sonnet to show us Python code.


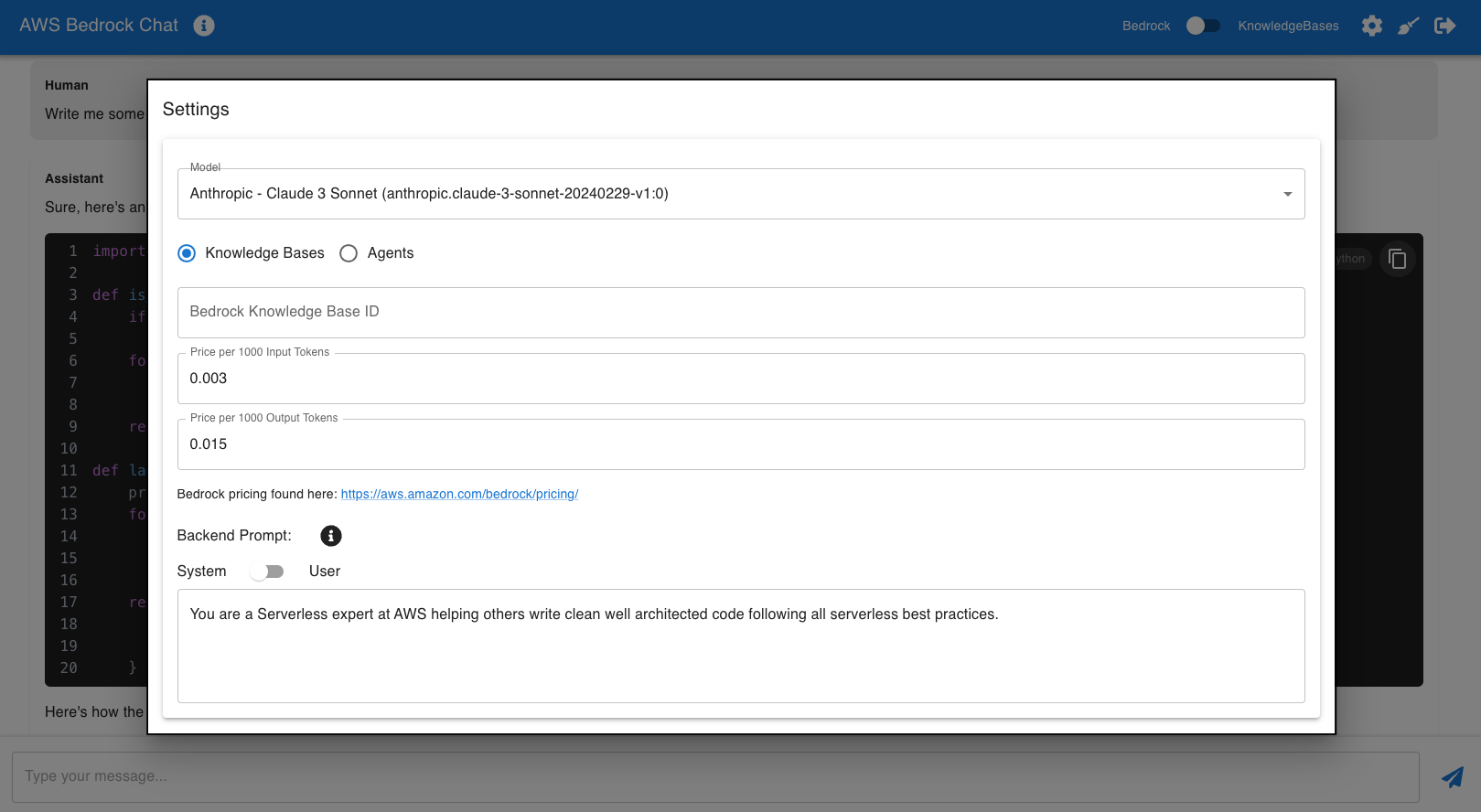

Settings can be changed at the user level and at the global level (global settings apply when multiple users share the app). You can configure a Bedrock Knowledge Base ID to enable RAG, and an Agent ID and Agent Alias ID if you would like to use this chatbot with a Bedrock Agent. You can set a system prompt at the user or system level so that all interactions share a standard context. You can also set custom pricing per 1,000 input/output tokens; if you do not save a value, the app uses the price stored in the code, which is taken from the AWS Bedrock pricing page. Finally, you can select your model.
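One way to picture the fallback described above is a lookup that tries each settings layer in turn. This is a sketch under stated assumptions: the order (user first, then global, then the value stored in the code), the key names, and the default values are all hypothetical and are not taken from the repository or from the AWS pricing page.

```python
# Hypothetical built-in defaults, standing in for "the price stored in the code".
# The numbers are placeholders, not authoritative pricing.
CODE_DEFAULTS = {
    "input_price_per_1k": 0.003,
    "output_price_per_1k": 0.015,
    "system_prompt": "",
}


def resolve_setting(key, user_settings, global_settings, defaults=CODE_DEFAULTS):
    """Return the first saved value for key: user, then global, then code default."""
    for source in (user_settings, global_settings):
        value = source.get(key)
        if value is not None:
            return value
    return defaults[key]
```

With no saved price at either level, `resolve_setting("input_price_per_1k", {}, {})` falls through to the code default, which mirrors the behaviour described above.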


This is an example of interacting with Bedrock Knowledge Bases, enabling RAG with Anthropic Claude 3 Sonnet.
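For readers who want to see what a Knowledge Base query looks like at the API level, the sketch below builds the request for the `retrieve_and_generate` operation of the boto3 `bedrock-agent-runtime` client. Whether this application uses that exact call is an assumption; the helper name `build_rag_request` is hypothetical, and the IDs in the usage comment are placeholders.

```python
def build_rag_request(question, knowledge_base_id, model_arn):
    """Build keyword arguments for bedrock-agent-runtime retrieve_and_generate."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


# Usage with boto3 (requires AWS credentials and a real Knowledge Base ID):
#
#   import boto3
#   client = boto3.client("bedrock-agent-runtime", region_name="us-east-1")
#   response = client.retrieve_and_generate(
#       **build_rag_request("question text", "YOUR_KB_ID", "YOUR_MODEL_ARN")
#   )
#   print(response["output"]["text"])
```

Keeping the request construction separate from the network call makes it easy to inspect or unit test the payload without touching AWS.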


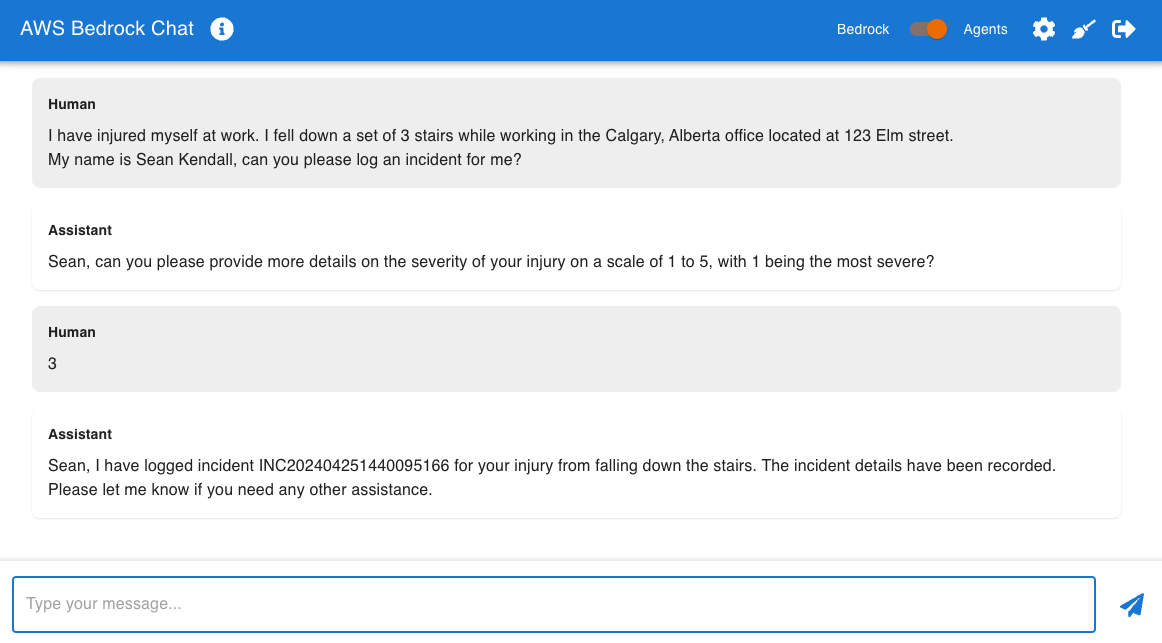

This is an example of interacting with Bedrock Agents, where we can ask questions about the data behind our Knowledge Base or have the chatbot interact with APIs in the backend. This example shows how Bedrock Agents can use an AWS Lambda function to log an incident into a DynamoDB table. The sample code for this demo is also included in this repository if you wish to test by manually creating the Knowledge Base and Agent. If you need help with this, feel free to reach out to me at Seandall@Amazon.com.
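The incident-logging flow can be sketched as a Lambda function behind an API-schema action group. This is an illustration only and is not the sample code shipped in the repository: the function name `log_incident`, the `incident_id` attribute, and passing the table in as a parameter are assumptions. The event and response shapes follow the Bedrock Agents Lambda contract for API-schema action groups as I understand it.

```python
import json
import uuid


def log_incident(event, table):
    """Handle a Bedrock Agent action-group call and store one incident.

    `table` is any object with a DynamoDB-style put_item(Item=...) method,
    for example boto3.resource("dynamodb").Table("your-table-name").
    """
    # API-schema action groups pass request body fields as name/value pairs.
    properties = (
        event.get("requestBody", {})
        .get("content", {})
        .get("application/json", {})
        .get("properties", [])
    )
    fields = {prop["name"]: prop["value"] for prop in properties}

    # Hypothetical key name; a real table defines its own primary key.
    item = {"incident_id": str(uuid.uuid4()), **fields}
    table.put_item(Item=item)

    return {
        "messageVersion": "1.0",
        "response": {
            "actionGroup": event.get("actionGroup"),
            "apiPath": event.get("apiPath"),
            "httpMethod": event.get("httpMethod"),
            "httpStatusCode": 200,
            "responseBody": {
                "application/json": {
                    "body": json.dumps({"incident_id": item["incident_id"]})
                }
            },
        },
    }
```

Injecting the table makes the function testable with a stub object; in Lambda, a thin wrapper would create the boto3 table once and pass it in.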


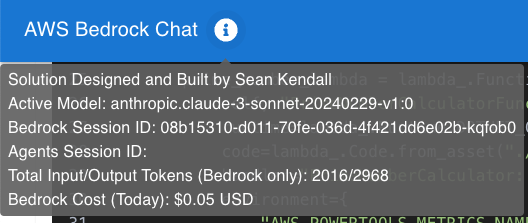

If you hover over the info icon in the header, you can see additional details such as the current cost, session IDs, and total input/output tokens. Because Bedrock currently supports token metrics only for the Bedrock Runtime, the tokens and cost shown here cover only direct communication with an LLM. If you are using Knowledge Bases or Agents, their usage is not reflected in these values.
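The cost shown in the header follows from the token counts and the per-1,000-token prices. A minimal sketch of that arithmetic, assuming a simple linear price per 1,000 tokens (the function name and the example prices are hypothetical):

```python
def session_cost(input_tokens, output_tokens, input_price_per_1k, output_price_per_1k):
    """Return the cost of a session given token totals and per-1,000-token prices."""
    input_cost = (input_tokens / 1000) * input_price_per_1k
    output_cost = (output_tokens / 1000) * output_price_per_1k
    return input_cost + output_cost


# Example with placeholder prices: 2,000 input tokens and 1,000 output tokens.
example = session_cost(2000, 1000, input_price_per_1k=0.003, output_price_per_1k=0.015)
print(f"{example:.4f}")
```

Only tokens reported by the Bedrock Runtime would feed this calculation, which is why Knowledge Base and Agent usage does not appear in the total.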

Before deploying the application, ensure you have the following installed:

- Python 3 (version 3.7 or later)

- pip (Python package installer)

- Git (if you are new to Git, a quick interactive tutorial is a good starting point)

- jq

- AWS CLI (Configured with your AWS credentials)

- AWS CDK (version 2.x)

- Node.js (version 12.x or later)
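Before running the setup script, it can help to confirm that the tools listed above are on your PATH. The sketch below uses only the Python standard library. The executable names (`aws`, `cdk`, `node`, `npm`) are the usual ones for these tools but are assumptions about your installation, and pip is left out because it may be installed as `pip`, `pip3`, or only as `python -m pip`.

```python
import shutil
import sys

# Usual executable names for the prerequisites listed above (assumed).
REQUIRED_TOOLS = ["git", "jq", "aws", "cdk", "node", "npm"]


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools from the list that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


def python_ok(version_info=sys.version_info):
    """Check that the running interpreter is Python 3.7 or later."""
    return tuple(version_info[:2]) >= (3, 7)


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    print("Missing tools:", missing_tools() or "none")
```

This only checks that the executables exist; it does not verify their versions or that the AWS CLI has been configured with credentials.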

On Windows:

- Download the latest Python installer from the official Python website (https://www.python.org/downloads/).

- Run the installer and make sure to select the option to add Python to the system PATH.

- Open the Command Prompt and run the following command to install pip:

python -m ensurepip --default-pip

On macOS:

- Open the Terminal and run the following command to install Python and pip:

brew install python3

- If you don't have Homebrew installed, you can install it by following the instructions at https://brew.sh/.

Follow the instructions in the AWS CLI documentation to install the AWS CLI for your operating system.

After installing the AWS CLI, run the following command to configure it with your AWS credentials:

aws configure

This command will prompt you to enter your AWS Access Key ID, AWS Secret Access Key, AWS Region, and default output format. You can find your AWS credentials in the AWS Management Console under the "My Security Credentials" section.

Run the following command to install the AWS CDK:

npm install -g aws-cdk

To deploy the application on your local machine, follow these steps:

- Clone the repository:

git clone https://github.com/seandkendall/genai-bedrock-chatbot --depth 1

cd genai-bedrock-chatbot

- Deploy the application stack by running the two commands below. setup.sh is a one-time command that makes sure the correct packages are set up; deploy.sh can be rerun after each change you make to the chatbot.

./setup.sh

./deploy.sh

The deployment process will create all the necessary resources in your AWS account.

To deploy the application on a Windows machine, follow these steps:

- Install the AWS CLI and Node.js for Windows.

- Open the Command Prompt or PowerShell and follow the "Getting Started" section above.

To deploy the application on a Mac, follow these steps:

- Install the AWS CLI and Node.js for macOS.

- Open the Terminal and follow the "Getting Started" section above.

This project relies on Git for version control. If you're new to Git, we recommend going through the Try Git interactive tutorial to get familiar with the basic Git commands and workflow.


Contributions are welcome! Please feel free to submit a Pull Request or open an issue for any bugs, feature requests, or improvements.

If you simply want to report a bug, email me at Seandall@Amazon.com.