A PyTorch implementation of Tacotron: A Fully End-to-End Text-To-Speech Synthesis Model.

- Install Python 3

- Install PyTorch == 0.2.0

- Install the requirements: `pip install -r requirements.txt`

I used the LJSpeech dataset, which consists of pairs of text transcripts and wav files. The complete dataset (13,100 pairs) can be downloaded here. I referred to https://github.com/keithito/tacotron for the preprocessing code.
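To make the "pairs of text and wav files" concrete, here is a minimal sketch of reading such pairs. It assumes the usual LJSpeech layout: a pipe-delimited `metadata.csv` whose fields are clip id, transcript, and normalized transcript, with audio stored as `wavs/<id>.wav`. The function name `read_ljspeech_metadata` and the sample rows are hypothetical and are not taken from this repository's `data.py`.

```python
import csv
import io
from pathlib import Path


def read_ljspeech_metadata(lines, wav_dir):
    """Yield (wav_path, text) pairs from LJSpeech-style metadata lines.

    Each line is assumed to look like: id|transcript|normalized transcript
    """
    # QUOTE_NONE because transcripts may contain literal quote characters.
    reader = csv.reader(lines, delimiter="|", quoting=csv.QUOTE_NONE)
    for row in reader:
        if len(row) < 2:
            continue  # skip blank or malformed lines
        clip_id = row[0]
        # Prefer the normalized transcript (third field) when it is present.
        text = row[2] if len(row) > 2 and row[2] else row[1]
        yield Path(wav_dir) / f"{clip_id}.wav", text


# Made-up rows for illustration only; real ids and text come from metadata.csv.
sample = io.StringIO(
    "CLIP-0001|Hello world.|Hello world.\n"
    "CLIP-0002|It costs $5.|It costs five dollars.\n"
)
pairs = list(read_ljspeech_metadata(sample, "LJSpeech-1.1/wavs"))
for wav_path, text in pairs:
    print(wav_path, "->", text)
```

With a real download, the same function could be fed an open file handle for `metadata.csv` instead of the in-memory sample.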

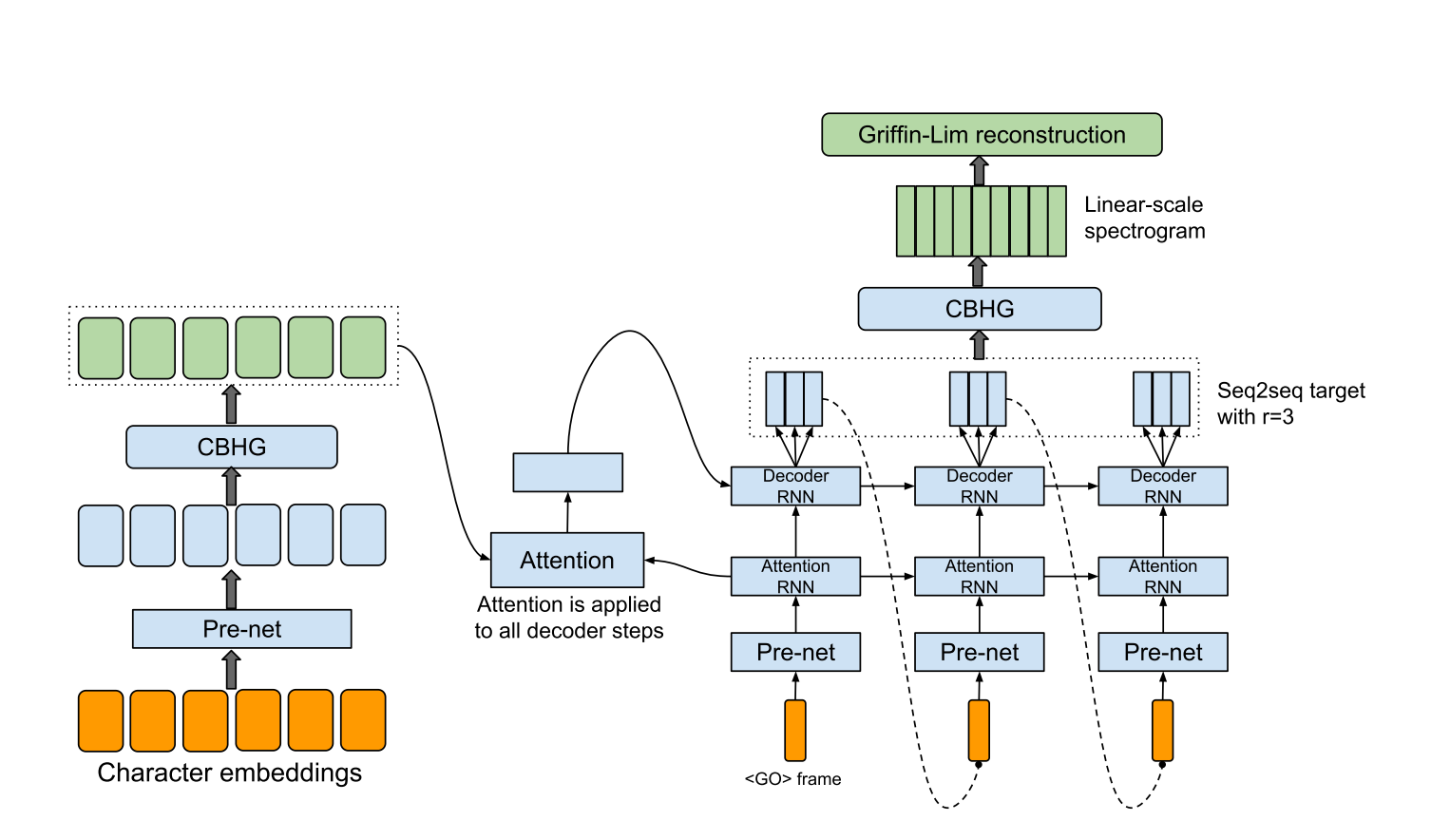

- `hyperparams.py` includes all the hyperparameters that are needed.

- `data.py` loads the training data and preprocesses text to indices and wav files to spectrograms. The preprocessing code for text is in the `text/` directory.

- `module.py` contains all methods, including CBHG, highway, prenet, and so on.

- `network.py` contains the networks, including the encoder, decoder, and post-processing network.

- `train.py` is for training.

- `synthesis.py` is for generating TTS samples.
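As an illustration of the text-to-index preprocessing described above, the following is a minimal character-level sketch. The symbol table, the padding and end-of-sequence symbols, and the function names are all assumptions made for this example; the actual code in `text/` may normalize text and define its symbols differently.

```python
# Hypothetical symbol table: padding first, end-of-sequence second, then characters.
PAD, EOS = "_", "~"
SYMBOLS = [PAD, EOS] + list("abcdefghijklmnopqrstuvwxyz !',.?-")
SYMBOL_TO_ID = {symbol: index for index, symbol in enumerate(SYMBOLS)}


def text_to_sequence(text):
    """Map text to a list of integer ids, dropping characters not in the table."""
    ids = [
        SYMBOL_TO_ID[ch]
        for ch in text.lower()
        if ch in SYMBOL_TO_ID and ch not in (PAD, EOS)
    ]
    ids.append(SYMBOL_TO_ID[EOS])  # mark the end of the utterance
    return ids


def pad_batch(sequences):
    """Right-pad every sequence with the PAD id to the longest length in the batch."""
    max_len = max(len(seq) for seq in sequences)
    pad_id = SYMBOL_TO_ID[PAD]
    return [seq + [pad_id] * (max_len - len(seq)) for seq in sequences]


batch = pad_batch([text_to_sequence("Hi!"), text_to_sequence("Hello, world.")])
print(batch)
```

Reserving index 0 for padding is a common choice because a padded batch can then be masked by comparing against zero.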

- STEP 1. Download and extract the LJSpeech data to any directory you want.

- STEP 2. Adjust the hyperparameters in `hyperparams.py`, especially 'data_path', which is the directory where you extracted the files, and the others if necessary.

- STEP 3. Run `train.py`.
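Before starting a long training run, it can help to confirm that 'data_path' points at a correctly extracted dataset. The sketch below is an optional sanity check, not part of this repository. It assumes the standard LJSpeech layout (`metadata.csv` plus a `wavs/` directory), and the helper name `check_data_path` is hypothetical.

```python
import tempfile
from pathlib import Path


def check_data_path(data_path):
    """Return a list of problems found in an LJSpeech-style directory."""
    root = Path(data_path)
    if not root.is_dir():
        return [f"{root} is not a directory"]
    problems = []
    if not (root / "metadata.csv").is_file():
        problems.append("metadata.csv is missing")
    wav_dir = root / "wavs"
    if not wav_dir.is_dir():
        problems.append("wavs/ directory is missing")
    elif not any(wav_dir.glob("*.wav")):
        problems.append("wavs/ contains no .wav files")
    return problems


# Demonstration on a temporary directory instead of a real download.
with tempfile.TemporaryDirectory() as tmp:
    empty_report = check_data_path(tmp)
    (Path(tmp) / "metadata.csv").write_text("CLIP-0001|Hello.|Hello.\n")
    (Path(tmp) / "wavs").mkdir()
    (Path(tmp) / "wavs" / "CLIP-0001.wav").write_bytes(b"")
    ok_report = check_data_path(tmp)

print(empty_report)
print(ok_report)
```

An empty list means the directory looks usable; otherwise each entry names what is missing.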

- STEP 1. Run `synthesis.py`. Make sure the restore step matches the checkpoint you want to load.

- You can check the generated samples in the 'samples/' directory. The model was trained for only 60K steps, so the quality is not good yet.
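If you are unsure which restore step to use, one option is to list the steps for which a checkpoint file exists. The sketch below assumes checkpoints are saved with names like `checkpoint_<step>.pth.tar`. That naming pattern and the helper `steps_from_names` are assumptions made for illustration, so adjust the pattern to whatever `train.py` actually writes.

```python
import re

# Assumed naming scheme; change it to match the files written during training.
CHECKPOINT_PATTERN = re.compile(r"checkpoint_(\d+)\.pth\.tar")


def steps_from_names(file_names):
    """Return the sorted training steps encoded in checkpoint file names."""
    steps = []
    for name in file_names:
        match = CHECKPOINT_PATTERN.fullmatch(name)
        if match:
            steps.append(int(match.group(1)))
    return sorted(steps)


# Example with made-up file names; in practice pass os.listdir(checkpoint_dir).
names = ["checkpoint_60000.pth.tar", "notes.txt", "checkpoint_2000.pth.tar"]
steps = steps_from_names(names)
latest_step = steps[-1] if steps else None
print(steps, latest_step)
```

The largest step is usually the one to restore, unless an earlier checkpoint produced better samples.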

- Keith Ito: https://github.com/keithito/tacotron

- Any comments on the code are always welcome.