Through six years of research, the DevOps Research and Assessment (DORA) team has identified four key metrics that indicate the performance of a software development team. This project collects your data and compiles it into a dashboard that displays these key metrics:

- Deployment Frequency

- Lead Time for Changes

- Time to Restore Services

- Change Failure Rate

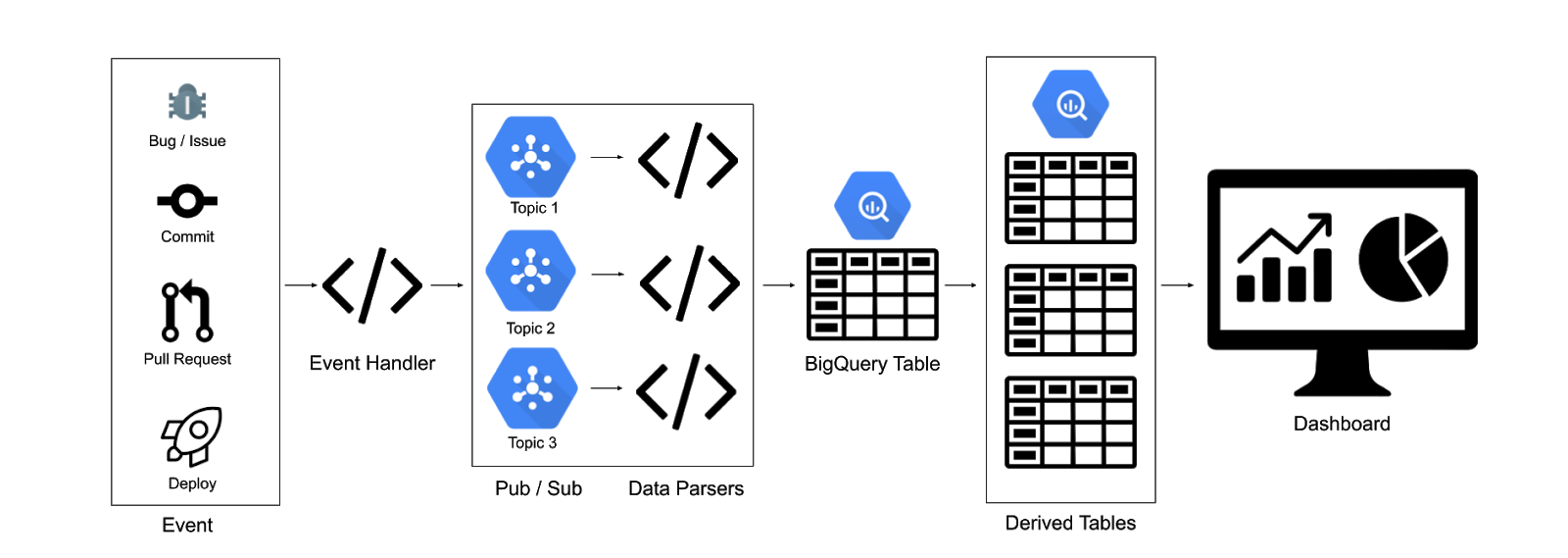

The pipeline works as follows:

- Events are sent to a webhook target hosted on Cloud Run

- The Cloud Run target publishes all events to Pub/Sub

- A Cloud Run worker subscribes to the topics, performs some light data transformation, and inserts the data into BigQuery

- Nightly scripts are scheduled in BigQuery to complete the data transformations and feed into the dashboard
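To make the worker step concrete, here is a minimal Python sketch of what a GitHub worker might do with a Pub/Sub push request: decode the base64 message data and reshape it into a row with the events_raw columns (source, event_type, id, metadata, time_created, signature, msg_id). It assumes, hypothetically, that the event handler publishes the raw webhook body as the message data and forwards the GitHub request headers (`X-GitHub-Event`, `X-GitHub-Delivery`, `X-Hub-Signature`) as message attributes. The function name `parse_push_envelope` and the subscription path are illustrative placeholders, not the project's actual worker code.

```python
import base64
import json
from typing import Any, Dict


def parse_push_envelope(envelope: Dict[str, Any]) -> Dict[str, Any]:
    """Reshape a Pub/Sub push envelope into an events_raw-style row.

    Hypothetical assumption: the event handler published the raw GitHub
    webhook body as the message data and the request headers as attributes.
    """
    message = envelope["message"]
    attributes = message.get("attributes", {})
    # Pub/Sub push delivers the message payload base64-encoded.
    body = base64.b64decode(message["data"]).decode("utf-8")
    payload = json.loads(body)

    event_type = attributes.get("X-GitHub-Event", "unknown")
    if event_type == "push" and payload.get("head_commit"):
        # Key push events on the head commit (a "change").
        event_id = payload["head_commit"]["id"]
        time_created = payload["head_commit"]["timestamp"]
    else:
        # Otherwise fall back to GitHub's delivery ID and the publish time.
        event_id = attributes.get("X-GitHub-Delivery", message["messageId"])
        time_created = message.get("publishTime")

    return {
        "source": "github",
        "event_type": event_type,
        "id": event_id,
        "metadata": body,  # full event body, stored as JSON text
        "time_created": time_created,
        "signature": attributes.get("X-Hub-Signature", ""),
        "msg_id": message["messageId"],
    }


if __name__ == "__main__":
    payload = {"head_commit": {"id": "abc123", "timestamp": "2020-06-01T12:00:00Z"}}
    envelope = {
        "message": {
            "data": base64.b64encode(json.dumps(payload).encode()).decode(),
            "attributes": {"X-GitHub-Event": "push", "X-Hub-Signature": "sha1=0f0f"},
            "messageId": "42",
        },
        "subscription": "projects/my-project/subscriptions/github-worker",
    }
    print(parse_push_envelope(envelope)["id"])  # abc123
```

Keeping the full body in `metadata` means later transformations (the nightly scripts) can extract fields the worker did not anticipate.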

The repository is organized as follows:

- `bq_workers/`
  - Contains the code for the individual BigQuery workers. Each data source has its own worker service with the logic for parsing the data from the Pub/Sub message. For example, GitHub has its own worker, which only looks at events pushed to the Github-Hookshot Pub/Sub topic.
- `data_generator/`
  - Contains a Python script for generating mock GitHub data.
- `event_handler/`
  - Contains the code for the event handler, the public service that accepts incoming webhooks.
- `queries/`
  - Contains the SQL queries for creating the derived tables.
  - Contains a Python script for scheduling the queries.
- `setup/`
  - Contains the code for setting up and tearing down the fourkeys pipeline, as well as a script for extending the data sources.
- `shared/`
  - Contains a shared module for inserting data into BigQuery, which is used by the `bq_workers`.
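The job of the `shared/` module, inserting rows into BigQuery on behalf of the workers, can be sketched as below. This is a minimal illustration, not the module's actual code: `insert_event` and `FakeClient` are hypothetical names, and the sketch assumes the google-cloud-bigquery client's `insert_rows_json` method, passing each row's `signature` as the row ID so that BigQuery's best-effort streaming deduplication can drop retried duplicates. The client is passed in, so a stand-in object can be used for local testing.

```python
from typing import Any, Dict, List, Optional


def insert_event(client: Any, table_id: str, row: Dict[str, Any]) -> None:
    """Stream one events_raw row into BigQuery, raising on row-level errors.

    `client` is expected to be a google.cloud.bigquery.Client, or any object
    with a compatible insert_rows_json(table, json_rows, row_ids=...) method.
    """
    # Use the signature (the table's unique key) as the insert ID so that
    # retried deliveries of the same event are deduplicated on a best-effort basis.
    errors = client.insert_rows_json(table_id, [row], row_ids=[row["signature"]])
    if errors:
        raise RuntimeError(f"BigQuery insert into {table_id} failed: {errors}")


class FakeClient:
    """Local stand-in (hypothetical) that records calls instead of contacting BigQuery."""

    def __init__(self, errors: Optional[List[Dict[str, Any]]] = None) -> None:
        self.errors = errors or []
        self.calls: List[tuple] = []

    def insert_rows_json(self, table, json_rows, row_ids=None):
        self.calls.append((table, json_rows, row_ids))
        return self.errors


if __name__ == "__main__":
    # Against real BigQuery this would be, e.g.:
    #   from google.cloud import bigquery
    #   insert_event(bigquery.Client(), "my-project.four_keys.events_raw", row)
    fake = FakeClient()
    insert_event(fake, "my-project.four_keys.events_raw", {"signature": "sig-1", "id": "abc"})
    print(fake.calls[0][2])  # ['sig-1']
```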

The project currently uses python3 and supports data extraction for Cloud Build and GitHub events.

To use this project:

- Fork this project
- Run the automation scripts, which will do the following (see INSTALL.md for more details):
  - Set up a new Google Cloud project
  - Create and deploy the Cloud Run webhook target and ETL workers
  - Create the Pub/Sub topics and subscriptions
  - Enable Google Secret Manager and create a secret for your GitHub repo
  - Create a BigQuery dataset and tables, and schedule the nightly scripts
  - Open a browser tab to connect your data to a Data Studio dashboard template
- Set up your development environment to send events to the webhook created by the automation scripts
- Add the secret to your GitHub webhook
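GitHub uses the webhook secret to sign each delivery: it computes an HMAC of the raw request body and sends it in a signature header (`X-Hub-Signature` with SHA-1, and the recommended `X-Hub-Signature-256` with SHA-256). A minimal Python sketch of how a receiver can check that signature follows. It assumes the `X-Hub-Signature-256` format (`sha256=` followed by a hex digest); which header and hash this project's event handler actually checks is not stated here, and the function names are illustrative.

```python
import hashlib
import hmac


def github_signature(secret: str, body: bytes) -> str:
    """Compute the value GitHub sends in X-Hub-Signature-256 for this body."""
    digest = hmac.new(secret.encode("utf-8"), body, hashlib.sha256).hexdigest()
    return "sha256=" + digest


def verify_github_signature(secret: str, body: bytes, header_value: str) -> bool:
    """Return True if header_value matches the HMAC of the raw request body."""
    expected = github_signature(secret, body)
    # compare_digest runs in constant time, so the check does not leak how many
    # leading characters of a forged signature were correct.
    return hmac.compare_digest(expected.encode("utf-8"), (header_value or "").encode("utf-8"))


if __name__ == "__main__":
    body = b'{"zen": "Keep it logically awesome."}'
    header = github_signature("my-webhook-secret", body)
    print(verify_github_signature("my-webhook-secret", body, header))  # True
    print(verify_github_signature("wrong-secret", body, header))       # False
```

The signature must be computed over the exact bytes received; re-serializing parsed JSON can change whitespace or key order and make valid requests fail verification.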

The setup script includes an option to generate mock data. The data generator creates mock GitHub events, which are ingested into the events_raw table with the source “githubmock”. It creates the following events:

- 5 mock commits with timestamps no earlier than a week ago
  - Note: The number of commits can be adjusted.
- 1 associated deployment
- Associated mock incidents
  - Note: By default, less than 15% of deployments create a mock incident. The threshold can be adjusted in the script.
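The shape of the mock data can be sketched in Python as follows. This is not the code in `data_generator/data.py`: `make_mock_events` and its parameters are hypothetical, the commit IDs are random 40-character hex strings standing in for SHAs, and the default `incident_rate` of 0.15 is an assumed reading of the 15% threshold (each deployment gets an incident with that probability).

```python
import random
from datetime import datetime, timedelta, timezone
from typing import Any, Dict, Optional


def make_mock_events(
    num_commits: int = 5,
    incident_rate: float = 0.15,
    now: Optional[datetime] = None,
    rng: Optional[random.Random] = None,
) -> Dict[str, Any]:
    """Build mock commits, one deployment that ships them, and maybe an incident."""
    now = now or datetime.now(timezone.utc)
    rng = rng or random.Random()
    week_ago = now - timedelta(days=7)

    # Commits: random timestamps no earlier than a week ago.
    commits = [
        {
            "id": f"{rng.getrandbits(160):040x}",  # SHA-like 40-char hex ID
            "time_created": week_ago + timedelta(seconds=rng.uniform(0, 7 * 24 * 3600)),
        }
        for _ in range(num_commits)
    ]

    # One deployment, after the newest commit but not in the future.
    newest = max(c["time_created"] for c in commits)
    deployment = {
        "id": f"{rng.getrandbits(64):016x}",
        "changes": [c["id"] for c in commits],
        "time_created": newest + (now - newest) * rng.random(),
    }

    # Incident: created with probability incident_rate, resolved 1 to 24 hours later.
    incidents = []
    if rng.random() < incident_rate:
        opened = deployment["time_created"]
        incidents.append(
            {
                "id": f"{rng.getrandbits(32):08x}",
                "changes": [deployment["id"]],
                "time_created": opened,
                "time_resolved": opened + timedelta(hours=rng.uniform(1, 24)),
            }
        )

    return {"commits": commits, "deployment": deployment, "incidents": incidents}


if __name__ == "__main__":
    events = make_mock_events(rng=random.Random(7))
    print(len(events["commits"]), len(events["incidents"]))
```

Passing a seeded `random.Random` makes a run reproducible, which is handy when checking the pipeline end to end.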

To run the data generator outside the setup script, make sure your webhook URL and GitHub secret are saved in environment variables:

export WEBHOOK={your event handler URL}

export GITHUB_SECRET={your github signing secret}

Then run the following command:

python3 data_generator/data.py

You will see events being run through the pipeline:

- The event handler logs will show successful requests

- The Pub/Sub topic will show messages posted

- The BigQuery GitHub parser will show successful requests

- You will be able to query the events_raw table directly in BigQuery:

SELECT * FROM four_keys.events_raw WHERE source = 'githubmock';

Currently, the scripts classify some events as “changes”, “deploys”, and “incidents.” If you want to reclassify one of the events in the table (for example, you use a label other than “incident” for your incidents), no changes are required to the architecture or code of the project. Simply update the nightly scripts in BigQuery for the following tables:

- four_keys.changes

- four_keys.deployments

- four_keys.incidents

To update the scripts, we recommend that you edit the SQL files in the `queries` folder rather than in the BigQuery UI. Once you've edited the SQL, run the `schedule.py` script to update the scheduled query that populates the table. For example, to update the four_keys.changes table, you'd run:

python3 schedule.py --query_file=changes.sql --table=changes

Notes:

- The query_file flag should contain the relative path of the file.
- To feed into the dashboard, the table name should be one of `changes`, `deployments`, or `incidents`.
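The actual classification lives in the SQL files in `queries/`. Purely to illustrate why reclassifying is a rules change rather than an architecture change, here is a Python sketch of classification rules over events_raw rows. The rules themselves (push events as changes, successful `deployment_status` events as deployments, issues carrying a configurable label as incidents) are assumptions, as is the function name `classify`; only the GitHub payload fields used (`deployment_status.state`, `issue.labels[].name`) come from GitHub's webhook format.

```python
import json
from typing import Any, Dict, Optional


def classify(row: Dict[str, Any], incident_label: str = "incident") -> Optional[str]:
    """Map one events_raw row to "change", "deployment", "incident", or None.

    Hypothetical rules for illustration; the project's real rules are in SQL.
    """
    payload = json.loads(row["metadata"])
    event_type = row["event_type"]

    if event_type == "push":
        return "change"
    if event_type == "deployment_status":
        state = payload.get("deployment_status", {}).get("state")
        return "deployment" if state == "success" else None
    if event_type == "issues":
        labels = {label.get("name") for label in payload.get("issue", {}).get("labels", [])}
        # Reclassifying incidents is just a matter of changing this label.
        return "incident" if incident_label in labels else None
    return None


if __name__ == "__main__":
    row = {"event_type": "issues", "metadata": json.dumps({"issue": {"labels": [{"name": "outage"}]}})}
    print(classify(row))                           # None
    print(classify(row, incident_label="outage"))  # incident
```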

To add a new data source:

- Add the source to `AUTHORIZED_SOURCES` in `sources.py`
  - If you want to create a verification function, add the function to the file as well
- Run the `new_source.sh` script in the `setup` directory. This script will create a Pub/Sub topic, a Pub/Sub subscription, and the new service using the `new_source_template`
- Update the `main.py` in the new service to parse the data properly
- Update the BigQuery script to classify the data properly
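Registering a source together with a verification function might look roughly like this. It is a hypothetical sketch, not the contents of `sources.py`: the `EventSource` structure, the `authenticate` helper, the source name `mysource`, and its `X-MySource-Token` header are all made up for illustration, and the verification shown is a plain shared-token comparison rather than the HMAC scheme GitHub uses.

```python
import hmac
from dataclasses import dataclass
from typing import Callable, Dict, Mapping, Optional

# (secret, raw request body, header value) -> is the request authentic?
Verifier = Callable[[str, bytes, str], bool]


@dataclass(frozen=True)
class EventSource:
    signature_header: str  # header that identifies (and authenticates) the source
    verify: Verifier


def token_verification(secret: str, body: bytes, header_value: str) -> bool:
    """Hypothetical scheme: the sender puts the shared secret in a header."""
    return hmac.compare_digest(header_value.encode("utf-8"), secret.encode("utf-8"))


AUTHORIZED_SOURCES: Dict[str, EventSource] = {
    "mysource": EventSource("X-MySource-Token", token_verification),
}


def authenticate(headers: Mapping[str, str], body: bytes, secrets: Mapping[str, str]) -> Optional[str]:
    """Return the name of the authorized source that sent the request, or None.

    Note: a plain dict lookup is case-sensitive; web frameworks usually expose
    case-insensitive header mappings.
    """
    for name, source in AUTHORIZED_SOURCES.items():
        value = headers.get(source.signature_header)
        if value is not None and name in secrets and source.verify(secrets[name], body, value):
            return name
    return None


if __name__ == "__main__":
    secrets = {"mysource": "s3cret"}
    print(authenticate({"X-MySource-Token": "s3cret"}, b"{}", secrets))  # mysource
    print(authenticate({"X-MySource-Token": "guess"}, b"{}", secrets))   # None
```

Keeping the header name and verifier together in one registry entry means the handler can reject unknown or unauthenticated senders before anything is published to Pub/Sub.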

If you add a common data source, please submit a pull request so that others may benefit from the functionality.

This project uses nox to manage tests. Ensure that nox is installed:

pip install nox

The noxfile defines which tests run on the project. Currently, it is set up to run all the pytest files in all the directories, as well as run a linter on all directories. To run nox:

python3 -m nox

To list all the test sessions in the noxfile:

python3 -m nox -l

Once you have the list of test sessions, you can run a specific session with:

python3 -m nox -s "{name_of_session}"

The "name_of_session" will be something like "py-3.6(folder='.....')".

The four_keys.events_raw table has the following schema:

| Field Name | Type | Notes |
|---|---|---|
| source | STRING | e.g., github |
| event_type | STRING | e.g., push |
| 🔑id | STRING | ID of the development object, e.g., bug ID, commit ID, PR ID |
| metadata | JSON | Body of the event |
| time_created | TIMESTAMP | The time the event was created |
| signature | STRING | Encrypted signature key from the event. This will be the unique key for the table. |
| msg_id | STRING | Message ID from Pub/Sub |

This table is used to create the following three derived tables.

Deployments (four_keys.deployments)

Note: Changes and deployments have a many-to-one relationship: one deployment can contain many changes. The table only contains successful deployments.

| Field Name | Type | Notes |
|---|---|---|
| 🔑deploy_id | string | ID of the deployment, foreign key to id in events_raw |
| changes | array of strings | List of IDs associated with the deployment, e.g., commit IDs, bug IDs |
| time_created | timestamp | Time the deployment was completed |

Changes (four_keys.changes)

| Field Name | Type | Notes |
|---|---|---|
| 🔑change_id | string | ID of the change, foreign key to id in events_raw |
| time_created | timestamp | time_created from events_raw |
| change_type | string | The event type |

Incidents (four_keys.incidents)

| Field Name | Type | Notes |
|---|---|---|
| 🔑incident_id | string | ID of the failure incident |
| changes | array of strings | List of deployment IDs that caused the failure |
| time_created | timestamp | Min timestamp from changes |
| time_resolved | timestamp | Time the incident was resolved |

The dashboard displays all four metrics with daily systems data, as well as a current snapshot of the last 90 days.

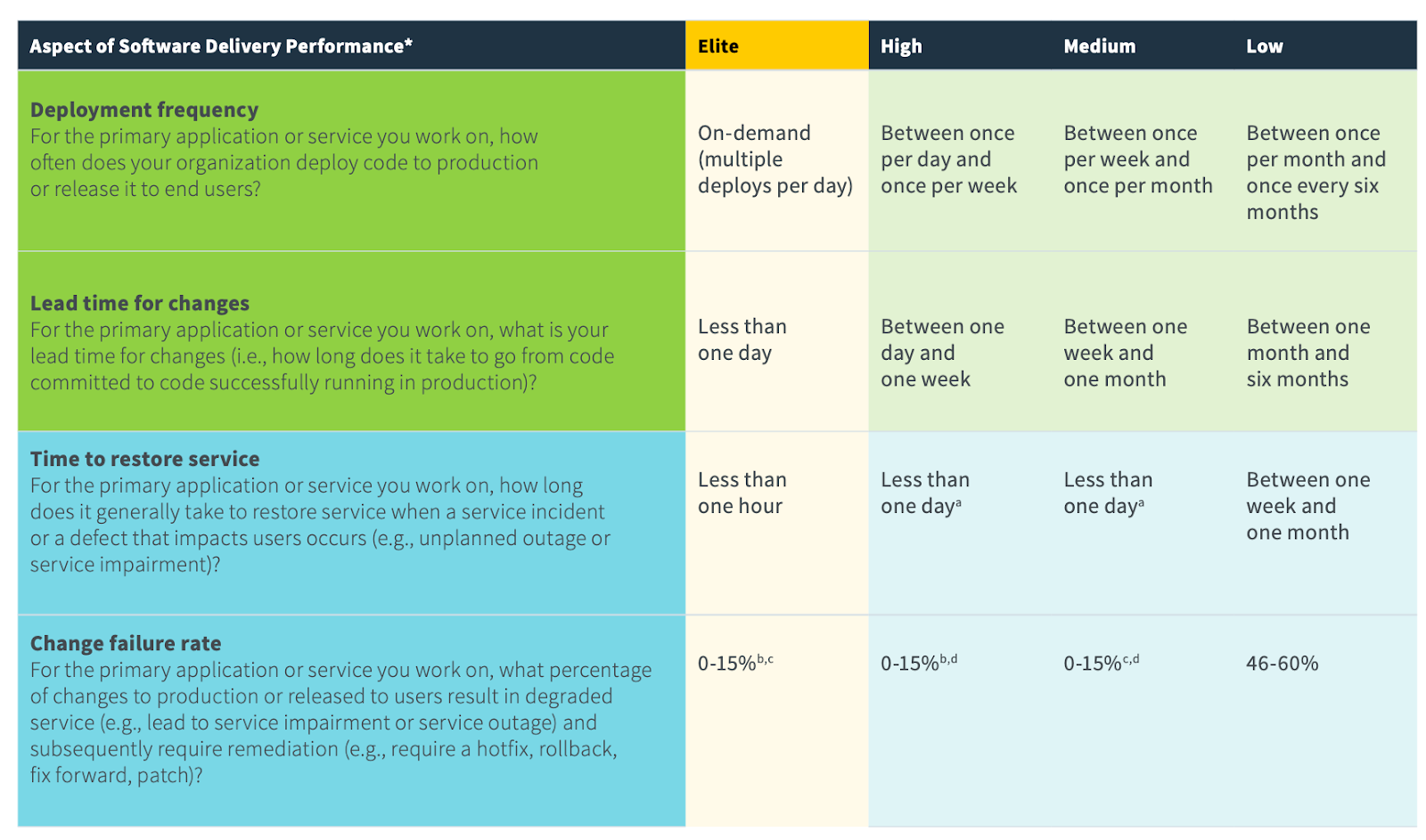

To understand the metrics and intent of the dashboard, please see the 2019 State of DevOps Report.

Deployment Frequency

- The number of deployments per time period: daily, weekly, monthly, yearly.

Lead Time for Changes

- The median amount of time for a commit to be deployed into production.

Time to Restore Services

- For a failure, the median amount of time between the deployment that caused the failure and the restoration. Restoration is measured by the closing of an associated bug or incident report.

Change Failure Rate

- The number of failures per the number of deployments. For example, if there are four deployments in a day and one causes a failure, that is a 25% change failure rate.
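The definitions above can be checked with a small Python sketch over rows shaped like the derived tables (keys `deploy_id`, `changes`, `time_created`, `change_id`, `incident_id`, `time_resolved`). It is an illustration only, not the dashboard's SQL, and it makes its own choices: deployment frequency is counted per calendar day, lead time runs from each change's `time_created` to the deployment that shipped it, time to restore uses each incident's `time_created` and `time_resolved`, and the change failure rate counts distinct deployments named in any incident.

```python
from collections import Counter
from datetime import datetime, timedelta
from statistics import median
from typing import List


def deployment_frequency(deployments: List[dict]) -> Counter:
    """Number of deployments per calendar day (ISO date -> count)."""
    return Counter(d["time_created"].date().isoformat() for d in deployments)


def lead_time_for_changes(deployments: List[dict], changes: List[dict]) -> timedelta:
    """Median time from a change's creation to the deployment that shipped it."""
    created = {c["change_id"]: c["time_created"] for c in changes}
    return median(
        d["time_created"] - created[change_id]
        for d in deployments
        for change_id in d["changes"]
        if change_id in created
    )


def time_to_restore(incidents: List[dict]) -> timedelta:
    """Median time from an incident's time_created to its time_resolved."""
    return median(i["time_resolved"] - i["time_created"] for i in incidents)


def change_failure_rate(deployments: List[dict], incidents: List[dict]) -> float:
    """Fraction of deployments that are named in at least one incident."""
    deploy_ids = {d["deploy_id"] for d in deployments}
    failed = {dep for i in incidents for dep in i["changes"] if dep in deploy_ids}
    return len(failed) / len(deploy_ids) if deploy_ids else 0.0


if __name__ == "__main__":
    # The example from the text: four deployments in a day, one causes a failure.
    day = datetime(2020, 6, 1, 9, 0)
    deployments = [
        {"deploy_id": f"d{n}", "changes": [f"c{n}"], "time_created": day + timedelta(hours=n)}
        for n in range(4)
    ]
    incidents = [{"incident_id": "i1", "changes": ["d2"],
                  "time_created": day, "time_resolved": day + timedelta(hours=5)}]
    print(change_failure_rate(deployments, incidents))  # 0.25
```

Using medians rather than means keeps a single very slow change or incident from dominating the lead-time and restore-time figures.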

The color coding of the quarterly snapshots roughly follows the buckets set forth in the State of DevOps Report.

Deployment Frequency

- Green: Weekly
- Yellow: Monthly
- Red: Between once per month and once every 6 months
  - This will be expressed as “Yearly.”

Lead Time for Changes

- Green: Less than one week
- Yellow: Between one week and one month
- Red: Between one month and 6 months
- Red: Anything greater than 6 months
  - This will be expressed as “One year.”

Time to Restore Services

- Green: Less than one day
- Yellow: Between one day and one week
- Red: Between one week and a month
  - This will be expressed as “One month.”
- Red: Anything greater than a month
  - This will be expressed as “One year.”

Change Failure Rate

- Green: Less than 15%
- Yellow: 16% to 45%
- Red: Anything greater than 45%
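The thresholds for three of the four metrics translate directly into code. The Python sketch below is illustrative, not the dashboard's implementation, and it makes two assumptions the text leaves open: a change failure rate from 15% up to 16% is treated as yellow (the listed buckets do not cover that range), and “one month” is taken as 30 days. Deployment frequency is left out because its buckets describe a cadence rather than a single number.

```python
from datetime import timedelta

ONE_DAY = timedelta(days=1)
ONE_WEEK = timedelta(weeks=1)
ONE_MONTH = timedelta(days=30)  # assumption: a month is 30 days


def lead_time_color(lead_time: timedelta) -> str:
    """Green under a week, yellow up to a month, red beyond that."""
    if lead_time < ONE_WEEK:
        return "green"
    if lead_time <= ONE_MONTH:
        return "yellow"
    return "red"


def time_to_restore_color(restore_time: timedelta) -> str:
    """Green under a day, yellow under a week, red otherwise."""
    if restore_time < ONE_DAY:
        return "green"
    if restore_time < ONE_WEEK:
        return "yellow"
    return "red"


def change_failure_rate_color(rate: float) -> str:
    """Green under 15%, yellow up to 45% (15% to 16% assumed yellow), red above."""
    if rate < 0.15:
        return "green"
    if rate <= 0.45:
        return "yellow"
    return "red"


if __name__ == "__main__":
    print(change_failure_rate_color(0.25))            # yellow
    print(time_to_restore_color(timedelta(hours=6)))  # green
```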

Disclaimer: This is not an officially supported Google product