torchtitan is currently in a pre-release state and under extensive development.

torchtitan is a native PyTorch reference architecture showcasing some of the latest PyTorch techniques for large-scale model training.

- Designed to be easy to understand, use and extend for different training purposes.

- Minimal changes to the model code when applying 1D, 2D, or (soon) 3D parallelism.

- Modular components instead of a monolithic codebase.

- Get started in minutes, not hours!

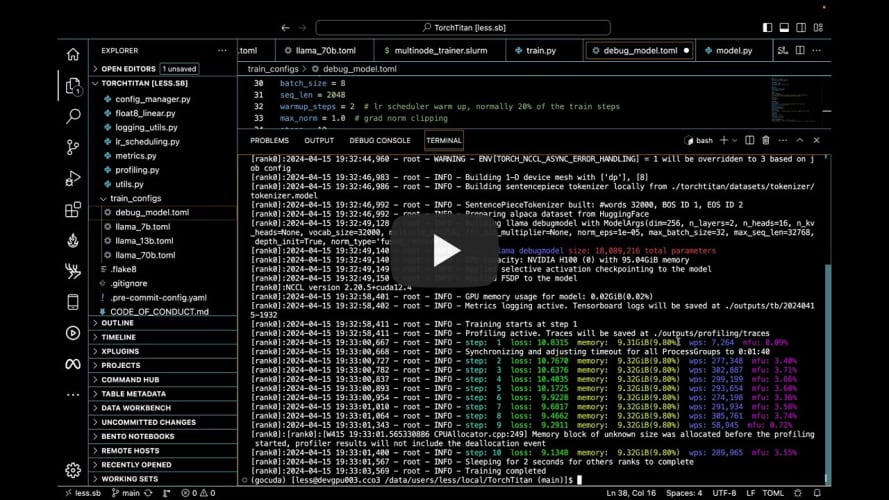

Currently we showcase pre-training Llama 3 and Llama 2 models (LLMs) of various sizes from scratch. torchtitan is tested and verified with the PyTorch nightly version torch-2.4.0.dev20240412. (We recommend the latest PyTorch nightly.)

Key features available:

1 - FSDP2 (per param sharding)

2 - Tensor Parallel (FSDP + Tensor Parallel)

3 - Selective layer and operator activation checkpointing

4 - Distributed checkpointing

5 - 2 datasets pre-configured (45K - 144M)

6 - GPU usage, MFU, tokens per second and other metrics all reported and displayed via TensorBoard.

7 - Fused RMSNorm (optional), learning rate scheduler, meta init, and more.

8 - All options easily configured via toml files.

9 - Performance verified on 64 A100 GPUs.

10 - Save pre-trained torchtitan model weights and load them directly into torchtune for fine-tuning.

Coming soon:

1 - Async checkpointing

2 - FP8 support

3 - Context Parallel

4 - 3D parallelism (Pipeline Parallel)

5 - torch.compile support

6 - Scalable data loading solution

Install PyTorch from source or install the latest PyTorch nightly, then install the requirements:

```bash
pip install -r requirements.txt
```

torchtitan currently supports training Llama 3 (8B, 70B) and Llama 2 (7B, 13B, 70B) out of the box. To train these models, you first need to download a tokenizer.model. Follow the instructions on the official meta-llama repository to ensure you have access to the Llama model weights.

Once you have confirmed access, you can run the following commands to download the Llama 3 or Llama 2 tokenizer to your local machine.

```bash
# pass your hf_token in order to download tokenizer.model

# llama3 tokenizer.model
python torchtitan/datasets/download_tokenizer.py --repo_id meta-llama/Meta-Llama-3-8B --tokenizer_path "original" --hf_token=...

# llama2 tokenizer.model
python torchtitan/datasets/download_tokenizer.py --repo_id meta-llama/Llama-2-13b-hf --hf_token=...
```

Run the Llama 3 8B model locally on 8 GPUs:

```bash
CONFIG_FILE="./train_configs/llama3_8b.toml" ./run_llama_train.sh
```

To visualize TensorBoard metrics of models trained on a remote server via a local web browser:

- Make sure the metrics.enable_tensorboard option is set to true for model training (either in a .toml file or from the CLI).

- Set up SSH tunneling by running the following from your local CLI:

  ```bash
  ssh -L 6006:127.0.0.1:6006 [username]@[hostname]
  ```

- In the SSH session logged into the remote server, go to the torchtitan repo and start the TensorBoard backend:

  ```bash
  tensorboard --logdir=./outputs/tb
  ```

- In your local web browser, go to the URL it provides or to http://localhost:6006/.
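If TensorBoard opens but shows no data, training may not have written any logs yet. The following is a minimal sketch for checking this before starting the backend. It assumes the ./outputs/tb log directory used above and relies on the standard TensorBoard convention that event files are named events.out.tfevents.*; the function name has_tb_events is hypothetical.

```bash
# Hypothetical helper: succeed only if the log directory (default ./outputs/tb)
# contains at least one TensorBoard event file, searching subdirectories too.
has_tb_events() {
  local logdir="${1:-./outputs/tb}"
  [ -d "$logdir" ] && find "$logdir" -type f -name 'events.out.tfevents*' | grep -q .
}
```

For example, `has_tb_events ./outputs/tb && tensorboard --logdir=./outputs/tb` starts the backend only when there is something to display; if the check fails, confirm that metrics.enable_tensorboard was set to true for the training run.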

For training on ParallelCluster/Slurm type configurations, you can use the multinode_trainer.slurm file to submit your sbatch job.

Note that you will need to adjust the number of nodes and the GPU count to match your cluster configuration.

To adjust the total number of nodes:

```bash
#SBATCH --ntasks=2
#SBATCH --nodes=2
```

should both be set to your total node count. Then update the srun launch parameters to match:

```bash
srun torchrun --nnodes 2
```

where nnodes is your total node count, matching the sbatch node count above.
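Because the node count appears in three places, it is easy to update one and forget another. The following is a minimal sketch that rewrites all three at once. It assumes the lines appear in multinode_trainer.slurm in the forms shown above (#SBATCH --ntasks=N, #SBATCH --nodes=N, and srun torchrun --nnodes N on one line) and that GNU sed is available; set_slurm_nodes is a hypothetical name, not part of torchtitan.

```bash
# Hypothetical helper: set every node-count field in a Slurm script in place.
# Assumes GNU sed (-i with no suffix) and the line formats shown above.
set_slurm_nodes() {
  local file="$1" nodes="$2"
  sed -i -E \
    -e "s/^#SBATCH --ntasks=[0-9]+/#SBATCH --ntasks=${nodes}/" \
    -e "s/^#SBATCH --nodes=[0-9]+/#SBATCH --nodes=${nodes}/" \
    -e "s/(torchrun.*--nnodes[ =])[0-9]+/\1${nodes}/" \
    "$file"
}
```

For example, `set_slurm_nodes multinode_trainer.slurm 4` keeps --ntasks, --nodes, and --nnodes in agreement. On macOS, BSD sed needs `-i ''` instead of `-i`.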

To adjust the GPU count per node:

If your GPU count per node is not 8, adjust `--nproc_per_node` in the torchrun command and `#SBATCH --gpus-per-task` in the SBATCH command section.
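The per-node GPU count likewise has to agree in two places, and together with the node count it determines the total number of training processes (nodes × GPUs per node). A minimal sketch follows, assuming the script uses the forms #SBATCH --gpus-per-task=N and --nproc_per_node N (or --nproc_per_node=N), and that GNU sed is available; set_slurm_gpus_per_node and world_size are hypothetical names.

```bash
# Hypothetical helper: set the per-node GPU count in both places it appears.
# Assumes GNU sed and the "#SBATCH --gpus-per-task=N" / "--nproc_per_node N" forms.
set_slurm_gpus_per_node() {
  local file="$1" gpus="$2"
  sed -i -E \
    -e "s/^#SBATCH --gpus-per-task=[0-9]+/#SBATCH --gpus-per-task=${gpus}/" \
    -e "s/--nproc_per_node([ =])[0-9]+/--nproc_per_node\1${gpus}/" \
    "$file"
}

# Total number of training processes (ranks): nodes x GPUs per node.
world_size() {
  echo $(( $1 * $2 ))
}
```

For example, the default setup of 2 nodes with 8 GPUs each gives `world_size 2 8`, which prints 16.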

torchtitan is a proof-of-concept for large-scale LLM training using native PyTorch. It is (and will continue to be) a repo to showcase PyTorch's latest distributed training features in a clean, minimal codebase. torchtitan is complementary to, and not a replacement for, any of the great large-scale LLM training codebases such as Megatron, Megablocks, LLM Foundry, DeepSpeed, etc. Instead, we hope that the features showcased in torchtitan will be adopted by these codebases quickly. torchtitan is unlikely to ever grow a large community around it.

This code is made available under the BSD 3 license. However, you may have other legal obligations that govern your use of other content, such as the terms of service for third-party models, data, etc.