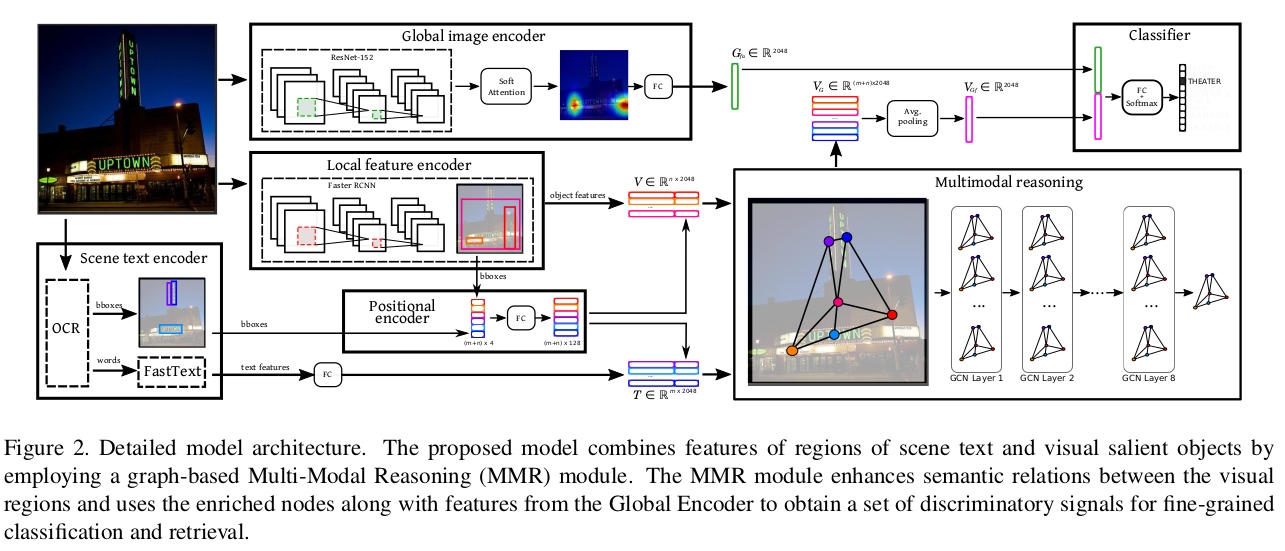

Multi-Modal Reasoning Graph for Scene-Text Based Fine-Grained Image Classification and Retrieval

Based on our paper accepted at WACV 2021: https://arxiv.org/abs/2009.09809

Create Conda environment

$ conda env create -f environment.yml

Activate the environment

$ conda activate finegrained

Con-Text dataset can be downloaded from: https://staff.fnwi.uva.nl/s.karaoglu/datasetWeb/Dataset.html

Drink-Bottle dataset: https://drive.google.com/open?id=1ss9Pxr7rsdCpYX7uKjd-_1R4qCpUYTWT

Con-Text dataset: https://drive.google.com/file/d/1axyxyYp6GJBcakjs9bMOdkg4Cq9DtB1b/view?usp=sharing

Drink-Bottle dataset: https://drive.google.com/file/d/1SDR9NSlOvNJZsCgOaxYFoKkLALJiPKWs/view?usp=sharing

Please download the files listed below and arrange them in the directory structure indicated.

The path given after each link shows where that file should be placed.

The visual features were extracted with the Faster R-CNN model proposed in the paper Bottom-Up and Top-Down Attention for Image Captioning and Visual Question Answering.

For more details, please refer to our paper pre-print.

CONTEXT DATASET

Visual Features Con-Text: https://drive.google.com/file/d/1stQGz0uH035uYTgXbjwGAfn-q2GzXSaM/view?usp=sharing

BBOXES Features Con-Text: https://drive.google.com/file/d/107H-sTRGWAAi_2qOSlTAKEG8meuGTQti/view?usp=sharing

OCR Textual Features Con-Text: https://drive.google.com/file/d/1mIhjeas4sVgevk7TSsiAflc--_Iqltpk/view?usp=sharing

OCR Textual Con-Text: https://drive.google.com/file/d/1T5Ui3Xu2g5q9ajpwKmcA9R59KU-sg9EO/view?usp=sharing

Unzip the following Context files to run the code:

https://drive.google.com/file/d/1ls6OdIMEHE5AVjt8HfJWC4mUem71egN9/view?usp=sharing

DRINK BOTTLE DATASET

Visual Features Drink Bottle: https://drive.google.com/file/d/1jpAOB1ICVby0YIQQyNfAL7do1RJq5_Xn/view?usp=sharing

BBOXES Features Drink Bottle: https://drive.google.com/file/d/15-9tmBfwtC4bi_FB3tgHp5u7xTAzKI--/view?usp=sharing

OCR Textual Features Drink Bottle: https://drive.google.com/file/d/1a4WEoRzo6sx4KAX-5TarAaebbc_EJ5AG/view?usp=sharing

OCR Textual BBOXES preds: https://drive.google.com/file/d/1ZQ3ID3IDzwqzx94P2fNXr-agjAhH8YSS/view?usp=sharing

Unzip the following Drink Bottles files to run the code:

https://drive.google.com/file/d/1Mx8iLUuZTVtgyQ457J7mLdCzKlRUl0_e/view?usp=sharing

$ export Data_DIR=$PATH_TO_DATASETS$
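Before training, it can help to confirm that `Data_DIR` is set and points to an existing directory. The following is a minimal sketch and is not part of the repository: the helper name `resolve_data_dir` is hypothetical, and only the variable name `Data_DIR` comes from the command above.

```python
import os
from pathlib import Path


def resolve_data_dir(env=None, var_name="Data_DIR"):
    """Return the dataset root read from the environment, or raise a clear error.

    Hypothetical helper: only the variable name Data_DIR comes from this README.
    """
    env = os.environ if env is None else env
    value = env.get(var_name, "").strip()
    if not value:
        raise RuntimeError(
            f"{var_name} is not set; run: export {var_name}=<path to datasets>"
        )
    path = Path(value).expanduser()
    if not path.is_dir():
        raise RuntimeError(f"{var_name} points to {path}, which is not a directory")
    return path
```

Calling `resolve_data_dir()` with no arguments reads the current process environment; passing a plain dict instead makes the check easy to exercise without touching the real environment.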

Train the model (please refer to the code to decide which args to use):

$ python train.py

Example:

Context dataset

$ python train.py context --batch_size 64 --model fullGCN_bboxes --ocr google_ocr --embedding fasttext --max_textual 15 --max_visual 36 --projection_layer mean --fusion concat --data_path $Data_DIR --split 1

Drink Bottle dataset

$ python train.py bottles --batch_size 64 --model fullGCN_bboxes --ocr google_ocr --embedding fasttext --max_textual 15 --max_visual 36 --projection_layer mean --fusion concat --data_path $Data_DIR --split 1
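The two example commands differ only in the dataset name, so they can be assembled programmatically when running several configurations. The sketch below is an illustration rather than repository code: `build_train_command` and `DEFAULT_FLAGS` are hypothetical names, the path `/data/finegrained` is a placeholder, and the flag names and values are copied from the examples above. Which other values each flag accepts is defined in `train.py` and is not assumed here.

```python
import shlex

# Flag values copied verbatim from the example commands in this README.
DEFAULT_FLAGS = {
    "batch_size": 64,
    "model": "fullGCN_bboxes",
    "ocr": "google_ocr",
    "embedding": "fasttext",
    "max_textual": 15,
    "max_visual": 36,
    "projection_layer": "mean",
    "fusion": "concat",
}


def build_train_command(dataset, data_path, split=1, **overrides):
    """Assemble the train.py argument list for one dataset and split.

    Hypothetical helper; keyword overrides replace entries of DEFAULT_FLAGS.
    """
    flags = {**DEFAULT_FLAGS, **overrides}
    cmd = ["python", "train.py", dataset]
    for name, value in flags.items():
        cmd += [f"--{name}", str(value)]
    cmd += ["--data_path", str(data_path), "--split", str(split)]
    return cmd


# Print one command per dataset; "/data/finegrained" is a placeholder path.
for dataset in ("context", "bottles"):
    cmd = build_train_command(dataset, "/data/finegrained")
    print(" ".join(shlex.quote(part) for part in cmd))
```

The returned list can also be passed directly to `subprocess.run(cmd, check=True)` from the repository root, which avoids shell quoting altogether.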

If you find this code useful, please cite the following paper:

@article{mafla2020multi,
  title={Multi-Modal Reasoning Graph for Scene-Text Based Fine-Grained Image Classification and Retrieval},
  author={Mafla, Andres and Dey, Sounak and Biten, Ali Furkan and Gomez, Lluis and Karatzas, Dimosthenis},
  journal={arXiv preprint arXiv:2009.09809},
  year={2020}
}