We are going to explore the basics of robot modeling using the Unified Robot Description Format (URDF) and we will have a model ready and running in Gazebo simulator.

-

inside src/:

sh$ catkin_create_pkg m2wr_description rospy -

inside catkin_ws/:

$ catkin_make

$ source devel/setup.bash- Launch rviz:

$ roslaunch m2wr_description rviz.launch - Launch gazebo:

$ roslaunch gazebo_ros empty_world.launch- Load our model:

$ roslaunch m2wr_description spawn.launch - Run the following code in order to control the robot using the keyboard:

$ rosrun teleop_twist_keyboard teleop_twist_keyboard.pyWe are going to explore the macros for URDF files, using XACRO files and we will have the same model organized in different files, in a organized way.

We are going to insert a laser scan sensor to a 2 wheeled robot the robot.

We will modify the urdf file (m2wr.xacro) as follows:

-

Add a link element to our robot. This link will be cylindrical in shape and will represent the sensor.

-

Add a joint element to our robot. This will connect the sensor to robot body rigidly.

-

Define a new macro to calculate the inertial property of a cylinder using its dimensions (length and radius).

-

Finally a laser scan sensor plugin element will add sensing ability to the link that we created (the cylinder representing the sensor).

-

Having aded object in front of the robot in gazebo, open rviz and do the following:

-

After starting rviz open the Graphical Tools window. Once rviz window loads you need to do the following settings

-

Select odom in the Fixed Frame field (see the image below)

-

Add two new displays using the Add button on the left bottom of rviz screen. The first display should be RobotModel and the other should be LaserScan

-

Expand the LaserScan display by double clicking on its name and choose Topic as /m2wr/laser/scan

-

-

Then move the robot and rviz should mark the sensor detecting this objects.

We are going to read the values of the laser scanner and filter a small part to work with.

- Clone the following repository, but just keep the 'my_worlds' folder:

$ cd simulation_ws/src

$ git clone https://marcoarruda@bitbucket.org/theconstructcore/two-wheeled-robot-simulation.gitThe 'my_worlds' package consists of the following directories:

1. launch : Contains a launch file.

2. worlds : Contains multiple world description files.

We can run the world1 in Gazebo by writing:

$ roslaunch my_worlds world1.launchCreate a new catkin package named motion_plan with dependencies rospy, std_msgs, geometry_msgs and sensor_msgs. Write the following commands:

$ cd ~/catkin_ws/src

$ catkin_create_pgk motion_plan rospy std_msgs geometry_msgs sensor_msgs

$ cd motion_plan

$ mkdir scripts

$ touch scripts/reading_laser.pyThis reading_laser file will read the laser scan data coming on the /m2wr/laser/scan topic. Making the script executable:

$ cd ~/catkin_ws/src/motion_plan/scripts/

$ chmod +x reading_laser.pyTo spawn our robot into the gazebo simulation write:

$ roslaunch m2wr_description spawn.launch

$ rosrun motion_plan reading_laser.pyPart 5: An obstacle avoidance algorithm

An obstacle avoidance algorithm will be explained.

Clone the following repository:

$ cd catkin_ws/src

$ git clone https://marcoarruda@bitbucket.org/theconstructcore/two-wheeled-robot-motion-planning.gitLaunch the world02 scenario:

$ roslaunch my_worlds world2.launchTo test the obstacle avoidance algorithm, spawn the robot into the world, as before and run the script:

$ rosrun motion_plan obstacle_avoidance.pyYou can change various settings like speed of robot, sensing distance etc and see how it works.

Part 6: Create an algorithm to go from a point to another

We are going create an algorithm to go from a point to another using the odometry data to localize the robot.

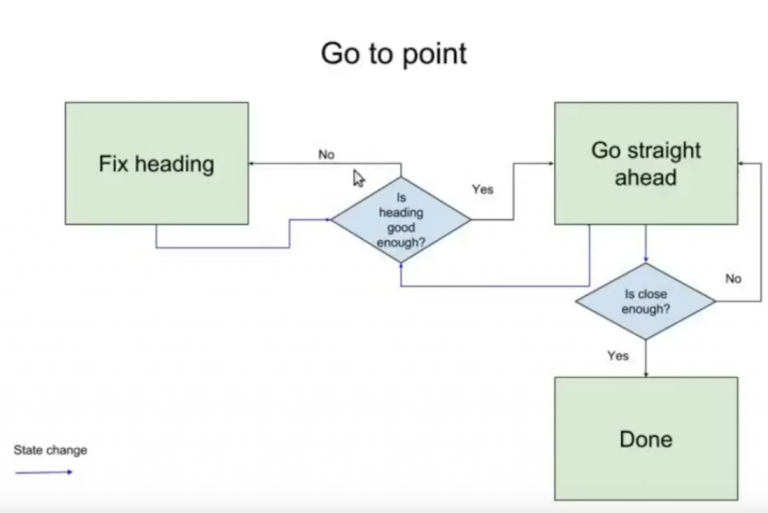

We will use the concept of state machines to implement the navigation logic. In a state machine there are finite number of states that represent the current situation (or behavior) of the system. In our case, we have three states:

-

Fix Heading: Denotes the state when robot heading differs from the desired heading by more than a threshold (represented by

yaw_precision_in code) -

Go Straight: Denotes the state when robot has correct heading but is away from the desired point by a distance greater than some threshold ( represented by

dist_precision_in code) -

Done: Denotes the state when robot has correct heading and has reached the destination.

The robot can be in any one state at a time and can switch to other states as different conditions arise. This is depicted by the following state transition diagram

As made before, given that the world2 is already loaded, spawn the robot into the scenario and run the obstacle avoidance script:

$ rosrun motion_plan obstacle_avoidance.pyPart 7: Work with wall following robot algorithm

Part 8: Work with the Bug 0 algorithm

Part 10: Perform the motion planning task Bug 1

Part 11: From ROS Indigo to Kinetic