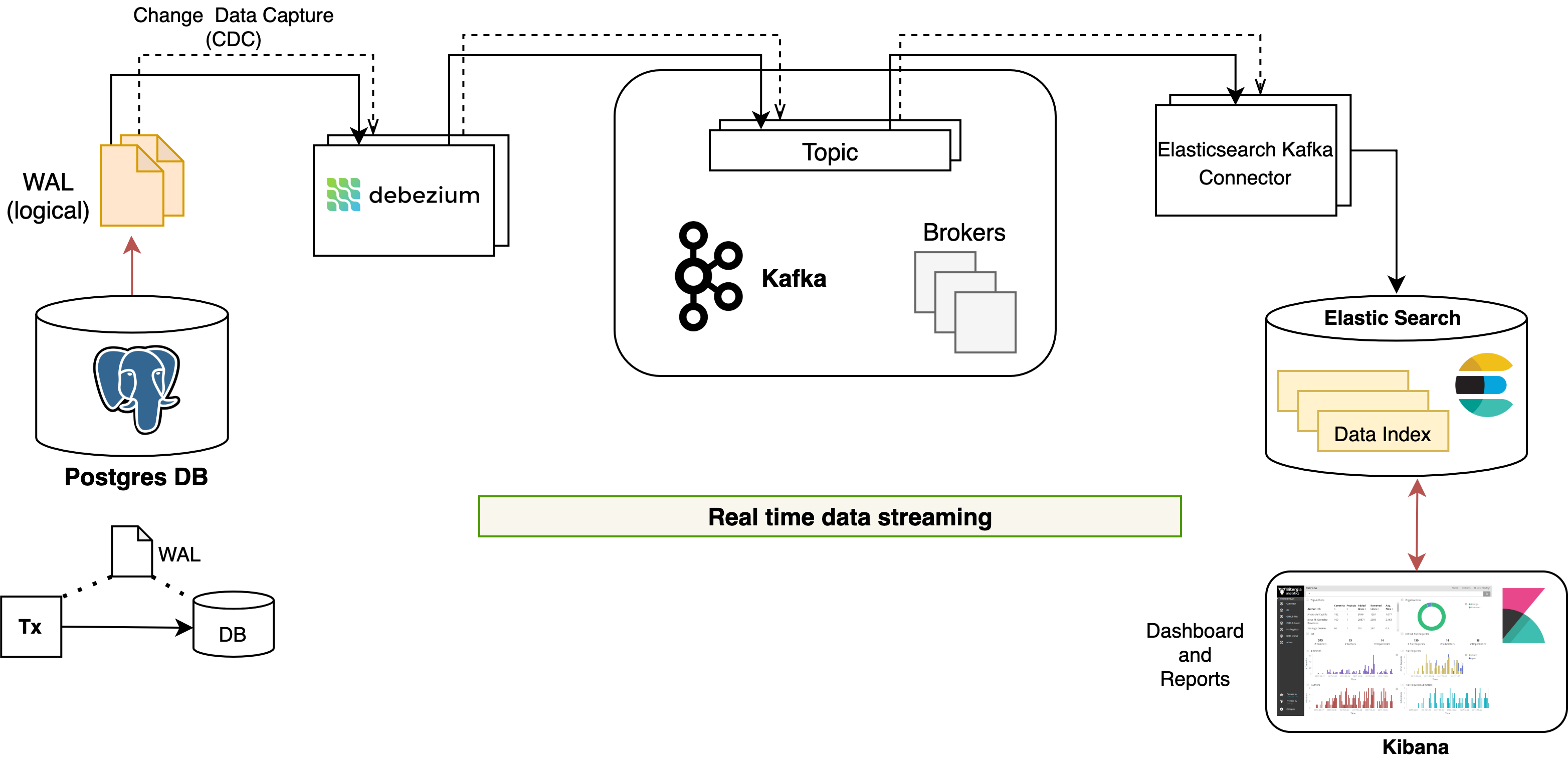

Reporting framework for real-time streaming data and visualization.

- MOSIP cluster installed as given here

- Elasticsearch and Kibana already running in the cluster.

- Postgres installed with `extended.conf`. (MOSIP default install has this configured.)

- Inspect `scripts/values.yaml` for modules to be installed.

- Inspect `scripts/values-init.yaml` for connector configs.

- Run

      cd scripts
      ./install.sh [kube-config-file]

All components will be installed in the `reporting` namespace of the cluster.

- NOTE: For the `db_user`, use the superuser `postgres` for now, because any other user would require the db ownership permission, the create permission, and the replication permission. (TODO: solve the problems when using a different user.)

- NOTE: Before installing `reporting-init`, make sure the Debezium configuration includes all tables under that db. If one wants to add another table from the same db later on, it might be harder. (TODO: develop some script that adds additional tables under the same db.)

Various Kibana dashboards are available in the `dashboards` folder. Upload all of them with the following script:

    cd scripts
    ./load_kibana_dashboards.sh

The dashboards may also be uploaded manually using the Kibana UI.

Install your own connectors as given here

To clean up the entire data pipeline follow the steps given here

CAUTION: Know what you are doing!

- Debezium, a Kafka "SOURCE" connector, puts all the WAL log data into Kafka in raw form, without modifying anything. So there are no "transformations" on the source connector side.

- So it is the job of whoever reads these Kafka topics to modify the data into the form in which it should be put into Elasticsearch.

- Currently, we are using Confluent's Elasticsearch Kafka Connector, a "SINK" connector (like Debezium, this is also a Kafka connector), to put data from Kafka topics into Elasticsearch indices. But this method also puts the data into Elasticsearch raw. (Right now we have multiple sink "connections" between Kafka and Elasticsearch, almost one for each topic.)

- To modify the data, we can use Kafka Connect's SMTs (Single Message Transforms). Each of these transforms changes each Kafka record in a particular way, and each individual connection can be configured so that these SMTs are applied in a chain.

- Please note that from here on the terms "connector" and "connection" are used interchangeably; both mean an individual Kafka connection (source or sink). All the reference connector configuration files can be found here.

- For how each of these ES connections configures and uses the transforms, and how they are chained, refer to any of the files here. For more info on the connectors themselves, refer here.

- Side note: We have explored Spark (i.e., a method that doesn't use Kafka sink connectors) to stream these Kafka topics and put that data into Elasticsearch manually. There are many complications this way, so we are currently continuing with the ES Kafka Connect + SMT approach.

- So the custom transforms that are written just need to be available in the Docker image of the es-kafka-connector. Find the code for these transforms, and more details on how to develop and build them and how to build the es-kafka-connector Docker image, here.

- Please note that the `slot.drop.on.stop` property in the file `debez-sample-conn.api` under here should be `false` in production, so that the logical replication slot is not deleted when the connector stops in a graceful, expected way. Set it to `true` only in testing or development environments. Dropping the slot allows the database to discard WAL segments. When the connector restarts, it performs a new snapshot or it can continue from a persistent offset in the Kafka Connect offsets topic.
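
As a rough illustration of the `slot.drop.on.stop` note above, the following Python sketch builds a Debezium Postgres source connector config and checks it before it would be used in production. The property keys are standard Debezium Postgres connector properties. The connector name, host, database, table list, and slot name are placeholders invented for this example, and the two helper functions are hypothetical. None of this is the actual content of `debez-sample-conn.api`.

```python
import json


def build_source_config(production):
    """Return an example Debezium Postgres source connector definition.

    All values are illustrative placeholders (assumptions), not the
    settings shipped with this repository.
    """
    return {
        "name": "example-debezium-source",  # hypothetical connector name
        "config": {
            "connector.class": "io.debezium.connector.postgresql.PostgresConnector",
            "plugin.name": "pgoutput",
            "database.hostname": "postgres.example",  # placeholder host
            "database.port": "5432",
            "database.user": "postgres",  # superuser, as the note above suggests
            "database.dbname": "example_db",  # placeholder db
            # List every table of this db up front; adding one later is harder.
            "table.include.list": "public.table_a,public.table_b",
            "slot.name": "example_slot",  # placeholder slot name
            # Keep the replication slot on graceful stop in production.
            "slot.drop.on.stop": "false" if production else "true",
        },
    }


def check_production_safe(connector):
    """Return a list of human-readable problems; empty means no problem found."""
    problems = []
    value = str(connector["config"].get("slot.drop.on.stop", "false")).lower()
    if value == "true":
        problems.append("slot.drop.on.stop must be false in production")
    return problems


if __name__ == "__main__":
    prod = build_source_config(production=True)
    print(json.dumps(prod, indent=2))
    print(check_production_safe(prod))
```

A check like this could run before a connector definition is submitted, so that a development config with `slot.drop.on.stop` set to `true` is caught early.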

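The SMT chaining described in the notes above can be sketched in Python. Kafka Connect reads the `transforms` property as an ordered list of aliases and configures each one through `transforms.<alias>.*` keys; that convention, the two transform class names, and the Elasticsearch sink connector class are real. The topic, URL, and field values are placeholders, and the Python functions are toy stand-ins written for this example. They only mimic the general effect of the named transforms and are not the custom transforms used in this repository.

```python
# Example sink connector config fragment (placeholder values, an assumption).
sink_config = {
    "connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector",
    "topics": "example.public.table_a",  # hypothetical topic
    "connection.url": "http://elasticsearch.example:9200",  # placeholder URL
    # Aliases are applied left to right.
    "transforms": "unwrap,tag",
    "transforms.unwrap.type": "io.debezium.transforms.ExtractNewRecordState",
    "transforms.tag.type": "org.apache.kafka.connect.transforms.InsertField$Value",
    "transforms.tag.static.field": "source_system",
    "transforms.tag.static.value": "example",
}


def unwrap(value, params):
    # Toy stand-in: keep only the "after" row state of a Debezium change event.
    return dict(value["after"])


def insert_static(value, params):
    # Toy stand-in: add one static field to the record value.
    out = dict(value)
    out[params["static.field"]] = params["static.value"]
    return out


# Maps transform class names to the toy Python implementations above.
TOY_TRANSFORMS = {
    "io.debezium.transforms.ExtractNewRecordState": unwrap,
    "org.apache.kafka.connect.transforms.InsertField$Value": insert_static,
}


def apply_chain(config, value):
    """Apply each configured transform to the record value, in listed order."""
    aliases = [a.strip() for a in config["transforms"].split(",")]
    for alias in aliases:
        prefix = "transforms.%s." % alias
        params = {k[len(prefix):]: v for k, v in config.items() if k.startswith(prefix)}
        value = TOY_TRANSFORMS[params["type"]](value, params)
    return value


# A simplified Debezium-style change event for an inserted row.
debezium_value = {"before": None, "after": {"id": 1, "status": "NEW"}, "op": "c"}
result = apply_chain(sink_config, debezium_value)
print(result)
```

Order matters in such a chain: here the static field is added to the already unwrapped row, so swapping the two aliases would give a different result.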
This project is licensed under the terms of Mozilla Public License 2.0.