Algorithm: Decentralized Parallel Stochastic Gradient Descent

- Install PyTorch (pytorch.org)

- A GPU cluster with OpenMPI installed for inter-node communication
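For orientation, here is a minimal, dependency-free simulation of the generic decentralized parallel SGD (D-PSGD) update: each worker averages its parameters with its neighbours and then takes a local gradient step, `x_i <- sum_j W_ij x_j - lr * grad_i(x_i)`. This is a sketch under stated assumptions, not the logic of `PSGD.py` (which also uses a coordinator node). The ring topology, the uniform 1/3 mixing weights, the toy quadratic losses `0.5 * (x - a_i)^2`, and the function name `dpsgd_ring` are all hypothetical choices for illustration. Exact local gradients stand in for stochastic minibatch gradients unless `noise > 0`.

```python
import random


def dpsgd_ring(num_workers=4, steps=200, lr=0.1, noise=0.0, seed=0):
    """Simulate D-PSGD on a ring of scalar-parameter workers (hypothetical toy setup).

    Worker i minimizes f_i(x) = 0.5 * (x - a_i) ** 2 with a_i = i, so the
    global optimum of the average loss is the mean of the a_i.
    """
    rng = random.Random(seed)
    n = num_workers
    targets = [float(i) for i in range(n)]          # hypothetical local optima a_i
    x = [rng.uniform(-5.0, 5.0) for _ in range(n)]  # independent initial parameters

    for _ in range(steps):
        # Local (optionally noisy) gradients evaluated at each worker's current x_i.
        grads = [x[i] - targets[i] + rng.gauss(0.0, noise) if noise > 0 else x[i] - targets[i]
                 for i in range(n)]
        # Gossip step: average with left and right ring neighbours (doubly stochastic W).
        mixed = [(x[(i - 1) % n] + x[i] + x[(i + 1) % n]) / 3.0 for i in range(n)]
        # D-PSGD update: mixed parameters minus a local gradient step.
        x = [mixed[i] - lr * grads[i] for i in range(n)]

    return x, targets


if __name__ == "__main__":
    params, targets = dpsgd_ring()
    print("worker params:", [round(p, 3) for p in params])
    print("mean of params:", round(sum(params) / len(params), 6))
```

Because the mixing matrix is doubly stochastic, the average of the workers' parameters follows plain gradient descent on the average loss, while the individual workers stay close to (but, with a constant learning rate, not exactly at) consensus.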

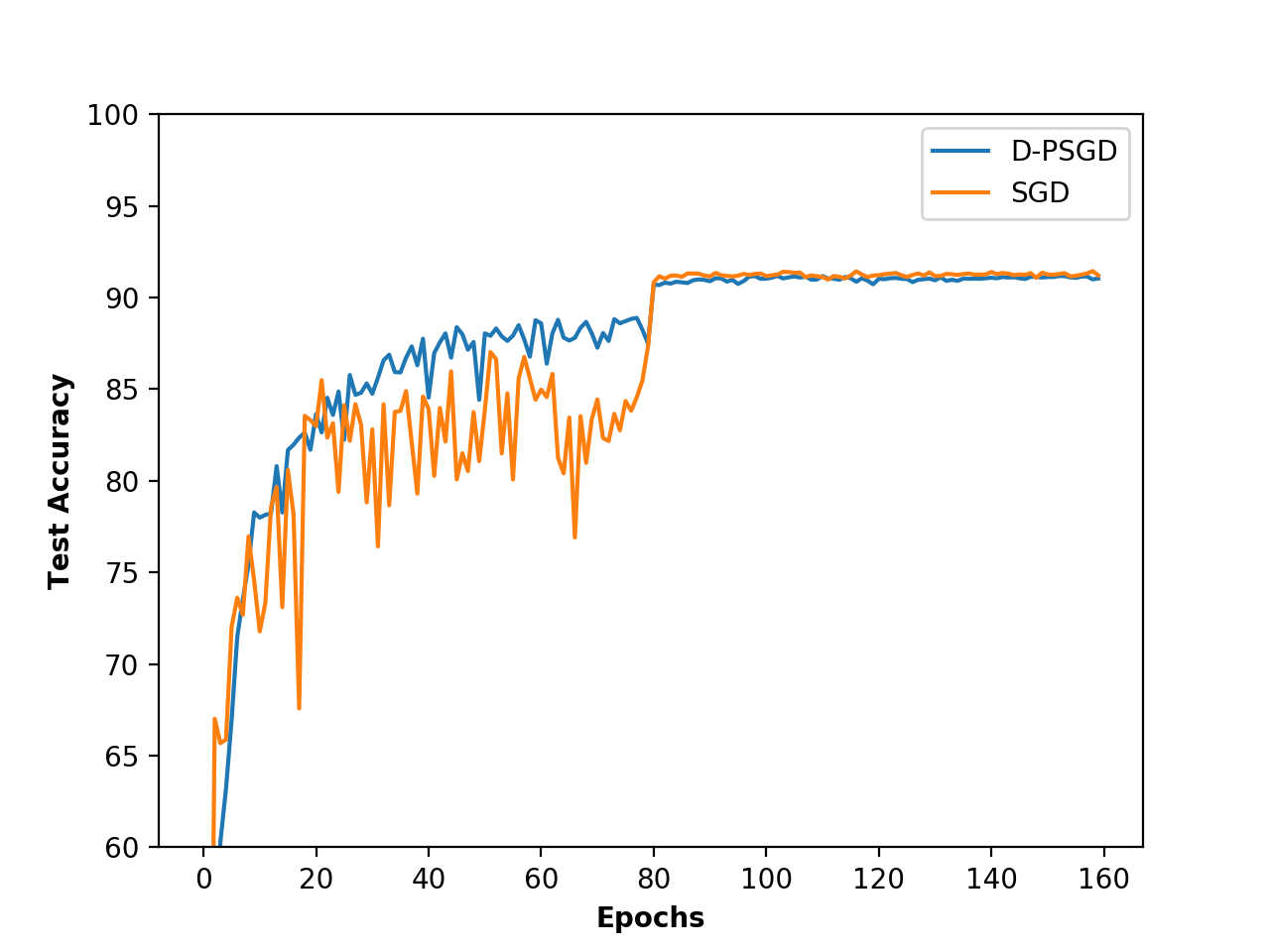

A 20-layer ResNet model and the CIFAR-10 dataset are chosen for evaluation. Use the command below to start a training process on 1 coordinator node and 4 training nodes. `-n 5` launches five MPI processes, placed on the machines listed in the hostfile `hosts`.

```shell
mpirun -n 5 --hostfile hosts python PSGD.py --epochs 160 --lr 0.5
```
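One plausible way the five launched processes could split into 1 coordinator and 4 trainers is by MPI rank, as sketched below. This is an assumption, not a description of `PSGD.py`: the convention that rank 0 is the coordinator, the strided sharding of the 50,000 CIFAR-10 training images, and the name `assign_role` are hypothetical. In a real run the rank would come from something like mpi4py's `MPI.COMM_WORLD.Get_rank()`, and the index shard could be wrapped with `torch.utils.data.Subset`.

```python
CIFAR10_TRAIN_SIZE = 50_000  # number of images in the CIFAR-10 training split


def assign_role(rank, world_size, dataset_size=CIFAR10_TRAIN_SIZE):
    """Map an MPI rank to a role (hypothetical convention: rank 0 = coordinator).

    Returns ("coordinator", None) for rank 0, otherwise ("trainer", indices),
    where indices is a disjoint strided shard of the training set.
    """
    if world_size < 2:
        raise ValueError("need at least one coordinator and one trainer")
    if not 0 <= rank < world_size:
        raise ValueError(f"rank {rank} out of range for world size {world_size}")
    if rank == 0:
        return "coordinator", None
    num_trainers = world_size - 1
    trainer_id = rank - 1
    # Trainer k takes images k, k + T, k + 2T, ... (T = number of trainers).
    return "trainer", range(trainer_id, dataset_size, num_trainers)


if __name__ == "__main__":
    # Matches `mpirun -n 5`: one coordinator plus four trainers.
    for r in range(5):
        role, shard = assign_role(r, 5)
        print(r, role, None if shard is None else len(shard))
```

With five processes, each of the four trainers receives 12,500 distinct training images.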