This is a PyTorch implementation of the research paper Deep-Emotion.

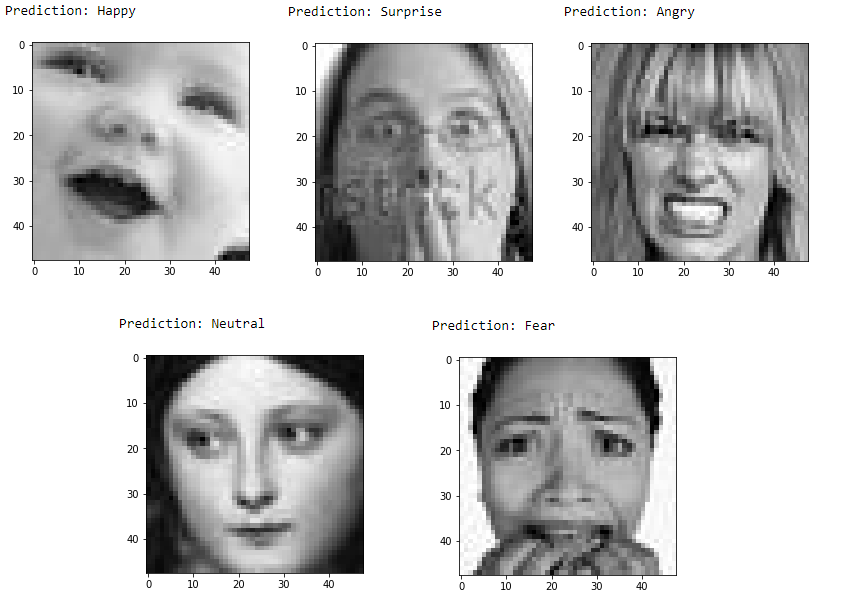

- An end-to-end deep learning framework based on an attentional convolutional network

- The attention mechanism is added through a spatial transformer network
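To make the attention idea concrete, here is a minimal sketch of a spatial transformer block in PyTorch. It is not the model class shipped in this repository: the class name `SpatialAttention`, the layer sizes, and the 48x48 single-channel input are all assumptions chosen for illustration. A small localization network predicts a 2x3 affine matrix, and the input is resampled through that transform.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class SpatialAttention(nn.Module):
    """Illustrative spatial transformer block (hypothetical, not the repo's model)."""

    def __init__(self, in_channels: int = 1, input_size: int = 48):
        super().__init__()
        # Localization network: extracts features used to predict the transform.
        self.localization = nn.Sequential(
            nn.Conv2d(in_channels, 8, kernel_size=3, padding=1),
            nn.MaxPool2d(2),
            nn.ReLU(inplace=True),
            nn.Conv2d(8, 10, kernel_size=3, padding=1),
            nn.MaxPool2d(2),
            nn.ReLU(inplace=True),
        )
        feat = input_size // 4  # two 2x2 poolings halve the size twice
        # Regressor for the six affine parameters.
        self.fc_loc = nn.Sequential(
            nn.Linear(10 * feat * feat, 32),
            nn.ReLU(inplace=True),
            nn.Linear(32, 6),
        )
        # Start from the identity transform so training begins with no warping.
        self.fc_loc[2].weight.data.zero_()
        self.fc_loc[2].bias.data.copy_(
            torch.tensor([1, 0, 0, 0, 1, 0], dtype=torch.float)
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        features = self.localization(x).flatten(1)
        theta = self.fc_loc(features).view(-1, 2, 3)
        # align_corners is left at its default so the calls also work on older
        # PyTorch releases; newer releases may emit a warning about it.
        grid = F.affine_grid(theta, x.size())
        return F.grid_sample(x, grid)
```

Because the last layer is initialised to the identity transform, an untrained block returns its input essentially unchanged; any cropping or warping behaviour is learned during training.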

To run this code, you need to have the following libraries:

- pytorch >= 1.1.0

- torchvision == 0.5.0

- opencv

- tqdm

- PIL
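As a quick sanity check before running anything, the following sketch reports which of these libraries are installed. The distribution names in `REQUIRED` (for example `opencv-python` for opencv and `Pillow` for PIL) are assumptions about how the packages were installed, and `importlib.metadata` requires Python 3.8 or newer.

```python
from importlib import metadata

# Assumed pip distribution names for the libraries listed above.
REQUIRED = ["torch", "torchvision", "opencv-python", "tqdm", "Pillow"]


def installed_versions(names=REQUIRED):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, version in installed_versions().items():
        print(f"{name}: {version or 'not installed'}")
```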

This repository is organized as follows:

- main: contains the dataset setup and the training loop.

- visualize: contains the source code for evaluating the model on test data and for real-time testing with a webcam.

- deep_emotion: contains the model class.

- data_loaders: contains the dataset class.

- generate_data: contains the setup of the dataset.

Download the dataset from Kaggle and decompress train.csv and test.csv into the ./data folder.
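A small check like the one below can confirm the files landed in the right place before setup. The helper name `missing_data_files` is hypothetical and is not part of this repository.

```python
from pathlib import Path


def missing_data_files(data_dir="./data", expected=("train.csv", "test.csv")):
    """Return the expected file names that are not present in data_dir."""
    root = Path(data_dir)
    return [name for name in expected if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_data_files()
    if missing:
        print("Missing files in ./data:", ", ".join(missing))
    else:
        print("Both CSV files found.")
```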

Set up the dataset

python main.py [-s [True]] [-d [data_path]]

--setup Set up the dataset for the first time

--data Data folder that contains data files

To train the model

python main.py [-t] [--data [data_path]] [--hparams [hyperparams]] [--epochs] [--learning_rate] [--batch_size]

--data Data folder that contains training and validation files

--train True when training

--hparams True when changing the hyperparameters

--epochs Number of epochs

--learning_rate Learning rate value

--batch_size Training/validation batch size
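For readers who want to see how options like these fit together, here is a sketch of an equivalent `argparse` parser. It is an illustration only, not the parser in main.py: the default values are hypothetical, and `--train` and `--hparams` are modelled as simple on/off switches, which is a simplification of the usage line above.

```python
import argparse


def build_parser():
    """Build an illustrative parser mirroring the training options listed above."""
    parser = argparse.ArgumentParser(description="Training options (sketch)")
    parser.add_argument("-t", "--train", action="store_true",
                        help="True when training")
    parser.add_argument("-d", "--data", type=str, default="./data",
                        help="Data folder with training and validation files")
    parser.add_argument("--hparams", action="store_true",
                        help="True when changing the hyperparameters")
    # The defaults below are placeholders, not the repository's actual values.
    parser.add_argument("--epochs", type=int, default=100,
                        help="Number of epochs")
    parser.add_argument("--learning_rate", type=float, default=0.005,
                        help="Learning rate value")
    parser.add_argument("--batch_size", type=int, default=128,
                        help="Training/validation batch size")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(args)
```

With this sketch, `--train --hparams --epochs 50` would yield `args.train` and `args.hparams` set to `True` and `args.epochs` set to `50`, with the remaining options falling back to their defaults.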

To validate the model

python visualize.py [-t] [-c] [--data [data_path]] [--model [model_path]]

--data Data folder that contains test images and test CSV file

--model Path to pretrained model

--test_cc Calculate the test accuracy

--cam Test the model in real time with a webcam connected via USB