In ICML 2021.

Sungryull Sohn¹²*,

Sungtae Lee³*,

Jongwook Choi¹,

Harm van Seijen⁴,

Mehdi Fatemi⁴,

Honglak Lee²¹

*: Equal Contributions

¹University of Michigan, ²LG AI Research, ³Yonsei University, ⁴Microsoft Research

This is the official implementation of our ICML 2021 paper, Shortest-Path Constrained Reinforcement Learning for Sparse Reward Tasks. To keep the code simple, we include only the DeepMind Lab environment. All algorithms used in the experiments (SPRL, ECO, ICM, PPO, Oracle) can be run with this code.

The code was tested on Linux only, with Python 3.6.

These installation steps are based on the episodic_curiosity repository from Google Research.

Clone this repository:

git clone https://github.com/srsohn/shortest-path-rl.git

cd shortest-path-rl

We need to use a modified version of DeepMind Lab:

Clone DeepMind Lab:

git clone https://github.com/deepmind/lab

cd lab

Apply our patch to DeepMind Lab:

git checkout 7b851dcbf6171fa184bf8a25bf2c87fe6d3f5380

git checkout -b modified_dmlab

git apply ../third_party/dmlab/dmlab_min_goal_distance.patch

Install dependencies of DMLab from deepmind_lab repository.

Right before building deepmind_lab:

- Replace the BUILD and WORKSPACE files in the lab directory with the ones in the changes_in_lab directory.

- Copy python.BUILD from the changes_in_lab directory to the lab directory.

Please make sure that:

- lab/python.BUILD has the correct path to Python. Please refer to changes_in_lab/python.BUILD.

- lab/WORKSPACE has the correct path to the system Python in its last code line (new_local_repository).
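To find candidate values for those paths, you can ask the interpreter itself. This is a minimal sketch, assuming that `python3` on your PATH is the Python 3.6 environment you intend to build against. Which of these paths each file expects is not spelled out here, so compare the output with changes_in_lab/python.BUILD before editing.

```shell
# Print the interpreter location, its C header directory, and its install prefix.
# Assumption: `python3` on PATH is the interpreter used for the DMLab build.
PY_BIN="$(command -v python3)"
PY_INCLUDE="$(python3 -c 'import sysconfig; print(sysconfig.get_paths()["include"])')"
PY_PREFIX="$(python3 -c 'import sys; print(sys.prefix)')"

echo "interpreter: ${PY_BIN}"
echo "headers:     ${PY_INCLUDE}"
echo "prefix:      ${PY_PREFIX}"
```

If you build inside a virtual environment or conda environment, run the snippet with that environment activated so the reported paths match it.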

Then build DeepMind Lab (in the lab/ directory):

bazel build -c opt //:deepmind_lab.so

Once you've installed DMLab dependencies, you'll need to run:

bazel build -c opt python/pip_package:build_pip_package

./bazel-bin/python/pip_package/build_pip_package /tmp/dmlab_pkg

pip install /tmp/dmlab_pkg/DeepMind_Lab-1.0-py3-none-any.whl --force-reinstall

Finally, install episodic curiosity and its pip dependencies:

cd shortest-path-rl

pip install --user -e .

Please refer to changes_in_lab/lab_install.txt for the summary of deepmind_lab & sprl installation.

To run the training code, go to the base directory and run one of the scripts below.

sh scripts/main_script/run_{eco,icm,oracle,ppo,sprl}.sh

seed_set controls the random seed and scenario_set denotes the environment to run.

Hyperparameters of the algorithms can be changed in the bash file in the scripts/main_script directory.
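The braces in the run command denote a choice of one script. Typed literally in a shell with brace expansion (such as bash), the line would expand to a single `sh` call with five paths, so only run_eco.sh would execute and the other paths would be passed to it as arguments. If you want to go through all five algorithms, a loop like the sketch below is one option. `DRY_RUN` and `run_all` are hypothetical names introduced for this example, not part of the repository.

```shell
# Run each algorithm's launch script in turn, from the repository base directory.
# DRY_RUN is a hypothetical switch for this sketch: when it is 1 (the default
# here), the commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-1}"

run_all() {
  for algo in eco icm oracle ppo sprl; do
    cmd="sh scripts/main_script/run_${algo}.sh"
    if [ "$DRY_RUN" = "1" ]; then
      echo "$cmd"
    else
      # Stop at the first script that fails.
      $cmd || return 1
    fi
  done
}

run_all
```

Set `DRY_RUN=0` to actually launch the scripts. The runs are sequential in this sketch, so with long training times you may prefer to start each script by hand on a separate machine or GPU.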

scripts/launcher_script.py is the main entry point.

Main flags:

| Flag | Description |
|---|---|
| --method | Solving method to use. Possible values: ppo, icm, eco, sprl, oracle |
| --scenario | Scenario to launch. Possible values: GoalSmall, ObjectMany, GoalLarge |
| --workdir | Directory where logs and checkpoints will be stored. |
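As an illustration of how these three flags fit together, the sketch below assembles a direct launcher command and prints it. Several things here are assumptions rather than documented behavior: that the launcher is started with `python` from the repository base directory, that the `--flag=value` form is accepted, and that the remaining hyperparameters have usable defaults. `/tmp/sprl_goal_small` is a placeholder directory. The scripts in scripts/main_script remain the documented way to start training.

```shell
# Assemble a launcher command from the three documented flags.
# Assumptions: invocation via `python` from the repository base directory,
# and a placeholder working directory under /tmp.
METHOD="sprl"            # one of: ppo, icm, eco, sprl, oracle
SCENARIO="GoalSmall"     # one of: GoalSmall, ObjectMany, GoalLarge
WORKDIR="/tmp/sprl_goal_small"

LAUNCH_CMD="python scripts/launcher_script.py --method=${METHOD} --scenario=${SCENARIO} --workdir=${WORKDIR}"
echo "${LAUNCH_CMD}"
```

Using a separate `--workdir` per method and scenario keeps logs and checkpoints from different runs apart.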

Training usually takes a couple of days. On a GTX1080 Titan X, the throughput (FPS) and VRAM usage are as follows.

| Algorithm | FPS | VRAM |
|---|---|---|
| ppo | ~ 900 | 1.4G |
| icm | ~ 500 | 4.5G |
| eco | ~ 200 | 4.5G |
| sprl | ~ 200 | 4.5G |
| oracle | ~ 800 | 1.4G |

Under the hood, launcher_script.py launches train_policy.py with the right hyperparameters. train_policy.py creates the environment and runs ppo2.py, where the transition rollouts take place.

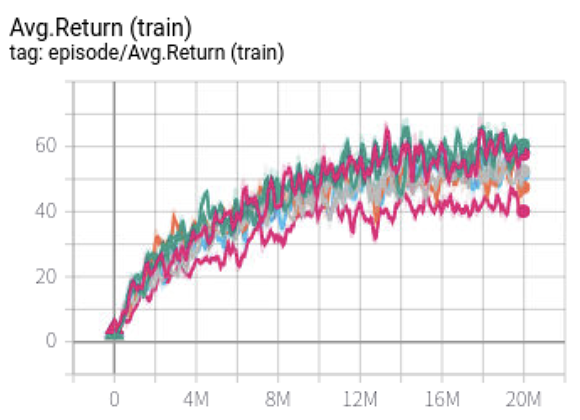

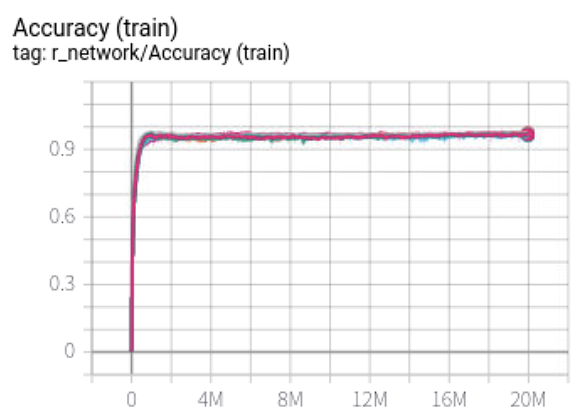

TensorFlow logs are saved in workdir/. Run TensorBoard to visualize the training logs.
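A typical TensorBoard invocation looks like the sketch below, which builds the command and prints it. `workdir` is a placeholder for whatever path you passed as `--workdir`, and the port is TensorBoard's usual default, written out explicitly.

```shell
# Point TensorBoard at the directory that was passed as --workdir during training.
# Assumption: `workdir` is a placeholder for that path.
LOGDIR="workdir"
TB_CMD="tensorboard --logdir ${LOGDIR} --port 6006"
echo "${TB_CMD}"
```

Run the printed command and open http://localhost:6006 in a browser. Pointing `--logdir` at a parent directory that holds several runs lets you compare them side by side.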

The logged quantities vary depending on the algorithm.

In sample_result/, sample training logs from tensorboard are available.

If you use this work, please cite:

@inproceedings{sohn2021shortest,
  title={Shortest-Path Constrained Reinforcement Learning for Sparse Reward Tasks},
  author={Sohn, Sungryull and Lee, Sungtae and Choi, Jongwook and Van Seijen, Harm H and Fatemi, Mehdi and Lee, Honglak},
  booktitle={International Conference on Machine Learning},
  pages={9780--9790},
  year={2021},
  organization={PMLR}
}

Apache License