Self-supervised Representation Learning from Videos for Facial Action Unit Detection, CVPR 2019 (oral)

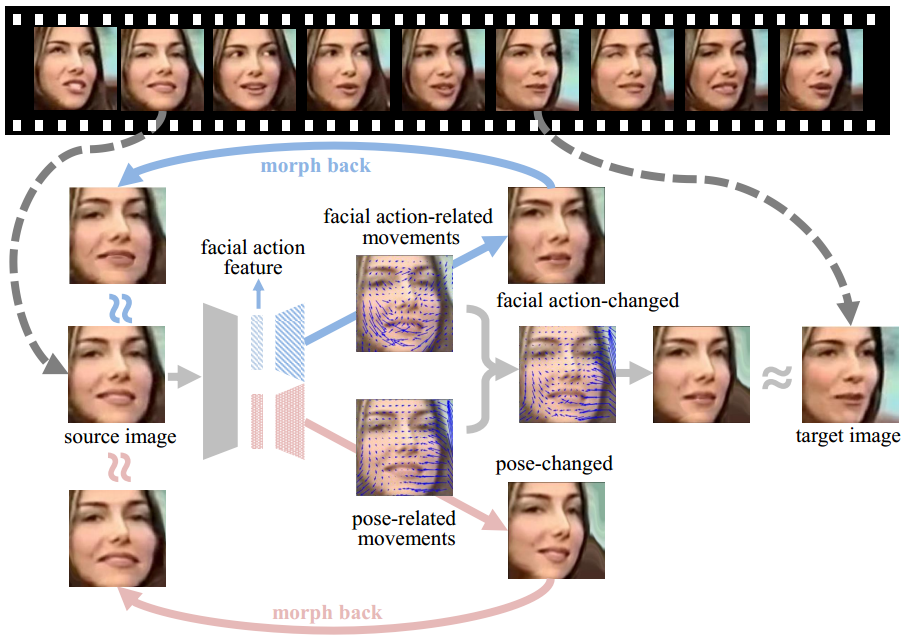

We propose a Twin-Cycle Autoencoder (TCAE) that learns, in a self-supervised manner, two embeddings: one encoding the movements of facial actions and the other encoding head motions.

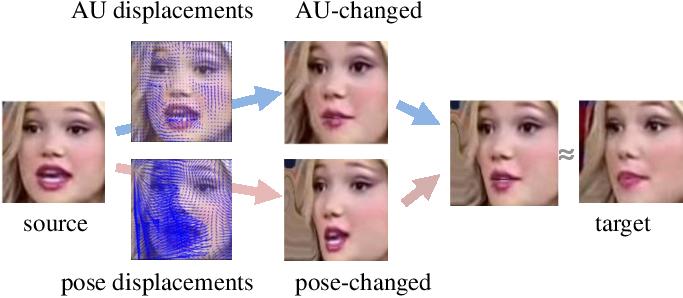

Given a source and a target facial image, TCAE is tasked with changing the facial action units (AUs) or the head pose of the source frame to match those of the target frame, by predicting the AU-related and pose-related movements, respectively.
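To make "predicting movements" concrete, the sketch below assumes each movement is a dense per-pixel displacement field `(dy, dx)` and applies it to the source image by backward bilinear warping, so every output pixel samples the source at its displaced location. This is a hypothetical, framework-free illustration in Python 3 (the repository itself targets Python 2.x and PyTorch); it is not the TCAE decoder, and the names `warp` and `bilinear_sample` are invented here.

```python
from typing import List, Tuple

Image = List[List[float]]                 # grayscale image, img[row][col]
Flow = List[List[Tuple[float, float]]]    # per-pixel (dy, dx) displacement


def bilinear_sample(img: Image, y: float, x: float) -> float:
    """Sample img at a real-valued (y, x), clamping coordinates to the border."""
    h, w = len(img), len(img[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(y), int(x)               # floor, valid because y, x >= 0 here
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0               # fractional offsets used as weights
    top = (1 - wx) * img[y0][x0] + wx * img[y0][x1]
    bottom = (1 - wx) * img[y1][x0] + wx * img[y1][x1]
    return (1 - wy) * top + wy * bottom


def warp(src: Image, flow: Flow) -> Image:
    """Backward warp: out[r][c] samples src at (r + dy, c + dx)."""
    return [
        [bilinear_sample(src, r + flow[r][c][0], c + flow[r][c][1])
         for c in range(len(src[0]))]
        for r in range(len(src))
    ]


# Hypothetical usage: move a tiny 2x3 "face" half a pixel to the left.
src = [[0.0, 1.0, 2.0], [3.0, 4.0, 5.0]]
half_pixel = [[(0.0, 0.5)] * 3 for _ in range(2)]
print(warp(src, half_pixel))  # [[0.5, 1.5, 2.0], [3.5, 4.5, 5.0]]
```

In a learned model the displacement field would be produced from the AU or pose embedding; here it is supplied by hand, and samples that fall outside the image are clamped to the border.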


The generated AU-changed and pose-changed faces are shown below:

Please refer to the original paper and supplementary file for more examples.

- Python 2.x

- PyTorch 0.4.1

- Download the training dataset: VoxCeleb1/2.

- Extract frames at 1 fps, detect and align the faces, and organize the face directories in the format `data/id09238/VqEJCd7pbgQ/0_folder/img_0_001.jpg`.

- Split the face images into training/validation/testing sets with `dataset_split.py`.

- Train TCAE with `self_supervised_train_TCAE.py`.
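The directory layout and split step above can be sketched as follows. This is not the repository's `dataset_split.py`: it assumes the `data/<identity>/<video>/<clip>/<frame>` layout shown above (treating `0_folder` as a per-clip folder is an assumption) and, as a hypothetical choice, assigns whole speaker identities to each split so the same person never appears in both training and testing. The 80/10/10 ratios and all function names are illustrative, and the code is Python 3.

```python
import random
from collections import defaultdict
from pathlib import PurePosixPath
from typing import Dict, List, NamedTuple, Sequence


class FacePath(NamedTuple):
    identity: str  # speaker ID, e.g. "id09238"
    video: str     # video ID, e.g. "VqEJCd7pbgQ"
    clip: str      # assumed per-clip folder, e.g. "0_folder"
    frame: str     # aligned face image, e.g. "img_0_001.jpg"


def parse_face_path(path: str) -> FacePath:
    """Split <root>/<identity>/<video>/<clip>/<frame> into its last four parts."""
    parts = PurePosixPath(path).parts
    if len(parts) < 5:
        raise ValueError(f"unexpected layout: {path}")
    return FacePath(*parts[-4:])


def split_by_identity(paths: Sequence[str],
                      ratios=(0.8, 0.1, 0.1),
                      seed: int = 0) -> Dict[str, List[str]]:
    """Hypothetical split: whole identities go to train/val/test, so no
    speaker appears in more than one split. Ratios apply to identities."""
    by_id: Dict[str, List[str]] = defaultdict(list)
    for p in paths:
        by_id[parse_face_path(p).identity].append(p)
    ids = sorted(by_id)                 # sort first so the shuffle is reproducible
    random.Random(seed).shuffle(ids)
    n_train = round(ratios[0] * len(ids))
    n_val = round(ratios[1] * len(ids))
    groups = {
        "train": ids[:n_train],
        "val": ids[n_train:n_train + n_val],
        "test": ids[n_train + n_val:],  # the remaining identities
    }
    return {name: [p for i in members for p in by_id[i]]
            for name, members in groups.items()}
```

Splitting by identity rather than by image is a design choice to avoid leakage between splits; a per-video or per-image split would be equally easy to express with the same parsed fields.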

The learned AU embedding from TCAE can be used for both AU detection and facial image retrieval.
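As an illustration of both uses, the sketch below treats each AU embedding as a plain list of floats. Retrieval ranks a gallery by cosine similarity to a query embedding, and detection applies one logistic unit per AU with a 0.5 threshold. The similarity measure and the linear head (`weights`, `biases`) are hypothetical stand-ins, not the evaluation protocol from the paper; in practice the head would be trained on labelled AU data.

```python
import math
from typing import Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query: Vector, gallery: Dict[str, Vector], k: int = 5) -> List[str]:
    """Return the keys of the k gallery embeddings most similar to the query."""
    ranked = sorted(gallery, key=lambda name: cosine(query, gallery[name]),
                    reverse=True)
    return ranked[:k]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def detect_aus(embedding: Vector, weights: Sequence[Vector],
               biases: Sequence[float], threshold: float = 0.5) -> List[bool]:
    """Multi-label AU detection: one hypothetical logistic unit per AU."""
    return [sigmoid(sum(wi * xi for wi, xi in zip(w, embedding)) + b) >= threshold
            for w, b in zip(weights, biases)]


# Toy usage with 2-D embeddings.
gallery = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [1.0, 1.0]}
print(retrieve([1.0, 0.1], gallery, k=2))                            # ['a', 'c']
print(detect_aus([1.0, 0.0], [[2.0, 0.0], [-2.0, 0.0]], [0.0, 0.0]))  # [True, False]
```

Because AUs can co-occur, detection is treated as independent binary decisions per AU rather than a single softmax over AUs.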

- Download the pretrained model and script from OneDrive.

- If you use the released model, align the facial images in the AU datasets with SeetaFace.

- If you train the model yourself, the facial images in VoxCeleb1/2 should be detected, aligned, and cropped, as is done in face recognition tasks.
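Face-recognition-style alignment typically maps a few detected landmarks (for example, eye centres, nose tip, and mouth corners) onto fixed template positions with a similarity transform, then warps and crops the image with that transform. The sketch below estimates only the transform, by closed-form least squares (scale, rotation, and translation, no reflection). The landmark set and template are inputs you supply; nothing here reproduces SeetaFace's actual alignment template or crop size, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def estimate_similarity(src: Sequence[Point], dst: Sequence[Point]):
    """Least-squares 2D similarity mapping src landmarks onto dst.

    Returns ((c, d), (tx, ty)) where the linear part is [[c, -d], [d, c]],
    i.e. c = s*cos(theta) and d = s*sin(theta)."""
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two matching point pairs")
    cx_s = sum(p[0] for p in src) / n     # source centroid
    cy_s = sum(p[1] for p in src) / n
    cx_d = sum(q[0] for q in dst) / n     # destination centroid
    cy_d = sum(q[1] for q in dst) / n
    a = b = denom = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - cx_s, py - cy_s, qx - cx_d, qy - cy_d
        a += px * qx + py * qy            # correlation -> s*cos(theta)
        b += px * qy - py * qx            # cross term  -> s*sin(theta)
        denom += px * px + py * py
    if denom == 0.0:
        raise ValueError("source points are all identical")
    c, d = a / denom, b / denom
    # Translation maps the source centroid onto the destination centroid.
    t = (cx_d - (c * cx_s - d * cy_s), cy_d - (d * cx_s + c * cy_s))
    return (c, d), t


def apply_similarity(cd: Tuple[float, float], t: Point,
                     pts: Sequence[Point]) -> List[Point]:
    """Apply x' = c*x - d*y + tx, y' = d*x + c*y + ty to each point."""
    c, d = cd
    return [(c * x - d * y + t[0], d * x + c * y + t[1]) for x, y in pts]
```

The estimated parameters form the 2x3 matrix `[[c, -d, tx], [d, c, ty]]`, which an image-warping routine such as OpenCV's `cv2.warpAffine` can apply to produce the aligned crop.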

@InProceedings{Li_2019_CVPR,
  author = {Li, Yong and Zeng, Jiabei and Shan, Shiguang and Chen, Xilin},
  title = {Self-Supervised Representation Learning From Videos for Facial Action Unit Detection},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2019}
}