| Name | Contact e-mail | Role |
|---|---|---|
| Razvan Itu | itu.razvan@gmail.com | Perception |
| Harry Wang | wangharr@gmail.com | Perception |
| Saurabh Bagalkar | sbagalka@asu.edu | Planning |
| Vijayakumar Krishnaswamy | kvijay.krish@gmail.com | Planning |
| Vilas Chitrakaran (Team Lead) | cvilas@gmail.com | Control |

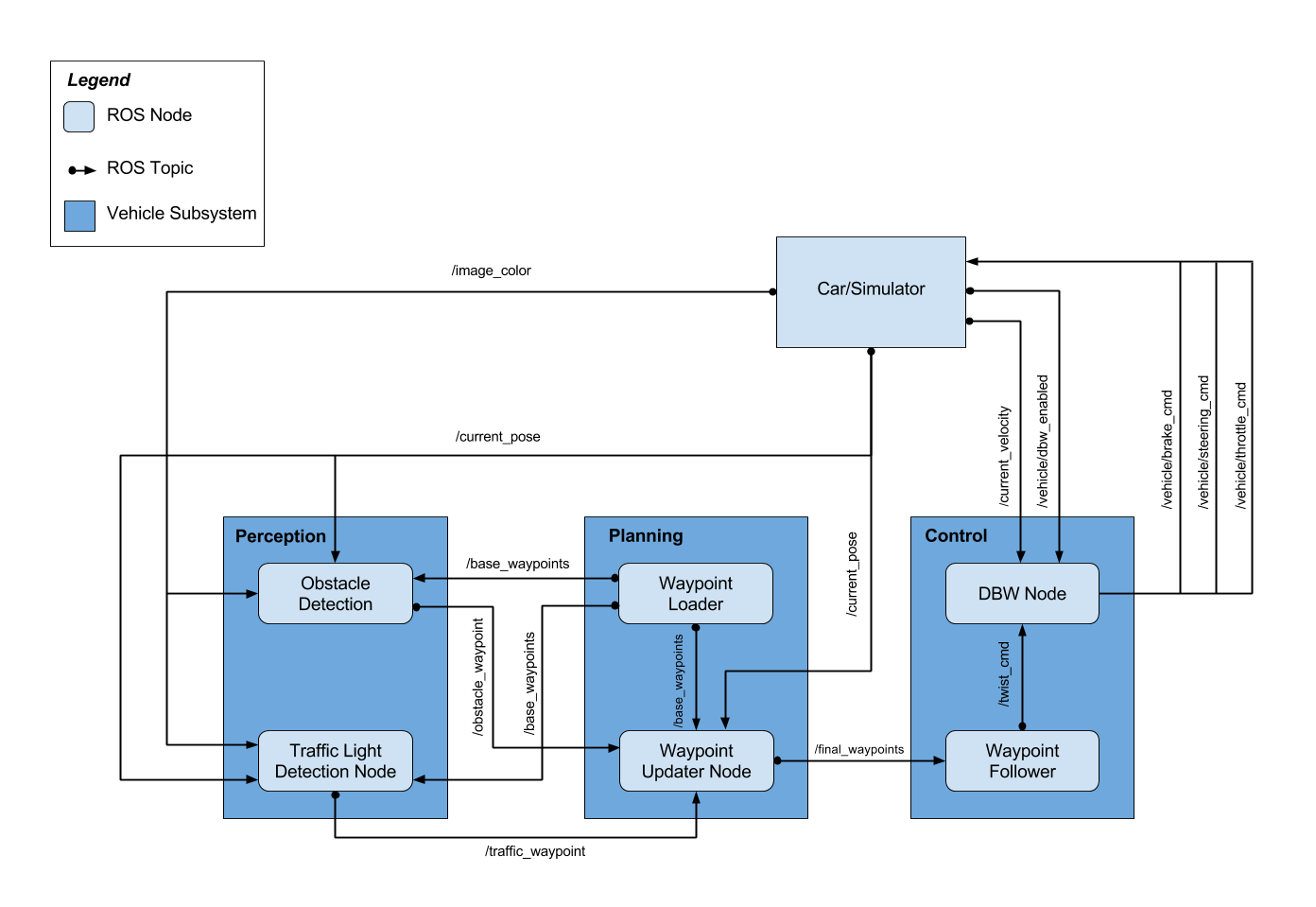

This project implements a basic pipeline for autonomous highway driving that obeys traffic lights along the way. The system architecture is as follows:

As a team we were able to get all the individual modules working on their own and wire them up correctly. However, we were unable to resolve latencies in the pipeline caused by the camera image data and its processing in the traffic light classifier. As a result, the light classification lags behind, and the simulated car does not stop immediately ahead of the lights. On our test machines, which do not have GPUs, the car eventually stops after a significant overshoot.

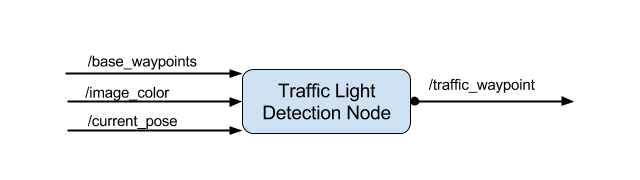

The traffic light detection logic is in the detector node (ros/src/tl_detector/tl_detector.py). The purpose of this node is to alert the vehicle when it is approaching a red light. Specifically, the detector node subscribes to the /current_pose and /base_waypoints topics and uses a global list of traffic light coordinates to determine whether the vehicle is approaching a traffic light, i.e. whether a light is less than 80 meters away. We believe this threshold is appropriate: at 42 mph (about 19 m/s), it gives the vehicle about 4 seconds to come to a complete stop if the traffic light is red.
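The range check described above can be sketched roughly as follows. This is a minimal illustration and not the code in tl_detector.py: the function names `approaching_light` and `time_to_reach` are hypothetical, positions are assumed to be plain (x, y) tuples in meters, and the sketch does not check whether a light is ahead of or behind the car.

```python
import math

APPROACH_RANGE_M = 80.0  # threshold quoted in the text


def approaching_light(car_xy, light_positions, max_range=APPROACH_RANGE_M):
    """Return (index, distance) of the closest light within max_range, else None.

    Hypothetical helper: uses straight-line distance only and ignores heading.
    """
    best = None
    for i, (lx, ly) in enumerate(light_positions):
        d = math.hypot(lx - car_xy[0], ly - car_xy[1])
        if d < max_range and (best is None or d < best[1]):
            best = (i, d)
    return best


def time_to_reach(distance_m, speed_mps):
    """Seconds needed to cover distance_m at a constant speed_mps."""
    return distance_m / speed_mps
```

At 19 m/s, `time_to_reach(80.0, 19.0)` evaluates to roughly 4.2 seconds, which matches the figure given above.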

The vehicle receives a steady stream of camera images from its front-facing camera. Once it has determined that the vehicle is approaching a traffic light, the detector node uses a classifier (ros/src/tl_detector/tl_classifier.py) to draw bounding boxes around the traffic lights in the image and determine their colors. This information is then published on the /traffic_waypoint topic, to which the waypoint updater node subscribes.

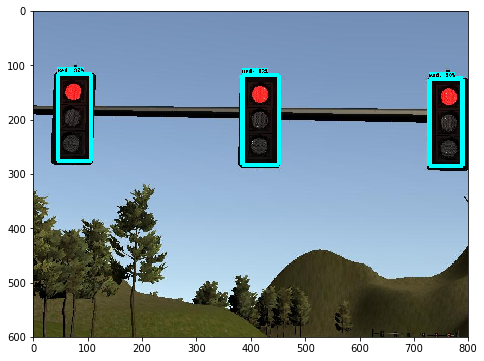

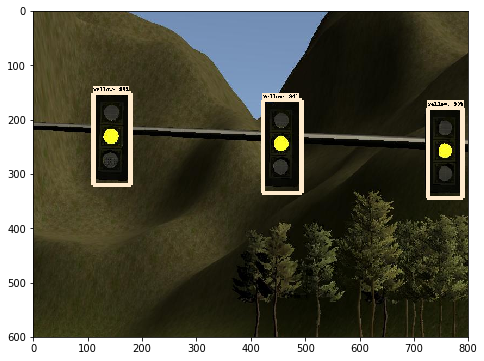

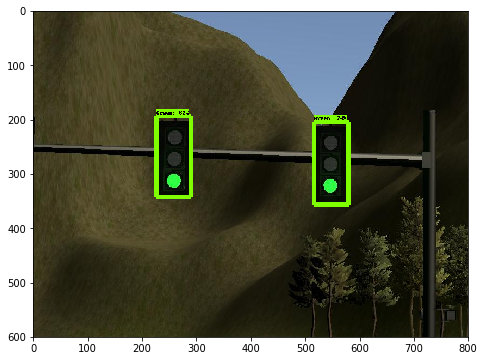

We used the TensorFlow Object Detection API to train the Faster R-CNN model to classify traffic light state. The classifier itself is implemented in tl_classifier.py. Here are some example results from the classifier.

| Red | Amber | Green |
|---|---|---|
| | | |
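One way to reduce a set of detections to a single light state is sketched below. This is an assumption-laden illustration, not the logic in tl_classifier.py: the label map `LABELS`, the score threshold, and the function name `light_state` are all hypothetical, and the inputs are assumed to be parallel lists of class IDs and confidence scores such as an object detector typically returns.

```python
# Hypothetical label map; the class IDs used by the trained model are not given here.
LABELS = {1: "green", 2: "red", 3: "amber"}
UNKNOWN = "unknown"


def light_state(classes, scores, min_score=0.5):
    """Return the label of the highest-scoring detection at or above min_score.

    Falls back to UNKNOWN when no detection is confident enough.
    """
    best_label, best_score = UNKNOWN, min_score
    for cls, score in zip(classes, scores):
        if score >= best_score and cls in LABELS:
            best_label, best_score = LABELS[cls], score
    return best_label
```

Returning an explicit unknown state lets the caller decide how to treat frames in which the detector is not confident.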

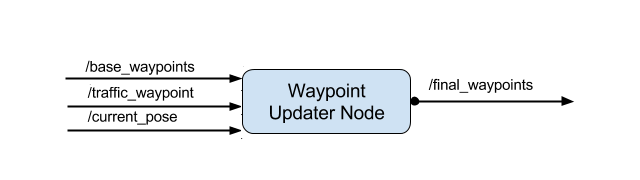

The purpose of this node is to publish a fixed number of waypoints ahead of the vehicle with the correct target velocities, which are set according to the state of upcoming traffic lights. This node subscribes to the /base_waypoints, /current_pose, and /traffic_waypoint topics, and publishes a list of waypoints ahead of the car with target velocities to the /final_waypoints topic as shown below:

The waypoint update function is implemented in the waypoint_updater.py file.
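The idea of assigning target velocities that bring the car to rest at a red light can be sketched as follows. This is not the code in waypoint_updater.py: the function name `target_velocities`, the constant-deceleration profile v = sqrt(2·a·d), and the default deceleration are assumptions made for illustration. Waypoints are represented only by their cumulative distance along the path, in meters.

```python
import math


def target_velocities(arc_lengths, cruise_speed, stop_index=None, max_decel=1.0):
    """Target speed (m/s) for each waypoint ahead of the car.

    arc_lengths: cumulative path distance of each waypoint, in meters.
    stop_index: index of the waypoint to stop at, or None to keep cruising.
    max_decel: assumed constant deceleration in m/s^2 (hypothetical default).
    """
    if stop_index is None:
        return [cruise_speed] * len(arc_lengths)
    stop_s = arc_lengths[stop_index]
    velocities = []
    for s in arc_lengths:
        # Distance left before the stop point; zero at or beyond it.
        remaining = max(stop_s - s, 0.0)
        v = math.sqrt(2.0 * max_decel * remaining)
        velocities.append(min(v, cruise_speed))
    return velocities
```

Capping each value at the cruise speed means the profile only takes effect once the car is close enough to the stop point for braking to be necessary.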

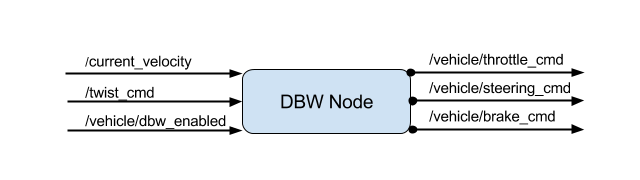

The controller module sends desired steering angle, throttle and brake commands to the car.

The control logic is implemented in dbw_node.py. A standard PID controller was implemented in twist_controller.py for generating throttle and brake commands, and a pre-existing steering controller provided in the repo was used for generating steering commands. Throttle and steering angle limits set by parameter files are obeyed by the controller.

This node also correctly handles toggling between manual and auto modes.
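A PID controller of the kind described above might look like the following minimal sketch. It is not the code in twist_controller.py: the class and function names, the gains, the output limits, and the simple anti-windup rule are all assumptions. The `reset` method illustrates one common way to handle a switch to manual mode, namely clearing the accumulated state so that it does not carry over when auto mode resumes.

```python
class PID:
    """Minimal PID controller with output clamping (illustrative only)."""

    def __init__(self, kp, ki, kd, out_min=float("-inf"), out_max=float("inf")):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_min, self.out_max = out_min, out_max
        self.reset()

    def reset(self):
        """Clear accumulated state, e.g. when the driver takes manual control."""
        self.integral = 0.0
        self.last_error = 0.0

    def step(self, error, dt):
        """Return the clamped control output for the given error and time step."""
        integral = self.integral + error * dt
        derivative = (error - self.last_error) / dt
        raw = self.kp * error + self.ki * integral + self.kd * derivative
        out = max(self.out_min, min(self.out_max, raw))
        # Simple anti-windup: keep the new integral only if the output is not saturated.
        if out == raw:
            self.integral = integral
        self.last_error = error
        return out


def split_command(u, max_throttle=1.0):
    """Map a signed control output to (throttle, brake); hypothetical helper."""
    if u > 0.0:
        return min(u, max_throttle), 0.0
    return 0.0, -u
```

Here the error would be the difference between the target and current velocity; a positive output is treated as throttle and a negative one as a braking request.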

This is the project repo for the final project of the Udacity Self-Driving Car Nanodegree: Programming a Real Self-Driving Car. For more information about the project, see the project introduction here.

Please use one of the two installation options, either native or docker installation.

- Be sure that your workstation is running Ubuntu 16.04 Xenial Xerus or Ubuntu 14.04 Trusty Tahir. Ubuntu downloads can be found here.

- If using a Virtual Machine to install Ubuntu, use the following configuration as minimum:

  - 2 CPU

  - 2 GB system memory

  - 25 GB of free hard drive space

  The Udacity provided virtual machine has ROS and Dataspeed DBW already installed, so you can skip the next two steps if you are using this.

- Follow these instructions to install ROS

  - ROS Kinetic if you have Ubuntu 16.04.

  - ROS Indigo if you have Ubuntu 14.04.

- Install Dataspeed DBW

  - Use this option to install the SDK on a workstation that already has ROS installed: One Line SDK Install (binary)

- Download the Udacity Simulator.

Build the docker container

    docker build . -t capstone

Run the docker file

    docker run -p 4567:4567 -v $PWD:/capstone -v /tmp/log:/root/.ros/ --rm -it capstone

To set up port forwarding, please refer to the instructions from term 2

- Clone the project repository

      git clone https://github.com/udacity/CarND-Capstone.git

- Install python dependencies

      cd CarND-Capstone
      pip install -r requirements.txt

- Make and run styx

      cd ros
      catkin_make
      source devel/setup.sh
      roslaunch launch/styx.launch

- Run the simulator

- Download training bag that was recorded on the Udacity self-driving car.

- Unzip the file

      unzip traffic_light_bag_file.zip

- Play the bag file

      rosbag play -l traffic_light_bag_file/traffic_light_training.bag

- Launch your project in site mode

      cd CarND-Capstone/ros
      roslaunch launch/site.launch

- Confirm that traffic light detection works on real life images