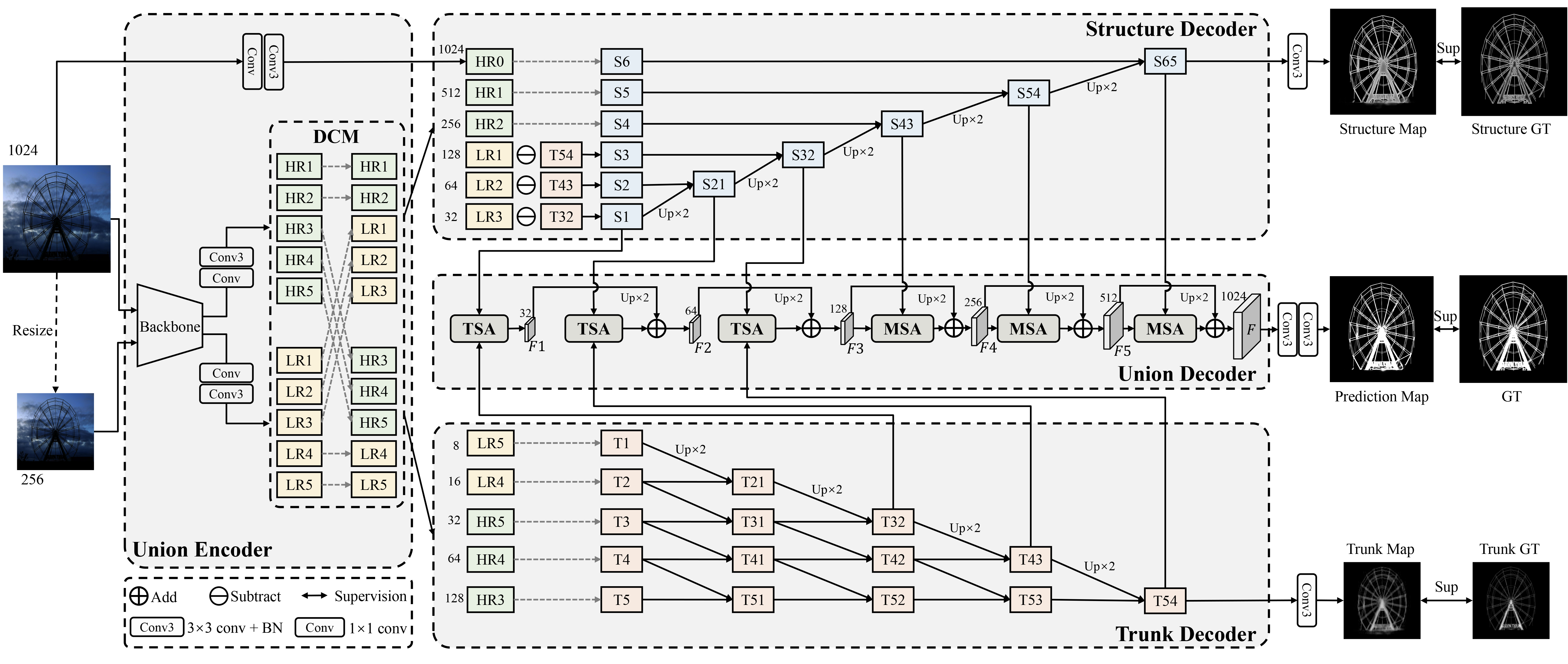

Official implementation of the ACM-MM 2023 paper "Unite-Divide-Unite: Joint Boosting Trunk and Structure for High-accuracy Dichotomous Image Segmentation"

Jialun Pei, Zhangjun Zhou, Yueming Jin, He Tang, and Pheng-Ann Heng

Contact: jialunpei@cuhk.edu.hk, hetang@hust.edu.cn

- Linux with Python ≥ 3.8

- PyTorch ≥ 1.7 and a torchvision version that matches the PyTorch installation.

- OpenCV

- NumPy

- Apex
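A quick way to confirm the requirements above before training is sketched below. It is an illustration, not part of the repository: `check_environment`, `version_at_least` and `parse_version` are hypothetical helpers, module presence is checked with `importlib.util.find_spec` (which locates a module without importing it), and the version parser is deliberately simplified (it keeps only the leading numeric release segment, so pre-release ordering is ignored).

```python
import importlib.util
import sys


def parse_version(text: str) -> tuple:
    """Turn '1.13.1+cu117' into (1, 13, 1).

    Simplification: local suffixes such as '+cu117' and pre-release tags
    such as 'a0' are dropped rather than ordered.
    """
    release = text.split("+")[0]
    parts = []
    for piece in release.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def version_at_least(installed: str, required: str) -> bool:
    """Compare two version strings by their numeric release segments."""
    return parse_version(installed) >= parse_version(required)


def check_environment() -> dict:
    """Return a {requirement: satisfied} report for the listed requirements."""
    report = {
        "linux": sys.platform.startswith("linux"),
        "python>=3.8": sys.version_info >= (3, 8),
    }
    # cv2 is OpenCV's import name; apex is NVIDIA Apex.
    for module in ("torch", "torchvision", "cv2", "numpy", "apex"):
        report[module] = importlib.util.find_spec(module) is not None
    if report["torch"]:
        import torch  # imported lazily so the check still runs without PyTorch
        report["torch>=1.7"] = version_at_least(torch.__version__, "1.7")
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:12s} {'OK' if ok else 'MISSING / TOO OLD'}")
```

Running it prints one line per requirement; anything marked `MISSING / TOO OLD` should be installed or upgraded before running `./train.sh`.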

- Download the datasets and put them in the same folder. The dataset mappers expect the folder names below, so do not rename them; the structure should be:

```
DATASET_ROOT/
└── DIS5K
    ├── DIS-TR
    │   ├── im
    │   ├── gt
    │   ├── trunk-origin
    │   └── struct-origin
    ├── DIS-VD
    │   ├── im
    │   └── gt
    ├── DIS-TE1
    │   ├── im
    │   └── gt
    ├── DIS-TE2
    │   ├── im
    │   └── gt
    ├── DIS-TE3
    │   ├── im
    │   └── gt
    └── DIS-TE4
        ├── im
        └── gt
```
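The `trunk-origin` and `struct-origin` folders hold the ground truth decomposed into a trunk map and a structure map, which the repository generates with `utils/utils.py`. As a rough illustration only, the sketch below assumes a distance-transform-based split in the spirit of label decoupling (as in LDF): the trunk is the Euclidean distance to the background inside the object, normalized to [0, 1], and the structure is the remainder `gt - trunk`, which is largest along boundaries and on thin parts. `split_trunk_structure` is a hypothetical function, and the repository's script may use a different formulation.

```python
import numpy as np
from scipy import ndimage


def split_trunk_structure(gt_u8: np.ndarray):
    """Hypothetical split of a uint8 GT mask into float trunk/structure maps in [0, 1].

    Assumption (not taken from UDUN's utils.py): trunk = normalized distance
    transform of the foreground, structure = mask - trunk.
    """
    mask = (gt_u8 > 127).astype(np.float64)
    # distance_transform_edt assigns each nonzero pixel its distance to the nearest zero pixel.
    dist = ndimage.distance_transform_edt(mask)
    peak = dist.max()
    trunk = dist / peak if peak > 0 else dist  # 1.0 at the object's innermost pixel(s)
    structure = mask - trunk                    # high near edges and on thin parts, 0 outside
    return trunk, structure


if __name__ == "__main__":
    gt = np.zeros((9, 9), dtype=np.uint8)
    gt[2:7, 2:7] = 255  # a 5x5 square object
    trunk, structure = split_trunk_structure(gt)
    print(np.round(trunk[4], 2))
    print(np.round(structure[4], 2))
```

To write the maps to disk in the layout above, they could be scaled back to 8 bits, e.g. `cv2.imwrite(path, (trunk * 255).astype(np.uint8))`.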

- Download the pre-trained weights into `UDUN-master/pre`.

| Model | UDUN pretrain weights | $F_\beta^{max}$ ↑ | MAE ↓ | HCE ↓ |
|---|---|---|---|---|
| ResNet-18 | UDUN-R18 | 0.807 | 0.065 | 1009 |
| ResNet-34 | UDUN-R34 | 0.818 | 0.060 | 999 |
| ResNet-50 | UDUN-R50 | 0.831 | 0.057 | 977 |

Download the trained UDUN model weights listed in the table and store them in `UDUN-master/model`.

Visual results of our UDUN with a ResNet-50 backbone on Overall DIS-TE.

Visual results of other state-of-the-art methods on Overall DIS-TE.

- To train UDUN on a single GPU, run the following command; the trained models will be saved in the `savePath` folder. Modify `datapath` if you want to train on your own datasets.

```
./train.sh
```

- To test UDUN on DIS5K, run the following command; the prediction maps will be saved in the `DIS5K_Pre` folder.

```
python3 test.py
```

- To evaluate the predicted results:

```
cd metrics
python3 test_metrics.py
python3 hce_metric_main.py
```
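For reference, the sketch below shows the standard definitions of two common DIS metrics, MAE and the maximum F-measure, for a single prediction/ground-truth pair. It is not the code in `metrics/test_metrics.py`, whose preprocessing may differ, and it does not compute HCE. Assumptions: both maps are 8-bit grayscale arrays of the same shape, the GT is binarized at 127, $\beta^2 = 0.3$ (the usual convention), and the F-measure is maximized over all 256 thresholds. `mae` and `max_f_measure` are hypothetical names.

```python
import numpy as np


def mae(pred_u8: np.ndarray, gt_u8: np.ndarray) -> float:
    """Mean absolute error between a prediction scaled to [0, 1] and the binary GT."""
    pred = pred_u8.astype(np.float64) / 255.0
    gt = (gt_u8 > 127).astype(np.float64)
    return float(np.abs(pred - gt).mean())


def max_f_measure(pred_u8: np.ndarray, gt_u8: np.ndarray, beta2: float = 0.3) -> float:
    """Maximum F-measure over the 256 thresholds of an 8-bit prediction map."""
    gt = gt_u8 > 127
    num_pos = int(gt.sum())
    best = 0.0
    for t in range(256):
        binary = pred_u8 >= t  # foreground wherever the score reaches the threshold
        tp = int(np.logical_and(binary, gt).sum())
        num_pred = int(binary.sum())
        precision = tp / num_pred if num_pred else 0.0
        recall = tp / num_pos if num_pos else 0.0
        denom = beta2 * precision + recall
        f = (1 + beta2) * precision * recall / denom if denom else 0.0
        best = max(best, f)
    return best


if __name__ == "__main__":
    gt = np.zeros((4, 4), dtype=np.uint8)
    gt[:2] = 255
    print(mae(gt, gt), max_f_measure(gt, gt))  # 0.0 1.0
```

Real maps can be loaded with `cv2.imread(path, cv2.IMREAD_GRAYSCALE)`; dataset-level scores are then typically the mean of the per-image values.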

- To split the ground truth into trunk maps and structure maps, run the following command; the maps will be saved into the `trunk-origin` and `struct-origin` folders under `DIS5K/DIS-TR`.

```
cd utils
python3 utils.py
```

This work is based on:

Thanks for their great work!

If this helps you, please cite this work:

```bibtex
@inproceedings{pei2023udun,
  title={Unite-Divide-Unite: Joint Boosting Trunk and Structure for High-accuracy Dichotomous Image Segmentation},
  author={Pei, Jialun and Zhou, Zhangjun and Jin, Yueming and Tang, He and Heng, Pheng-Ann},
  booktitle={Proceedings of the 31st ACM International Conference on Multimedia},
  pages={1--10},
  year={2023},
}
```