lightly's backend - receipt to text 📉

OCR can be found in /ocr, database/network controller can be found in /ingress

- recommends `pipenv` for virtualenv; refer to pypa/pipenv for installation
- python >=3.7
- built with pytorch

- build local docker image with `[sudo] docker build [--gpus=all] -f [PATH of Dockerfile] -t aar0npham/lightly-ocr:latest ocr`
- `--gpus=all` requires nvidia-docker

- for

- to test the image after the build, run `[sudo] docker run -d -p 5000:5000 aar0npham/lightly-ocr:latest`
- refer to @66171c80 for MORAN
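
A quick way to confirm that the container started by `docker run -d -p 5000:5000` is accepting connections is to probe the published port. The sketch below uses only the standard library; `port_is_open` is a hypothetical helper name, not part of this repository, and it only checks that something listens on the port, not that the OCR server behaves correctly.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within `timeout` seconds."""
    try:
        # create_connection raises OSError (refused, timed out, unreachable) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

For the command above, `port_is_open("localhost", 5000)` should return `True` once the container is up.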

- run `bash scripts/download_model.sh` to get the pretrained model (included in docker images)
- to test the model run: `python ocr/pipeline.py --img [IM_PATH]`
- to train your own model, refer to here. Training loops are located in `ocr/train/`

- make sure the repository is up to date with `git pull origin master`
- create a new branch BEFORE working with `git checkout -b feats-branch_name`, where `feats` is the feature you are working on and `branch_name` is the directory containing that feature, e.g. `git checkout -b YOLO-ocr` means you are working on `YOLO` inside `ocr`
- make your changes as you wish
- `git status` to show your changes, followed by `git add .` to stage them
- commit these changes with `git commit -m "describe what you do here"`

if you have more than one commit you should rebase/squash small commits into one

- `git status` to show how many commits your branch is ahead by: `Your branch is ahead of 'origin/master' by n commit.` where `n` is the number of your commits
- `git rebase -i HEAD~n` to rewrite those commits, REMEMBER `-i`
- once you enter the interactive shell, `pick` your first commit and `squash` all the commits after it
- after saving and exiting, edit your commit message in the new window that opens and describe what you did
- more information here

- push these changes with `git push origin feats-branch_name`; use `git branch` to check which branch you are on
- then make a pull-request on github!
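
The squash step in the workflow above depends on reading `n` from the `git status` message. As a rough sketch, the value can be extracted and turned into the matching rebase command like this; `commits_ahead` and `squash_command` are hypothetical helper names, and the regular expression assumes git's English message format.

```python
import re
from typing import Optional

# Matches git's message, e.g. "Your branch is ahead of 'origin/master' by 3 commits."
AHEAD_RE = re.compile(r"Your branch is ahead of '[^']+' by (\d+) commits?")


def commits_ahead(status_output: str) -> Optional[int]:
    """Return n from the "ahead by n commit(s)" line of `git status`, or None if absent."""
    match = AHEAD_RE.search(status_output)
    return int(match.group(1)) if match else None


def squash_command(status_output: str) -> Optional[str]:
    """Build the interactive rebase command, or None when there is nothing to squash."""
    n = commits_ahead(status_output)
    if n is None or n < 2:
        return None
    return f"git rebase -i HEAD~{n}"
```

With fewer than two commits ahead there is nothing to squash, so the helper returns `None` instead of a command.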

overview in src as follows:

./

├── save_models/ # location for model save

├── notebook/ # contains playground for testing

├── modules/ # contains core file for setting up models

├── train/ # code to train specific model

├── test/ # contains unit testing file

├── tools/ # contains tools to generate dataset/image processing etc.

├── Dockerfile # Dockerfile for creating container

├── requirements.txt # contains modules used for import

├── requirements-dev.txt # contains modules that are not included in the docker container

├── config.yml # config.yml

├── convert.py # convert model to .onnx file format

├── model.py # contains model constructed from `modules`

├── net.py # contains detector and recognizer segment of OCR

├── server.py # run basic server with `flask`

├── pipeline.py # end-to-end OCR

└── torch2onnx.py # conversion to .onnx (future use with onnx.js) _WIP_

General

- improve runtime (concurrency)

- define type for python function

- custom ops for

torch.nn.functional.grid_sample, refers to this issues. Notes and fixes are in here -

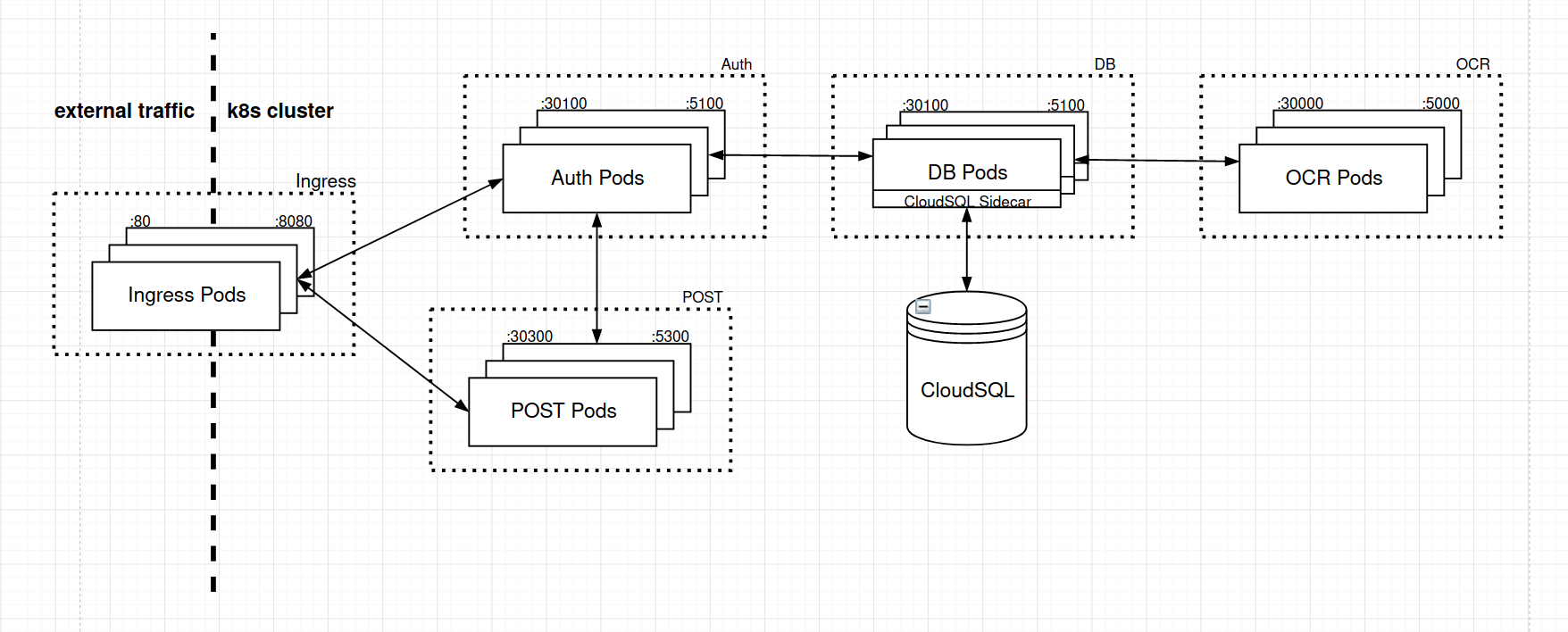

ingress controller -

rename state dict -

→ fixes CircleCI config -

add docstring, fixestoo-many-locals -

updates save weight to google drive (for local testing) -

complete__init__.py -

added Dockerfile/CircleCI

CRAFT

- add `unit_test`
- includes training loop (under construction)

CRNN

- add `unit_test`
- process ICDAR2019 for eval sets in conjunction with MJSynth val data ⇒ reduce biases
- fixes `batch_first` for AttentionCell in `sequence.py`
- transfer trained weight to fit with the model
- fix image padding issues with `eval.py`
- creates a general dataset and generator function for both recognition models
- database parsing for training loop
- FIXME: gradient vanishing when training
- generates logs for each training session
- add options for continue training
- modules incompatible shapes
- create lmdb as dataset
- added `generator.py` to generate lmdb
- merges `valuation_fn` into `train.py`

CRAFT

paper | original implementation

architecture: VGG16-UNet as backbone, with UpConv blocks returning region/affinity scores

- adopt from Faster R-CNN

CRNN

paper | original implementation

NOTES:

- run `ocr/tools/generator.py` to create the dataset, `ocr/train/crnn.py` to train the model
- model is under `model.py`

architecture: 4-stage CRNN, which includes:

- TPS based STN for image rectification

- ResNet50 for feature extractor

- Bidirectional LSTM for sequence modeling

- either CTC / Attention layer for sequence prediction
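
To illustrate the CTC option of the sequence prediction stage, here is a minimal sketch of greedy CTC decoding in plain Python: take the best class per frame, merge consecutive repeats, then drop blanks. It is not taken from this repository; `ctc_greedy_decode`, the `charset` argument, and the choice of index 0 as the blank symbol are assumptions made for the example.

```python
from typing import List, Sequence

BLANK = 0  # assumption: class index 0 is the CTC blank symbol


def ctc_greedy_decode(frame_scores: Sequence[Sequence[float]], charset: str) -> str:
    """Greedy CTC decoding: argmax per frame, merge repeats, drop blanks.

    frame_scores[t][k] is the score of class k at time step t;
    class k > 0 maps to charset[k - 1].
    """
    # Best class index for every time step
    best_path = [max(range(len(scores)), key=scores.__getitem__) for scores in frame_scores]
    decoded: List[str] = []
    previous = BLANK
    for index in best_path:
        # Emit a character only when the class changes and is not the blank
        if index != previous and index != BLANK:
            decoded.append(charset[index - 1])
        previous = index
    return "".join(decoded)
```

A blank between two identical classes is what lets a doubled letter survive: the best path `a a _ a b b` decodes to `aab`, not `ab`.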