If you’ve always wanted to learn how AI works, this course is for you. It will guide you through the basics of machine learning and show how it can be applied in real-world scenarios. No prior machine learning knowledge is required, but you’ll need a solid grasp of Python programming.

We’ll start with simple examples and explain step by step how to train machine learning models to make predictions. Then we’ll introduce neural networks, the more advanced models that drive recent AI advances. We’ll also cover models like ChatGPT, showing how they are trained and how to build applications with them.

By the end of the course, you'll have practical skills and a solid foundation in machine learning, ready to create your own projects.

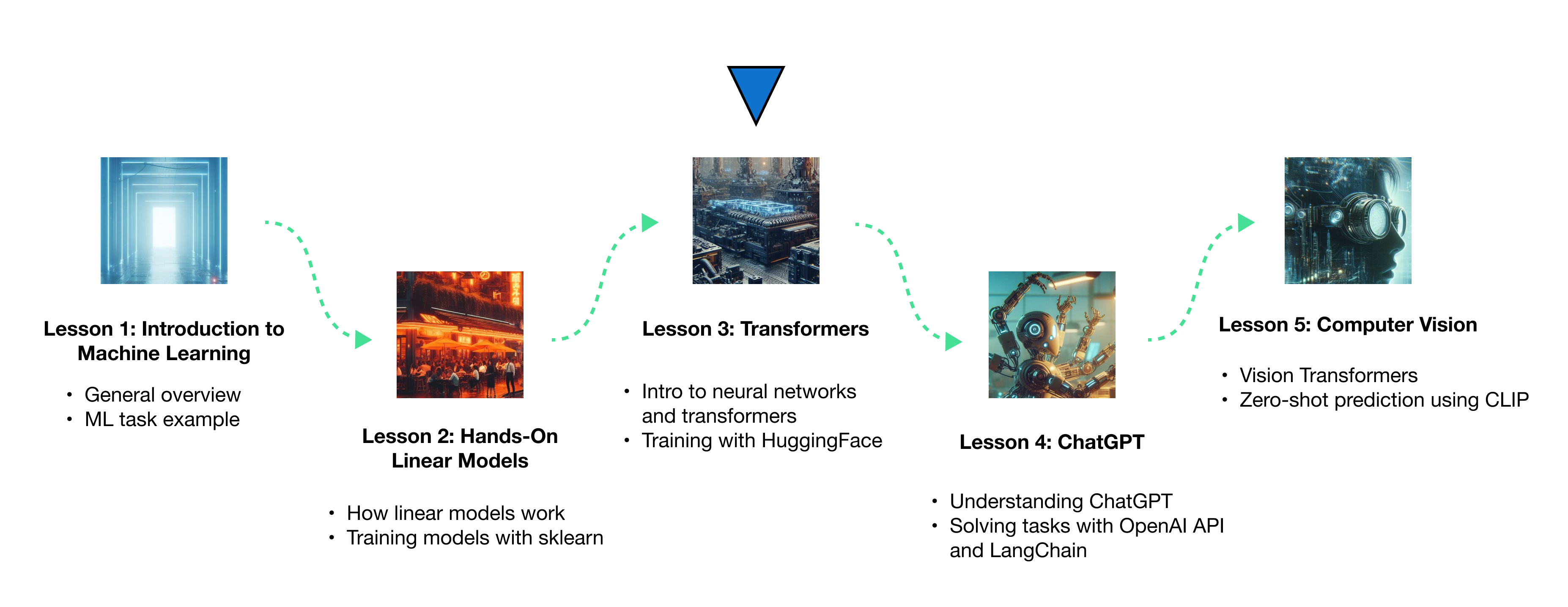

In this course, we’ll guide you through five clear and straightforward notebooks that introduce you to cutting-edge concepts. Each notebook has two versions:

- A basic version with several simple coding tasks that help you get a grasp of the material.

- A version with solutions, where all the tasks are solved, so you can consult it if you get stuck or want to check your work.

- Basic machine learning principles

- Linear classifier

- Transformers for text and images

- ChatGPT architecture

- CLIP model architecture

- Hugging Face libraries: datasets, transformers

- OpenAI API, LangChain

- General principles of machine learning

- Overview of different areas within machine learning

- Introduction to text classification using a linear classifier

- Using Hugging Face datasets

- Bag-of-words model and text vectorization with scikit-learn

- Math behind a multiclass linear classifier

- Gradient descent for parameter optimization

- Training a linear model with scikit-learn

- Basics of neural networks

- Text tokenization and embeddings

- Attention mechanism in neural networks

- Transformer architecture

- Stochastic gradient descent for neural network training

- Training and fine-tuning models with Hugging Face transformers

- Pre-training the base GPT model with self-supervised learning

- ChatGPT fine-tuning and alignment training

- In-context learning with ChatGPT

- Using the OpenAI API for text classification

- Building applications with large language models using LangChain

- Basics of image classification

- Vision transformer architecture

- Principles of transfer learning

- Training and fine-tuning vision transformers

- CLIP model architecture

- Zero-shot image classification with CLIP
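
To give a taste of the early topics listed above (bag-of-words vectorization, a multiclass linear classifier, and gradient descent), here is a minimal sketch in plain Python. It is not taken from the course notebooks, which use scikit-learn for these steps; the toy dataset, the function names, and the hyperparameters are all made up for illustration.

```python
import math
from collections import Counter

# Toy, made-up training data (an assumption for this sketch, not course material).
TRAIN = [
    ("the team won the match", "sports"),
    ("a great goal in the final match", "sports"),
    ("the player scored a goal", "sports"),
    ("new phone with a fast chip", "tech"),
    ("the laptop has a fast processor", "tech"),
    ("software update for the phone", "tech"),
]

def build_vocab(texts):
    """Map every distinct word to a column index."""
    words = sorted({w for t in texts for w in t.split()})
    return {w: i for i, w in enumerate(words)}

def vectorize(text, vocab):
    """Bag-of-words: count how often each vocabulary word occurs in the text."""
    vec = [0.0] * len(vocab)
    for word, count in Counter(text.split()).items():
        if word in vocab:  # words outside the vocabulary are ignored
            vec[vocab[word]] = float(count)
    return vec

def softmax(scores):
    """Turn raw class scores into probabilities (shifted by the max for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict_proba(x, W, b):
    """Linear classifier: one weight row and one bias per class, then softmax."""
    scores = [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]
    return softmax(scores)

def train(X, y, n_classes, lr=0.1, epochs=200):
    """Full-batch gradient descent on the mean cross-entropy loss."""
    n, n_features = len(X), len(X[0])
    W = [[0.0] * n_features for _ in range(n_classes)]
    b = [0.0] * n_classes
    losses = []
    for _ in range(epochs):
        grad_W = [[0.0] * n_features for _ in range(n_classes)]
        grad_b = [0.0] * n_classes
        loss = 0.0
        for x, target in zip(X, y):
            p = predict_proba(x, W, b)
            loss -= math.log(p[target])
            for k in range(n_classes):
                # Gradient of cross-entropy w.r.t. score k: p_k - 1[k == target]
                err = p[k] - (1.0 if k == target else 0.0)
                grad_b[k] += err
                for j, xj in enumerate(x):
                    grad_W[k][j] += err * xj
        for k in range(n_classes):
            b[k] -= lr * grad_b[k] / n
            for j in range(n_features):
                W[k][j] -= lr * grad_W[k][j] / n
        losses.append(loss / n)  # loss measured before this epoch's update
    return W, b, losses

texts = [t for t, _ in TRAIN]
labels = sorted({label for _, label in TRAIN})
vocab = build_vocab(texts)
X = [vectorize(t, vocab) for t in texts]
y = [labels.index(label) for _, label in TRAIN]
W, b, losses = train(X, y, n_classes=len(labels))

def classify(text):
    """Return the most probable label for a new text."""
    probs = predict_proba(vectorize(text, vocab), W, b)
    return labels[probs.index(max(probs))]

print("first and last training loss:", losses[0], losses[-1])
print(classify("fast phone update"))
```

In practice a library vectorizer and classifier would replace the hand-written loops; writing them out here only makes each step (counting words, scoring classes, following the gradient) visible.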