The official implementation of the ICCV 2023 paper "Spatio-Temporal Domain Awareness for Multi-Agent Collaborative Perception".

Spatio-Temporal Domain Awareness for Multi-Agent Collaborative Perception,

Kun Yang*, Dingkang Yang*, Jingyu Zhang, Mingcheng Li, Yang Liu, Jing Liu, Hanqi Wang, Peng Sun, Liang Song

Accepted by ICCV 2023

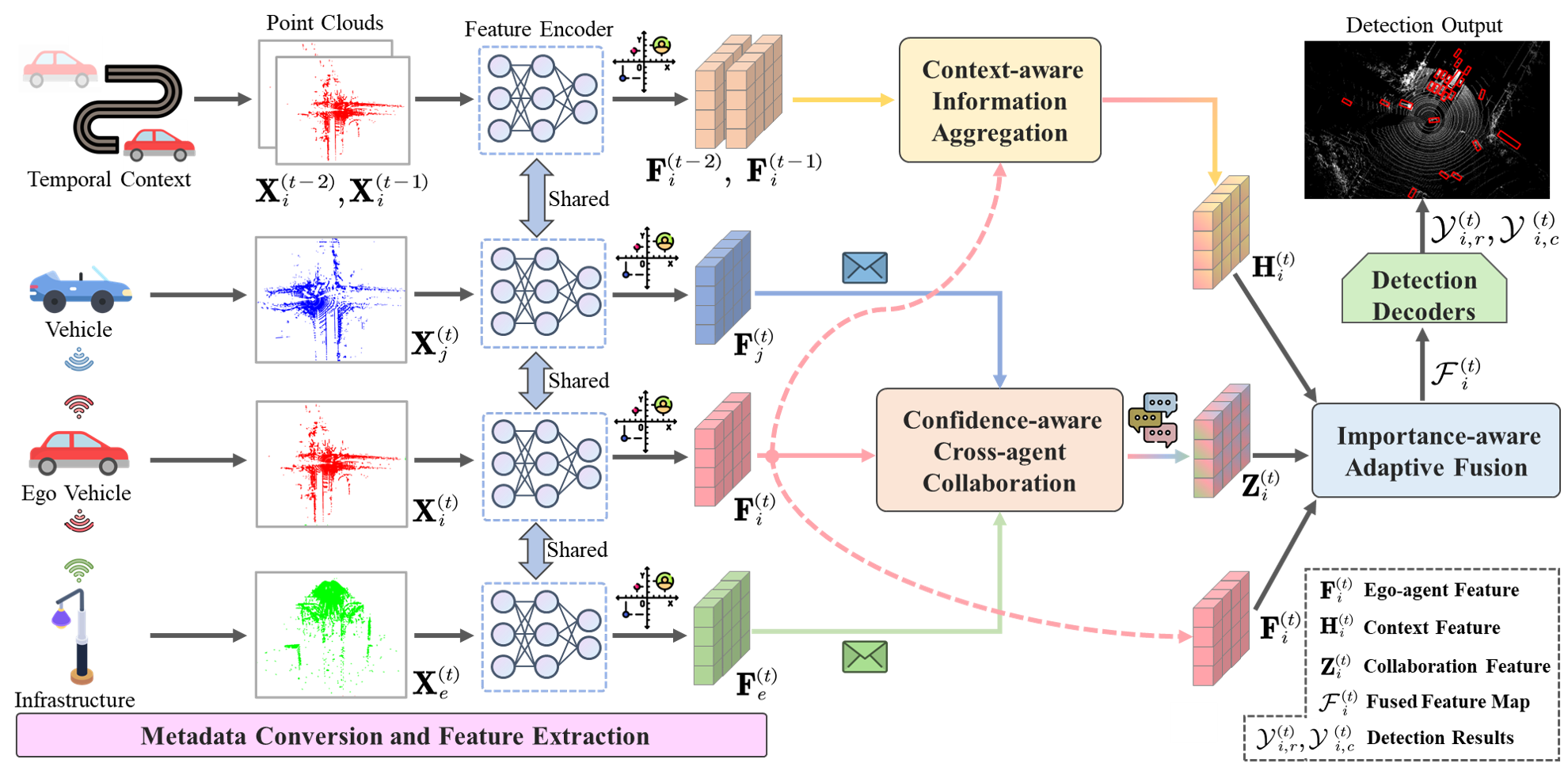

Multi-agent collaborative perception as a potential application for vehicle-to-everything communication could significantly improve the perception performance of autonomous vehicles over single-agent perception. However, several challenges remain in achieving pragmatic information sharing in this emerging research. In this paper, we propose SCOPE, a novel collaborative perception framework that aggregates the spatio-temporal awareness characteristics across on-road agents in an end-to-end manner. Specifically, SCOPE has three distinct strengths: i) it considers effective semantic cues of the temporal context to enhance current representations of the target agent; ii) it aggregates perceptually critical spatial information from heterogeneous agents and overcomes localization errors via multi-scale feature interactions; iii) it integrates multi-source representations of the target agent based on their complementary contributions by an adaptive fusion paradigm. To thoroughly evaluate SCOPE, we consider both real-world and simulated scenarios of collaborative 3D object detection tasks on three datasets. Extensive experiments demonstrate the superiority of our approach and the necessity of the proposed components.

Please refer to OpenCOOD and centerformer for more installation details.

Here we install the environment based on the OpenCOOD and centerformer repos.

# Clone the OpenCOOD repo

git clone https://github.com/DerrickXuNu/OpenCOOD.git

cd OpenCOOD

# Create a conda environment

conda env create -f environment.yml

conda activate opencood

# install pytorch

conda install -y pytorch torchvision cudatoolkit=11.3 -c pytorch

# install spconv

pip install spconv-cu113

# install basic library of deformable attention

git clone https://github.com/TuSimple/centerformer.git

cd centerformer

# install requirements

pip install -r requirements.txt

sh setup.sh

# clone our repo

git clone https://github.com/starfdu1418/SCOPE.git

cd SCOPE

# install v2xvit into the conda environment

python setup.py develop

python v2xvit/utils/setup.py build_ext --inplace

Please download the V2XSet and OPV2V datasets. The dataset folder should be structured as follows:

v2xset # the downloaded v2xset data

├── train

├── validate

└── test

opv2v # the downloaded opv2v data

├── train

├── validate

└── test

We provide our pretrained models on the V2XSet and OPV2V datasets. The download URLs are as follows:

To test the provided pretrained models of SCOPE, download the model file and put it under v2xvit/logs/scope. Then change validate_path in the corresponding config.yaml file to v2xset/test or opv2v/test.
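Before pointing validate_path at a test split, it can help to confirm that the dataset folders match the layout described above. The following is a minimal sketch, not part of the SCOPE codebase; the helper name `missing_splits` is hypothetical, and the dataset roots are assumed to be relative to the current working directory.

```python
from pathlib import Path

# Split names taken from the dataset layout described in this README.
EXPECTED_SPLITS = ("train", "validate", "test")


def missing_splits(dataset_root):
    """Return the expected split folders that are absent under dataset_root."""
    root = Path(dataset_root)
    return [name for name in EXPECTED_SPLITS if not (root / name).is_dir()]


if __name__ == "__main__":
    # "v2xset" and "opv2v" are the folder names used in this README;
    # adjust the paths if your data lives elsewhere.
    for dataset in ("v2xset", "opv2v"):
        missing = missing_splits(dataset)
        status = "ok" if not missing else "missing: " + ", ".join(missing)
        print(f"{dataset}: {status}")
```

An empty list means all three splits are present, so v2xset/test or opv2v/test can be used as validate_path.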

To test under the default pose error setting used in our paper, set loc_err to True in the corresponding config.yaml file. The default localization and heading errors are set to 0.2 m and 0.2° in inference.py.
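To illustrate what a pose error of this size means, the sketch below perturbs a 2D pose with random noise. It is only an illustration and not the code in inference.py: it assumes the errors are zero-mean Gaussian with 0.2 m and 0.2° as standard deviations, which this README does not state, and the function name `perturb_pose` is hypothetical.

```python
import random


def perturb_pose(x, y, yaw_deg, pos_std=0.2, rot_std=0.2, rng=None):
    """Return (x, y, yaw_deg) with zero-mean Gaussian noise added.

    pos_std is in metres and rot_std is in degrees. Treating the default
    0.2 m / 0.2 deg errors as Gaussian standard deviations is an assumption.
    """
    rng = rng or random.Random()
    return (
        x + rng.gauss(0.0, pos_std),
        y + rng.gauss(0.0, pos_std),
        yaw_deg + rng.gauss(0.0, rot_std),
    )


if __name__ == "__main__":
    # A fixed seed makes the perturbation reproducible across runs.
    noisy = perturb_pose(10.0, -3.0, 90.0, rng=random.Random(0))
    print(noisy)
```

Passing a seeded `random.Random` keeps evaluation runs comparable when the same noise level is used.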

Run the following command to test the model:

python v2xvit/tools/inference.py --model_dir ${CHECKPOINT_FOLDER} --eval_epoch ${EVAL_EPOCH}

The arguments are explained as follows:

model_dir: the path to your saved model.

eval_epoch: the evaluated epoch number.
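If you prefer to launch the evaluation from a Python script, the command can be assembled as an argument list. This is a small sketch under the assumption that it is run from the repository root; `build_inference_cmd` is a hypothetical helper and not part of the repo.

```python
import sys


def build_inference_cmd(model_dir, eval_epoch):
    """Build the argument list for v2xvit/tools/inference.py."""
    return [
        sys.executable,
        "v2xvit/tools/inference.py",
        "--model_dir", str(model_dir),
        "--eval_epoch", str(eval_epoch),
    ]


if __name__ == "__main__":
    # v2xvit/logs/scope is the checkpoint folder suggested in this README.
    cmd = build_inference_cmd("v2xvit/logs/scope", 31)
    print(" ".join(cmd))
    # To actually run it: import subprocess; subprocess.run(cmd, check=True)
```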

You can use the following commands to test the provided pretrained models:

V2XSet dataset: python v2xvit/tools/inference.py --model_dir ${CHECKPOINT_FOLDER} --eval_epoch 31

OPV2V dataset: python v2xvit/tools/inference.py --model_dir ${CHECKPOINT_FOLDER} --eval_epoch 34

Following OpenCOOD, we use yaml files to configure the training parameters. You can use the following command to train your own model from scratch or to continue from a saved checkpoint:

python v2xvit/tools/train.py --hypes_yaml ${CONFIG_FILE} [--model_dir ${CHECKPOINT_FOLDER}]

The arguments are explained as follows:

hypes_yaml: the path of the training configuration file, e.g. v2xvit/hypes_yaml/point_pillar_scope.yaml. You can change the configuration parameters in this provided yaml file.

model_dir (optional): the path of the checkpoints. This is used to fine-tune the trained models. When model_dir is given, the trainer will discard hypes_yaml and load the config.yaml in the checkpoint folder.
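The precedence between the two arguments can be summarized in a few lines. The sketch below only mirrors the rule stated above and is not the actual logic in train.py; the function name `resolve_config_path` is hypothetical.

```python
import os


def resolve_config_path(hypes_yaml, model_dir=None):
    """Pick the configuration file the trainer would read.

    If model_dir is given, the config.yaml inside that checkpoint folder
    takes precedence and hypes_yaml is ignored.
    """
    if model_dir:
        return os.path.join(model_dir, "config.yaml")
    return hypes_yaml


if __name__ == "__main__":
    yaml_path = "v2xvit/hypes_yaml/point_pillar_scope.yaml"
    # Training from scratch: the provided yaml file is used.
    print(resolve_config_path(yaml_path))
    # Continuing from a checkpoint: config.yaml in the folder is used.
    print(resolve_config_path(yaml_path, "v2xvit/logs/scope"))
```

In practice this means that edits to the yaml file have no effect once model_dir is passed; change the config.yaml in the checkpoint folder instead.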

If you are using our SCOPE for your research, please cite the following paper:

@Inproceedings{yang2023spatio,

author = {Yang, Kun and Yang, Dingkang and Zhang, Jingyu and Li, Mingcheng and Liu, Yang and Liu, Jing and Wang, Hanqi and Sun, Peng and Song, Liang},

title = {Spatio-Temporal Domain Awareness for Multi-Agent Collaborative Perception},

booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

month = {October},

year = {2023},

pages = {23383-23392}

}

Many thanks to Runsheng Xu for the high-quality datasets and codebases, including V2XSet, OPV2V, OpenCOOD and OpenCDA. We also thank the authors of Where2comm and centerformer for their excellent codebases.