Prometheus collector and exporter for metrics extracted from the Slurm resource scheduling system.

- Allocated: CPUs which have been allocated to a job.

- Idle: CPUs not allocated to a job and thus available for use.

- Other: CPUs which are unavailable for use at the moment.

- Total: total number of CPUs.

- Information extracted from the SLURM sinfo command

- Slurm CPU Management User and Administrator Guide
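To illustrate how the four CPU counters relate, here is a minimal Python sketch that parses a line in the `allocated/idle/other/total` form printed by `sinfo -h -o %C`. The function name `parse_cpu_counts` and the sample values are hypothetical; this is not the exporter's own code.

```python
from typing import Dict


def parse_cpu_counts(line: str) -> Dict[str, int]:
    """Split an 'allocated/idle/other/total' string into named counters."""
    fields = line.strip().split("/")
    if len(fields) != 4:
        raise ValueError(f"expected 4 '/'-separated fields, got {len(fields)}")
    allocated, idle, other, total = (int(f) for f in fields)
    return {"allocated": allocated, "idle": idle, "other": other, "total": total}


# Hypothetical sample line; on a real cluster it would come from `sinfo -h -o %C`.
sample = "120/40/8/168"
counts = parse_cpu_counts(sample)
print(counts)
```

In this sample, the allocated, idle and other counts add up to the total.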

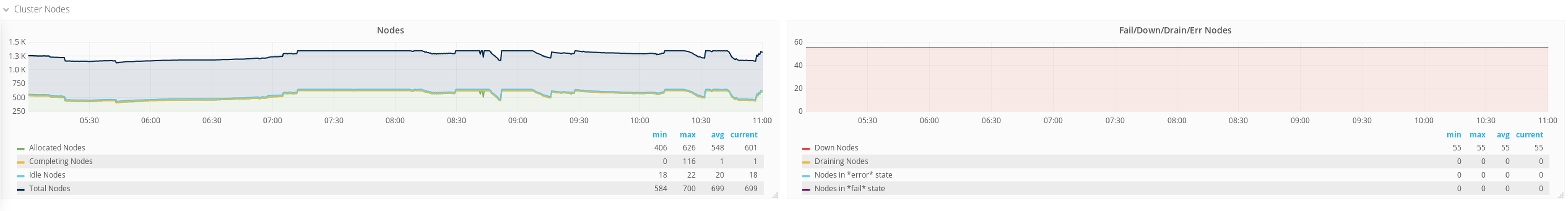

- Allocated: nodes which have been allocated to one or more jobs.

- Completing: all jobs associated with these nodes are in the process of being completed.

- Down: nodes which are unavailable for use.

- Drain: with this metric two different states are accounted for:

  - nodes in `drained` state (marked unavailable for use per system administrator request)
  - nodes in `draining` state (currently executing jobs but which will not be allocated for new ones).

- Fail: these nodes are expected to fail soon and are unavailable for use per system administrator request.

- Error: nodes which are currently in an error state and not capable of running any jobs.

- Idle: nodes not allocated to any jobs and thus available for use.

- Maint: nodes which are currently marked with the maintenance flag.

- Mixed: nodes which have some of their CPUs ALLOCATED while others are IDLE.

- Resv: these nodes are in an advanced reservation and not generally available.

Information extracted from the SLURM sinfo command
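The grouping of the two drain sub-states into a single metric can be sketched as follows. This assumes input lines of the form `count,state`, as `sinfo -h -o "%D,%T"` would print; the function name `count_node_states` and the sample lines are hypothetical, and the exporter itself may gather this information differently.

```python
from collections import Counter

# Both drain sub-states are reported under the single "drain" key.
DRAIN_STATES = {"drained", "draining"}


def count_node_states(lines):
    """Sum node counts per state, folding drained/draining into 'drain'."""
    totals = Counter()
    for line in lines:
        count, state = line.strip().split(",", 1)
        # sinfo appends '*' to the state of nodes that are not responding.
        state = state.rstrip("*").lower()
        key = "drain" if state in DRAIN_STATES else state
        totals[key] += int(count)
    return totals


# Hypothetical sample output, one line per state.
sample_lines = ["10,allocated", "2,drained", "1,draining", "5,idle", "3,mixed", "1,down*"]
node_totals = count_node_states(sample_lines)
print(dict(node_totals))
```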

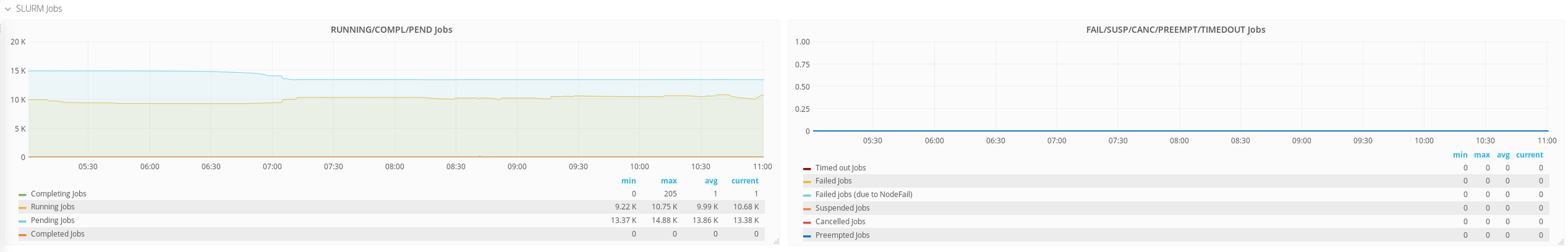

- PENDING: Jobs awaiting resource allocation.

- PENDING_DEPENDENCY: Jobs waiting because of an unexecuted job dependency.

- RUNNING: Jobs which currently have an allocation.

- SUSPENDED: Job has an allocation but execution has been suspended and CPUs have been released for other jobs.

- CANCELLED: Jobs which were explicitly cancelled by the user or system administrator.

- COMPLETING: Jobs which are in the process of being completed.

- COMPLETED: Jobs have terminated all processes on all nodes with an exit code of zero.

- CONFIGURING: Jobs have been allocated resources, but are waiting for them to become ready for use.

- FAILED: Jobs terminated with a non-zero exit code or other failure condition.

- TIMEOUT: Jobs terminated upon reaching their time limit.

- PREEMPTED: Jobs terminated due to preemption.

- NODE_FAIL: Jobs terminated due to failure of one or more allocated nodes.

Information extracted from the SLURM squeue command
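The split between PENDING and PENDING_DEPENDENCY can be sketched in Python as shown below. It assumes input lines of the form `state,reason`, as `squeue -h -o "%T,%r"` would print, and assumes that a pending job whose reason is `Dependency` is the one counted separately. The helper names and the sample lines are hypothetical.

```python
from collections import Counter


def classify_job(state: str, reason: str) -> str:
    """Return the metric bucket for one job (assumed mapping, see lead-in)."""
    if state == "PENDING" and reason == "Dependency":
        return "PENDING_DEPENDENCY"
    return state


def count_job_states(lines):
    """Count jobs per bucket from 'state,reason' lines."""
    totals = Counter()
    for line in lines:
        state, reason = line.strip().split(",", 1)
        totals[classify_job(state, reason)] += 1
    return totals


# Hypothetical sample output, one line per job.
sample_jobs = [
    "RUNNING,None",
    "PENDING,Resources",
    "PENDING,Dependency",
    "PENDING,Priority",
    "COMPLETING,None",
]
job_totals = count_job_states(sample_jobs)
print(dict(job_totals))
```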

- Running/suspended jobs per partition, divided between Slurm accounts and users.

- CPUs total/allocated/idle per partition plus used CPU per user ID.

The following information about jobs is also extracted via squeue:

- Running/Pending/Suspended jobs per SLURM Account.

- Running/Pending/Suspended jobs per SLURM User.

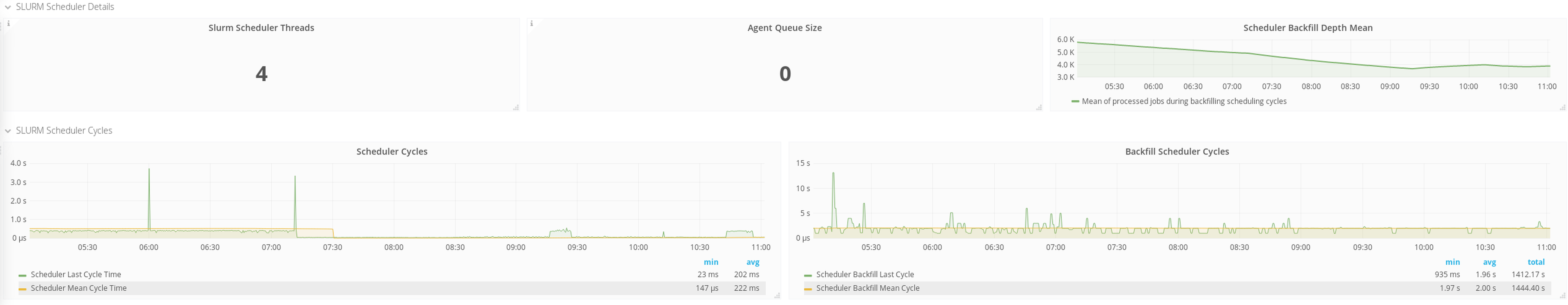

- Server Thread count: The number of current active `slurmctld` threads.

- Queue size: The length of the scheduler queue.

- DBD Agent queue size: The length of the message queue for SlurmDBD.

- Last cycle: Time in microseconds for last scheduling cycle.

- Mean cycle: Mean of scheduling cycles since last reset.

- Cycles per minute: Counter of scheduling executions per minute.

- (Backfill) Last cycle: Time in microseconds of last backfilling cycle.

- (Backfill) Mean cycle: Mean of backfilling scheduling cycles in microseconds since last reset.

- (Backfill) Depth mean: Mean of processed jobs during backfilling scheduling cycles since last reset.

- (Backfill) Total Backfilled Jobs (since last slurm start): number of jobs started thanks to backfilling since last Slurm start.

- (Backfill) Total Backfilled Jobs (since last stats cycle start): number of jobs started thanks to backfilling since the last time stats were reset.

- (Backfill) Total backfilled heterogeneous Job components: number of heterogeneous job components started thanks to backfilling since last Slurm start.

Information extracted from the SLURM sdiag command

DBD Agent queue size: it is particularly important to keep track of this value, since a growing number of queued messages almost always indicates one or more of three issues:

- the SlurmDBD daemon is down;

- the database is either down or unreachable;

- the status of the Slurm accounting DB may be inconsistent (e.g. `sreport` missing data, weird utilization of the cluster, etc.).
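A minimal Python sketch of watching this value: it reads the `DBD Agent queue size` line from `sdiag` text output and flags a queue that keeps growing across consecutive samples. The function names, the sample text and the "strictly increasing" criterion are assumptions for illustration; a real setup would more likely alert on the exported metric from Prometheus.

```python
import re
from typing import List, Optional

_DBD_QUEUE_RE = re.compile(r"DBD Agent queue size:\s*(\d+)")


def dbd_queue_size(sdiag_text: str) -> Optional[int]:
    """Return the DBD Agent queue size found in sdiag output, or None."""
    match = _DBD_QUEUE_RE.search(sdiag_text)
    return int(match.group(1)) if match else None


def is_growing(samples: List[int]) -> bool:
    """True when every sample is larger than the one before it."""
    return len(samples) > 1 and all(b > a for a, b in zip(samples, samples[1:]))


# Hypothetical excerpt of sdiag output.
sample_output = """\
Server thread count: 3
Agent queue size:    0
DBD Agent queue size: 42
"""
queue_size = dbd_queue_size(sample_output)
print(queue_size)
print(is_growing([0, 12, queue_size]))
```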

- Read DEVELOPMENT.md in order to build the Prometheus Slurm Exporter. After a successful build copy the executable `bin/prometheus-slurm-exporter` to a node with access to the Slurm command-line interface.

- A Systemd Unit file to run the executable as a service is available in lib/systemd/prometheus-slurm-exporter.service.

- (optional) Distribute the exporter as a Snap package: consult the following document. NOTE: this method requires the use of Snap, which is built by Canonical.

It is strongly advisable to configure the Prometheus server with the following parameters:

    scrape_configs:
      #
      # SLURM resource manager:
      #
      - job_name: 'my_slurm_exporter'
        scrape_interval: 30s
        scrape_timeout: 30s
        static_configs:
          - targets: ['slurm_host.fqdn:8080']

- scrape_interval: a 30-second interval avoids possible 'overloading' of the SLURM master caused by frequent calls of the sdiag/squeue/sinfo commands through the exporter.

- scrape_timeout: on a busy SLURM master a scraping timeout that is too short will abort the communication from the Prometheus server toward the exporter, thus generating a `context_deadline_exceeded` error.

The previous configuration file can be immediately used with a fresh installation of Prometheus. At the same time, we highly recommend including at least the global section in the configuration. Official documentation about configuring Prometheus is available here.

NOTE: the Prometheus server uses YAML as the format for its configuration file, thus indentation is really important. Before reloading the Prometheus server it is advisable to check the syntax:

    $~ promtool check-config prometheus.yml
    Checking prometheus.yml
      SUCCESS: 1 rule files found
    [...]

A dashboard is available in order to visualize the exported metrics through Grafana:

Copyright 2017-2020 Victor Penso, Matteo Dessalvi

This is free software: you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation, either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You should have received a copy of the GNU General Public License along with this program. If not, see http://www.gnu.org/licenses/.