This is a collection of papers on Time Series Foundation Models, covering both pre-training foundation models from scratch for time series and adapting large language foundation models for time series. Both lines of work contribute to the development of a unified model that is highly generalizable, versatile, and comprehensible for time series analysis. The collection is based on our survey paper: A Survey of Time Series Foundation Models: Generalizing Time Series Representation with Large Language Model.

We will try to update this list frequently. If you find any errors or missing papers, please don't hesitate to open an issue or a pull request.

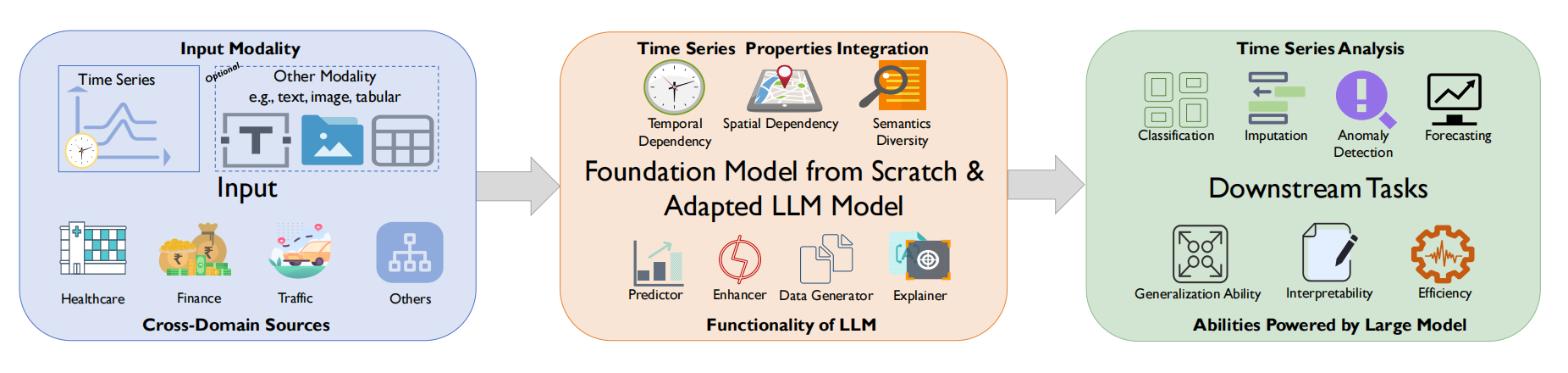

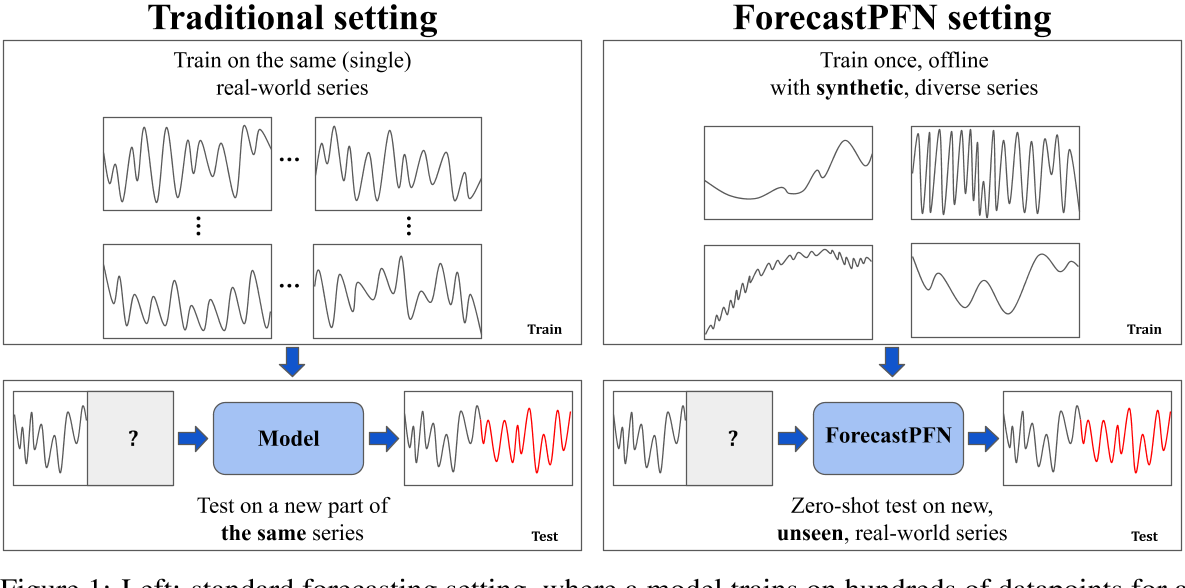

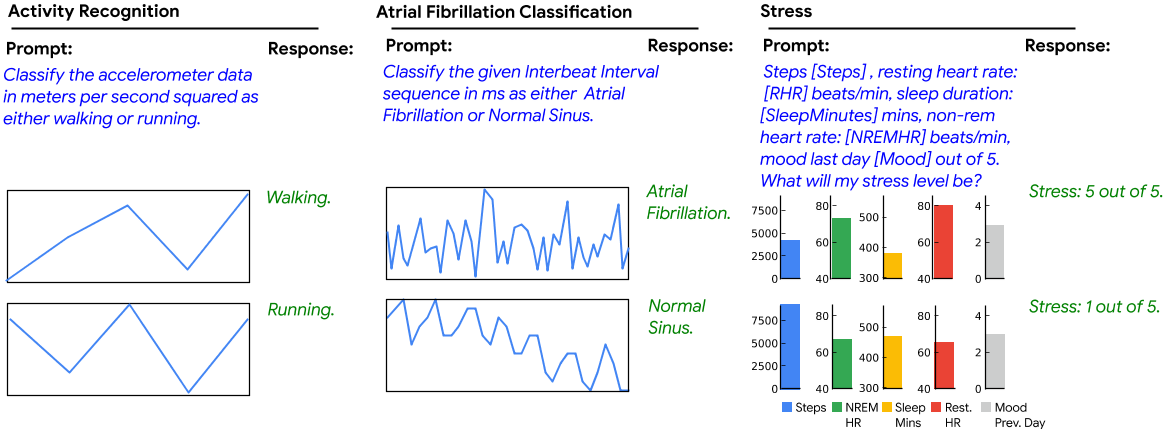

Traditional time series models are task-specific, featuring singular functionality and limited generalization capacity. Recently, large language foundation models have demonstrated remarkable capabilities for cross-task transferability, zero-shot/few-shot learning, and decision-making explainability. This success has sparked interest in exploring foundation models to solve multiple time series challenges simultaneously, including knowledge transferability between different time series domains, data sparseness in some time series scenarios (e.g., business), multimodal learning between time sequences and other data modalities (e.g., text), and explainability of time series models.

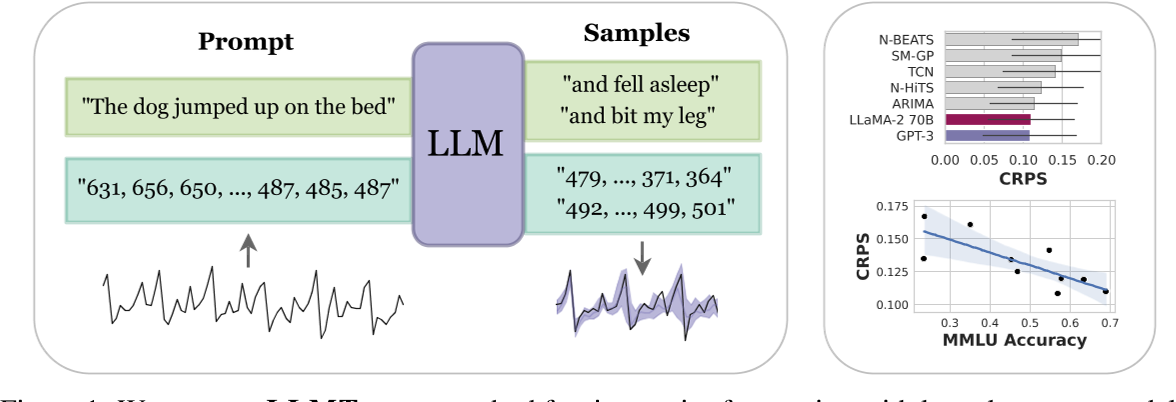

Figure 1. The background of Time Series Foundation Models.

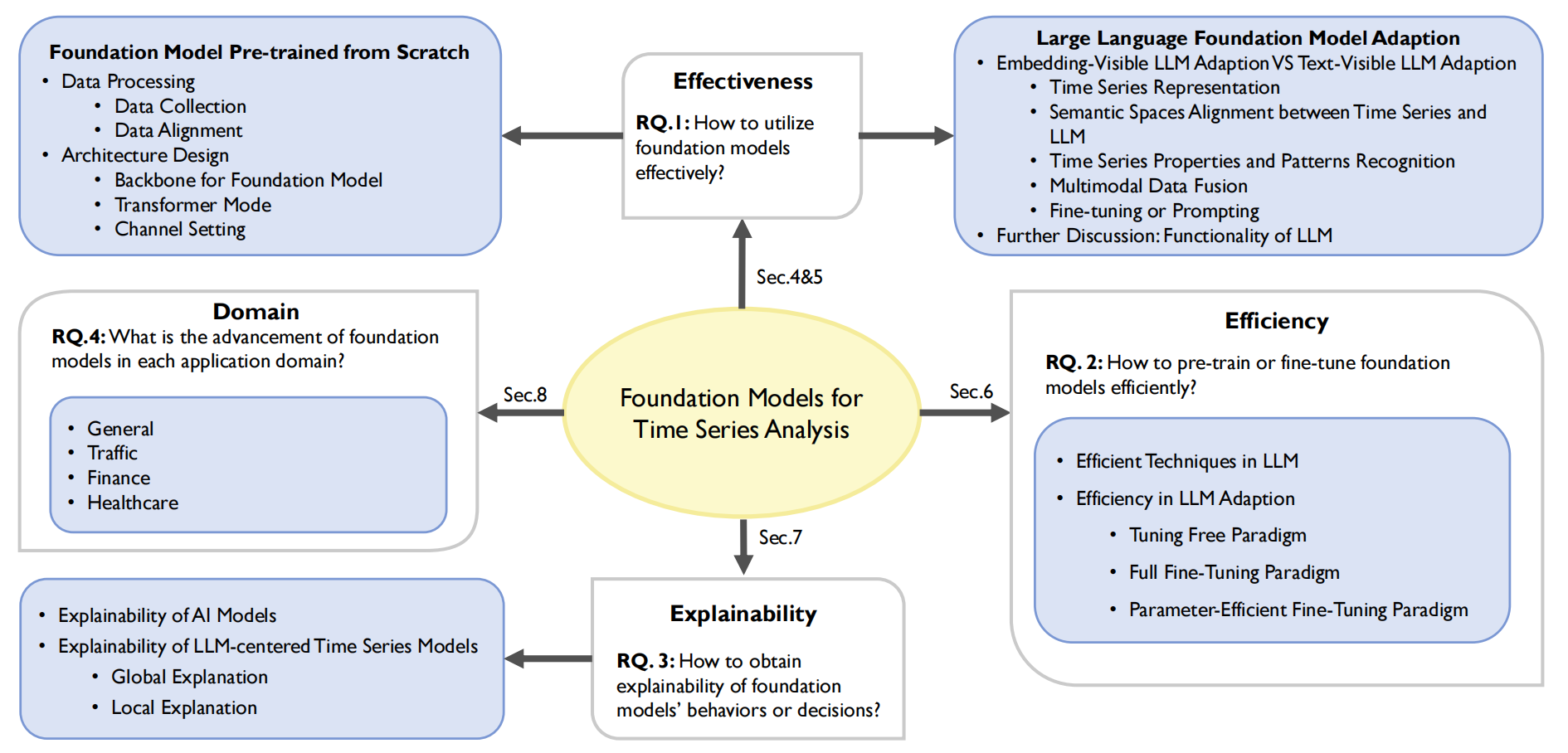

Figure 2. The structure of our survey.

Our review is guided by four research questions in Figure 2, covering three analytical dimensions (i.e. effectiveness, efficiency, explainability) and one taxonomy (i.e. domain taxonomy).

Feel free to cite this survey if you find it useful!

@article{ye2024survey,
  title={A Survey of Time Series Foundation Models: Generalizing Time Series Representation with Large Language Model},
  author={Ye, Jiexia and Zhang, Weiqi and Yi, Ke and Yu, Yongzi and Li, Ziyue and Li, Jia and Tsung, Fugee},
  journal={arXiv preprint arXiv:2405.02358},
  year={2024}
}

- [arXiv' 2023] Toward a Foundation Model for Time Series Data [Paper | No Code]

- [arXiv' 2023] A decoder-only foundation model for time-series forecasting [Paper | No Code]

- [NeurIPS' 2023] ForecastPFN: Synthetically-Trained Zero-Shot Forecasting [Paper | Code]

-

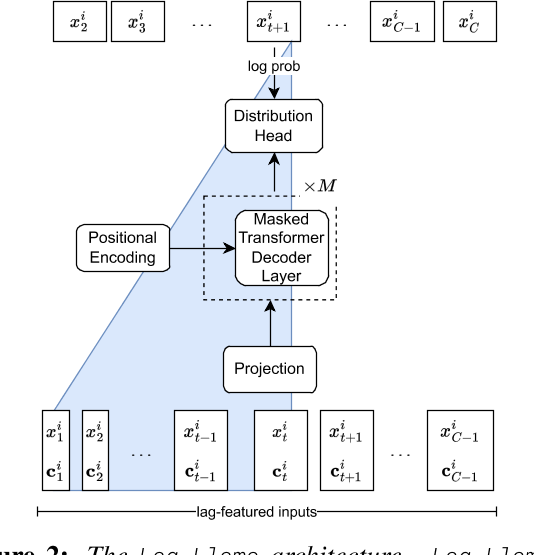

[arxiv' 2023] Lag-Llama: Towards Foundation Models for Time Series Forecasting [Paper | Code]

-

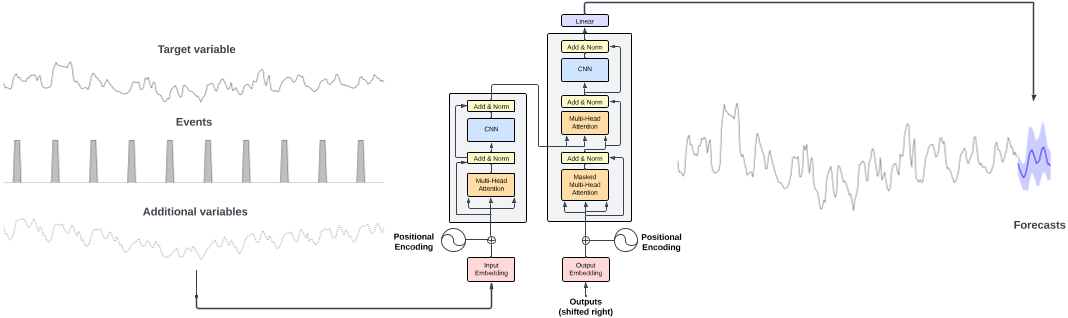

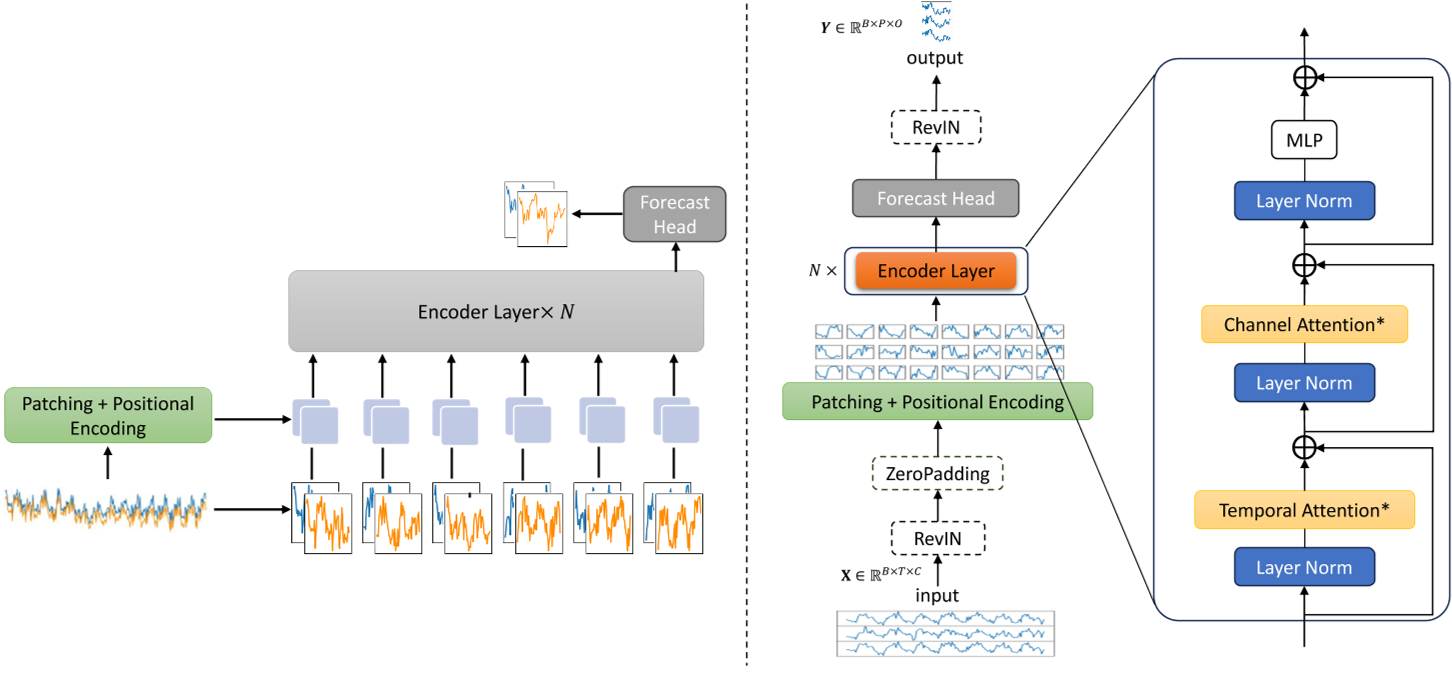

[arxiv' 2023] Only the Curve Shape Matters: Training Foundation Models for Zero-Shot Multivariate Time Series Forecasting through Next Curve Shape Prediction [Paper | Code]

-

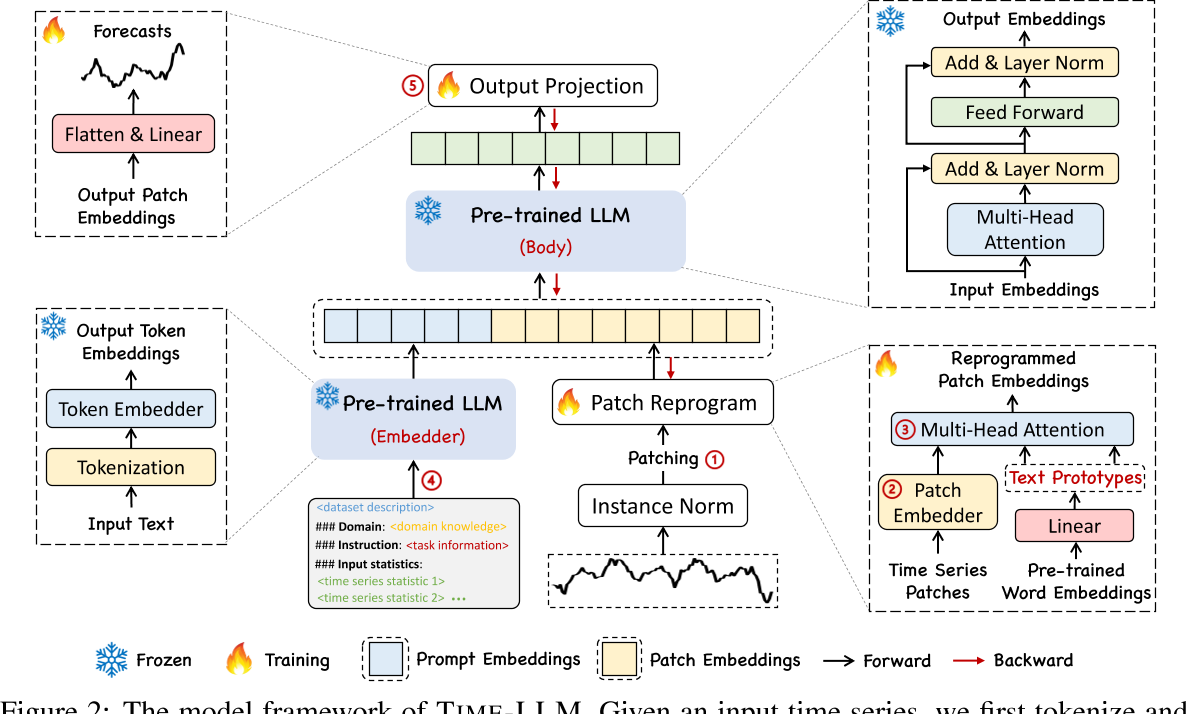

[ICLR' 2024] Time-LLM: Time Series Forecasting by Reprogramming Large Language Models [Paper | Code]

-

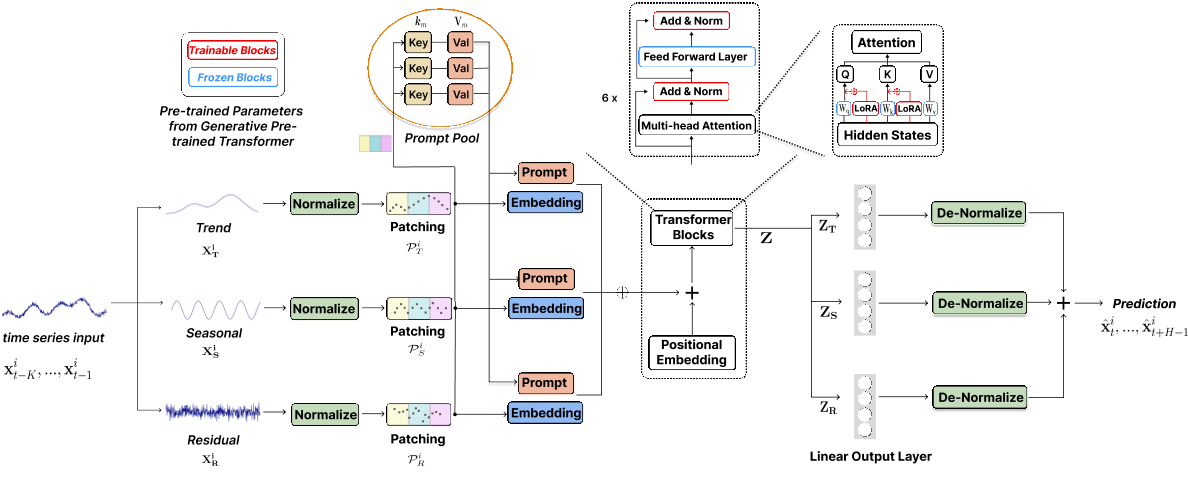

[ICLR' 2024] TEMPO: Prompt-based Generative Pre-trained Transformer for Time Series Forecasting [Paper | No Code]

-

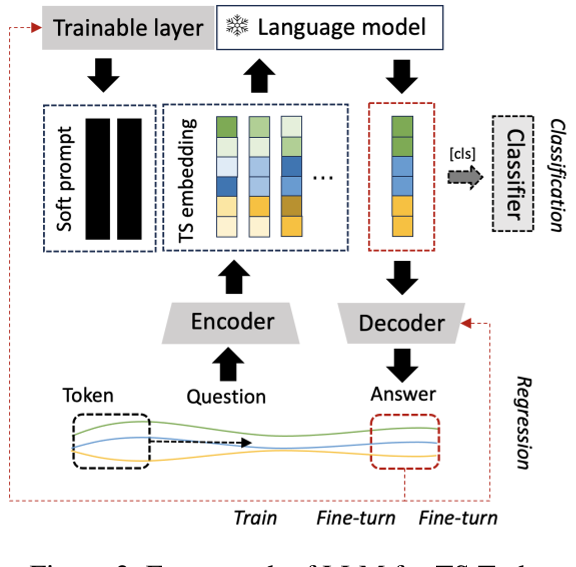

[ICLR' 2024] TEST: Text Prototype Aligned Embedding to Activate LLM's Ability for Time Series [Paper | No Code]

-

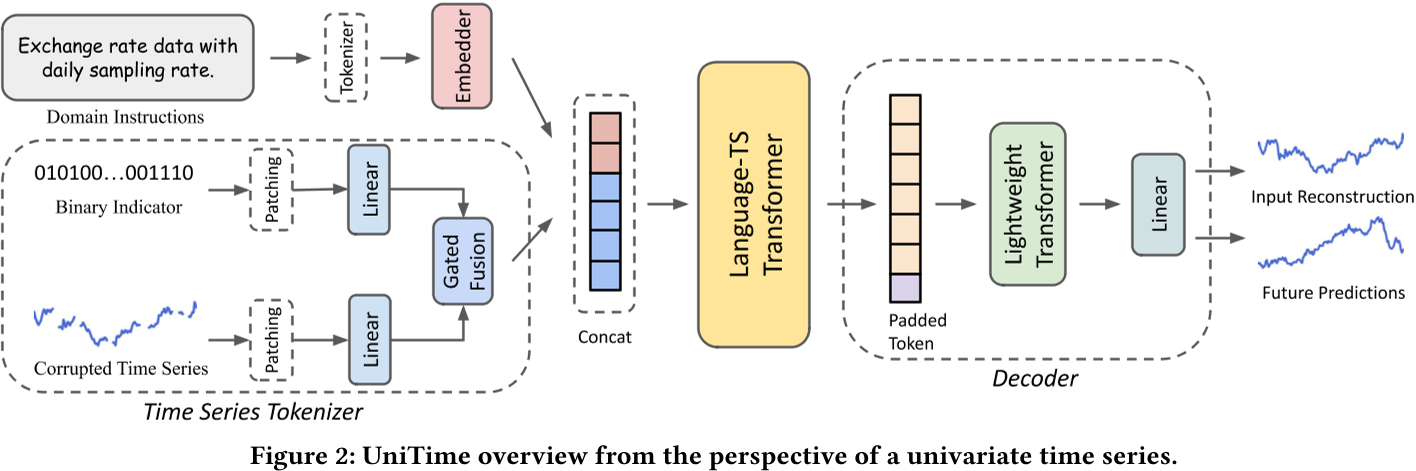

[WWW' 2024] UniTime: A Language-Empowered Unified Model for Cross-Domain Time Series Forecasting [Paper | No Code]

-

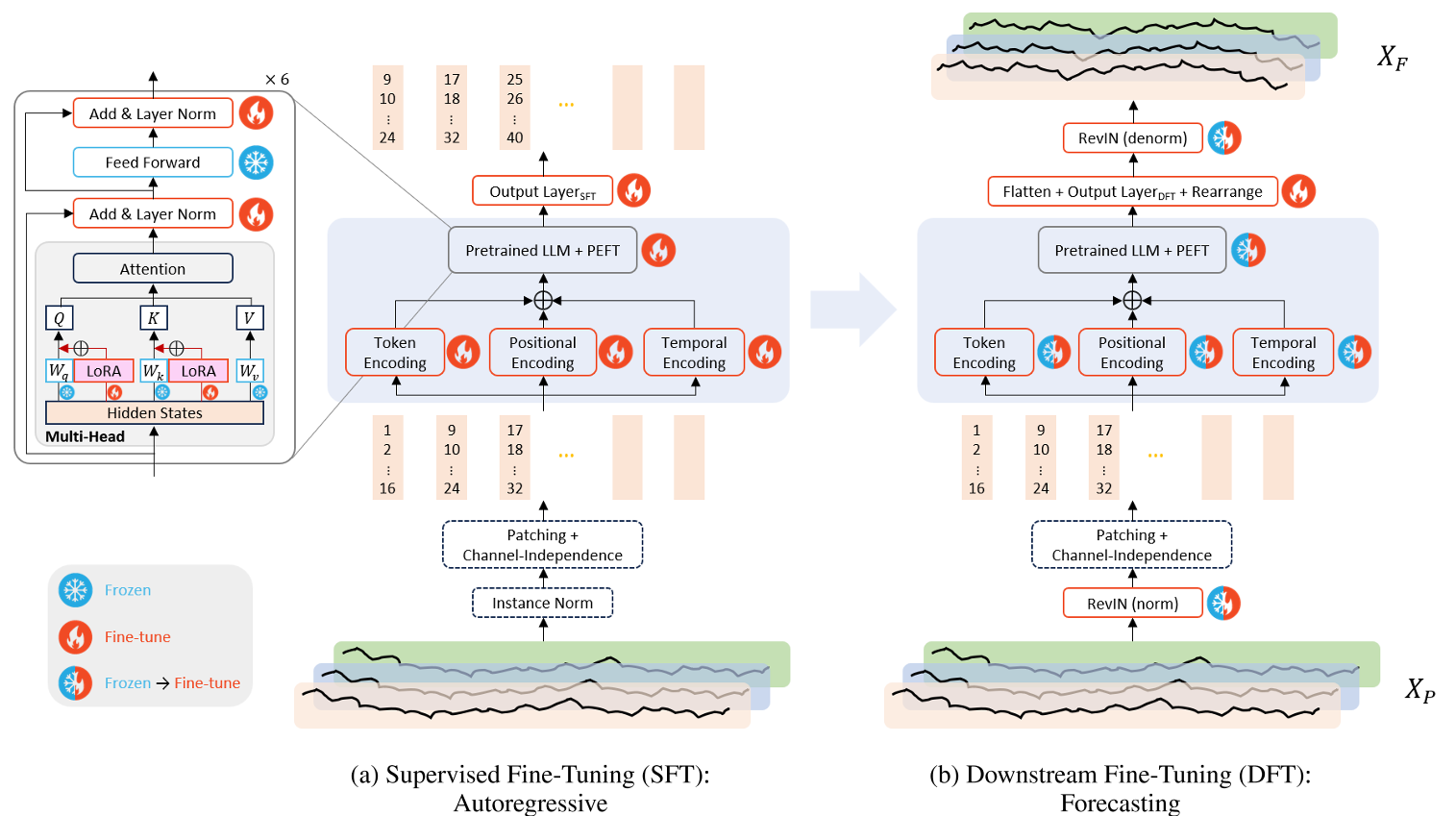

[arXiv' 2023] LLM4TS: Two-Stage Fine-Tuning for Time-Series Forecasting with Pre-Trained LLMs [Paper | No Code]

-

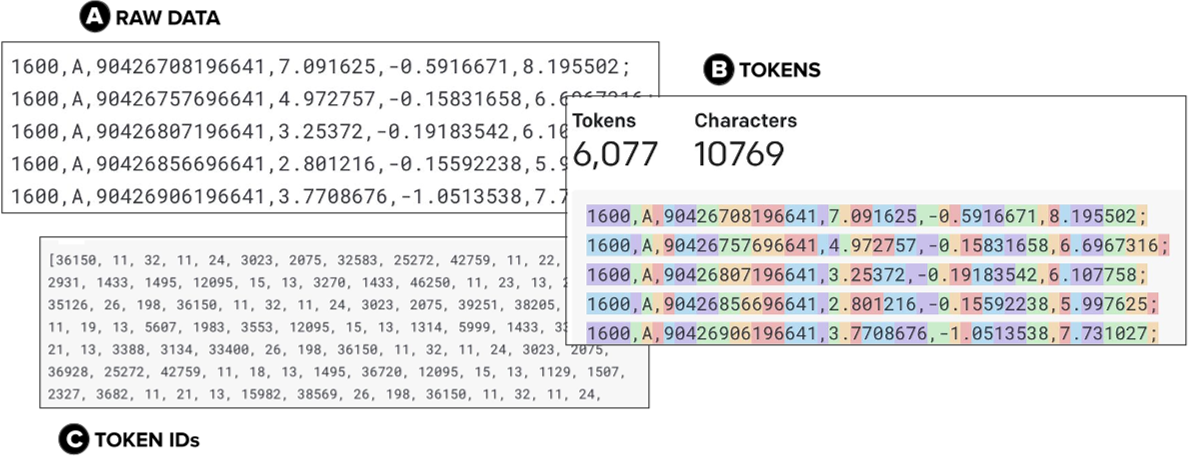

[arXiv' 2023] The first step is the hardest: Pitfalls of Representing and Tokenizing Temporal Data for Large Language Models [Paper | No Code]

-

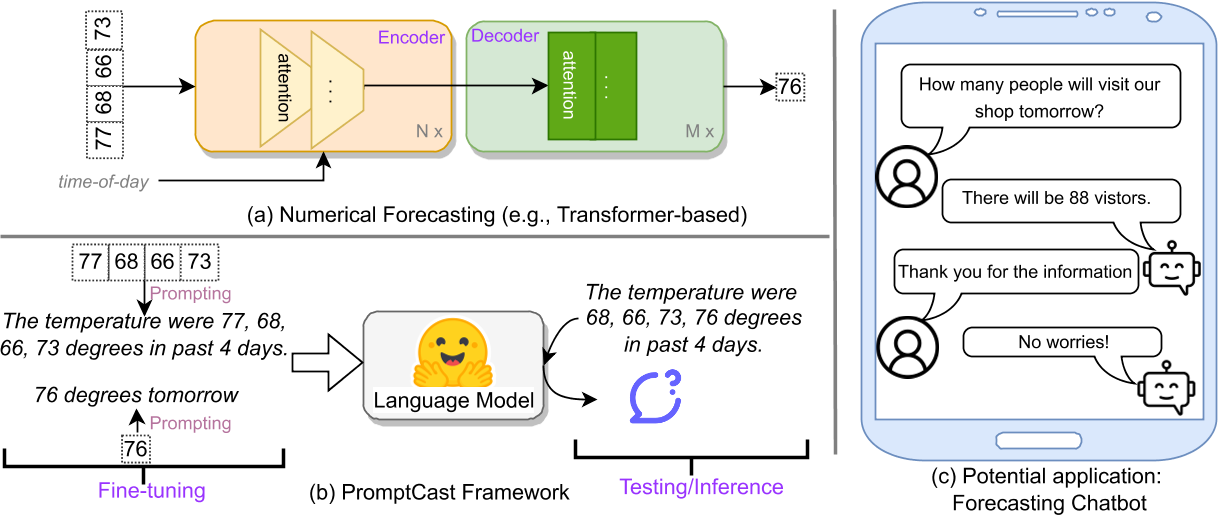

[TKDE' 2023] PromptCast: A New Prompt-based Learning Paradigm for Time Series Forecasting [Paper | Code]

-

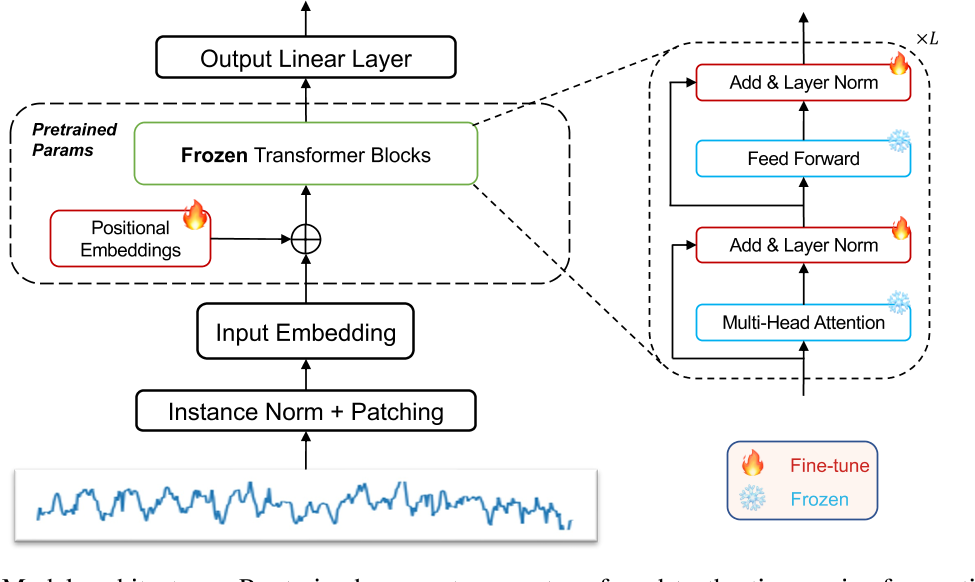

[NeurIPS' 2023] One Fits All: Power General Time Series Analysis by Pretrained LM [Paper | Code]

- [NeurIPS' 2023] Large Language Models Are Zero-Shot Time Series Forecasters [Paper | Code]

-

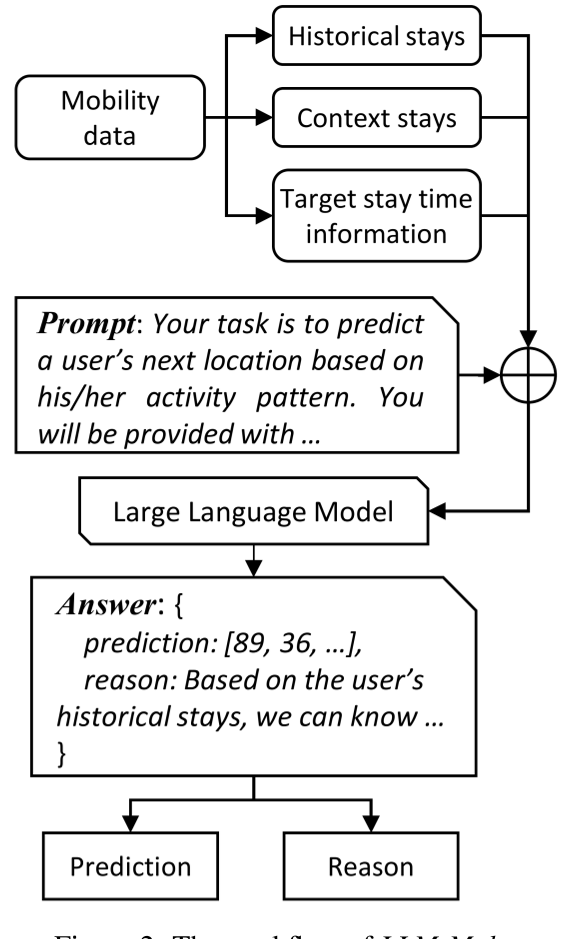

[arXiv' 2023] Where Would I Go Next? Large Language Models as Human Mobility Predictors [Paper | Code]

-

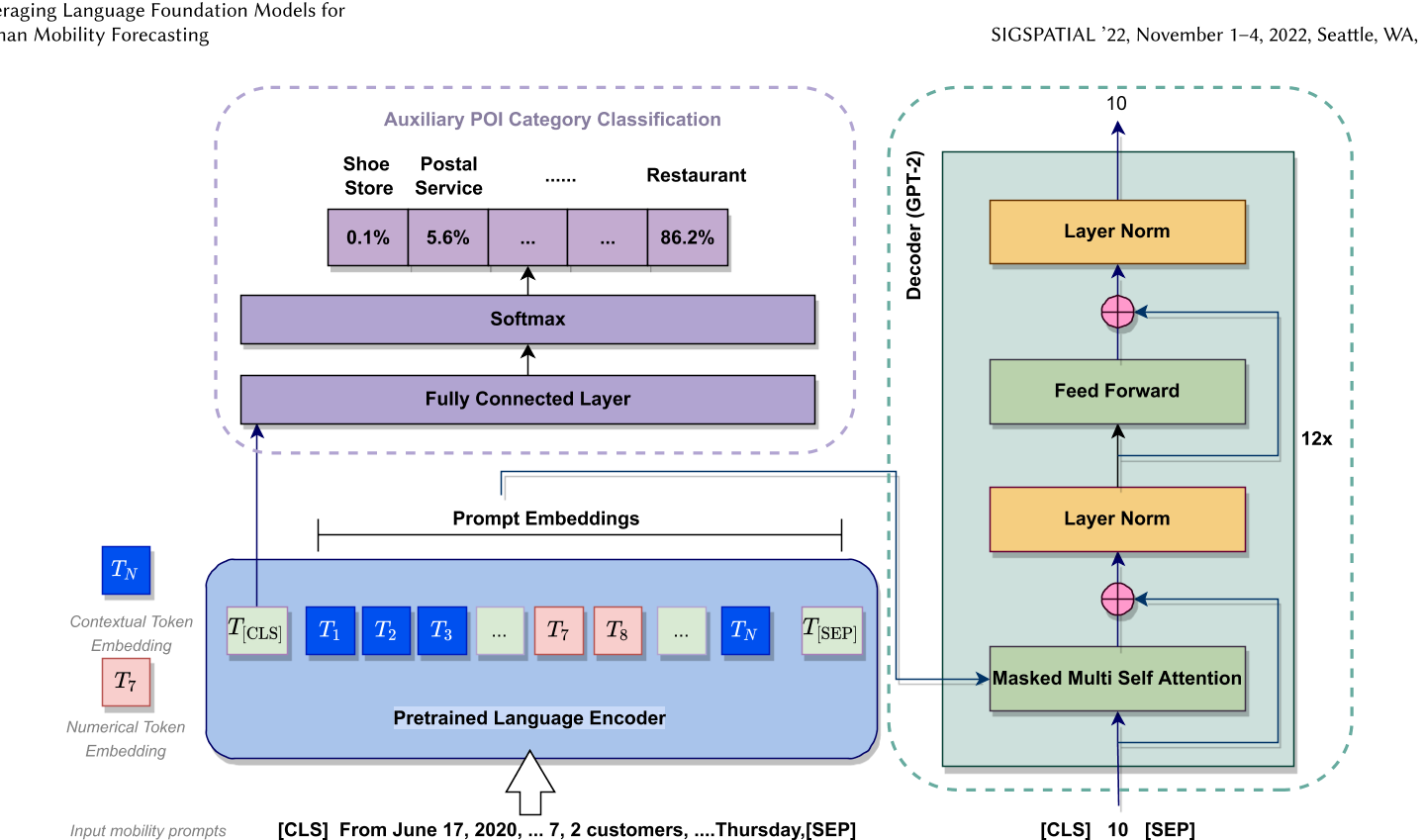

[SIGSPATIAL' 2022] Leveraging Language Foundation Models for Human Mobility Forecasting [Paper | Code]

-

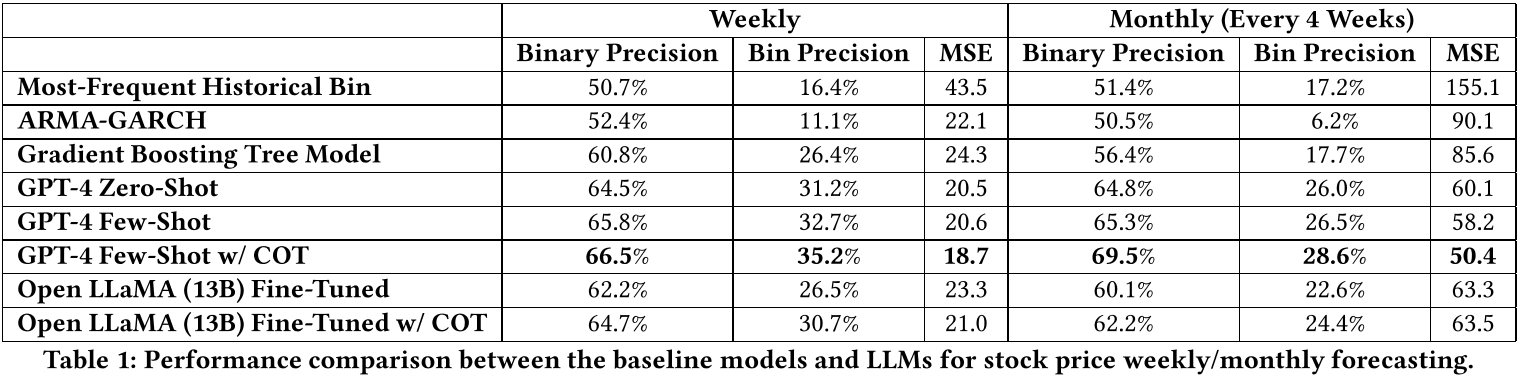

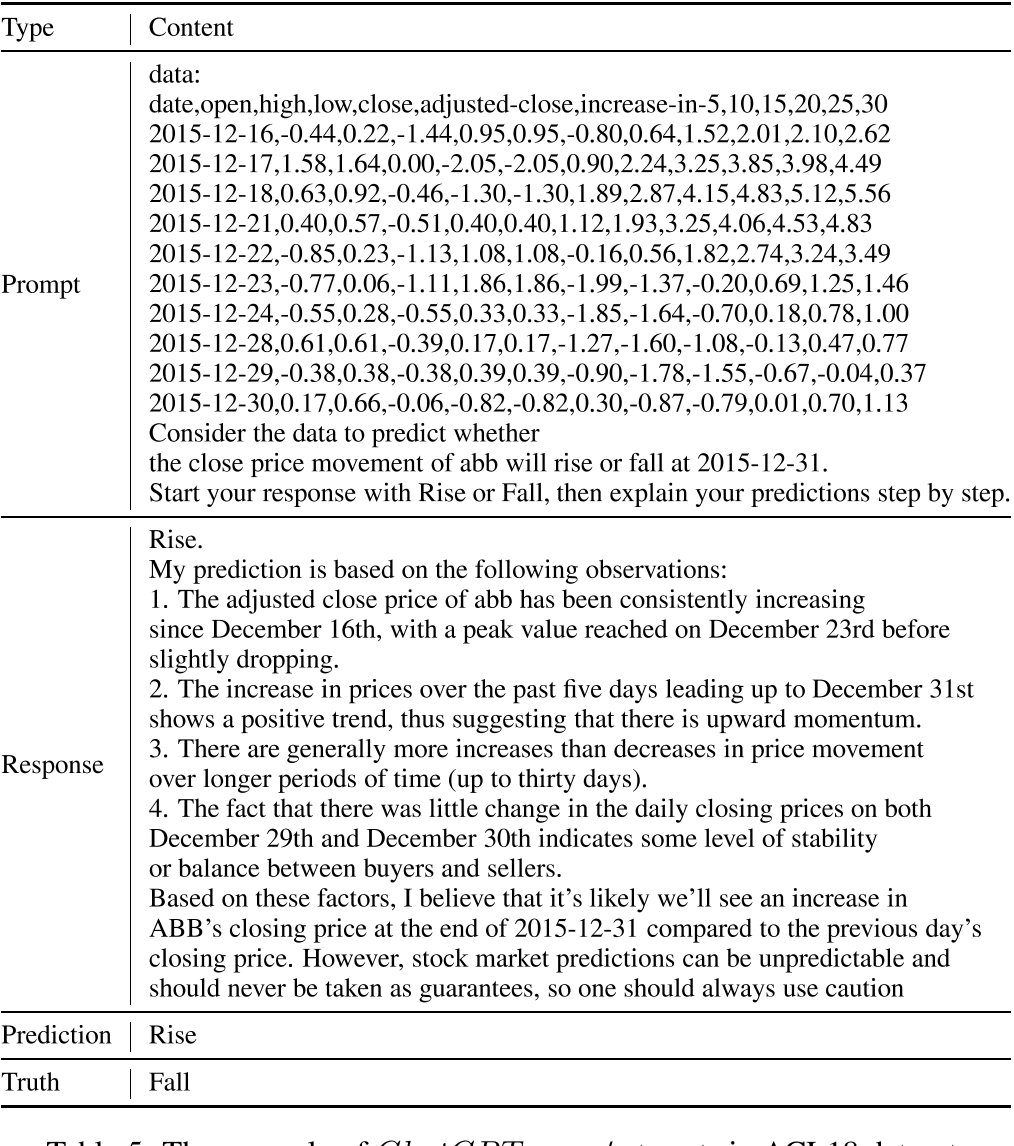

[arXiv' 2023] Temporal Data Meets LLM -- Explainable Financial Time Series Forecasting [Paper | No Code]

- [arXiv' 2023] The Wall Street Neophyte: A Zero-Shot Analysis of ChatGPT Over MultiModal Stock Movement Prediction Challenges [Paper | No Code]

If you have come across relevant resources, feel free to open an issue or submit a pull request.