Code for the paper "Dropout Strikes Back: Improved Uncertainty Estimation via Diversity Sampling" by Kirill Fedyanin, Evgenii Tsymbalov and Maxim Panov, published by Springer in Recent Trends in Analysis of Images, Social Networks and Texts. You can cite this paper using the BibTeX entry below.

The main code implementing the methods (DPP, k-DPP and leverage-score masks for dropout) is in our alpaca library.

For regression tasks, it can be useful to know not just a prediction but also a confidence interval. For deep learning models this is hard to obtain in closed form, but you can estimate it with so-called ensembles of several models. To avoid training and storing several models, you can use Monte Carlo (MC) dropout at inference time.

MC dropout requires multiple stochastic forward passes, and the estimates typically need tens or even hundreds of passes to converge.
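To make the procedure concrete, here is a minimal NumPy sketch of MC dropout on a toy two-layer network with random weights. It is an illustration only, not the alpaca implementation: `mlp_forward` and `mc_dropout_predict` are hypothetical helpers, the dropout rate of 0.5 is an assumed default, and the interval at the end assumes a roughly Gaussian spread of the passes.

```python
import numpy as np


def mlp_forward(x, weights, rng=None, p_drop=0.0):
    """Two-layer ReLU network; applies inverted dropout to the hidden layer when rng is given."""
    W1, b1, W2, b2 = weights
    h = np.maximum(x @ W1 + b1, 0.0)
    if rng is not None and p_drop > 0.0:
        keep = rng.random(h.shape) >= p_drop  # keep each hidden unit with prob 1 - p_drop
        h = h * keep / (1.0 - p_drop)         # rescale so the expected activation is unchanged
    return h @ W2 + b2


def mc_dropout_predict(x, weights, n_passes=100, p_drop=0.5, seed=0):
    """Run n_passes stochastic forward passes (dropout stays on); return mean and std."""
    rng = np.random.default_rng(seed)
    samples = np.stack([mlp_forward(x, weights, rng, p_drop) for _ in range(n_passes)])
    return samples.mean(axis=0), samples.std(axis=0)


# Usage: toy network with 3 inputs, 16 hidden units and 1 output
rng = np.random.default_rng(42)
weights = (rng.normal(size=(3, 16)), np.zeros(16), rng.normal(size=(16, 1)), np.zeros(1))
x = rng.normal(size=(5, 3))
mean, std = mc_dropout_predict(x, weights, n_passes=30)
lower, upper = mean - 2 * std, mean + 2 * std  # rough interval, assuming Gaussian spread
```

Each call with a small `n_passes` gives a noisy estimate of `std`; increasing it trades inference time for a more stable estimate, which is the cost the diversity sampling below aims to reduce.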

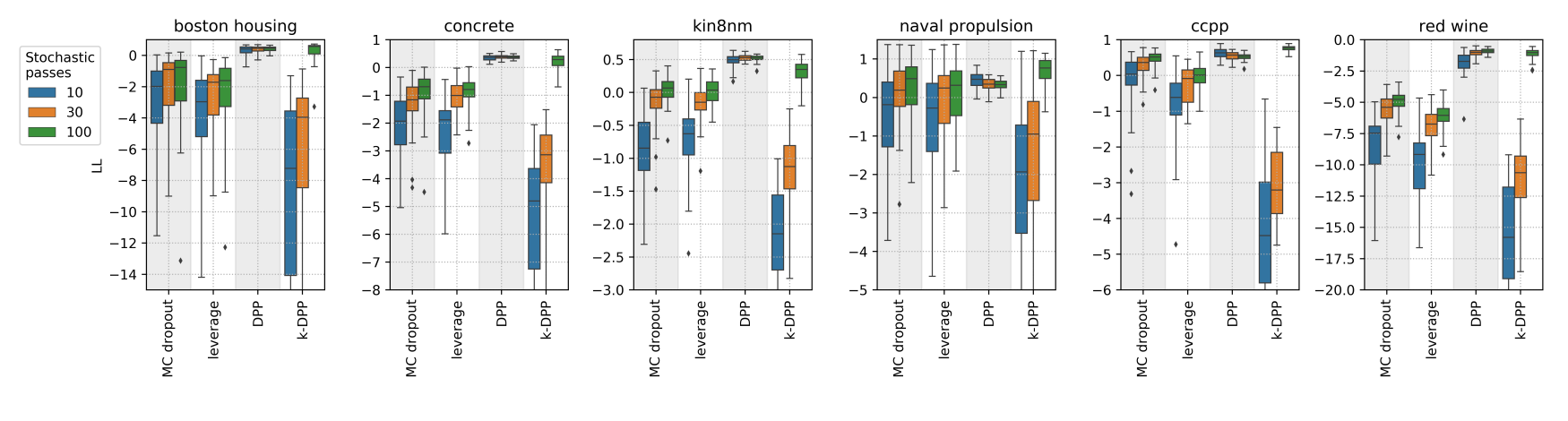

We propose to enforce diversity among the forward passes by using determinantal point processes (DPPs). The figure shows how this improves the log-likelihood metric on various UCI datasets for different numbers of stochastic passes, T = 10, 30 and 100.
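The core idea can be sketched in NumPy. This is not the alpaca code: `sample_dpp` is a generic implementation of the standard spectral (eigendecomposition-based) sampler for DPPs and k-DPPs described by Kulesza and Taskar, and `dpp_dropout_mask` is a hypothetical helper that assumes the DPP kernel is the correlation matrix of a layer's activations on a batch. Under a DPP, strongly correlated neurons are unlikely to be kept together, so masks drawn this way tend to be more diverse than independent Bernoulli masks. The sketch deliberately leaves out how kept activations are rescaled.

```python
import numpy as np


def elementary_symmetric(eigvals, k):
    """E[l, n] = e_l(eigvals[:n]), the l-th elementary symmetric polynomial."""
    n_items = len(eigvals)
    E = np.zeros((k + 1, n_items + 1))
    E[0, :] = 1.0
    for l in range(1, k + 1):
        for n in range(1, n_items + 1):
            E[l, n] = E[l, n - 1] + eigvals[n - 1] * E[l - 1, n - 1]
    return E


def sample_dpp(L, rng, k=None):
    """Spectral DPP sampler; samples a k-DPP if k is given (assumes rank(L) >= k)."""
    eigvals, eigvecs = np.linalg.eigh(L)
    eigvals = np.clip(eigvals, 0.0, None)  # remove tiny negative round-off
    if k is None:
        # Phase 1 (DPP): keep each eigenvector independently with prob lambda / (lambda + 1)
        keep = rng.random(len(eigvals)) < eigvals / (eigvals + 1.0)
    else:
        # Phase 1 (k-DPP): choose exactly k eigenvectors
        E = elementary_symmetric(eigvals, k)
        keep = np.zeros(len(eigvals), dtype=bool)
        remaining = k
        for n in range(len(eigvals), 0, -1):
            if remaining == 0:
                break
            if rng.random() < eigvals[n - 1] * E[remaining - 1, n - 1] / E[remaining, n]:
                keep[n - 1] = True
                remaining -= 1
    V = eigvecs[:, keep]
    selected = []
    # Phase 2: select one item per kept eigenvector
    while V.shape[1] > 0:
        probs = np.sum(V ** 2, axis=1)
        probs /= probs.sum()
        i = rng.choice(len(probs), p=probs)
        selected.append(int(i))
        # Restrict the basis to the subspace orthogonal to the i-th unit vector
        j = np.argmax(np.abs(V[i, :]))
        v_j = V[:, j].copy()
        V = np.delete(V, j, axis=1)
        V = V - np.outer(v_j, V[i, :] / v_j[i])
        if V.shape[1] > 0:
            V, _ = np.linalg.qr(V)  # re-orthonormalize the remaining basis
    return sorted(selected)


def dpp_dropout_mask(activations, rng, k=None):
    """Hypothetical helper: binary mask over neurons, sampled from a DPP whose
    kernel is the correlation matrix of activations (n_samples, n_neurons)."""
    kernel = np.corrcoef(activations, rowvar=False)  # (n_neurons, n_neurons), PSD
    mask = np.zeros(activations.shape[1])
    mask[sample_dpp(kernel, rng, k)] = 1.0
    return mask


# Usage: keep exactly 4 of 8 neurons per stochastic pass
rng = np.random.default_rng(0)
acts = rng.normal(size=(100, 8))
masks = [dpp_dropout_mask(acts, rng, k=4) for _ in range(10)]
```

Passing `k` gives the k-DPP variant, which fixes the number of kept neurons per pass; with `k=None` the mask size varies from pass to pass.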

You can read the full paper here: https://link.springer.com/chapter/10.1007/978-3-031-15168-2_11

For the citation, please use

```bibtex
@InProceedings{Fedyanin2021DropoutSB,
  author="Fedyanin, Kirill
  and Tsymbalov, Evgenii
  and Panov, Maxim",
  title="Dropout Strikes Back: Improved Uncertainty Estimation via Diversity Sampling",
  booktitle="Recent Trends in Analysis of Images, Social Networks and Texts",
  year="2022",
  publisher="Springer International Publishing",
  pages="125--137",
  isbn="978-3-031-15168-2"
}
```

To install the dependencies, run

```shell
pip install -r requirements.txt
```

To get the regression results from the paper, run the following notebooks:

- `experiments/regression_1_big_exper_train-clean.ipynb` to train the models
- `experiments/regression_2_ll_on_trained_models.ipynb` to get the log-likelihood (LL) values for different datasets
- `experiments/regression_3_ood_w_training.ipynb` for the OOD experiments
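As a rough guide to what an LL value measures, here is a sketch of the average Gaussian log-likelihood of the targets, using the mean and standard deviation of T stochastic passes as the predictive distribution. This is an assumed, common formulation, not necessarily the exact formula in the notebooks (which may, for example, add a noise variance term or work on normalized targets); `gaussian_log_likelihood` and `min_std` are hypothetical names.

```python
import numpy as np


def gaussian_log_likelihood(y_true, samples, min_std=1e-6):
    """Average Gaussian log-likelihood of y_true under N(mean, std^2) from MC samples.

    samples: array of shape (T, n_points), one row per stochastic forward pass.
    """
    mean = samples.mean(axis=0)
    std = np.maximum(samples.std(axis=0), min_std)  # guard against zero variance
    ll = -0.5 * np.log(2 * np.pi * std ** 2) - (y_true - mean) ** 2 / (2 * std ** 2)
    return ll.mean()


# Usage: 3 passes for 2 points
samples = np.array([[0.9, 2.1], [1.1, 1.9], [1.0, 2.0]])
print(gaussian_log_likelihood(np.array([1.0, 2.0]), samples))
```

Higher is better: the metric rewards predictions that are accurate and penalizes both overconfident and overly wide intervals.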

For the classification experiments, run the following scripts from the `experiments` folder. They come in pairs: the first script trains the models and estimates the uncertainty, and the second just prints the results.

```shell
python classification_ue.py mnist
python print_confidence_accuracy.py mnist
```

```shell
python classification_ue.py cifar
python print_confidence_accuracy.py cifar
```

For ImageNet, you need to manually download the validation dataset (ILSVRC2012 version) and put the images into the `experiments/data/imagenet/valid` folder.

```shell
python classification_imagenet.py
python print_confidence_accuracy.py imagenet
```

For the out-of-distribution (OOD) experiments, run:

```shell
python classification_ue_ood.py mnist
python print_ood.py mnist

python classification_ue_ood.py cifar
python print_ood.py cifar

python classification_imagenet.py --ood
python print_ood.py imagenet
```

You can change the uncertainty estimation function for MNIST/CIFAR by adding the `-a=var_ratio` or `-a=max_prob` option to the scripts.
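For reference, here is a sketch of two common uncertainty scores computed from the softmax outputs of T stochastic passes. These are standard textbook definitions assumed for illustration; the exact formulas behind the scripts' `var_ratio` and `max_prob` options may differ (for example, `max_prob` might use a single deterministic pass instead of the MC average). Both function names are hypothetical.

```python
import numpy as np


def max_prob_uncertainty(probs):
    """1 - max class probability of the MC-averaged prediction.

    probs: array of shape (T, n_objects, n_classes) with softmax outputs of T passes.
    """
    return 1.0 - probs.mean(axis=0).max(axis=1)


def var_ratio_uncertainty(probs):
    """Variation ratio: 1 - share of passes that voted for the most frequent class."""
    votes = probs.argmax(axis=2)  # (T, n_objects) predicted class per pass
    n_passes, n_objects = votes.shape
    counts = np.zeros((n_objects, probs.shape[2]), dtype=int)
    for t in range(n_passes):
        counts[np.arange(n_objects), votes[t]] += 1
    return 1.0 - counts.max(axis=1) / n_passes


# Usage: 3 passes, 2 objects, 2 classes
probs = np.array([
    [[0.9, 0.1], [0.8, 0.2]],
    [[0.9, 0.1], [0.3, 0.7]],
    [[0.9, 0.1], [0.6, 0.4]],
])
print(max_prob_uncertainty(probs), var_ratio_uncertainty(probs))
```

The first object gets the same confident vote in every pass, so both scores are low; the second object's passes disagree, so both scores are higher.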