In this workshop you will learn how to:

- use a notebook environment

- write simple Apache Spark queries to filter and transform a dataset

- perform very simple outlier detection
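As a rough preview of the last two goals, here is a minimal sketch in plain Python (so it runs without Spark) that filters a handful of review records, transforms them, and flags outliers with a simple z-score rule. The records, the field names `asin`, `overall` and `reviewText`, and the threshold of 2 standard deviations are hypothetical choices for illustration; the notebook's actual queries and outlier rule may differ. In PySpark, the filter step corresponds roughly to `DataFrame.filter`, the transform to `select` or `withColumn`, and the mean and standard deviation to the aggregate functions `mean` and `stddev_pop` in `pyspark.sql.functions`.

```python
from statistics import mean, pstdev

# Hypothetical sample of review records; field names are assumed for illustration.
reviews = [
    {"asin": "B001", "overall": 5.0, "reviewText": "Great cable, works fine."},
    {"asin": "B002", "overall": 1.0, "reviewText": "Stopped working after a week."},
    {"asin": "B003", "overall": 4.0, "reviewText": "Good value for the price."},
    {"asin": "B004", "overall": 3.0, "reviewText": ""},
    {"asin": "B005", "overall": 5.0, "reviewText": "Does the job."},
    {"asin": "B006", "overall": 4.0, "reviewText": "Sturdy and light, recommended."},
    {"asin": "B007", "overall": 2.0, "reviewText": "Battery life is shorter than advertised."},
    {"asin": "B008", "overall": 5.0, "reviewText": "Arrived quickly and well packed."},
    {"asin": "B009", "overall": 4.0, "reviewText": "Exactly as described."},
    {"asin": "B010", "overall": 3.0, "reviewText": " ".join(["word"] * 300)},
]

# Filter: drop reviews that have no text.
with_text = [r for r in reviews if r["reviewText"].strip()]

# Transform: keep the product id and rating, and add a word count column.
rows = [
    {"asin": r["asin"], "overall": r["overall"], "words": len(r["reviewText"].split())}
    for r in with_text
]


def flag_outliers(values, threshold=2.0):
    """Return one boolean per value: True where the value lies more than
    `threshold` population standard deviations away from the mean."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # All values are identical, so nothing stands out.
        return [False] * len(values)
    return [abs(v - mu) / sigma > threshold for v in values]


flags = flag_outliers([row["words"] for row in rows])
outliers = [row["asin"] for row, is_out in zip(rows, flags) if is_out]
print(outliers)  # prints ['B010']
```

A z-score rule needs a reasonable number of rows to be meaningful: with n values, no point can lie more than sqrt(n - 1) population standard deviations from the mean, so on very small samples a threshold of 2 may never fire.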

The example dataset we will use is the Amazon Electronics reviews dataset:

R. He and J. McAuley, "Ups and downs: Modeling the visual evolution of fashion trends with one-class collaborative filtering," WWW, 2016. Dataset: http://jmcauley.ucsd.edu/data/amazon/

To make things smoother and avoid installation woes, I created a Docker container that has everything we need for this workshop pre-installed, kept separate from the rest of your system.

Please follow the instructions below to start up the container:

-

If you don't have a Docker ID account yet, go to Docker Hub and create an account.

-

Install Docker. On Mac OS X, you can download Docker for Mac for an easy-to-install desktop app.

-

Open Docker and enter your Docker Hub credentials.

-

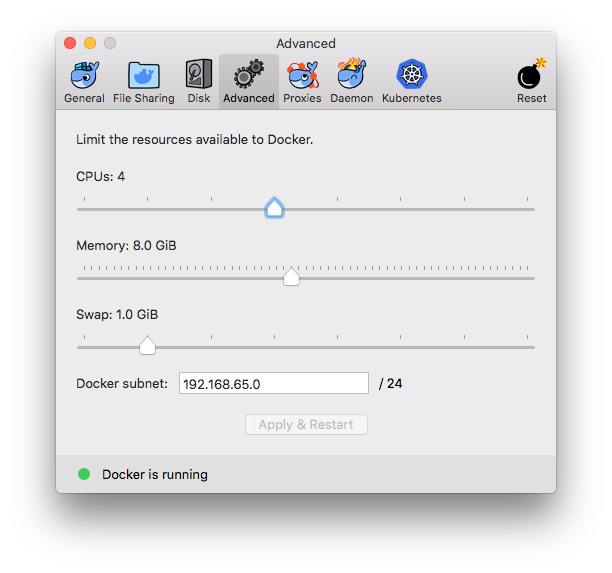

Click on the Docker app icon and select "Preferences...". Under "Advanced", increase the memory available to containers to 8.0 GB.

- Clone this repository using

git clone:

$ git clone https://github.com/stefano-meschiari/spark_workshop.git

- Open a terminal, navigate to the

spark_workshopdirectory, and run:

$ sh run.sh

from a terminal to download a JSON dataset and start the Docker container.

-

You should be able to navigate to

http://0.0.0.0:8889with your browser and see a Jupyter notebook instance. The password isspark. -

You can exit the Docker session using

Ctrl+C.

If step 6 fails with the error `unauthorized: incorrect username or password.`, run

`$ docker login`

and enter your Docker Hub credentials (username and password; note that your username is not your email address).
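Once `run.sh` has downloaded the dataset, it can help to peek at a few records before loading them into Spark. The sketch below assumes the file is in JSON Lines format (one JSON object per line), which is also the layout Spark's `spark.read.json` expects by default; this README does not state the file's name or exact layout, so both the format and the field names in the sample are assumptions. The helper `peek_json_lines` is hypothetical and reads from any text stream, so it is demonstrated on an in-memory sample.

```python
import io
import json


def peek_json_lines(stream, n=3):
    """Parse and return the first n non-blank lines of a JSON Lines stream.
    Assumes one complete JSON object per line."""
    if n <= 0:
        return []
    records = []
    for line in stream:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between records
        records.append(json.loads(line))
        if len(records) == n:
            break  # stop early instead of reading the whole file
    return records


# In-memory stand-in for the downloaded file; the field names are assumed.
sample = io.StringIO(
    '{"asin": "B001", "overall": 5.0}\n'
    "\n"
    '{"asin": "B002", "overall": 1.0}\n'
    '{"asin": "B003", "overall": 4.0}\n'
)
first_two = peek_json_lines(sample, n=2)
print(first_two)  # prints [{'asin': 'B001', 'overall': 5.0}, {'asin': 'B002', 'overall': 1.0}]
```

With the real file you would pass an open handle instead, for example `open(path, encoding="utf-8")`, or `gzip.open(path, "rt")` if the download is gzip-compressed; `path` is a placeholder here.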