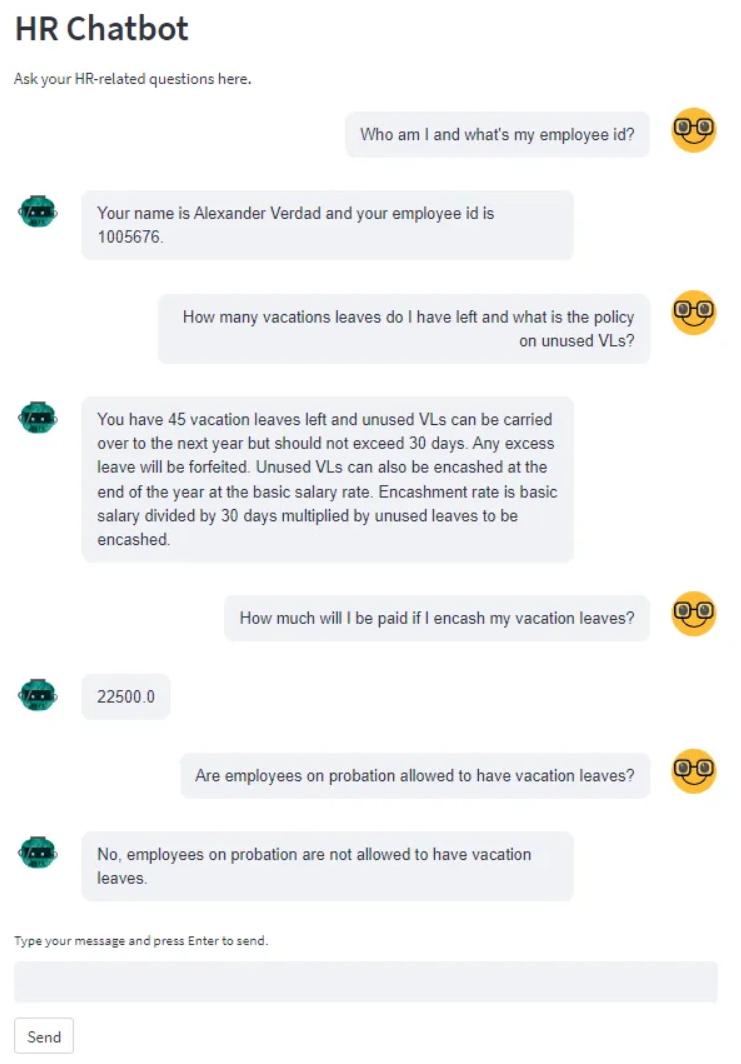

Companion Reading: Creating a (mostly) Autonomous HR Assistant with ChatGPT and LangChain’s Agents and Tools

This is a prototype enterprise application: an autonomous agent that answers HR queries using the tools it has on hand. It was built with LangChain's agents and tools modules, uses Pinecone as the vector database, and is powered by ChatGPT (gpt-3.5-turbo). The front end is Streamlit with the streamlit_chat component.
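To make the agent-and-tools idea concrete, here is a minimal, dependency-free sketch of the loop such an agent runs: decide on a tool, call it, look at the result, and repeat until there is an answer. This is not LangChain's implementation. `Tool`, `run_agent`, and `scripted_decide` are hypothetical names, and `scripted_decide` is a hard-coded stand-in for the call to the LLM.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Tool:
    name: str
    description: str            # the text a model would read when choosing a tool
    func: Callable[[str], str]  # takes the tool input, returns an observation


def scripted_decide(question: str, observations: List[str]) -> Tuple[str, str, str]:
    """Hypothetical stand-in for the LLM call.

    Returns ("tool", tool_name, tool_input) or ("final", "", answer).
    """
    if not observations:
        return ("tool", "Employee Data", "leave balance for Alice")
    return ("final", "", f"Alice has {observations[-1]} days of leave left.")


def run_agent(question: str, tools: List[Tool], decide, max_steps: int = 5) -> str:
    """Loop: ask the decider what to do, run the chosen tool, feed back the result."""
    registry = {tool.name: tool for tool in tools}
    observations: List[str] = []
    for _ in range(max_steps):
        kind, name, payload = decide(question, observations)
        if kind == "final":
            return payload
        observations.append(registry[name].func(payload))
    return "Stopped: step limit reached."


# A fake tool that always reports 12; the real tools are described below.
tools = [Tool("Employee Data", "Look up dummy employee records.", lambda q: "12")]
answer = run_agent("How many leaves does Alice have?", tools, scripted_decide)
print(answer)
```

In the actual app the decision step is made by gpt-3.5-turbo through LangChain's agent, which reads each tool's description to pick one.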

Tools:

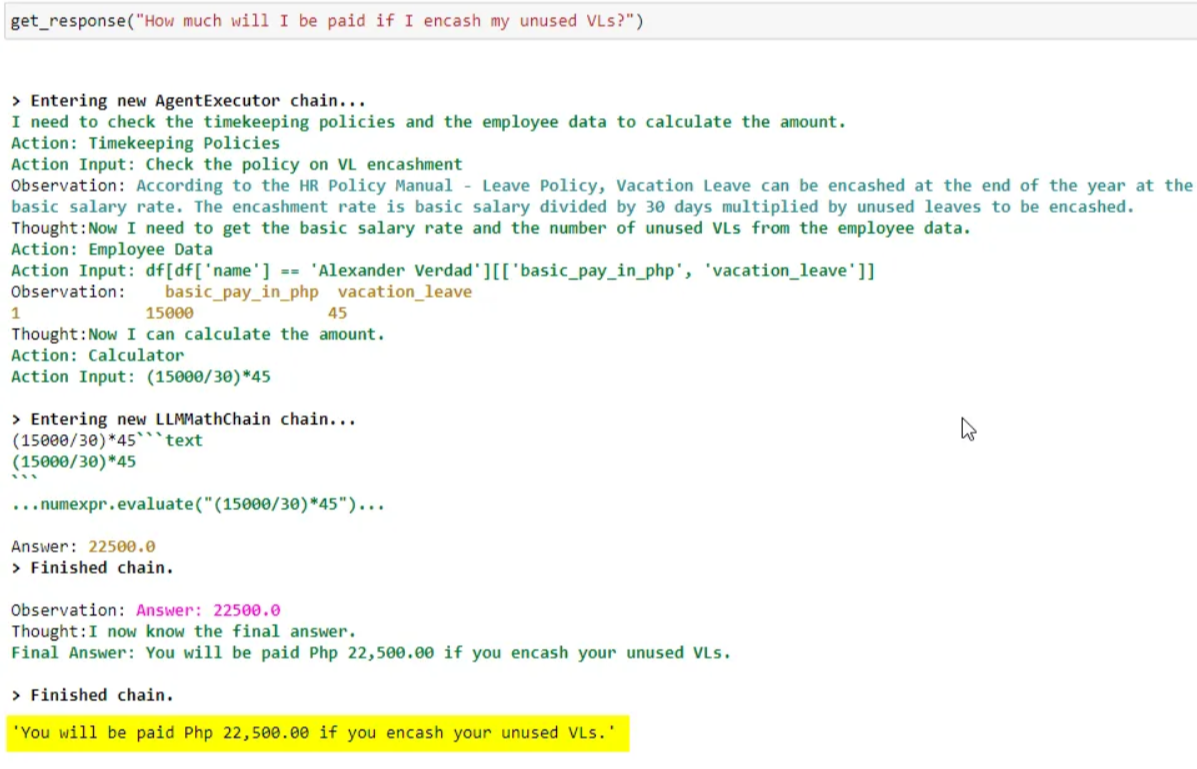

- Timekeeping Policies - A ChatGPT-generated sample HR policy document. Embeddings were created for this doc using OpenAI’s text-embedding-ada-002 model and stored in a Pinecone index.

- Employee Data - A CSV file containing dummy employee data (e.g. name, supervisor, # of leaves, etc.). It's loaded as a pandas dataframe and manipulated by the LLM using LangChain's PythonAstREPLTool.

- Calculator - This is LangChain's calculator chain module, LLMMathChain.
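The Employee Data tool works by letting the model write a short Python snippet that is then executed against the loaded data. The sketch below illustrates that pattern in a simplified form using only the standard library: it parses the snippet with `ast`, runs every statement except the last, and returns the value of the final expression. It is an approximation for illustration, not LangChain's code. The sample rows, column names, and the `run_snippet` helper are made up, and a list of dicts from the `csv` module stands in for the pandas dataframe.

```python
import ast
import csv
import io

# Made-up rows standing in for the dummy employee CSV (column names are assumptions).
SAMPLE_CSV = """name,supervisor,leaves
Alice,Carol,12
Bob,Carol,7
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))


def run_snippet(code: str, variables: dict):
    """Run all statements but the last, then return the value of the last expression."""
    tree = ast.parse(code)
    if not tree.body:
        return None
    *setup, last = tree.body
    env = dict(variables)  # copy so the caller's dict is not mutated
    exec(compile(ast.Module(body=setup, type_ignores=[]), "<snippet>", "exec"), env)
    if isinstance(last, ast.Expr):
        return eval(compile(ast.Expression(body=last.value), "<snippet>", "eval"), env)
    # The last statement is not an expression (e.g. an assignment): just run it.
    exec(compile(ast.Module(body=[last], type_ignores=[]), "<snippet>", "exec"), env)
    return None


# The kind of code a model might generate for "How many leaves does Alice have?"
generated = "alice = [r for r in rows if r['name'] == 'Alice']\nint(alice[0]['leaves'])"
result = run_snippet(generated, {"rows": rows})
print(result)
```

Note that `exec` and `eval` run arbitrary code, so a tool like this should only be pointed at data and environments where that is acceptable.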

- Install Python 3.10 (Windows, Mac).

- Clone the repo to a local directory.

- Navigate to the local directory and run this command in your terminal to install all prerequisite modules: `pip install -r requirements.txt`

- Input your own API keys in the `hr_agent_backend_local.py` file (or `hr_agent_backend_azure.py` if you want to use the Azure version; just uncomment it in the frontend.py file).

- Run `streamlit run hr_agent_frontent.py` in your terminal.

- Create a Pinecone account at pinecone.io (there is a free tier). Take note of the Pinecone API key and environment values.

- Run the notebook 'store_embeddings_in_pinecone.ipynb'. Replace the Pinecone and OpenAI API keys (for the embedding model) with your own.
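The steps above have you paste API keys directly into the backend file and the notebook. If you would rather not keep keys in source files, one option is to read them from environment variables instead. The sketch below shows that approach. The variable names and the `load_keys` helper are hypothetical and are not part of this repo, so adapt them to whatever the backend file expects.

```python
import os

# Hypothetical variable names; the repo's backend files may use different ones.
REQUIRED_KEYS = ("OPENAI_API_KEY", "PINECONE_API_KEY", "PINECONE_ENVIRONMENT")


def load_keys(environ=os.environ):
    """Return the required settings, or raise an error listing every missing one."""
    missing = [key for key in REQUIRED_KEYS if not environ.get(key)]
    if missing:
        raise RuntimeError("Missing settings: " + ", ".join(missing))
    return {key: environ[key] for key in REQUIRED_KEYS}


# Demo with a fake environment so the example runs without real credentials.
demo = load_keys({
    "OPENAI_API_KEY": "sk-demo",
    "PINECONE_API_KEY": "pc-demo",
    "PINECONE_ENVIRONMENT": "demo-env",
})
print(sorted(demo))
```

Failing early with the full list of missing settings is easier to debug than an authentication error halfway through a request.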

Azure OpenAI Service - the OpenAI service offering for Azure customers.

LangChain - development framework for building apps around LLMs.

Pinecone - the vector database for storing the embeddings.

Streamlit - used for the front end. Lightweight framework for deploying Python web apps.

Azure Data Lake - for landing the employee data CSV files. Any other cloud storage should work just as well (blob, S3, etc.).

Azure Data Factory - used to create the data pipeline.

SAP HCM - the source system for employee data.

Feel free to connect with me on:

LinkedIn: https://www.linkedin.com/in/stephenbonifacio/

Twitter: https://twitter.com/Stepanogil