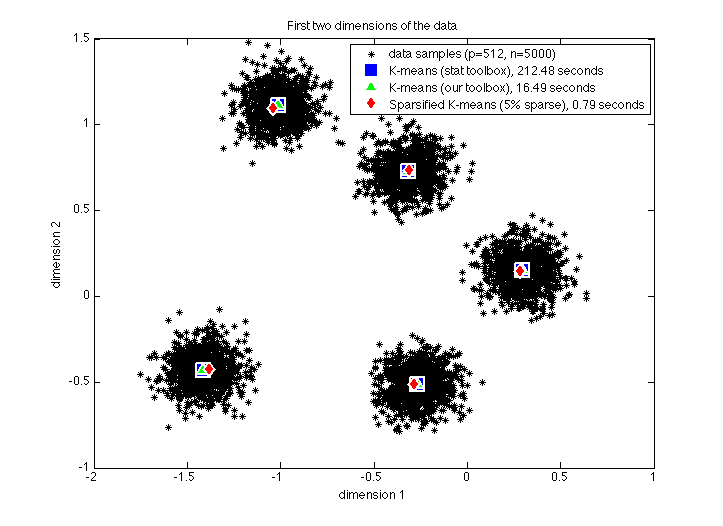

A Matlab implementation of KMeans for big data using preconditioning and sparsification. It uses the KMeans clustering algorithm (also known as Lloyd's Algorithm or "K Means" or "K-Means") but first preconditions and then subsamples the data, which gives significant (and tunable) savings in computation time and memory.

The code provides kmeans_sparsified which is used much like the kmeans function from the Statistics toolbox in Matlab.
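As a point of reference, here is a typical call to the Statistics toolbox `kmeans`. Since `kmeans_sparsified` is described as being used in much the same way, a call to it presumably looks similar, but its exact argument list and data orientation are not stated here, so treat the commented line at the end as an assumption and check the function's help text. The data is synthetic and purely illustrative.

```matlab
% Synthetic data: two well-separated Gaussian blobs in 2-D (illustrative only).
rng(0);                                  % fix the seed for reproducibility
n = 200;                                 % points per cluster
X = [randn(n, 2); randn(n, 2) + 10];     % rows are observations (toolbox convention)
K = 2;                                   % number of clusters

% Statistics toolbox call: idx(i) is the cluster assigned to row i of X,
% and C holds the K cluster centers, one per row.
[idx, C] = kmeans(X, K, 'Replicates', 5);

% Assumption: kmeans_sparsified accepts similar arguments. Its actual
% signature is not given in this README, so consult its help text first:
%   help kmeans_sparsified
```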

There are three benefits:

- The basic implementation is much faster than the Statistics toolbox version. It also offers a few modern options that the toolbox version lacks; e.g., K-means++ initialization. (Update: since 2015, Matlab has improved the speed of its routine and its initialization, and its version and ours are now comparable.)

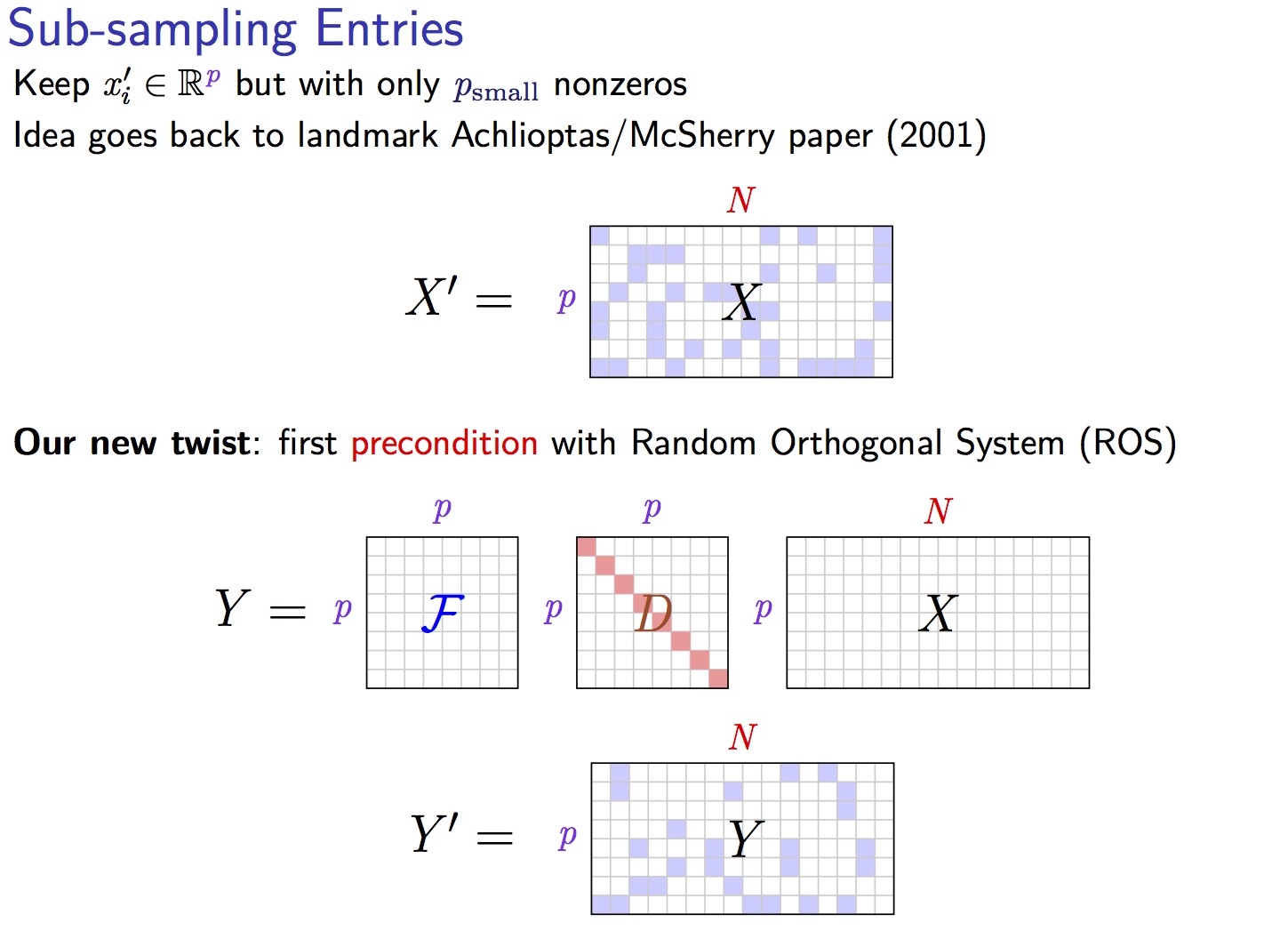

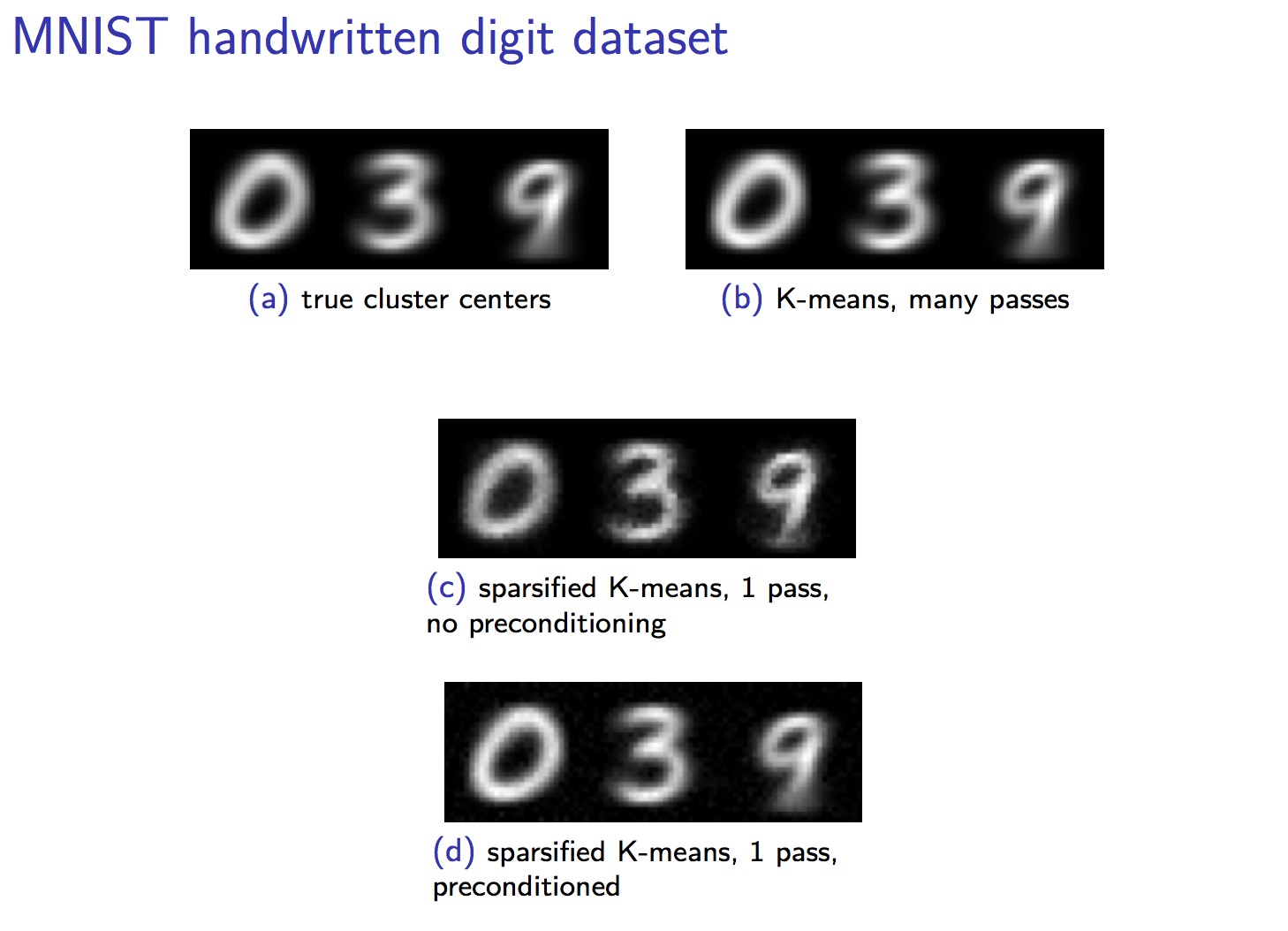

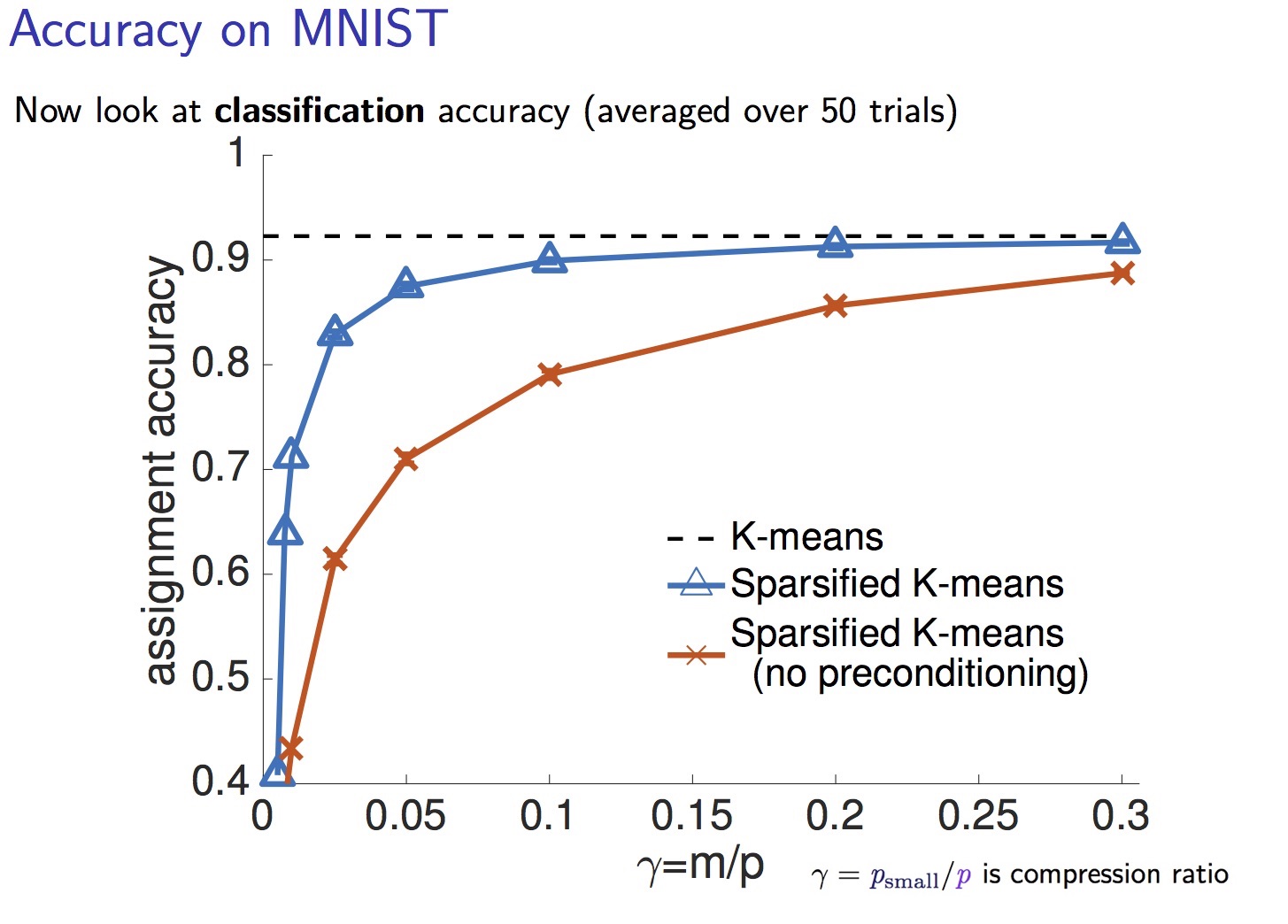

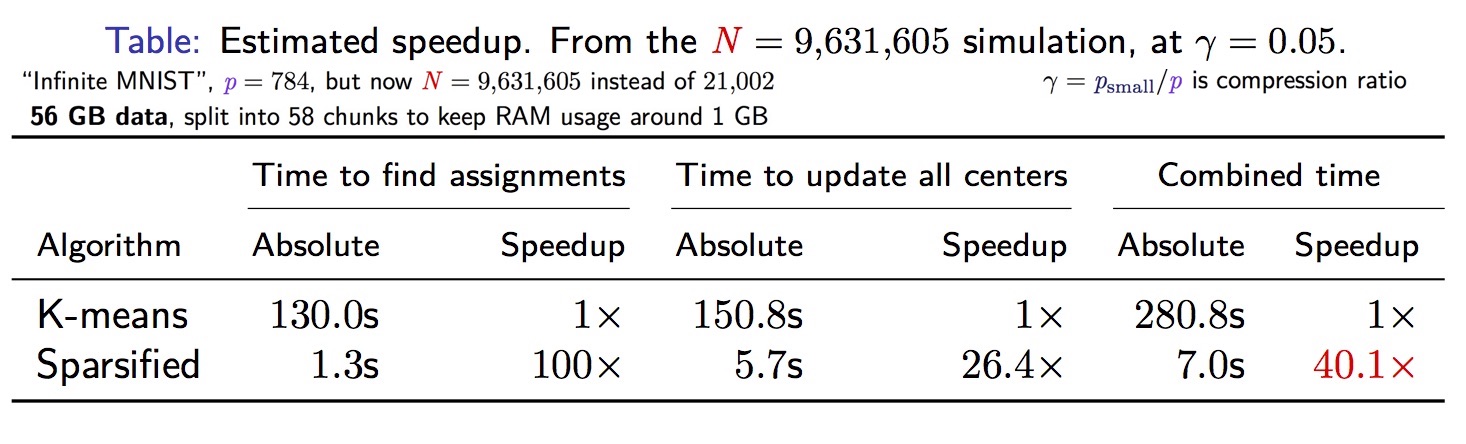

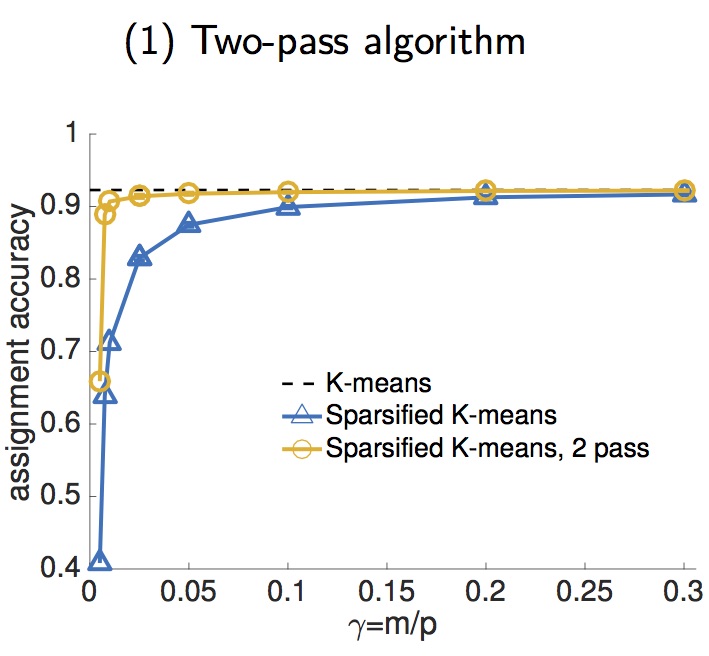

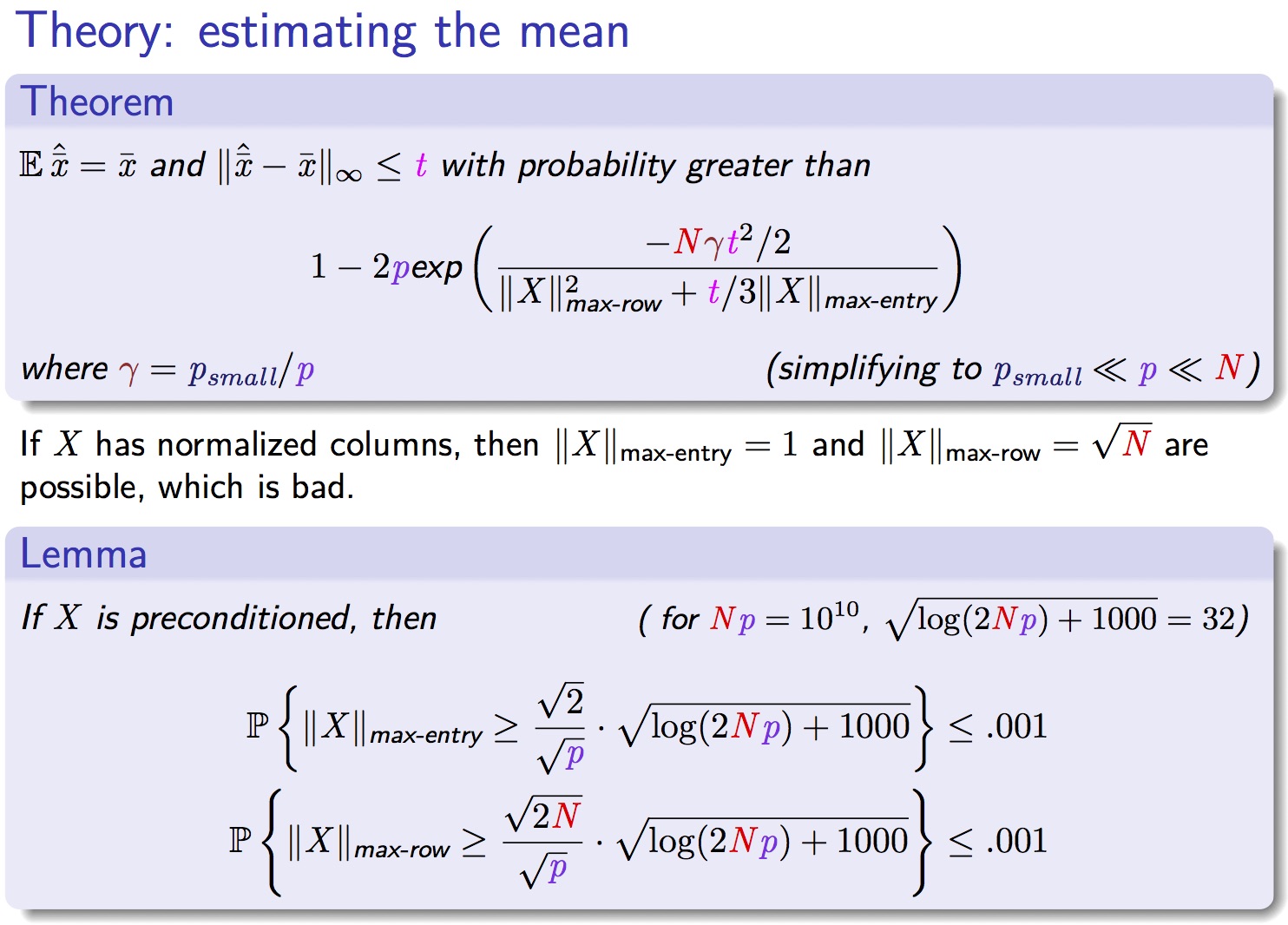

- We have a new variant, called sparsified KMeans, that preconditions and then samples the data. This version can be thousands of times faster and is designed for big data sets that are otherwise unmanageable.

- The code also allows a big-data option. Instead of passing in a matrix of data, you give it the location of a .mat file, and the code will break the data into chunks. This is useful when the data is, say, 10 TB and your computer only has 6 GB of RAM. The data is loaded in smaller chunks (e.g., less than 6 GB); each chunk is preconditioned, sampled, and then discarded from RAM before the next chunk is processed. The entire algorithm makes a single pass over the dataset.
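The last two points can be illustrated with a rough sketch of one-pass, chunked preconditioning and sampling. This is not the package's implementation: the variable names, the chunk size, the number of kept entries `m`, and the choice of a Hadamard transform with random sign flips as the preconditioner are all assumptions made for illustration. It only uses standard Matlab functions (`matfile`, `hadamard`, `randperm`, `sparse`).

```matlab
% Write a small synthetic data set to disk so the sketch is self-contained.
rng(1);
p = 64;  n = 1000;                 % p features (a power of 2), n samples as columns
X = randn(p, n);
fname = [tempname, '.mat'];
save(fname, 'X', '-v7.3');         % v7.3 files support partial loading via matfile
clear X

% Assumed preconditioner: random sign flips, then an orthonormal Hadamard transform.
signs = 2 * (rand(p, 1) > 0.5) - 1;    % random +1/-1 entries
H = hadamard(p) / sqrt(p);             % orthonormal p-by-p matrix

m = 8;                             % entries kept per sample (hypothetical setting)
chunkSize = 250;                   % samples loaded per chunk (hypothetical setting)

mf = matfile(fname);               % handle to the file; nothing is loaded yet
dims = size(mf, 'X');
nTotal = dims(2);
rows = zeros(m, nTotal);           % row indices of the kept entries
vals = zeros(m, nTotal);           % values of the kept entries

for first = 1:chunkSize:nTotal     % a single pass over the data
    last = min(first + chunkSize - 1, nTotal);
    chunk = mf.X(:, first:last);                % load only this chunk into RAM
    Y = H * bsxfun(@times, signs, chunk);       % precondition the chunk
    for j = 1:(last - first + 1)
        keep = randperm(p, m);                  % m distinct coordinates, uniformly
        rows(:, first + j - 1) = keep(:);
        vals(:, first + j - 1) = Y(keep, j);
    end
    % chunk and Y are overwritten on the next iteration, so only the
    % sampled entries stay in memory.
end

% Assemble the sampled entries into one sparse p-by-nTotal matrix.
cols = repmat(1:nTotal, m, 1);
S = sparse(rows(:), cols(:), vals(:), p, nTotal);
delete(fname);                     % remove the temporary file
```

Each column of `S` keeps only `m` of its `p` preconditioned entries, so storage drops by roughly a factor of `p/m`. Any estimate computed from `S` would need to account for the sampling, for instance by rescaling by `p/m`; that step is left out of this sketch.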

Note: if you use our code in an academic paper, we would appreciate it if you cite us: "Preconditioned Data Sparsification for Big Data with Applications to PCA and K-means", F. Pourkamali Anaraki and S. Becker, IEEE Trans. Info. Theory, 2017.

For moderate to large data, we believe this is one of the fastest ways to run k-means. For extremely large data that cannot all fit into core memory of your computer, we believe there are almost no good alternatives (in theory and practice) to this code.

Every time you start a new Matlab session, run setup_kmeans and it will correctly set the paths. The first time you run it, it may also compile some mex files; for this, you need a valid C compiler (see http://www.mathworks.com/support/compilers/R2015a/index.html).
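Before the first run, it can help to confirm that Matlab has a C compiler selected for mex. The snippet below is a minimal sketch using the standard `mex.getCompilerConfigurations` function; the directory in the commented lines is a hypothetical placeholder, not a path defined by this package.

```matlab
% List the C compiler MATLAB has selected for mex (empty if none is configured).
cc = mex.getCompilerConfigurations('C', 'Selected');
if isempty(cc)
    disp('No C compiler selected; run "mex -setup C" to choose one.');
else
    fprintf('Using C compiler: %s\n', cc.Name);
end

% Then, from the directory containing this package (hypothetical path):
%   cd('path/to/this/package');
%   setup_kmeans
```

If you would rather not run `setup_kmeans` by hand in every session, one option is to call it from your `startup.m` file, which Matlab runs automatically at startup.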

Current version is 2.1

- Prof. Stephen Becker, University of Colorado Boulder (Applied Mathematics)

- Prof. Farhad Pourkamali Anaraki, University of Massachusetts Lowell (Computer Science)

Preconditioned Data Sparsification for Big Data with Applications to PCA and K-means, F. Pourkamali Anaraki and S. Becker, IEEE Trans. Info. Theory, 2017. See also the arXiv version

Bibtex:

    @article{SparsifiedKmeans,
      title   = {Preconditioned Data Sparsification for Big Data with Applications to {PCA} and {K}-means},
      author  = {Pourkamali-Anaraki, F. and Becker, S.},
      year    = 2017,
      doi     = {10.1109/TIT.2017.2672725},
      journal = {IEEE Trans. Info. Theory},
      volume  = 63,
      number  = 5,
      pages   = {2954--2974}
    }

- sparsekmeans by Eric Kightley is our joint project to implement the algorithm in Python, with support for out-of-memory operation. sparseklearn generalizes this idea to other types of machine learning algorithms (also in Python).

Some images are taken from the paper or from presentation slides; see the journal paper for full explanations.