As of 2015, there is no formal definition of the term "Big Data." This causes a good deal of confusion, even as it presents an exciting new field. Some may be led to believe they are working with big data when they are in fact working with small data.

We find a similar issue with the term "green" in the field of sustainability, which also lacks any standard definition. And just as something may be claimed to be green, big data may be subject to the equivalent of greenwashing, where organizations use the term solely as a buzzword.

One common definition uses the three V's, as described by Doug Laney in a 2001 article:

- Volume: data is too big to work with on a single machine.

- Velocity: data is too dynamic and is being created at too high a rate (e.g. tweets per second) for a single machine to handle.

- Variety: data comes in many different forms (e.g. unstructured data, NoSQL databases, etc.).

The first two points are relative to processing power and the latest technology in personal computing, so the absolute volume or velocity that identifies Big Data will increase over time. This definition of Big Data is therefore fluid and influenced by Moore's Law.

If you have all three cases, you are dealing with big data under this common definition. If you have only one case, it is likely you are still dealing with big data.

Examples having an instance of only one of the three V's:

| Case | Example |
|---|---|
| Volume | Genetics (structured, high volume of data) |
| Velocity | Earthquake detection data (e.g. streaming data, not necessarily stored) |
| Variety | Facial recognition data (may be static) |

Another way to define big data is by contrast with small data, as described by Jules Berman in Principles of Big Data:

| Small Data | Big Data |
|---|---|
| Gathered for a specific goal | May or may not have a goal initially, or the goal may change with time |
| Exists in one location, often in a single file | Can be in multiple files and across multiple machines in different geographic locations |
| Highly structured (e.g. spreadsheet, CSV) | May be unstructured, in a variety of different formats, and multidisciplinary |
| Prepared by the end user for their own purposes | May be prepared by one group, analyzed by a second, and used by a third group |
| Kept for a finite time frame for a project with a clear endpoint (maybe 5 or 10 years in government or academia) | May be kept indefinitely or with an uncertain lifespan |
| Data measured or recorded with a single protocol and set of units | Data may be collected using a variety of protocols and units throughout different geographical regions |
| Can typically be reproduced | May not be reproducible |
| Cost of failure or losing data is small | Cost of failure or losing data can be high |
| Data describes itself in an important way | May have to clean data thoroughly and filter out bogus information |
| Can analyze all the data at once from a single file or machine | May have to deal with portions of data and aggregate results |
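
The last row of the table, dealing with portions of data and aggregating the results, can be illustrated with a minimal Python sketch. It is not taken from Berman's book. The function names (`chunks`, `partial_stats`, `aggregate_mean`, `chunked_mean`) and the chunk size are hypothetical, chosen only for illustration. The idea is that each portion is reduced to a small summary (a sum and a count), and only those summaries are combined, so the full data set never has to sit in memory at once.

```python
from typing import Iterable, Iterator, List, Tuple


def chunks(values: Iterable[float], size: int) -> Iterator[List[float]]:
    """Yield successive lists of at most `size` values (hypothetical helper)."""
    chunk: List[float] = []
    for v in values:
        chunk.append(v)
        if len(chunk) == size:
            yield chunk
            chunk = []
    if chunk:  # emit the final, possibly shorter, portion
        yield chunk


def partial_stats(chunk: List[float]) -> Tuple[float, int]:
    """Reduce one portion of the data to a small summary: (sum, count)."""
    return sum(chunk), len(chunk)


def aggregate_mean(partials: Iterable[Tuple[float, int]]) -> float:
    """Combine per-portion summaries into an overall mean."""
    total = 0.0
    count = 0
    for s, n in partials:
        total += s
        count += n
    if count == 0:
        raise ValueError("no data to aggregate")
    return total / count


def chunked_mean(values: Iterable[float], size: int) -> float:
    """Mean of `values`, computed one portion at a time."""
    return aggregate_mean(partial_stats(c) for c in chunks(values, size))


if __name__ == "__main__":
    # Ten values processed three at a time; only one portion is held at once.
    print(chunked_mean(range(1, 11), 3))
```

In a real setting the portions might live in separate files or on separate machines, and the per-portion summaries would be computed where the data resides. The sketch assumes a statistic, such as the mean, that can be rebuilt exactly from per-portion summaries. Others, such as the median, cannot be combined this simply.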

- Human: data initiated by a human such as social network posts, cell phone calls, online purchases, and the metadata created alongside such data.

- Machine: communication between machines that may never be seen by humans (e.g. the Internet of Things).

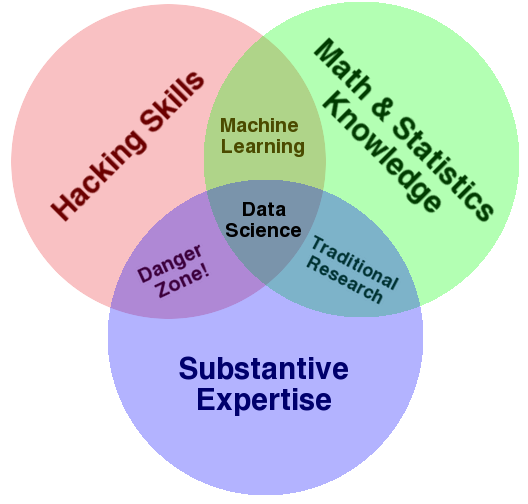

Harlan Harris’s clustering and visualization of subfields of data science, from Analyzing the Analyzers (O’Reilly) by Harlan Harris, Sean Murphy, and Marck Vaisman, based on a survey of several hundred data science practitioners in mid-2012.