This repository contains scripts for load testing various Python task queue libraries.

The purpose of these tests is to give a general sense of the performance and scalability of different task queue systems under heavy load.

Additionally, I am exploring how to use each library as a user: how easy it is to set up, how intuitive its API is, and what the overall experience with its CLI is like.

Note that these tests are not rigorous evaluations but exploratory assessments, meant to give a general feel for how each system performs.

The task queue libraries in this benchmark:

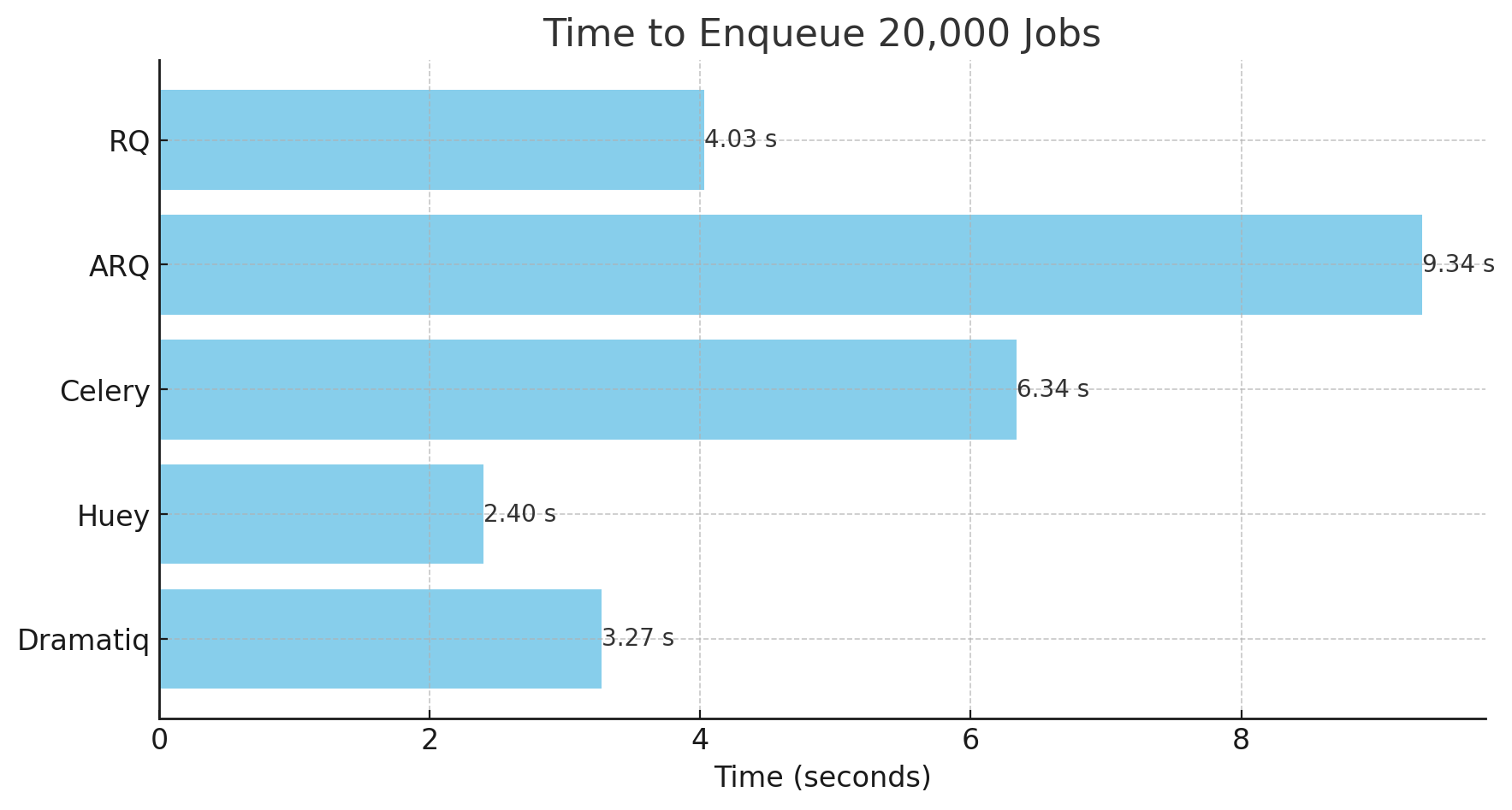

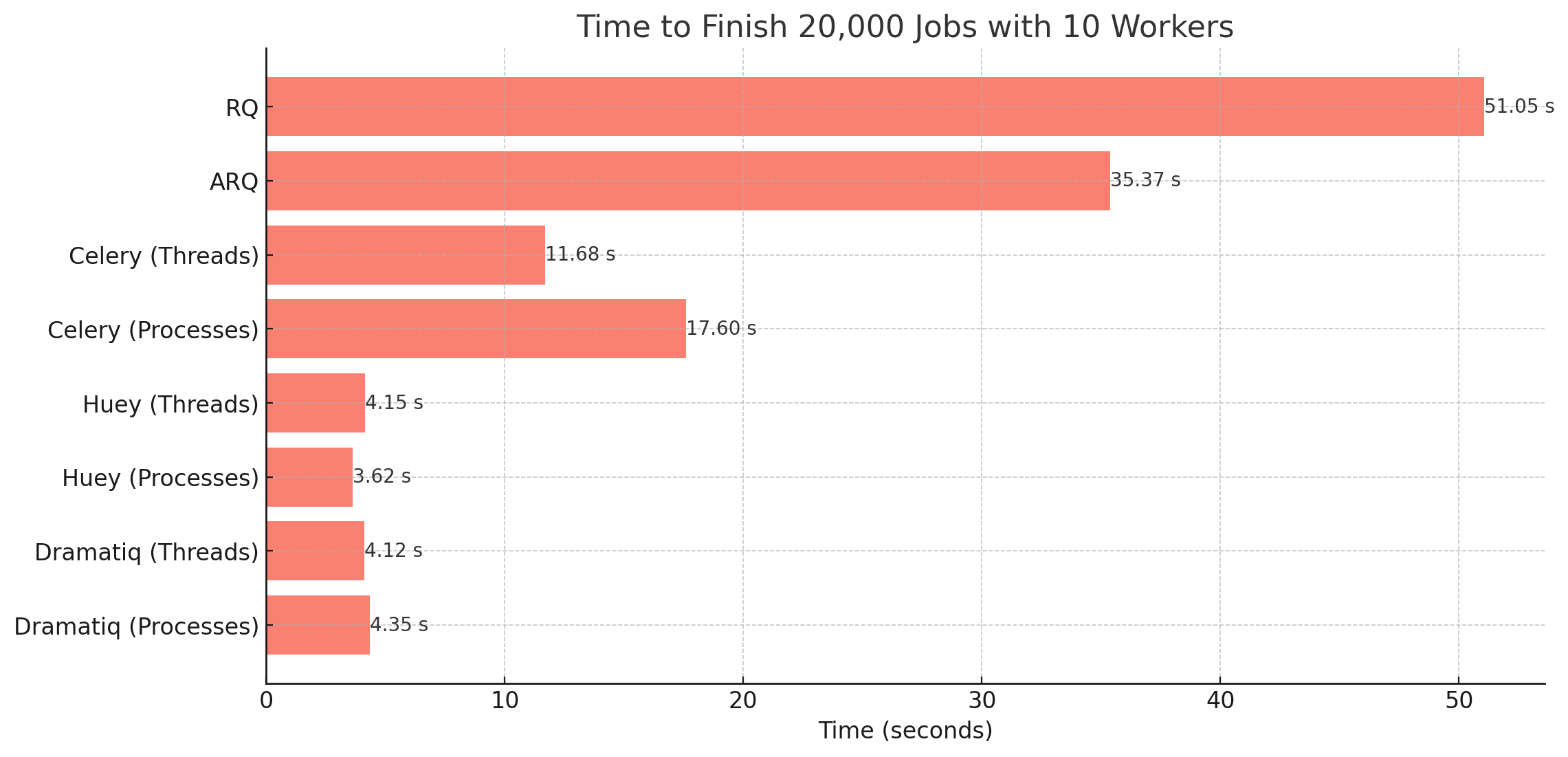

The benchmark is very naive: it measures the total time 10 workers take to process 20,000 jobs. Each job simply prints the current time.
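For comparison, the same workload can be run with no broker at all. The sketch below is not part of this repository. It assumes an in-process thread pool is a fair stand-in for "10 workers" and uses the hypothetical names `print_current_time` and `run_in_process_baseline`. It submits 20,000 jobs that each print the current time and measures the wall-clock total, which gives a rough sense of how much of each library's time comes from the queue itself.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def print_current_time() -> float:
    """Hypothetical stand-in for the benchmark job: print the current time and return it."""
    now = time.time()
    print(now)
    return now


def run_in_process_baseline(n_jobs: int = 20_000, n_workers: int = 10) -> float:
    """Run n_jobs jobs on a pool of n_workers threads and return the elapsed seconds."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        futures = [pool.submit(print_current_time) for _ in range(n_jobs)]
        for future in futures:
            future.result()  # re-raises any exception raised inside a job
    return time.perf_counter() - start


if __name__ == "__main__":
    n_jobs = 20_000
    elapsed = run_in_process_baseline(n_jobs=n_jobs, n_workers=10)
    print(f"Processed {n_jobs} jobs in {elapsed:.2f} seconds")
```

This baseline skips serialization, network round trips and acknowledgements, so it is only a reference point. It does not represent what any of the libraries could achieve.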

All tests were conducted on a 14-inch MacBook Pro with an M2 Pro chip and 16 GB of RAM.

Before running the tests, ensure you have Docker installed. Start Redis using Docker Compose:

```shell
docker compose up
```

Install pdm, and then run:

```shell
pdm install
source .venv/bin/activate
```

- Navigate to the RQ directory:

  ```shell
  cd rq
  ```

- Enqueue the jobs:

  ```shell
  python enqueue_jobs.py
  ```

- Process the jobs:

  ```shell
  python -c "import time; print(f'Start time: {time.time()}')" > time.log && rq worker-pool -q -n 10
  ```

- Record the time when the last job finishes and note the start time in `time.log`.
- Calculate the difference.

- All jobs enqueued in 4.03 seconds.
- With 10 workers, all jobs finished in 51.05 seconds.
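The last two steps are done by hand here and repeat for every library. A minimal sketch for automating the subtraction is shown below. It assumes the end time is the timestamp printed by the last job in the worker output, because each job prints the current time. The script name `elapsed.py` and the functions `parse_start_time` and `elapsed_seconds` are hypothetical.

```python
import re
import sys

# Matches the line written by: python -c "import time; print(f'Start time: {time.time()}')"
START_LINE = re.compile(r"Start time:\s*(\d+(?:\.\d+)?)")


def parse_start_time(log_text: str) -> float:
    """Extract the Unix start timestamp from the contents of time.log."""
    match = START_LINE.search(log_text)
    if match is None:
        raise ValueError("no 'Start time: <timestamp>' line found")
    return float(match.group(1))


def elapsed_seconds(log_text: str, end_time: float) -> float:
    """Seconds between the recorded start time and the last job's timestamp."""
    return end_time - parse_start_time(log_text)


if __name__ == "__main__":
    # Hypothetical usage: python elapsed.py time.log <timestamp printed by the last job>
    with open(sys.argv[1]) as log_file:
        text = log_file.read()
    print(f"Elapsed: {elapsed_seconds(text, float(sys.argv[2])):.2f} seconds")
```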

- Navigate to the ARQ directory:

  ```shell
  cd arq
  ```

- Enqueue the jobs:

  ```shell
  python enqueue_jobs.py
  ```

- ARQ has no built-in support for running multiple workers, so we use supervisord:

  ```shell
  python -c "import time; print(f'Start time: {time.time()}')" > time.log && supervisord -c supervisord.conf
  ```

- Record the time when the last job finishes and note the start time in `time.log`.
- Calculate the difference.

- All jobs enqueued in 9.34 seconds.
- With 10 workers, all jobs finished in 35.37 seconds.
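supervisord is used here only to start 10 copies of the ARQ worker. The sketch below does the same job with nothing but `subprocess`, as a lighter alternative for local runs. It is not the repository's setup. The settings path `tasks.WorkerSettings` is a hypothetical placeholder for whatever worker settings class the `arq` directory actually defines.

```python
import subprocess
import sys
from typing import List, Sequence


def start_workers(command: Sequence[str], count: int = 10) -> List[subprocess.Popen]:
    """Start `count` independent copies of `command`, similar to supervisord's numprocs."""
    return [subprocess.Popen(list(command)) for _ in range(count)]


def wait_for_workers(procs: List[subprocess.Popen]) -> List[int]:
    """Block until every worker process exits and return their exit codes."""
    return [proc.wait() for proc in procs]


if __name__ == "__main__":
    # Usage: python start_workers.py arq tasks.WorkerSettings
    # "tasks.WorkerSettings" is a hypothetical default; pass the real settings path.
    worker_command = sys.argv[1:] or ["arq", "tasks.WorkerSettings"]
    workers = start_workers(worker_command, count=10)
    try:
        wait_for_workers(workers)
    except KeyboardInterrupt:
        # On Ctrl+C, ask every worker to stop, then wait for them to exit.
        for worker in workers:
            worker.terminate()
        wait_for_workers(workers)
```

Unlike supervisord, this does not restart crashed workers or manage logs. For a one-off benchmark that trade-off may be acceptable.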

- Navigate to the Celery directory:

  ```shell
  cd celery
  ```

- Enqueue the jobs:

  ```shell
  python enqueue_jobs.py
  ```

- Process the jobs:

  ```shell
  python -c "import time; print(f'Start time: {time.time()}')" > time.log && celery -q --skip-checks -A tasks worker --loglevel=info -c 10 -P threads
  ```

- Record the time when the last job finishes and note the start time in `time.log`.
- Calculate the difference.

- All jobs enqueued in 6.34 seconds.
- With 10 thread workers, all jobs finished in 11.68 seconds.
- With 10 process workers (`-P processes`), all jobs finished in 17.60 seconds.

- Navigate to the Huey directory:

  ```shell
  cd huey
  ```

- Enqueue the jobs:

  ```shell
  python enqueue_jobs.py
  ```

- Process the jobs:

  ```shell
  python -c "import time; print(f'Start time: {time.time()}')" > time.log && huey_consumer.py tasks.huey -k thread -w 10 -d 0.001 -C -q
  ```

- Record the time when the last job finishes and note the start time in `time.log`.
- Calculate the difference.

- All jobs enqueued in 2.40 seconds.
- With 10 thread workers, all jobs finished in 4.15 seconds.
- With 10 process workers (`-k process -w 10 -d 0.001 -C -q`), all jobs finished in 3.62 seconds.

- Navigate to the Dramatiq directory:

  ```shell
  cd dramatiq
  ```

- Enqueue the jobs:

  ```shell
  python enqueue_jobs.py
  ```

- Process the jobs:

  ```shell
  python -c "import time; print(f'Start time: {time.time()}')" > time.log && dramatiq tasks -p 1 -t 10
  ```

- Record the time when the last job finishes and note the start time in `time.log`.
- Calculate the difference.

- All jobs enqueued in 3.27 seconds.
- With 1 process and 10 threads, all jobs finished in 4.12 seconds.
- With 10 processes and 1 thread each, all jobs finished in 4.35 seconds.
- With the default settings (10 processes with 8 threads each, so 80 worker threads rather than 10), all jobs finished in 1.53 seconds.
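To compare the libraries on one scale, the processing times above can be converted to throughput (jobs per second = 20,000 / seconds). The sketch below uses only the numbers reported in this README. It excludes enqueue time, and the labels are hypothetical. The Dramatiq default run uses 80 worker threads, so its row is not directly comparable to the 10-worker rows.

```python
N_JOBS = 20_000

# Processing times in seconds, copied from the results above (enqueue time excluded).
RESULTS = {
    "RQ (10 workers)": 51.05,
    "ARQ (10 workers via supervisord)": 35.37,
    "Celery (10 threads)": 11.68,
    "Celery (10 processes)": 17.60,
    "Huey (10 threads)": 4.15,
    "Huey (10 processes)": 3.62,
    "Dramatiq (1 process x 10 threads)": 4.12,
    "Dramatiq (10 processes x 1 thread)": 4.35,
    "Dramatiq (default: 10 processes x 8 threads)": 1.53,
}


def throughput(n_jobs: int, seconds: float) -> float:
    """Jobs processed per second."""
    return n_jobs / seconds


def ranked(results: dict, n_jobs: int = N_JOBS) -> list:
    """Return (label, jobs per second) pairs sorted from fastest to slowest."""
    rows = [(label, throughput(n_jobs, seconds)) for label, seconds in results.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for label, rate in ranked(RESULTS):
        print(f"{label:<45} {rate:>8.0f} jobs/s")
```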

Planned improvements:

- Get the finish times from the results stored in the queue, so that the whole process can be automated.
- Test with a larger number of jobs, and verify failure and error handling.
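The first item might look like the sketch below once the results are fetched. It assumes each stored result can be reduced to a finish timestamp and a success flag. `JobResult`, `RunSummary` and `summarize` are hypothetical names. Fetching the results is left out because each library exposes its result backend differently.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class JobResult:
    # Hypothetical normalized record; each library stores results in its own format.
    finished_at: float  # Unix timestamp when the job finished
    succeeded: bool


@dataclass
class RunSummary:
    total: int
    failed: int
    elapsed: Optional[float]  # None when there are no results


def summarize(start_time: float, results: Iterable[JobResult]) -> RunSummary:
    """Count failures and measure elapsed time up to the last finished job (failed or not)."""
    results = list(results)
    failed = sum(1 for result in results if not result.succeeded)
    finish_times = [result.finished_at for result in results]
    elapsed = max(finish_times) - start_time if finish_times else None
    return RunSummary(total=len(results), failed=failed, elapsed=elapsed)
```

Reporting the failure count alongside the elapsed time would also cover the second item, since a fast run that silently dropped jobs would otherwise look like a win.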