Diverse Image Generation via Self-Conditioned GANs

Steven Liu, Tongzhou Wang, David Bau, Jun-Yan Zhu, Antonio Torralba

MIT, Adobe Research

in CVPR 2020.

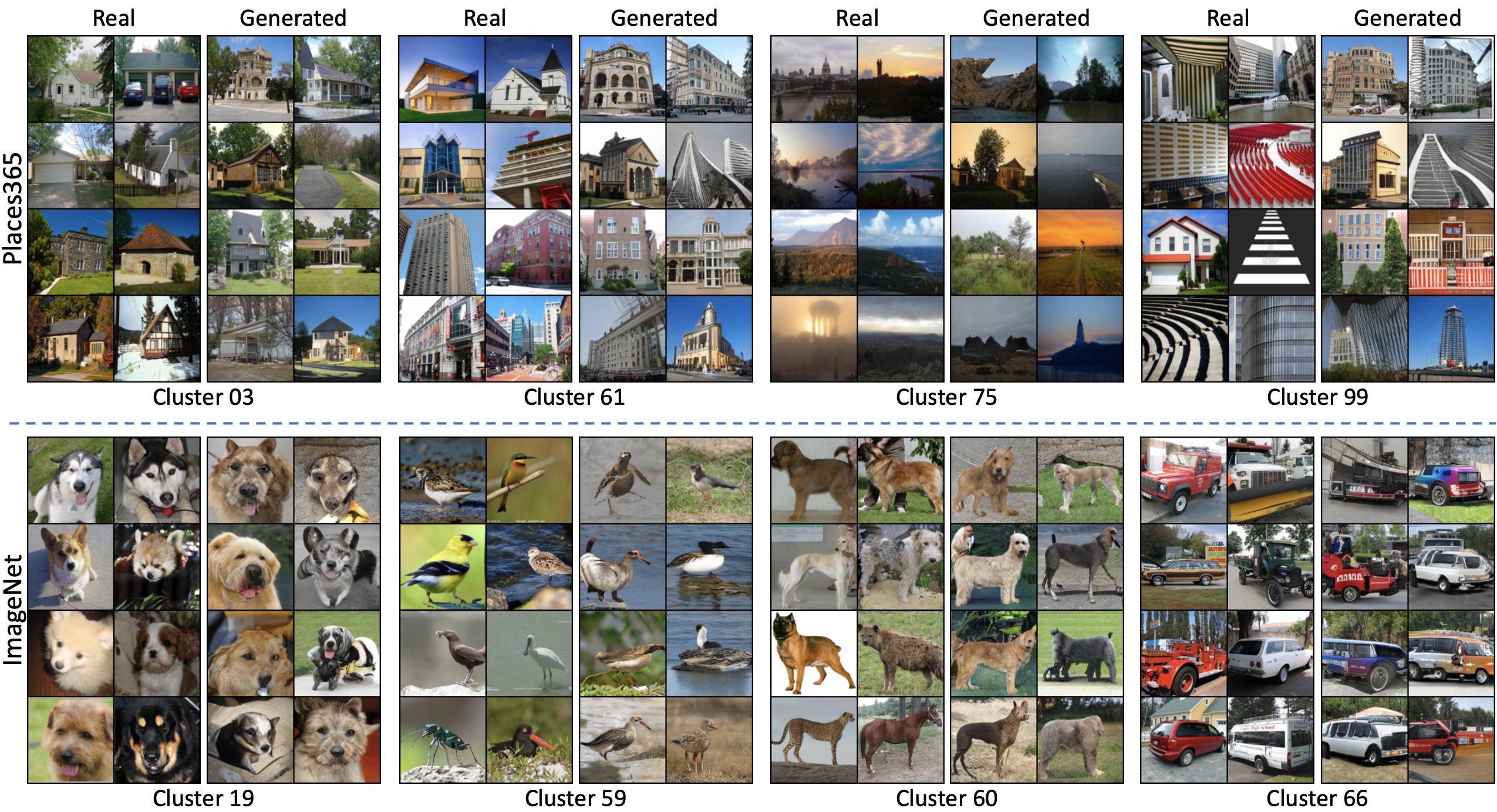

Our proposed self-conditioned GAN model learns to perform clustering and image synthesis simultaneously. The model training requires no manual annotation of object classes. Here, we visualize several discovered clusters for both Places365 (top) and ImageNet (bottom). For each cluster, we show both real images and the generated samples conditioned on the cluster index.

- Clone this repo:

git clone https://github.com/stevliu/self-conditioned-gan.git

cd self-conditioned-gan

- Install the dependencies:

conda create --name selfcondgan python=3.6

conda activate selfcondgan

conda install --file requirements.txt

conda install -c conda-forge tensorboardx

- Train a model on CIFAR:

python train.py configs/cifar/selfcondgan.yaml

- Visualize samples and inferred clusters:

python visualize_clusters.py configs/cifar/selfcondgan.yaml --show_clusters

The samples and clusters will be saved to output/cifar/selfcondgan/clusters. If this directory lies on an Apache server, you can open the URL to output/cifar/selfcondgan/clusters/+lightbox.html in the browser and view all samples and clusters on one webpage.

- Evaluate the model's FID. You will first need to gather a set of ground-truth training-set images to compute metrics against:

python utils/get_gt_imgs.py --cifar

python metrics.py configs/cifar/selfcondgan.yaml --fid --every -1

You can also evaluate with other metrics by appending additional flags, such as Inception Score (--inception), the number of covered modes and reverse-KL divergence (--modes), and cluster metrics (--cluster_metrics).
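To make the --modes metric more concrete, here is a minimal sketch of how the number of covered modes and a reverse-KL divergence could be computed from class labels that a pretrained classifier assigns to generated samples. It is not the code in metrics.py: the function name `mode_stats`, the `min_count` threshold, the uniform real-data distribution, and the KL direction (generated relative to real) are all assumptions made for illustration.

```python
import math
from collections import Counter


def mode_stats(predicted_labels, num_modes, min_count=1):
    """Return (modes_covered, reverse_kl) for labels of generated samples.

    Assumptions (hypothetical, not taken from the repository):
    - the real data is uniform over `num_modes` classes;
    - a mode is "covered" once at least `min_count` samples land in it;
    - reverse KL means KL(generated || real).
    """
    counts = Counter(predicted_labels)
    total = len(predicted_labels)
    if total == 0:
        raise ValueError("need at least one generated sample")

    covered = sum(1 for k in range(num_modes) if counts.get(k, 0) >= min_count)

    real_prob = 1.0 / num_modes
    reverse_kl = 0.0
    for k in range(num_modes):
        gen_prob = counts.get(k, 0) / total
        if gen_prob > 0:  # terms with zero generated mass contribute nothing
            reverse_kl += gen_prob * math.log(gen_prob / real_prob)
    return covered, reverse_kl
```

A generator that covers every class equally gives a divergence of zero, while one that collapses onto a few classes gives fewer covered modes and a larger divergence.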

You can load and evaluate pretrained models on ImageNet and Places. If you have access to ImageNet or Places directories, first fill in paths to your ImageNet and/or Places dataset directories in configs/imagenet/default.yaml and configs/places/default.yaml respectively. You can use the following config files with the evaluation scripts, and the code will automatically download the appropriate models.

configs/pretrained/imagenet/selfcondgan.yaml

configs/pretrained/places/selfcondgan.yaml

configs/pretrained/imagenet/conditional.yaml

configs/pretrained/places/conditional.yaml

configs/pretrained/imagenet/baseline.yaml

configs/pretrained/places/baseline.yaml

To visualize generated samples and inferred clusters, run

python visualize_clusters.py config-file

You can set the flag --show_clusters to also visualize the real inferred clusters, but this requires that you have a path to training set images.

To obtain generation metrics, fill in paths to your ImageNet or Places dataset directories in utils/get_gt_imgs.py and then run

python utils/get_gt_imgs.py --imagenet --places

to precompute batches of GT images for FID/FSD evaluation.

Then, you can use

python metrics.py config-file

with the appropriate flags to compute the FID (--fid), FSD (--fsd), IS (--inception), number of modes covered and reverse-KL divergence (--modes), and clustering metrics (--cluster_metrics) for each of the checkpoints.
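If you want to evaluate several pretrained configs in one go, a small helper can assemble the command lines. The sketch below only builds and prints the commands; it uses the config paths and metric flags listed above, while the helper name `build_metrics_command` and the choice of flags in the example loop are hypothetical.

```python
# Config files and flags as listed in this README.
PRETRAINED_CONFIGS = [
    "configs/pretrained/imagenet/selfcondgan.yaml",
    "configs/pretrained/places/selfcondgan.yaml",
    "configs/pretrained/imagenet/conditional.yaml",
    "configs/pretrained/places/conditional.yaml",
    "configs/pretrained/imagenet/baseline.yaml",
    "configs/pretrained/places/baseline.yaml",
]
METRIC_FLAGS = ["--fid", "--fsd", "--inception", "--modes", "--cluster_metrics"]


def build_metrics_command(config_path, flags):
    """Return the argument list for one metrics.py run (hypothetical helper)."""
    unknown = [flag for flag in flags if flag not in METRIC_FLAGS]
    if unknown:
        raise ValueError("unknown metric flags: {}".format(unknown))
    return ["python", "metrics.py", config_path] + list(flags)


if __name__ == "__main__":
    # Print one command per config; paste them into a shell or pass the
    # argument lists to subprocess.run from the repository root.
    for config in PRETRAINED_CONFIGS:
        print(" ".join(build_metrics_command(config, ["--fid", "--fsd"])))
```

Printing instead of executing keeps the sketch safe to run anywhere; whether every flag is meaningful for every config (for example, cluster metrics for the baseline) is something to check against the code.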

To train a model, set up a configuration file (examples in /configs), and run

python train.py config-file

An example config for a self-conditioned GAN on ImageNet is configs/imagenet/selfcondgan.yaml, and on Places is configs/places/selfcondgan.yaml.

Some models may be too large to fit on one GPU, so you may want to add --devices DEVICE_NUMBERS as an additional flag to train on multiple GPUs.

For synthetic dataset experiments, first go into the 2d_mix directory.

To train a self-conditioned GAN on the 2D-ring and 2D-grid dataset, run

python train.py --clusterer selfcondgan --data_type ring

python train.py --clusterer selfcondgan --data_type grid

You can test several other configurations via the command line arguments.
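For readers unfamiliar with these synthetic datasets, the sketch below shows one common way to construct 2D-ring and 2D-grid Gaussian mixtures. It is not the data loader in 2d_mix: the function names, the number of modes (8 on the ring, 5 x 5 on the grid), the spacing, and the standard deviation are assumptions chosen for illustration.

```python
import math
import random


def ring_centers(num_modes=8, radius=1.0):
    """Mode centers evenly spaced on a circle (mode count is an assumption)."""
    return [
        (radius * math.cos(2 * math.pi * i / num_modes),
         radius * math.sin(2 * math.pi * i / num_modes))
        for i in range(num_modes)
    ]


def grid_centers(side=5, spacing=1.0):
    """Mode centers on a side x side grid centered at the origin."""
    offset = (side - 1) * spacing / 2
    return [
        (i * spacing - offset, j * spacing - offset)
        for i in range(side)
        for j in range(side)
    ]


def sample_mixture(centers, num_samples, std=0.05, seed=0):
    """Draw points from an equal-weight mixture of isotropic Gaussians.

    Returns (points, labels), where labels[i] is the index of the mode
    that generated points[i].
    """
    rng = random.Random(seed)
    points, labels = [], []
    for _ in range(num_samples):
        k = rng.randrange(len(centers))
        cx, cy = centers[k]
        points.append((rng.gauss(cx, std), rng.gauss(cy, std)))
        labels.append(k)
    return points, labels
```

Because every sample carries the index of its generating mode, such toy data makes it easy to check how many modes a trained generator actually reaches.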

This code is heavily based on the GAN-stability code base. Our FSD code is taken from the GANseeing work. To compute Inception Score, we use the code provided by Shichang Tang. To compute FID, we use the code provided by TTUR. We also use pretrained classifiers from pytorch-playground.

We thank all the authors for their useful code.

If you use this code for your research, please cite the following work.

@inproceedings{liu2020selfconditioned,

title={Diverse Image Generation via Self-Conditioned GANs},

author={Liu, Steven and Wang, Tongzhou and Bau, David and Zhu, Jun-Yan and Torralba, Antonio},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2020}

}