Chen Zhang*, S.T.S Luo*, Jason Chun Lok Li, Yik-Chung Wu, Ngai Wong

[Paper] | [Project Page]

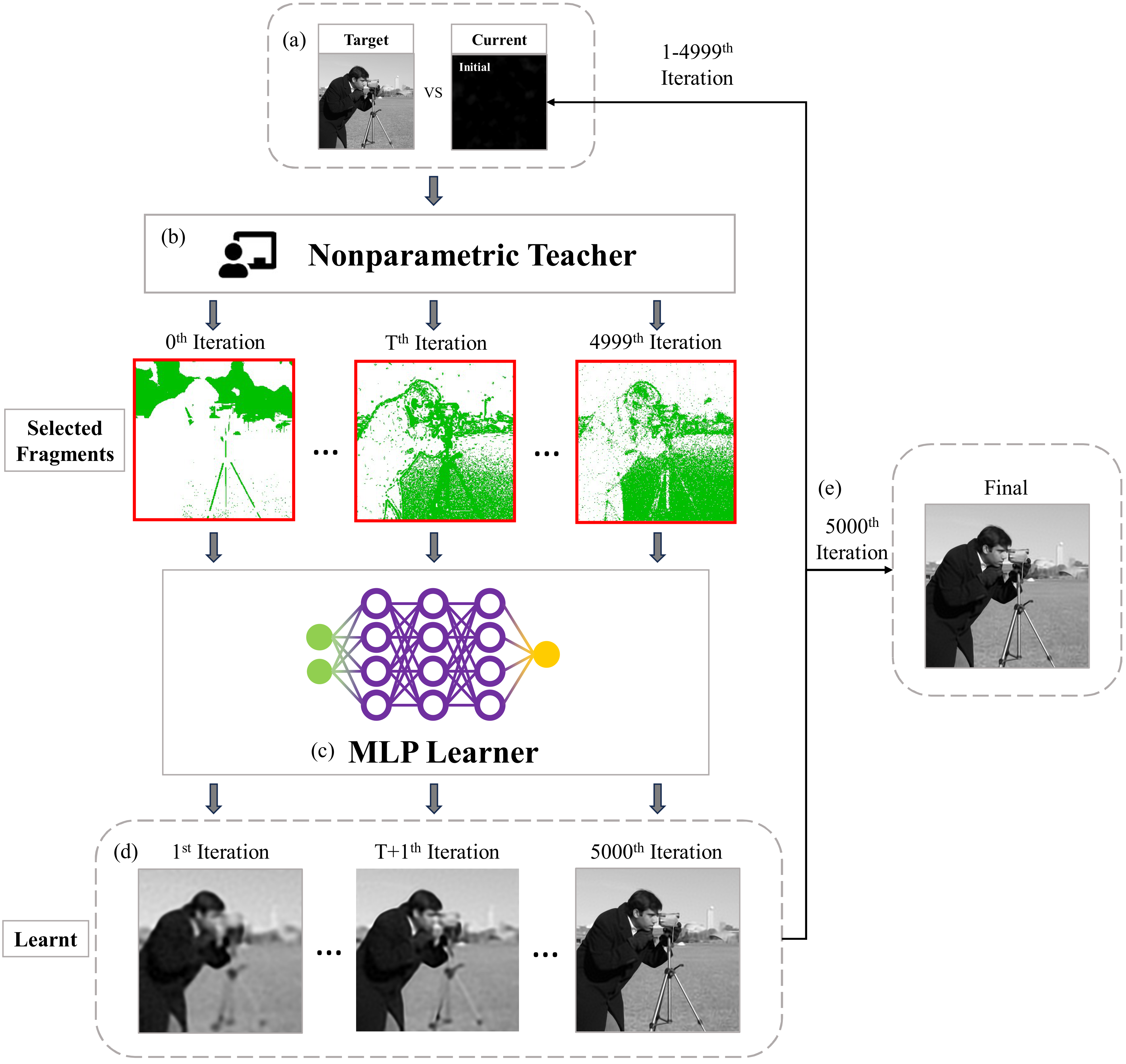

This is the official PyTorch implementation of the paper Nonparametric Teaching of Implicit Neural Representations, which introduces the INT algorithm for implicit neural representations (INRs).

Install dependencies

conda env create -f environment.yml

conda activate NMT

Install the NMT package

python setup.py install

For usage details, see the README in src/ and the docstrings of the NMT class in src/nmt.py.

The train_<data>.py files are the training scripts for the data modalities reported in the paper, and the bash scripts in scripts/ produce the reported results. The configuration files (model config, experiment config, etc.) are stored as .yaml files in config/. This repository does not include configurations for every reported experiment; to reproduce a specific result, refer to the paper and amend the configuration files accordingly.

Optimized NMT algorithm

- If mt ratio > ??, run a single forward pass (with gradients) over all samples and use only the selected subset of gradients for the backward pass.

- If mt ratio < ??, run one forward pass (without gradients) over all samples to select the subset, then run a second forward pass (with gradients) on that subset only for the backward pass.
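The two branches above can be sketched in plain PyTorch as follows. This is a minimal illustration under stated assumptions, not the code in src/nmt.py: it assumes the mt ratio is the fraction of samples selected per step, that selection picks the samples with the largest per-sample squared error, and that a single `threshold` separates the branches (the value is left as ?? above, so the one used here is a placeholder). The helper names `select_topk`, `step_single_pass`, `step_two_pass`, and `train_step` are hypothetical.

```python
import torch
import torch.nn as nn


def per_sample_error(pred, target):
    """Mean squared error per sample, shape (N,)."""
    return ((pred - target) ** 2).mean(dim=-1)


def select_topk(err, mt_ratio):
    """Indices of the k = mt_ratio * N samples with the largest error.
    Top-k by error is an assumed selection criterion for this sketch."""
    k = max(1, int(mt_ratio * err.numel()))
    return torch.topk(err, k).indices


def step_single_pass(model, x, y, mt_ratio):
    """High-ratio branch: one forward pass with gradients over all samples.
    Only the selected samples enter the loss, so only they produce gradients."""
    err = per_sample_error(model(x), y)
    idx = select_topk(err.detach(), mt_ratio)
    return err[idx].mean(), idx


def step_two_pass(model, x, y, mt_ratio):
    """Low-ratio branch: a gradient-free pass scores all samples, then a
    second pass with gradients runs on the selected subset only."""
    with torch.no_grad():
        err = per_sample_error(model(x), y)
    idx = select_topk(err, mt_ratio)
    loss = per_sample_error(model(x[idx]), y[idx]).mean()
    return loss, idx


def train_step(model, optimizer, x, y, mt_ratio, threshold):
    """Dispatch on the mt ratio; `threshold` is a placeholder value."""
    step = step_single_pass if mt_ratio > threshold else step_two_pass
    loss, idx = step(model, x, y, mt_ratio)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()
    return loss.item(), idx


torch.manual_seed(0)
# Toy INR: 2-D coordinates -> 3 output channels.
model = nn.Sequential(nn.Linear(2, 32), nn.ReLU(), nn.Linear(32, 3))
x, y = torch.rand(256, 2), torch.rand(256, 3)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
loss_value, selected = train_step(model, optimizer, x, y, mt_ratio=0.25, threshold=0.5)
```

In this sketch both branches yield the same loss and gradients for a given model state; they differ only in cost. The single-pass branch stores activations for every sample, while the two-pass branch repeats the forward computation for the selected subset but keeps activations for that subset alone, which is cheaper when the subset is small.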

Related work that gives a deeper understanding of INT:

[NeurIPS 2023] Nonparametric Teaching for Multiple Learners,

[ICML 2023] Nonparametric Iterative Machine Teaching.

If you find our work useful in your research, please cite:

@InProceedings{zhang2024ntinr,

title={Nonparametric Teaching of Implicit Neural Representations},

author={Zhang, Chen and Luo, Steven and Li, Jason and Wu, Yik-Chung and Wong, Ngai},

booktitle={ICML},

year={2024}

}

If you have any questions, please feel free to email the authors.