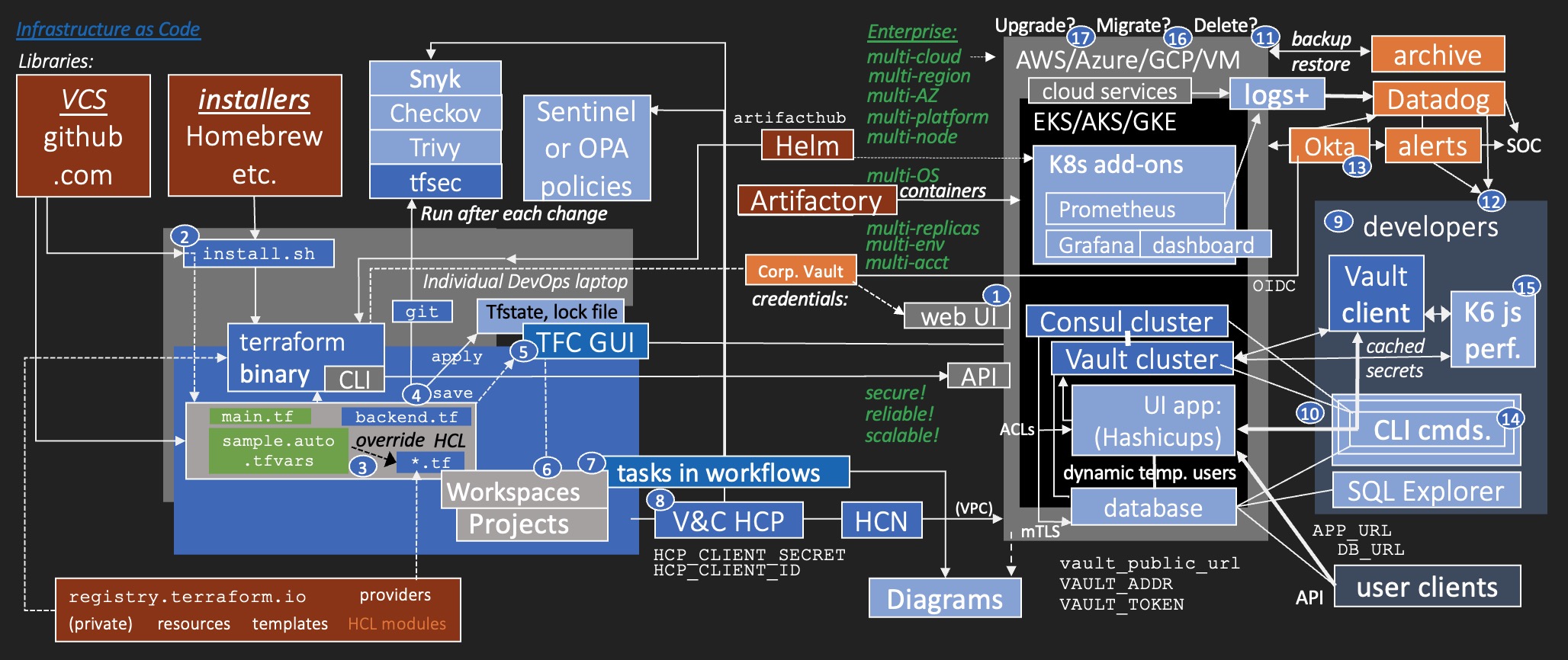

Click image below to watch a gradual reveal video, covered in text below, one concept at a time.

Yes, there is a lot involved in this flowchart, which introduces ALL the key components of an entire enterprise production environment containing high-availability Kubernetes clusters (with add-ons) that interface with external systems.

But the focus here is on automation so you don't need to manually code every detail about every resource.

Numbered bubbles on the flowchart mark the sequence of manual tasks described in the text below.

Green text identifies attributes about enterprise usage.

A web UI (browser web User Interface) is provided by each Cloud Service Provider (CSP) for cloud administrators to create and manage infrastructure in their cloud. Each cloud service provider has a different way to create and manage credentials to access each of their cloud environments.

PROTIP: Resiliency often means having multiple options for achieving each capability.

TODO: Recent surveys reveal that a large percentage of enterprises -- through acquisition or by design to meet customer demand for network resilience -- find that they need to operate across multiple clouds.

- Case studies (such as VIDEO: Q2) find that multi-cloud "reduces operational complexity" while achieving more flexibility and resilience.

Also, many enterprises now make use of a central corporate IdP (Identity Provider) system such as Azure AD or Okta to authenticate human users accessing corporate-owned networks and applications. Such systems provide MFA (MultiFactor Authentication) mechanisms to make users prove they still are in control of their email, mobile phone, or other authentication device.

Due to the security principle of "segregation of duties", many organizations now have separate teams: one to manage the corporate IdP system, an SRE team to manage the cloud infrastructure, and yet another team to manage a central corporate Vault system used to create temporary time-limited credentials instead of static long-term credentials which can be stolen.

A corporate Vault system usually coordinates with IdP systems using OIDC (OpenID Connect, from the OpenID Foundation) and other protocols.

Each cloud provider provides its own mechanisms to achieve HA (High Availability) across multiple geographical Regions around the world, each housing multiple AZs (Availability Zones), in case one of them fails.

PROTIP: This is done because the cost of potential downtime is much higher than the cost of redundancy.

In typical enterprise production scenarios, multiple accounts are necessary to segregate permissions to dev vs. production environments, to reduce the "blast radius" in case credentials for an account are stolen.

PROTIP: All the different clouds, regions, accounts, and environments make it cumbersome to manually switch among them in a browser web UI. And manual changes are not easily repeatable because of human error.

In fact, many organizations discourage use of cloud vendor web UI, and only allow use of versioned Infrastructure as Code (IaC) stored in Version Control Systems such as GitHub as the preferred way to create and manage IT infrastructure.

NOTE: In this presentation, boxes in brown are libraries that provide files referenced by custom code.

When properly structured, defining infrastructure as code enables easier and more reliable re-creation of resources with less manual effort and thus with less toil and human error.

Infrastructure as Code leverages APIs (Application Programming Interfaces) that each cloud vendor has defined so external programs can create and manage its infrastructure instead of people using a web UI.

The most common custom infrastructure as code language is HCL (HashiCorp Configuration Language) (Terraform code) defined in .tf files.

To process HCL code, download and install a Terraform binary executable program which turns CLI (Command Line Interface) commands into API calls which create and manage infrastructure resources.

That program can be installed on DevOps Platform Engineers' individual laptops by running an installer shell script which also installs all other utilities needed from Homebrew or other install package managers.

BTW: The Terraform binary is written in Go, so can be run on Linux, macOS, or Windows without additional installs (such as needed with Java, Python, C, etc.).

The install script can also clone onto the laptop GitHub repos containing custom Terraform code.

HashiCorp works with cloud vendors to create Terraform providers that HashiCorp's Terraform binary uses when it verifies credentials and turns Terraform HCL into API calls that create resources.

Provider logic is stored in and retrieved from https://registry.terraform.io.

Each cloud vendor offers their own networking, compute, storage, and other cloud services. The logic for handling each cloud service is stored in a module in registry.terraform.io.

Optionally, code template files and the resources they reference are also stored in HashiCorp's registry.

PROTIP: This modularity enables repeatability and collaboration.

Those with Terraform Enterprise licenses can create private modules in the Registry.

Different modules reduce the complexity during creation of platforms such as Kubernetes:

- EKS (Elastic Kubernetes Service) in AWS

- AKS (Azure Kubernetes Service)

- GKE (Google Kubernetes Engine)

When managed by a central team, modules provide a standardized way to create and manage infrastructure resources across platforms and cloud vendors.

PROTIP: API calls among cloud vendors can be very different. However, Terraform providers and Terraform binary commands standardize operation across all clouds.

Each HCL module contains variables with default values that can be overridden by customized values in main.tf or sample.auto.tfvars files, thus controlling what features and services you would like to deploy for your specific use case.

For example, the number of nodes within a Kubernetes multi-node cluster can be configured by an edit.

Within each cloud Kubernetes service, Kubernetes add-ons and plug-ins are installed using charts from Helm, the de facto package manager for Kubernetes.

Popular add-ons from https://artifacthub.io/ include the open-source Prometheus to gather metrics into its time-series database, and Grafana to visualize metrics in a custom dashboard.

Common add-ons add functionality such as "external-dns" to automate the creation of DNS records for services.

PROTIP: Using infrastructure-as-code makes it more practical to test different components together to ensure that a particular combination of versions all work together well.

The specific operating system used within Kubernetes is set when container images are built (using Packer), then stored for access within Artifactory or another container registry.

HashiCorp designed its Vault and Consul utilities with modern mechanisms to be highly available (HA) within a cluster of Kubernetes nodes. HashiCorp's apps can also be configured for large capacity by use of multiple replicas which can span several regions around the world to serve high loads.

UI apps (such as the sample Hashicups or counting service) can be deployed with a database within Kubernetes or outside of Kubernetes. Either way, the app can reference a Vault service to obtain from the database a new dynamic (temporary) user credential for each new connection. This is a common pattern for how Vault can eliminate static long-term credentials that can be stolen.

PROTIP: To distribute secrets between services, Vault can use its unique "AppRole" authentication method from a Vault one-time access Cubbyhole.

HashiCorp Consul can ensure that communications be encrypted during transit using mTLS protocol within a "service mesh", both within and outside of Kubernetes. Consul can also enforce "intentions" about which service can communicate with specific other services, with enforcement of ACLs (Access Control Lists) to ensure that only authorized services can access the Consul service.

Also due to organizational divisions and to limit "cognitive load" on individuals, common utility systems are usually established by separate groups using separate repositories:

- An Archival solution to back up and restore data

- A SIEM (Security Information and Event Management) solution such as Splunk, Datadog, etc. which retrieves, ingests, aggregates, and correlates various logs from various services into analytic dashboards

- An Alerting solution which evaluates data within SIEM to issue alerts, and escalations when alerts are not acknowledged in a timely manner.

In many enterprises, a corporate-wide central SOC (Security Operations Center) reviews alerts to monitor, triage, and respond to anomalies and threats.

Identity Providers can also provide alerts about repetitive failures in Single-Sign-On (SSO) identity verification.

When a Terraform HCL file is saved using Git, after each change, it can be set to trigger run of additional utilities such as tfsec, Trivy, Checkov, Snyk, etc. to scan HCL files for vulnerabilities.

PROTIP: When created, resources in the cloud can be hacked immediately. So such utilities enable vulnerabilities to be identified and remediated in cloud resources even before they are created.

Terraform uses state files to track and manage resources, and to prevent Terraform from creating resources that already exist. The enterprise edition of Terraform also uses state files to identify "drift" created by manual changes.

Some users of open-source Terraform configure a backend.tf file to specify a remote location for state files, such as an S3 bucket, with a DynamoDB table commonly used for state locking.
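As a minimal sketch of such a remote-state setup, the shell function below writes a backend.tf that points Terraform at an S3 bucket and a DynamoDB lock table. The function name `write_backend` and the bucket, table, and key values are hypothetical placeholders, not taken from this repo. Newer Terraform releases may offer other locking options, so treat the attribute set as an assumption to check against your Terraform version.

```shell
#!/bin/sh
# write_backend DIR BUCKET LOCK_TABLE REGION
# Writes DIR/backend.tf configuring an S3 remote backend.
# Every value passed in is a placeholder chosen by the caller.
write_backend() {
  cat > "$1/backend.tf" <<EOF
terraform {
  backend "s3" {
    bucket         = "$2"
    key            = "full-deploy/terraform.tfstate"
    region         = "$4"
    dynamodb_table = "$3"
    encrypt        = true
  }
}
EOF
}
```

After the file is written or changed, `terraform init` needs to be run again so that Terraform picks up the backend configuration.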

Updates to Helm charts can be set to trigger redeployment of Kubernetes services.

Even more useful is HashiCorp's TFC (Terraform Cloud) where HashiCorp provides a safe place to store versioned state files. It's free but extra features can be licensed.

Like other SaaS (Software as a Service), TFC frees you from managing the underlying multi-region/multi-AZ HA infrastructure, and provides hosting of Terraform state and lock files.

To set up TFC:

- Create workspaces

- Connect to a Git repo

- Define environment variables

- Kick-off a plan from the drop-down menu

DevOps engineers don't need a laptop if they use TFC SaaS (Software as a Service).

TFC provides a web UI, reachable from internet web browsers, to initiate runs manually and to define projects, which group workspaces that separate HCL between different teams.

The web UI standardizes permissions and workflows across teams.

- TFC is described using the acronym "TACOS" (Terraform Automation & Collaboration Software) because it enables scaling across many teams. It enables administrators to track utilization over time.

Licensed TFC Enterprise users can optionally define custom Sentinel or OPA (Open Policy Agent) rules (the latter in the Rego language) to ensure that policies-as-code are not violated. For example, ensure that tags have been defined in all HCL for accurate project billing.

Other tasks in TFC workflows include automatic generation of diagrams from Terraform or resources created in the cloud.

HashiCorp also created a SaaS HashiCorp Cloud Platform (HCP) for Vault and Consul that's similar to TFC.

To use it, first create an HCP account to obtain an HCP Organization name. Then set up HCP environment variables to connect to TFC.

Tasks and Workflow in HCP are similar to TFC.

HCP creates and uses an HVN (HashiCorp Virtual Network) to access cloud infrastructure that makes networking possible. An HVN delegates an IPv4 CIDR range that HCP uses to automatically create resources in your cloud network -- one that does not overlap with other public cloud networks (e.g. AWS VPCs) or on-premises networks.
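The non-overlap requirement can be checked before a CIDR range is chosen. The sketch below is one way to do that in POSIX sh, assuming well-formed IPv4 CIDR strings; the function names and the sample ranges are illustrative only and are not part of HCP or this repo. It is written to run as a script under `/bin/sh` rather than being pasted into zsh, which splits words differently.

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "first last" (as integers) for the addresses covered by a CIDR.
cidr_range() {
  cr_ip=${1%/*}
  cr_bits=${1#*/}
  cr_size=$(( 1 << (32 - $cr_bits) ))
  cr_start=$(( $(ip_to_int "$cr_ip") & ~($cr_size - 1) ))
  echo "$cr_start $(( $cr_start + $cr_size - 1 ))"
}

# Succeed (exit 0) when the two CIDR ranges share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")   # $1 $2 = first range, $3 $4 = second
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Illustrative values: a candidate HVN range against an existing VPC range.
if cidrs_overlap 172.25.16.0/20 172.25.20.0/24; then
  echo "overlap: choose a different HVN CIDR"
fi
```

Two ranges overlap when each one starts at or before the other ends, which is the comparison `cidrs_overlap` makes.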

Custom HCL is loaded onto HCP from github.com.

Output from runs includes the Vault service URL and credential token.

Use the HCP portal GUI to confirm HCP resources, and the AWS Management Console GUI to confirm resources in AWS.

WARNING: Avoid making changes using the cloud GUI, which creates drift from configurations defined. Keep Terraform as the single source of truth to define cloud resources.

The Enterprise/Cloud edition of Terraform performs drift detection to detect changes made outside of Terraform. But it's less risk and toil to avoid drift in the first place.

On application developers' laptops, install CLI (Command Line Interface), SSH (Secure Shell), and other utilities, including a Vault client to cache secrets. A local Jupyter server program with Docker can be installed for use in demonstrating commands exercising Vault and Consul.

CLI commands can obtain from within Kubernetes the URL and credentials to Vault and Consul.

Configure Secrets Engines such that Vault can cache secrets so applications can access secrets without overloading the Vault server.

Commands can be run to obtain Application URLs and database URLs.

Consul can be used to discover services running in Kubernetes.

Ensure there is adequate backup capability by testing procedures to restore from archives. This is also a good time to measure MTTR and practice Incident Management.

PROTIP: When resources can be recreated quickly, there is less fear of destroying Vault and other resources, resulting in fewer idle resources consuming money for no good reason.

To provide visibility into the security posture of your system, filter the logs gathered and view structured analytics displayed using Grafana, installed using auxiliary scripts.

If a SIEM (such as Splunk or Datadog) is available, view alerts generated from logs sent to them.

Populate enough fake/test users with credentials obtained from your IdP (Identity Provider) to exercise creating user accounts, configuring policies, and editing policies. This is done by coding API calls in app programs.

PROTIP: Identify users and teams in production and invite/load them in the system (via IdP) early during the implementation project, so users can begin work with less friction and minimal effort.

Create and run (perhaps overnight) test flows (coded in Jupyter or K6 JavaScript) that emulate activity from end-user clients to verify functionality, performance, and capacity. This enables monitoring over time of latency between server and end-users, which can impair user productivity.

TODO: GitHub Actions workflows are included here to have a working example of how to retrieve secrets from Vault, such as GitHub OIDC protocol.

Verify configuration on every change. This is important to really determine whether the whole system works both before and after changing/upgrading any component (versions of Kubernetes, operating system, Vault, etc.). The Enterprise versions of Terraform, Vault, and Consul provide for automation of upgrades.

Again, use of Infrastructure-as-Code enables quicker response to security changes identified over time, such as for EC2 IMDSv2.

Migration of data in and from other systems during testing, cut-over, and post-production is possible using Vault Tools and Vault API.

The Enterprise and Cloud editions provide for automation of upgrades.

The following provides more details on the manual steps summarized above.

There are several ways to create a HashiCorp Vault instance.

The approach as described in this tutorial has the following advantages:

- Solves the "Secret Zero" problem: uses HashiCorp's MFA (Multi-Factor Authentication) embedded within HCP (HashiCorp Cloud Platform) secure infrastructure to authenticate Administrators, who have elevated privileges over all other users.

- Ease of use: Use of the HCP GUI means no complicated commands to remember, especially to perform emergency "break glass" procedures to stop Vault operation in case of an intrusion.

- HCP provides separate workflows so that potential impact from loss of credentials (through phishing and other means) results in a smaller "blast radius" exposure.

There are several ways to create a HashiCorp Vault or Consul instance (cluster of nodes within Kubernetes).

The approach as described in this tutorial has the following advantages:

- Solves the "Secret Zero" problem: uses HashiCorp's MFA (Multi-Factor Authentication) embedded within HCP (HashiCorp Cloud Platform) secure infrastructure to authenticate Administrators, who have elevated privileges over all other users.

- Use of pre-defined Terraform modules which have been reviewed by several experienced professionals to contain secure defaults and mechanisms for security hardening that include:

  - RBAC settings by persona for Least-privilege permissions (separate accounts to read but not delete)

  - Verification and automated implementation of the latest TLS certificate version and Customer-provided keys

  - End-to-End encryption to protect communications, logs, and all data at rest

  - Automatic dropping of invalid headers

  - Logging enabled for audit and forwarding

  - Automatic movement of logs to a SIEM (such as Splunk) for analytics and alerting

  - Automatic purging of logs to conserve disk space usage

  - Purge protection (waiting periods) on KMS keys and Volumes

  - Enabled automatic secrets rotation, auto-repair, auto-upgrade

  - Disabled operating system swap to prevent the operating system from paging sensitive data to disk. This is especially important when using the integrated storage backend.

  - Disabled operating system core dumps, which may contain sensitive information

  - etc.

- Use of Infrastructure-as-Code enables quicker response to security changes identified over time, such as for EC2 IMDSv2.

- Ease of use: Use of the HCP GUI means no complicated commands to remember, especially to perform emergency "break glass" procedures to stop Vault operation in case of an intrusion.

- Use of "feature flags" to optionally include Kubernetes add-ons needed for production-quality use:

  - DNS

  - Verification of endpoints

  - Observability Extraction (Prometheus)

  - Analytics (dashboarding) of metrics (Grafana)

  - Scaling (Kubernetes Operator Karpenter or cluster-autoscaler) to provision Kubernetes nodes of the right size for your workloads and remove them when no longer needed

  - Troubleshooting

  - etc.

- TODO: Use of a CI/CD pipeline to version every change, automated scanning of Terraform for vulnerabilities (using TFSec and other utilities), and confirmation that policies-as-code are not violated.

- If you are using a macOS machine, install Apple's utilities, then Homebrew formulas:

      xcode-select --install
      brew install git jq awscli tfsec vault kubectl

  NOTE: HashiCorp Enterprise users instead use the Vault enterprise (vault-ent) program.

- In the Terminal window you will use to run Terraform in the next step, set the AWS account credentials used to build your Vault instance, such as:

      export AWS_ACCESS_KEY_ID=ZXYRQPONMLKJIHGFEDCBA
      export AWS_SECRET_ACCESS_KEY=abcdef12341uLY5oZCi5ILlWqyY++QpWEYnxz62w

  Below are steps to obtain credentials used to set up HCP within AWS:

      export HCP_CLIENT_ID=1234oTzq81L6DxXmQrrfkTl9lv9tYKHJ
      export HCP_CLIENT_SECRET=abcdef123mPwF7VIOuHDdthq42V0fUQBLbq-ZxadCMT5WaJW925bbXN9UJ9zBut9

  Most enterprises allocate AWS accounts dynamically for a brief time (such as HashiCorp employees using "Bootcamp"). So the above are defined in a Terminal session for one-time use instead of being stored (long term, statically) in a $HOME/.zshrc or $HOME/.bash_profile file run automatically when a Terminal window is created.
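Because these variables live only in the current Terminal session, it is easy to open a new window and run Terraform without them. The sketch below checks that they are set without printing their values. The function name `require_env` is hypothetical, and the list of variable names is simply the four shown in this step.

```shell
#!/bin/sh
# require_env NAME...
# Reports (on stderr) each named environment variable that is unset or
# empty, and returns non-zero if any are missing. Values are never printed.
require_env() {
  missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

if require_env AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY HCP_CLIENT_ID HCP_CLIENT_SECRET; then
  echo "credentials are set for this session"
else
  echo "set the missing variables before running terraform" >&2
fi
```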

- Switch to the browser window where you want a new tab added for the URL in the next step.

- Click this URL to open the HCP portal:

- Click "Sign in" or, if you haven't already, "Create an account" (for an "org" to work with) to reach the Dashboard for your organization listed at the bottom-left.

- PROTIP: To quickly reach the URL specific to your org, save it to your bookmarks in your browser.

- REMEMBER: HCP has a 7-minute interaction timeout. So many users auto-populate the browser form using 1Password, which stores and retrieves credentials locally.

- Click "Access control (IAM)" in the left menu, under your org name.

- Click "Service principals" (which act like users on behalf of a service).

- Click the blue "Create Service principal".

- Specify a Name (for example, JohnDoe-23-12-31) for a Contributor.

  PROTIP: Add a date (such as 23-12-31) to make it easier to identify when it's time to refresh credentials.

- Click "Save" to see the creation toast at the lower left.

- Click "Generate key" at the right or "Create service principal key" in the middle of the screen.

- Click the icon for the Client ID to copy it into your Clipboard.

- Switch to your Terminal to type, then paste from Clipboard a command such as:

      export HCP_CLIENT_ID=1234oTzq81L6DxXmQrrfkTl9lv9tYKHJ

- Switch back to HCP.

- Click the icon for the Client secret to copy it into your Clipboard.

- Switch to your Terminal to type, then paste from Clipboard a command such as:

      export HCP_CLIENT_SECRET=abcdef123mPwF7VIOuHDdthq42V0fUQBLbq-ZxadCMT5WaJW925bbXN9UJ9zBut9

- Obtain a copy of the repository onto your laptop:

      git clone git@github.com:stoffee/csp-k8s-hcp.git
      cd csp-k8s-hcp

- Since the main branch of this repo is under active change and thus may be unstable, copy to your Clipboard the last stable release of this repo to use at:

  https://github.com/stoffee/csp-k8s-hcp/releases

- Identify release tag (such as "v0.0.6").

- Click "Code" on the menu bar, then the green "Code" to obtain the SSH clone name:

- In a Terminal, navigate to a folder where you will be cloning the repo.

- Clone the repo and check out the release/version identified in the previous step:

      git clone git@github.com:stoffee/csp-k8s-hcp.git
      cd csp-k8s-hcp
      git checkout v0.0.6

  Alternately, you can Fork on the GUI, then clone using your own instead of the "stoffee" account.

  Notice that the repo is structured according to the HashiCorp recommended structure of folders and files at https://developer.hashicorp.com/terraform/language/modules/develop/structure

- Navigate into one of the example folders of deployments:

      cd examples
      cd full-deploy

  NOTE: The full-deploy example assumes the use of a "Development" tier of Vault instance size, which incurs charges as described at https://cloud.hashicorp.com/products/vault/pricing.

Alternately, the eks-hvn-only-deploy example only creates the HVN (HashiCorp Virtual Network). DEFINITION: An HVN delegates an IPv4 CIDR range that HCP uses to automatically create resources in a cloud region. The HCP platform uses this CIDR range to automatically create a virtual private cloud (VPC) on AWS or a virtual network (VNet) on Azure.

TODO: Example prod-eks (production-high availability) constructs (at a higher cost) features not in dev deploys:

- Larger "Standard" type of servers

- Vault clusters in each of the three Availability Zones within a single region

- RBAC with least-privilege permissions (no wildcard resource specifications)

- Encrypted communications, logging, and data at rest

- Emitting VPC, CloudWatch, and other logs to a central repository for auditing and analysis by a central SOC (Security Operations Center)

- Instance Backups

- AWS Volume Purge Protection

- Node pools have automatic repair and auto-upgrade

TODO: Example dr-eks (disaster recovery in production) repeats example prod-eks to construct (at a higher cost) fail-over recovery between two regions.

TODO: Replication for high transaction load.

- Copy sample.auto.tfvars_example to sample.auto.tfvars:

      cp sample.auto.tfvars_example sample.auto.tfvars

  NOTE: Your file sample.auto.tfvars is specified in this repo's .gitignore file so it doesn't get uploaded into GitHub.

- Use a text editor program (such as VSCode) to customize the sample.auto.tfvars file. For example:

      cluster_id           = "dev1-eks"
      deploy_hvn           = true
      hvn_id               = "dev-eks"
      hvn_region           = "us-west-2"
      vpc_region           = "us-west-2"
      deploy_vault_cluster = true
      # uncomment this if setting deploy_vault_cluster to false for an existing vault cluster
      #hcp_vault_cluster_id = "vault-mycompany-io"
      make_vault_public    = true
      deploy_eks_cluster   = true

During deployment, Terraform HCL uses the value of cluster_id (such as "dev-blazer") to construct the value of eks_cluster_name ("dev-blazer-eks") and eks_cluster_arn ("dev-blazer-vps").

CAUTION: Having a different hvn_region from vpc_region will result in expensive AWS cross-region data access fees and slower performance.
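A quick pre-flight check can catch a region mismatch before `terraform apply` is run. The sketch below reads the two values out of a tfvars file with `sed` and compares them. The function names `tfvar_value` and `regions_match` are hypothetical, and the parsing assumes simple `key = "value"` lines like those in the sample above; it is not a full HCL parser.

```shell
#!/bin/sh
# tfvar_value KEY FILE
# Prints the double-quoted value assigned to KEY in FILE (first match only).
# Commented-out lines (starting with #) are ignored.
tfvar_value() {
  sed -n "s/^[[:space:]]*${1}[[:space:]]*=[[:space:]]*\"\([^\"]*\)\".*/\1/p" "$2" | head -n 1
}

# regions_match FILE
# Succeeds only when hvn_region is set and equals vpc_region.
regions_match() {
  hvn=$(tfvar_value hvn_region "$1")
  vpc=$(tfvar_value vpc_region "$1")
  [ -n "$hvn" ] && [ "$hvn" = "$vpc" ]
}

if [ -f sample.auto.tfvars ]; then
  regions_match sample.auto.tfvars ||
    echo "CAUTION: hvn_region and vpc_region differ or are not set" >&2
fi
```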

TODO: Additional parameters include: the number of nodes in Kubernetes (3 being the default).

TODO: Several variables in your sample.auto.tfvars file override both the sample GitHub Actions workflow file and the "claims" within "JWT tokens" sent to request secrets from Vault using OIDC (OpenID Connect) standards of interaction:

- github_enterprise="mycompany"

- TODO: vault_role_oidc_1=github_oidc_1

- TODO: env='prod' segregates access to secrets between jobs within GitHub Pro/Enterprise Environments protected by rules and secrets. GitHub Environment protection rules require specific conditions to pass before a job referencing the environment can proceed. You can use environment protection rules to require a manual approval, delay a job, or restrict the environment to certain branches.

- GITHUB_ACTOR

- GITHUB_ACTION (name of action run)

- GITHUB_JOB (job ID of current job)

- GITHUB_REF (branch/tag that triggered workflow)

TODO: This repo has pre-defined sample Vault roles and scopes (permissions) used to validate claims in JWT tokens received. Changing them would require editing of files before deployment.

From https://wilsonmar.github.io/github-actions GitHub added an "Actions" tab to repos (in 2019) to perform Continuous Integration (CI) like Jenkins. GitHub Action files are stored within the repo's .github folder.

The Workflows folder contains declarative yml files which define what needs to happen at each step.

The Scripts folder contains programmatic sh (Bash shell) files which perform actions.

TODO: The sample workflow defined in this repo is set to be triggered to run when a PR is merged into GitHub (rather than run manually).

TODO: By default, within the repo's trigger-action folder is file changeme.txt

References: Sample files related to GitHub Actions in this repo are based on several sources:

- In the same Terminal window as the above step (or within a CI/CD workflow), run a static scan for security vulnerabilities in Terraform HCL:

      tfsec | sed -r "s/\x1B\[([0-9]{1,2}(;[0-9]{1,2})?)?[m|K]//g"

  NOTE: The sed command filters out most of the special characters output to display colors.

  WARNING: Do not continue until concerns raised by TFSec are analyzed and remediated.
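The `\x1B` escape and the `-r` flag in the sed filter above are GNU sed conventions and may not behave the same with the BSD sed shipped on macOS. As a hedged alternative, the sketch below builds the escape character with `printf` so the same filter works with either sed. The function name `strip_ansi` is hypothetical, and the pattern only covers color and erase-line sequences (those ending in `m` or `K`).

```shell
#!/bin/sh
# strip_ansi
# Reads text on stdin and removes ANSI sequences of the form ESC [ ... m
# and ESC [ ... K (colors and erase-line), writing the result to stdout.
strip_ansi() {
  esc=$(printf '\033')
  sed "s/${esc}\[[0-9;]*[mK]//g"
}
```

It can be placed at the end of a pipeline, for example `tfsec | strip_ansi`.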

- In the same Terminal window as the above step (or within a CI/CD workflow), run the Terraform HCL to create the environment within AWS based on specifications in sample.auto.tfvars:

      terraform init
      terraform plan
      time terraform apply -auto-approve

  PROTIP: Those who work with Terraform frequently define aliases such as tfi, tfp, tfa to reduce keystrokes and avoid typos.
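The aliases named in the PROTIP could be defined as follows. The alias names come from the text; what each one expands to is an assumption based on the three commands in this step, so adjust them to your own habits (for example, whether `tfa` should include `-auto-approve`).

```shell
# Shorthand for the most frequently typed Terraform commands.
alias tfi='terraform init'
alias tfp='terraform plan'
alias tfa='terraform apply'
```

Unlike the credentials discussed earlier, these lines contain no secrets, so they can be kept in $HOME/.zshrc or $HOME/.bash_profile to be available in every new Terminal window.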

If successful, you should see metadata output about the instance just created:

    cluster_security_group_arn = "arn:aws:ec2:us-west-2:123456789123:security-group/sg-081e335dd11b10860"
    cluster_security_group_id = "sg-081e335dd11b10860"
    eks_cluster_arn = "arn:aws:eks:us-west-2:123456789123:cluster/dev-blazer-eks"
    eks_cluster_certificate_authority_data = "...=="
    eks_cluster_endpoint = "https://1A2B3C4D5E6F4B5F9C8FC755105FAA00.gr7.us-west-2.eks.amazonaws.com"
    eks_cluster_name = "dev-blazer-eks"
    eks_cluster_oidc_issuer_url = "https://oidc.eks.us-west-2.amazonaws.com/id/1A2B3C4D5E6F4B5F9C8FC755105FAA00"
    eks_cluster_platform_version = "eks.15"
    eks_cluster_status = "ACTIVE"
    kubeconfig_filename = <<EOT
    /Users/wilsonmar/githubs/csp-k8s-hcp/examples/full-deploy/apiVersion: v1
    kind: ConfigMap
    metadata:
      name: aws-auth
      namespace: kube-system
    data:
      mapRoles: |
        - rolearn: arn:aws:iam::123456789123:role/node_group_01-eks-node-group-12340214203844029700000001
          username: system:node:{{EC2PrivateDNSName}}
          groups:
            - system:bootstrappers
            - system:nodes
    EOT
    node_security_group_arn = "arn:aws:ec2:us-west-2:123456789123:security-group/sg-abcdef123456789abc"
    node_security_group_id = "sg-abcdef123456789abc"
    vault_private_url = "https://hcp-vault-private-vault-9577a2dc.993dfd61.z1.hashicorp.cloud:8200"
    vault_public_url = "https://hcp-vault-public-vault-9577a2dc.993dfd61.z1.hashicorp.cloud:8200"
    vault_root_token = <sensitive>

This sample time output shows 22 minutes 11 seconds total clock time:

    terraform apply -auto-approve 7.71s user 3.53s system 0% cpu 22:11.66 total

One helpful design feature of Terraform HCL is that it's "declarative". So terraform apply can be run again. A sample response if no changes need to be made:

    No changes. Your infrastructure matches the configuration.

    Terraform has compared your real infrastructure against your configuration
    and found no differences, so no changes are needed.
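In a script or CI/CD workflow, the same "no changes" condition can be detected without reading the text output, because `terraform plan -detailed-exitcode` reports it through its exit status: 0 for no changes, 1 for an error, and 2 when changes are pending. The helper below is a small sketch that turns that status into a message; the function name `describe_plan_status` is hypothetical.

```shell
#!/bin/sh
# describe_plan_status STATUS
# Translates the exit status of `terraform plan -detailed-exitcode`
# into a short human-readable message.
describe_plan_status() {
  case "$1" in
    0) echo "no changes" ;;
    1) echo "error" ;;
    2) echo "changes pending" ;;
    *) echo "unexpected status $1" ;;
  esac
}

# Usage (requires terraform and an initialized working directory):
#   terraform plan -detailed-exitcode >/dev/null
#   describe_plan_status $?
```

A status of 2 on a configuration that was just applied is one indication that something has changed outside of Terraform.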

NOTE: Terraform verifies the status of resources in the cloud, but does not verify the correctness of API calls to each service.

Switch back to the HCP screen to confirm what has been built:

- In an internet browser, go to the HCP portal Dashboard at https://portal.cloud.hashicorp.com

- Click the blue "View Vault".

- Click the Vault ID text -- the text above "Running" for the Overview page for your Vault instance managed by HCP. The Cluster Details page should appear, such as this:

  Notice that the instance created is "Extra Small", with no HA (High Availability) of clusters duplicated across several Availability Zones.

  CAUTION: You should not rely on a Development instance for production use. Data in Development instances is forever lost when the server is stopped.

- PROTIP: For quicker access in the future, save the URL in your browser bookmarks.

- Use the AWS Account, User Name, and Password associated with the AWS variables mentioned above to view different services in the AWS Management Console GUI and AWS Cost Explorer:

  - Elastic Kubernetes Service Node Groups (generated), EKS cluster

  - VPC (Virtual Private Cloud) Peering connections, NAT Gateways, Network interfaces, Subnets, Internet Gateways, Route Tables, Network ACLs, etc.

  - EC2 with Elastic IPs, Instances, Node Groups, Volumes, Launch Templates, Security Groups

  - EBS (Elastic Block Store) volumes

  - KMS (Key Management Service) Aliases, Customer Managed Keys

  - CloudWatch Log groups

  - Amazon GuardDuty

NOTE: Vault in Development mode operates an in-memory database and so does not require an external database.

CAUTION: Please resist changing resources using the AWS Management Console GUI, which breaks version controls and renders obsolete the state files created when the resources were provisioned.

- Obtain a list of resources created:

      terraform state list

- Switch to the browser window where you want a new tab added for the Vault UI.

- Switch to your Terminal to open a browser window presenting the HCP Vault cluster URL obtained (on a Mac) using this CLI command:

      open $(terraform output --raw vault_public_url)

  Alternately, if you're using a client within AWS:

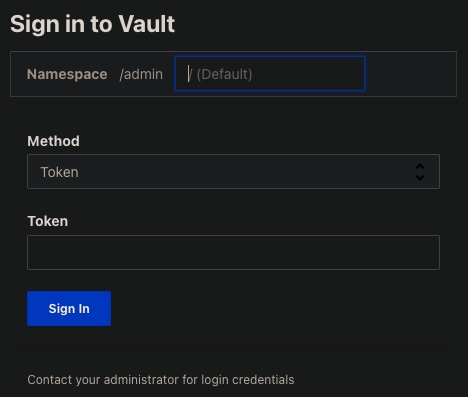

      open $(terraform output --raw vault_private_url)

  Either way, you should see this form:

REMEMBER: The "administrator for login credentials" are automatically provided an /admin namespace within Vault.

NOTE: The administrator informs users of Vault about what authorization methods are available for each user, and how to sign in using each authentication method.

- PROTIP: Optionally, save the URL as a Bookmark in your browser for quicker access in the future.

- Copy the admin Token into your Clipboard for "Sign in to Vault" (on a Mac):

      terraform output --raw vault_root_token | pbcopy

  That is equivalent to clicking Generate token, then Copy (to Clipboard) under "New admin token" on your HCP Vault Overview page.

  CAUTION: Sharing a sign-in token with others is a violation of corporate policy. Create a separate account and credentials for each user.

- Click Token selection under the "Method" heading within the "Sign in" form.

  NOTE: Generation of a temporary token is one of many Authentication Methods supported by Vault.

  REMEMBER: The token is only good for (has a Time to Live of) 6 hours.

- Click in the Token field within the "Sign in" form, then paste the token.

- Click the blue "Sign in" (as Administrator).

If Sign in is successful, you should see this Vault menu:

The first menu item, "Secrets" is presented by default upon menu entry:

TODO: Automation in this repo has already mounted/enabled several Vault Secrets Engines to generate different types of secrets:

-

kv/ (Key/Value) stores secret values accessed by a key. https://developer.hashicorp.com/vault/tutorials/getting-started/getting-started-first-secret

-

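cubbyhole/ stores secrets in a private area scoped to a single token, so the secrets disappear when that token expires. (Response "wrapping" places a secret in the cubbyhole of a single-use token so that it can be accessed only one time. Wrapping is commonly used with "AppRole", which creates the equivalent of a username/password for use by computer services without a GUI.)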

-

-

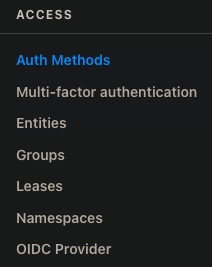

Click "Access" on the menu to manage mechanisms that Vault provides other systems to control access:

Tutorials and Documentation on each menu item:

- Auth Methods perform authentication, then assign identity and a set of policies to each user.

- Multi-factor authentication

- Entities define aliases mapped to a common property among different accounts

- Groups enable several client users of the same kind to be managed together

- Leases limit the lifetime, expressed as a TTL (Time To Live), of access granted to resources

- Namespaces (a licensed Enterprise "multi-tenancy" feature) separate access to data among several tenants (unrelated groups of users)

- OIDC Provider [doc]

ISO 29115 defines "Identity" as a set of attributes related to an entity (human or machine). A person may have multiple identities, depending on the context (website accessed).

- Policies -- aka ACL (Access Control Lists) -- provide a declarative way to grant or forbid access to certain paths and operations in Vault.

Warning: This application is publicly accessible. Make sure to destroy the Kubernetes resources associated with the application when done.

-

Dynamically obtain credentials to the Vault cluster managed by HCP by running these commands on your Terminal:

export VAULT_ADDR=$(terraform output --raw vault_public_url)
export VAULT_TOKEN=$(terraform output --raw vault_root_token)
export VAULT_NAMESPACE=admin

An example of such values is:

The above variables are sought by Vault to authenticate client programs or custom applications. See HashiCorp's Vault tutorials.
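Because the Vault CLI reads these variables from the environment, a misspelled or empty variable tends to surface only later as a confusing authentication error. The following is a minimal sketch of a pre-flight check, assuming a hypothetical helper named check_vault_env (it is not part of this repo or of the Vault CLI):

```shell
# check_vault_env is a hypothetical helper for illustration only.
# It reports every Vault client variable that is unset or empty
# and returns non-zero if any is missing.
check_vault_env() {
  local missing=0 name value
  for name in VAULT_ADDR VAULT_TOKEN VAULT_NAMESPACE; do
    # Look up the value of the variable whose name is held in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

It could be used as a guard, for example: check_vault_env && vault secrets list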

-

List secrets engines enabled within Vault:

vault secrets list

These commands are used to verify configuration for GitHub Actions.

-

VIDEO: Obtain a list of the Authentication Methods set up, to ensure that the "JWT" method needed for GitHub is available:

vault auth list

The response includes:

Path      Type        Accessor                Description
----      ----        --------                -----------
jwt/      jwt         auth_jwt_12345678       JWT Backend for GitHub Actions
token/    ns_token    auth_ns_token_345678    token based credentials

The JWT (JSON Web Token) that GitHub presents exposes parameters (known as claims) against which a Vault role can be bound. When GitHub presents a JWT containing the necessary combination of claims, Vault returns an auth token for the given Vault role.

-

View settings:

vault policy read github-actions-oidc

-

TODO: Terraform in this repo has enabled the JWT (JSON Web Token) auth method so that:

vault read auth/jwt/role/github-actions-role
vault auth list

The response includes:

Key                Value
---                -----
bound_audiences    [https://github.com/johndoe]
bound_subject      repo:johndoe/repo1:ref:refs/heads/main
bound_issuer       https://token.actions.githubusercontent.com/enterpriseSlug
token_max_ttl      1m40s
...

The above is confirmed using this:

vault write auth/jwt/config \

The above was configured using this:

resource "vault_jwt_auth_backend" "github_oidc" {
  description        = "Accept OIDC authentication from GitHub Action workflows"
  path               = "gha"
  bound_issuer       = "https://token.actions.githubusercontent.com/enterprise_name"
  oidc_discovery_url = "https://token.actions.githubusercontent.com/enterprise_name"
}

CAUTION: The above grants the entirety of public GitHub the possibility of authenticating to your Vault server. If bound claims are accidentally misconfigured, you could be exposing your Vault server to other users on github.com. So instead use an API-only

NOTE: This is NOT the GitHub login Authentication Method, which enables sign-in to Vault using a PAT (Personal Access Token) generated on GitHub.com.

Access to assets within GitHub.com is granted based on Personal Access Tokens (PATs) generated within GitHub.com. Such credentials are static (do not expire), so they can be stolen and reused by an adversary.

GitHub.com provides an "Actions Secrets" GUI to store encrypted access tokens to access cloud assets outside GitHub. Variables to obtain secrets:

- VAULT_ADDR

- VAULT_NAMESPACE (when using HCP)

- VAULT_ROLE

- VAULT_SECRET_KEY

- VAULT_SECRET_PATH

PROBLEM: Such credentials are stored repeatedly, once in each GitHub repository. Rotation of the credentials would require going to each GitHub repo. Or if secrets are set at the environment level, anyone with access to the environment would have access to the keys (secrets are not segmented).

In Oct. 2021 GitHub announced its OIDC provider. OIDC stands for "OpenID Connect", a spec defined by the OpenID Foundation on February 26, 2014. OIDC solves the "Delegated Authorization Problem" of how to "provide a website access to my data without giving away my password".

Technically, OIDC standardizes authentication (AuthN) on top of OAuth 2.0, which handles delegated authorization (AuthZ). OIDC adds the use of an ID token (in JWT format) along with a UserInfo endpoint for getting more user information. It works like a badge at concerts.

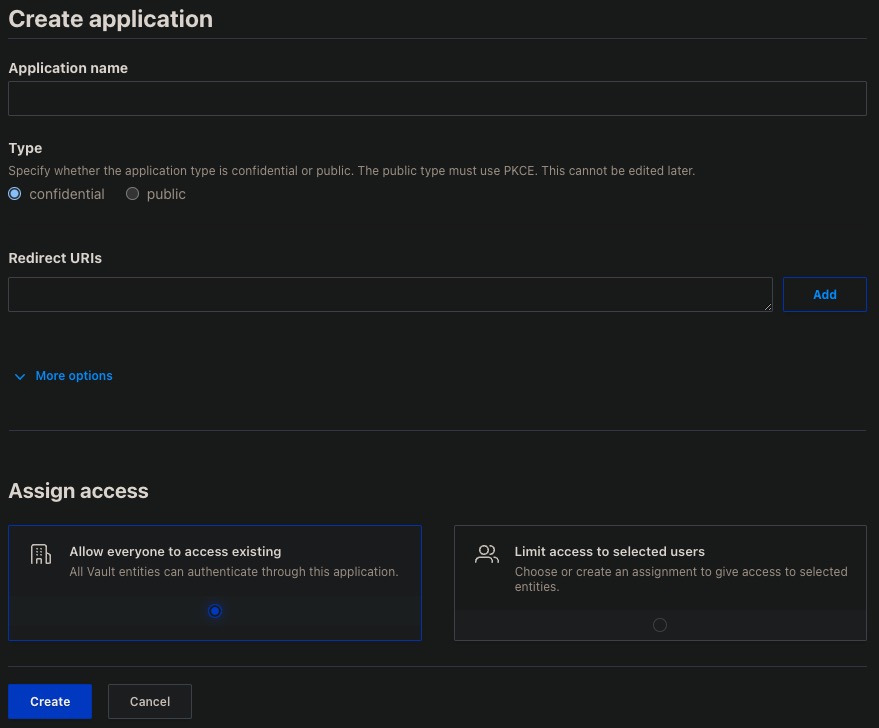

Vault creates OIDC credentials by acting as an OIDC Identity Provider using the OIDC authorization (AuthZ) code flow (grant type).

What is passed through the network can be decoded using https://jsonwebtoken.io. A part of the transmission includes a cryptographic signature used to independently verify whether contents have been altered. The signature is created using an IETF JSON standard. OIDC provides a standard alternative to adaptations such as Facebook's "Signed Request" using a proprietary signature format.
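The claims can also be inspected locally, without pasting a token into a website. The following is a minimal sketch, assuming a hypothetical helper named decode_jwt_payload. It only decodes the middle (payload) segment of the token. It does NOT verify the signature, so it is suitable for debugging only:

```shell
# decode_jwt_payload is a hypothetical helper for illustration only.
# A JWT has three dot-separated segments: header.payload.signature.
# Each segment is base64url-encoded with the '=' padding stripped.
decode_jwt_payload() {
  local payload
  # Take the second segment (the claims).
  payload=$(printf '%s' "$1" | cut -d '.' -f 2)
  # Convert the base64url alphabet back to standard base64.
  payload=$(printf '%s' "$payload" | tr '_-' '/+')
  # Restore the padding so the length is a multiple of 4.
  while [ $(( ${#payload} % 4 )) -ne 0 ]; do
    payload="${payload}="
  done
  printf '%s' "$payload" | base64 --decode
}
```

For example, decode_jwt_payload "$TOKEN" | jq . would print claims such as sub and aud, which can then be compared with the bound_subject and bound_audiences values shown earlier. Here TOKEN is an assumed variable holding a JWT.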

TODO: Automation in this repo has already configured Vault to dynamically generate access credentials with a short lease_duration which becomes useless after that period of time. Such secrets are generated based on parameters defined in the tfvars file used to automatically fill out the GUI ACCESS: OIDC Provider: Create your first app form:

TODO: Some variable names come from the configuration file for the JWT (JSON Web Token) protocol, displayed using command:

vault read auth/jwt/config

The response includes:

Key             Value
---             -----
bound_issuer    https://token.actions.githubusercontent.com

See https://wilsonmar.github.io/github-actions about additional utilities (such as hadolint running .hadolint.yml).

Within a GitHub Actions .yml file, a secret is requested from Vault VIDEO: like this during debugging, NOT in PRODUCTION.

-

See tutorials about application programs interacting with Vault:

-

Set the context within local file $HOME/.kube/config so local awscli and kubectl commands know the AWS ARN to access:

aws eks --region us-west-2 update-kubeconfig \
    --name $(terraform output --raw eks_cluster_name)

-

View the kubeconfig details, certificate, and secret token used to authenticate to the Kubernetes cluster:

kubectl config view

-

List the contexts defined in the kubeconfig file (the current context is marked with an asterisk):

kubectl config get-contexts

-

Obtain the contents of a kubeconfig file into your Clipboard: ???

terraform output --raw kubeconfig_filename | pbcopy

-

Paste from Clipboard and remove the file path to yield:

apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - rolearn: arn:aws:iam::123456789123:role/node_group_01-eks-node-group-12340214203844029700000001
      username: system:node:{{EC2PrivateDNSName}}
      groups:
        - system:bootstrappers
        - system:nodes

-

TODO: Set the KUBECONFIG environment variable ???

PROTIP: Configuring policies is at the core of what Administrators do to enhance security.

https://developer.hashicorp.com/vault/tutorials/policies/policies

Limit risk of lateral movement by hackers to do damage if elevated Administrator credentials are compromised.

-

Create an account for the Administrator to use when doing work as a developer or other persona.

TODO:

-

TODO: "Userpass" static passwords?

https://developer.hashicorp.com/vault/docs/concepts/username-templating

On developer machines, the GitHub auth method is easiest to use.

For servers the AppRole method is the recommended choice. https://developer.hashicorp.com/vault/docs/auth/approle

https://developer.hashicorp.com/vault/docs/concepts/response-wrapping

-

Click Tools in the Vault menu to encrypt and decrypt text pasted in from, then copied back to, your Clipboard.

-

Grab the kube auth info and stick it in ENVVARS:

export TOKEN_REVIEW_JWT=$(kubectl get secret \
    $(kubectl get serviceaccount vault -o jsonpath='{.secrets[0].name}') \
    -o jsonpath='{ .data.token }' | base64 --decode)
echo $TOKEN_REVIEW_JWT

export KUBE_CA_CERT=$(kubectl get secret \
    $(kubectl get serviceaccount vault -o jsonpath='{.secrets[0].name}') \
    -o jsonpath='{ .data.ca\.crt }' | base64 --decode)
echo $KUBE_CA_CERT

export KUBE_HOST=$(kubectl config view --raw --minify --flatten \
    -o jsonpath='{.clusters[].cluster.server}')
echo $KUBE_HOST

-

Enable the auth method and write the Kubernetes auth info into Vault:

vault auth enable kubernetes

vault write auth/kubernetes/config \
    token_reviewer_jwt="$TOKEN_REVIEW_JWT" \
    kubernetes_host="$KUBE_HOST" \
    kubernetes_ca_cert="$KUBE_CA_CERT"

-

Deploy Postgres:

kubectl apply -f files/postgres.yaml

-

Check that Postgres is running:

kubectl get pods

-

Grab the Postgres IP and then configure the Vault DB secrets engine:

export POSTGRES_IP=$(kubectl get service -o jsonpath='{.status.loadBalancer.ingress[0].hostname}' postgres)
echo $POSTGRES_IP

-

Enable DB secrets:

vault secrets enable database

-

Write the Vault configuration for the postgresDB deployed earlier:

vault write database/config/products \
    plugin_name=postgresql-database-plugin \
    allowed_roles="*" \
    connection_url="postgresql://{{username}}:{{password}}@${POSTGRES_IP}:5432/products?sslmode=disable" \
    username="postgres" \
    password="password"

vault write database/roles/product \
    db_name=products \
    creation_statements="CREATE ROLE \"{{name}}\" WITH LOGIN PASSWORD '{{password}}' VALID UNTIL '{{expiration}}'; \
        GRANT SELECT ON ALL TABLES IN SCHEMA public TO \"{{name}}\";" \
    revocation_statements="ALTER ROLE \"{{name}}\" NOLOGIN;" \
    default_ttl="1h" \
    max_ttl="24h"

-

Ensure we can create credentials on the postgresDB with Vault:

vault read database/creds/product

-

Create policy in Vault:

vault policy write product files/product.hcl

vault write auth/kubernetes/role/product \
    bound_service_account_names=product \
    bound_service_account_namespaces=default \
    policies=product \
    ttl=1h

-

Deploy the product app:

kubectl apply -f files/product.yaml

-

Check the product app is running before moving on:

kubectl get pods

-

Forward the product service port to your local machine, running the command as a background job:

kubectl port-forward service/product 9090 &

-

Test the app retrieves coffee info:

curl -s localhost:9090/coffees | jq .
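Because the port-forward runs as a background job, the first curl can fire before the tunnel is ready. The following is a minimal sketch of a retry wrapper, assuming a hypothetical helper named retry_until (not part of this repo):

```shell
# retry_until is a hypothetical helper for illustration only.
# Usage: retry_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it exits 0 or ATTEMPTS is exhausted.
retry_until() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    # Stop as soon as the command succeeds.
    "$@" && return 0
    # Sleep between attempts, but not after the last one.
    [ "$i" -lt "$attempts" ] && sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

For example, retry_until 10 2 curl -sf -o /dev/null localhost:9090/coffees waits up to roughly 20 seconds for the endpoint to answer before the jq command above is run.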

PROTIP: Because this repo enables a new Vault cluster to be easily created in HCP, there is less hesitancy about destroying them (to save money and avoid confusion).

-

CAUTION: Save secrets data stored in your Vault instance before deleting. Security-minded enterprises transfer backup files to folders controlled by a different AWS account so that backups can't be deleted by the same account which created them.

-

PROTIP: Well ahead of this action, notify all users and obtain their acknowledgments.

-

Sign in to the HCP Portal GUI as an Administrator, at the Overview page of your Vault instance in HCP.

-

PROTIP: Click API Lock to stop all users from updating data in the Vault instance.

NOTE: There is also a "break glass" procedure that seals the Vault server. Sealing discards the in-memory key for unlocking data, which blocks responses to all requests (with the exception of status checks and unsealing).

-

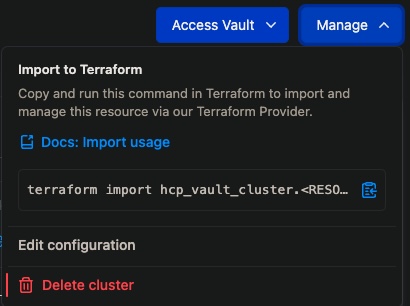

If you ran Terraform to create the cluster, there is no need to click Manage at the upper right for this menu:

If you used Terraform to create the cluster, DO NOT click the GUI red Delete cluster square nor type "DELETE" to confirm.

-

In your Mac Terminal, set credentials for the same AWS account you used to create the cluster.

-

Navigate to the folder that held the sample.auto.tfvars file when Terraform was run.

-

There should be files terraform.tfstate and terraform.tfstate.backup

ls terraform.*

-

Run:

time terraform destroy -auto-approve

Successful completion would be a response such as:

module.hcp-eks.module.vpc.aws_vpc.this[0]: Destruction complete after 0s
Destroy complete! Resources: 58 destroyed.
terraform destroy -auto-approve 9.06s user 3.65s system 0% cpu 21:20.24 total

Time to complete the command has been typically about 20-40 minutes.
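Since terraform destroy relies on the state file to know what to delete, the check for state files can be scripted as a guard in front of the destroy command. The following is a minimal sketch, assuming a hypothetical helper named has_terraform_state (not part of this repo) and local state kept in the default terraform.tfstate file:

```shell
# has_terraform_state is a hypothetical helper for illustration only.
# It succeeds only when a non-empty terraform.tfstate file exists in
# the given folder (default: the current folder).
has_terraform_state() {
  local dir="${1:-.}"
  # -s is true when the file exists and has a size greater than zero.
  [ -s "$dir/terraform.tfstate" ]
}
```

For example, has_terraform_state . && time terraform destroy -auto-approve runs the destroy only from a folder that actually holds state.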

-

Remove the files terraform.tfstate and terraform.tfstate.backup

rm terraform.*

-

PROTIP: Send another notification to all users and obtain their acknowledgments.

-

If you saved the HCP Vault cluster URL to your browser bookmarks, remove it.

VIDEO: "HCP Vault: A Quickstart Guide" (on a previous release with older GUI)

Automation and defaults in this repo minimize the manual effort otherwise needed to create enterprise-worthy secure production environments.

Use pre-defined Terraform modules which have been reviewed by several experienced professionals to contain secure defaults and mechanisms for security hardening that include:

-

RBAC settings by persona for Least-privilege permissions (separate accounts to read but not delete)

-

Verification and automated implementation of the latest TLS certificate version and Customer-provided keys

-

End-to-End encryption to protect communications, logs, and all data at rest

-

Automatic dropping of invalid headers

-

Logging enabled for audit and forwarding

-

Automatic movement of logs to a SIEM (such as Splunk) for analytics and alerting

-

Automatic purging of logs to conserve disk space usage

-

Purge protection (waiting periods) on KMS keys and Volumes

-

Enabled automatic secrets rotation, auto-repair, auto-upgrade

-

Disabled operating system swap to prevent the operating system from paging sensitive data to disk. This is especially important when using the integrated storage backend.

-

Disable operating system core dumps which may contain sensitive information

-

etc.

Let's start by looking at the structure of the repo's folders and files.

At the root of the repo, a folder is created for each cloud (AWS, Azure, GCP).

Within each cloud folder is a folder for each environment: "dev" (development) and "prd" (for production) use.

Within each of the environment folders is an examples folder for each alternative configuration you want created.

As is the industry custom for Terraform, a sample file is provided so you can rename sample.auto.tfvars to customize values to be used for your desired set of resources.

Many enterprises create "Doormat" type systems to create new credentials that are good only for a short time for those who have been validated by an IdP (Identity Provider) system such as Okta.

Credentials with enough permissions are needed to create resources in each cloud. For AWS, that means creating for each account AWS credentials set as environment variables or in a credentials file for AWS to read.

This repository, at https://github.com/stoffee/csp-k8s-hcp provides the custom Terraform HCL (HashiCorp Configuration Language) files (with a .tf suffix) to

Additionally, templates and the resources they reference are also stored in the registry.

Since this presentation is focused on enterprise production environments, we assume that there is a segregation of responsibilities among different central work groups common within large organizations.

The number of and naming of custom code repositories holding Terraform often reflects organizational divisions (see "Conway's Law").

In large organizations, the repositories containing Terraform typically include:

- A tools-install repo to establish utility programs on the laptop of a DevSecOps administrator, to minimize frustration

- A pre-requisites repo to establish core cloud services and ensure they are working (one repo per cloud vendor)

- A different repo for each product (HashiCorp Vault, Consul, etc.) because different teams create them

- Additional add-on functionality and systems

WARNING: The drawback of using multiple repos to install services under the same account is that you need to remember which repo (state file) created which resources.

TODO: github.com different strategies for managing state files ..

Terraform Cloud enables Private objects in the HashiCorp Registry.

Additionally, there are several licensed editions (with free trial periods):

Cloud "Team" plan adds:

* Role-based access and Team Management

Cloud "Team & Governance" plan adds:

* Configuration designer

* Cross-organization registry sharing

* Cost estimation

* Policy as code (Sentinel or OPA)

* Run tasks: Advisory enforcement

* Policy enforcement

Cloud "Business" plan adds:

* SSO

* Team Management

* Drift detection

* Audit logging

* ServiceNow integration

Enterprise level plan adds:

* Runtime metrics (Prometheus)

* Air gap network deployment

* Application-level logging

* Log forwarding

https://app.terraform.io/signup

For example, AWS HashiCorp established its "terraform-aws-modules/" TODO:

Each cloud vendor has its own way to provide cloud services. In AWS, these are typical Terraform modules:

| module name | AWS | terraform-aws-modules/ | note |
|---|---|---|---|
| database | _rds | rds-aurora/aws | aurora-postgresql |
| iam | _iam | Identity and Access Management | |
| kms | _kms | Key Management | |
| load-balancing | _ingress | - | |
| networking | networks | - | |
| s3 | s3 | Objects (files) in Buckets | |
| secrets-manager | _manager | - | |

Edit options in sample.auto.tfvars to configure values for variables used in Terraform runs.

"Feature flags" -- variables with true or false values defining whether a Kubernetes add-on or some feature is optionally included or excluded in a particular install, such as:

- VPC, DNS, and other networking "prerequisite" resources

- Verification of endpoints

- Observability Extraction (Prometheus)

- Analytics (dashboarding) of metrics (Grafana)

- Scaling (Kubernetes Operator Karpenter or cluster-autoscaler) to provision Kubernetes nodes of the right size for

HashiCorp Case Study: Automating Multi-Cloud, Multi-Region Vault for Teams and Landing Zones Feb 03, 2023 by Bryan Krausen and Josh Poisson.