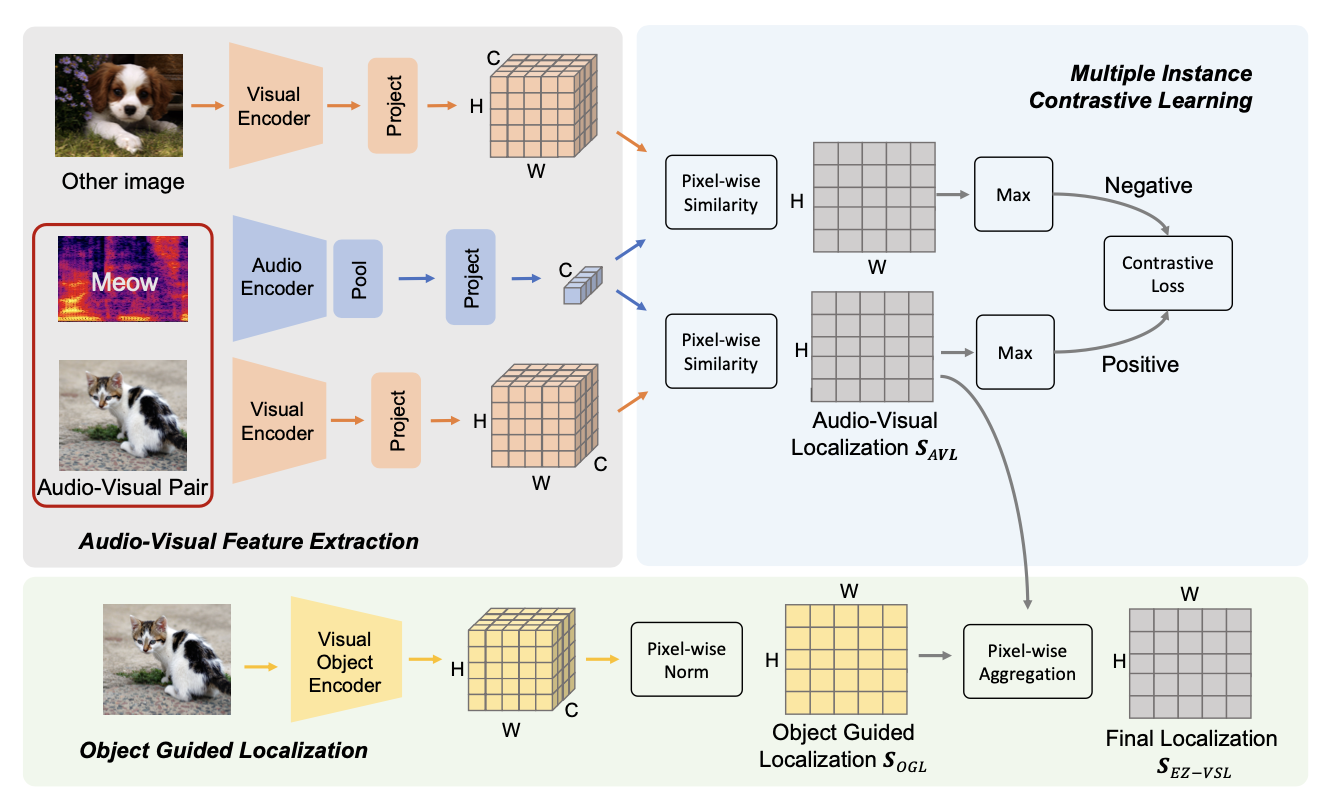

Official codebase for EZ-VSL. EZ-VSL is a simple yet effective approach for Visual Sound Localization. Please check out the paper for full details.

Localizing Visual Sounds the Easy Way

Shentong Mo, Pedro Morgado

arXiv 2022.

To set up the environment, run:

pip install -r requirements.txt

Flickr-SoundNet data can be downloaded from Learning to localize sound sources.

VGG-Sound Source (VGG-SS) data can be downloaded from Localizing Visual Sounds the Hard Way.

The VGG-SS heard and unheard test splits can be downloaded from Unheard and Heard.

We release several models pre-trained with EZ-VSL with the hope that other researchers might also benefit from them.

| Method | Train Set | Test Set | CIoU | AUC | Model | Train script | Test script |
|---|---|---|---|---|---|---|---|
| EZ-VSL | Flickr 10k | Flickr SoundNet | 81.93 | 62.58 | model | script | script |
| EZ-VSL | Flickr 144k | Flickr SoundNet | 83.13 | 63.06 | model | script | script |
| EZ-VSL | VGG-Sound 144k | Flickr SoundNet | 83.94 | 63.60 | model | script | script |
| EZ-VSL | VGG-Sound 10k | VGG-SS | 37.18 | 38.75 | model | script | script |
| EZ-VSL | VGG-Sound 144k | VGG-SS | 38.85 | 39.54 | model | script | script |
| EZ-VSL | VGG-Sound Full | VGG-SS | 39.34 | 39.78 | model | script | script |
| EZ-VSL | Heard 110 | Heard 110 | 37.25 | 38.97 | model | script | script |
| EZ-VSL | Heard 110 | Unheard 110 | 39.57 | 39.60 | model | script | script |
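This README does not define the CIoU and AUC columns. As a rough sketch, and assuming the convention commonly used in the sound-localization literature, CIoU can be read as the percentage of test samples whose predicted map reaches an IoU of at least 0.5 with the ground truth, and AUC as the area under the success-rate curve as that threshold sweeps from 0 to 1. The function names and the per-sample IoU values below are hypothetical and are not taken from this repository.

```python
def success_rate(ious, threshold):
    """Fraction of samples whose IoU is at least `threshold`."""
    return sum(iou >= threshold for iou in ious) / len(ious)


def ciou(ious, threshold=0.5):
    """Success rate at a fixed IoU threshold, as a percentage (assumed definition)."""
    return 100.0 * success_rate(ious, threshold)


def auc(ious, steps=20):
    """Area under the success-rate curve over thresholds in [0, 1], as a percentage.

    Uses the trapezoidal rule on `steps + 1` evenly spaced thresholds.
    """
    thresholds = [i / steps for i in range(steps + 1)]
    rates = [success_rate(ious, t) for t in thresholds]
    area = 0.0
    for k in range(steps):
        width = thresholds[k + 1] - thresholds[k]
        area += width * (rates[k] + rates[k + 1]) / 2.0
    return 100.0 * area


# Made-up per-sample IoU values, for illustration only.
example_ious = [0.9, 0.6, 0.4, 0.1]
print("CIoU:", ciou(example_ious))
print("AUC:", auc(example_ious))
```

Both helpers take a plain list of per-sample IoU scores, so they can be applied to any evaluation that produces one IoU per test image.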

To train an EZ-VSL model, run:

python train.py --multiprocessing_distributed \
    --train_data_path /path/to/Flickr-all/ \
    --test_data_path /path/to/Flickr-SoundNet/ \
    --test_gt_path /path/to/Flickr-SoundNet/Annotations/ \
    --experiment_name flickr_10k \
    --trainset 'flickr_10k' \
    --testset 'flickr' \
    --epochs 100 \
    --batch_size 128 \
    --init_lr 0.0001

For testing and visualization, run:

python test.py --test_data_path /path/to/Flickr-SoundNet/ \
    --test_gt_path /path/to/Flickr-SoundNet/Annotations/ \
    --model_dir checkpoints \
    --experiment_name flickr_10k \
    --save_visualizations \
    --testset 'flickr' \
    --alpha 0.4
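The `--alpha` flag is not described in this README. One plausible reading, stated here as an assumption rather than the repository's confirmed behavior, is that it weights the object-guided (OGL) map when that map is added to the audio-visual (AVL) map to produce the final EZ-VSL map. A minimal pure-Python sketch of that kind of weighted fusion follows; `normalize` and `fuse` are hypothetical helpers and the 2x2 maps are toy values.

```python
def normalize(heatmap):
    """Min-max scale a 2-D list of floats to the range [0, 1]."""
    values = [v for row in heatmap for v in row]
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # A constant map carries no localization signal; return all zeros.
        return [[0.0 for _ in row] for row in heatmap]
    return [[(v - lo) / span for v in row] for row in heatmap]


def fuse(avl_map, ogl_map, alpha=0.4):
    """Combine two maps as normalize(AVL + alpha * OGL) (assumed fusion rule)."""
    avl = normalize(avl_map)
    ogl = normalize(ogl_map)
    combined = [
        [a + alpha * o for a, o in zip(avl_row, ogl_row)]
        for avl_row, ogl_row in zip(avl, ogl)
    ]
    return normalize(combined)


# Toy maps, for illustration only.
avl = [[0.1, 0.8], [0.3, 0.2]]
ogl = [[0.0, 0.5], [1.0, 0.1]]
fused = fuse(avl, ogl, alpha=0.4)
print(fused)
```

Under this reading, `alpha=0` reduces the result to the normalized AVL map, and larger values give the object prior more influence.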

The training script supports the following training sets: `flickr`, `flickr_10k`, `flickr_144k`, `vggss`, `vggss_10k`, `vggss_144k` and `vggss_heard`.

For evaluation, it supports the following test sets: `flickr`, `vggss`, `vggss_heard` and `vggss_unheard`.
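Because only the dataset names listed above are documented, a thin wrapper can reject a typo before a long job is launched. The sketch below uses `argparse` with `choices`; the parser is a hypothetical wrapper written for illustration and is not the parser defined in `train.py` or `test.py`.

```python
import argparse

# Dataset names copied from the lists in this README.
TRAIN_SETS = ["flickr", "flickr_10k", "flickr_144k",
              "vggss", "vggss_10k", "vggss_144k", "vggss_heard"]
TEST_SETS = ["flickr", "vggss", "vggss_heard", "vggss_unheard"]


def build_parser():
    """Build a parser that only accepts the documented dataset names."""
    parser = argparse.ArgumentParser(description="Validate EZ-VSL dataset names")
    parser.add_argument("--trainset", choices=TRAIN_SETS, default="flickr_10k")
    parser.add_argument("--testset", choices=TEST_SETS, default="flickr")
    return parser


# Parse an explicit argument list so the example does not depend on sys.argv.
args = build_parser().parse_args(["--trainset", "vggss_144k", "--testset", "vggss"])
print(args.trainset, args.testset)
```

An unsupported name, such as passing `flickr_10k` as a test set, makes `argparse` print a usage error and exit.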

The test.py script saves the predicted localization maps for all test images when the flag --save_visualizations is provided.

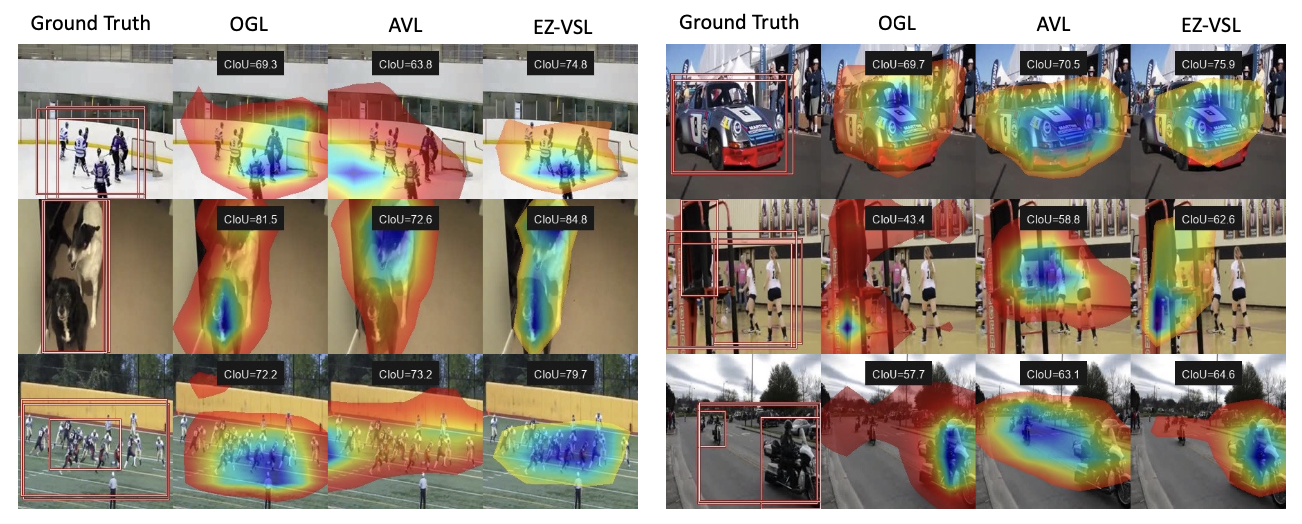

All visualizations for OGL, AVL and EZ-VSL localization maps are saved under {model_dir}/{experiment_name}/viz/.

Here's some examples.
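The output location follows the `{model_dir}/{experiment_name}/viz/` pattern described above. A small sketch that resolves this directory and lists whatever files were saved there is shown below; `viz_dir` and `list_visualizations` are hypothetical helper names, and since this README does not specify file names or extensions, the listing is left unfiltered.

```python
from pathlib import Path


def viz_dir(model_dir, experiment_name):
    """Return the visualization directory for a given experiment."""
    return Path(model_dir) / experiment_name / "viz"


def list_visualizations(model_dir, experiment_name):
    """Return the sorted files in the viz directory, or [] if it does not exist."""
    directory = viz_dir(model_dir, experiment_name)
    if not directory.is_dir():
        return []
    return sorted(p for p in directory.iterdir() if p.is_file())


# Values taken from the test command in this README.
print(viz_dir("checkpoints", "flickr_10k"))
for path in list_visualizations("checkpoints", "flickr_10k"):
    print(path.name)
```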

If you find this repository useful, please cite our paper:

@article{mo2022EZVSL,
  title={Localizing Visual Sounds the Easy Way},
  author={Mo, Shentong and Morgado, Pedro},
  journal={arXiv preprint arXiv:2203.09324},
  year={2022}
}